Deep learning

Deep learning (also called multilayered learning) is a method of machine learning that uses artificial neural networks (ANNs) with numerous intermediate layers, known as hidden layers, between the input layer and the output layer, thereby creating an extensive internal structure. It is a special method of information processing.

The problems solved in the early days of artificial intelligence were intellectually difficult for humans but easy for computers to process. These problems could be described by formal mathematical rules. The real challenge for artificial intelligence, however, was to solve tasks that are easy for humans to perform but whose solution is difficult to formulate using mathematical rules. These are tasks that humans solve intuitively, such as speech or face recognition.

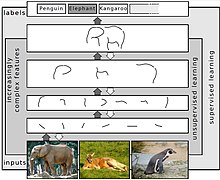

A computer-based solution to this type of task involves the ability of computers to learn from experience and understand the world in terms of a hierarchy of concepts. Each concept is defined by its relationship to simpler concepts. By gaining knowledge from experience, this approach avoids the need for human operators to formally specify all of the knowledge the computer needs to do its job. The hierarchy of concepts allows the computer to learn complicated concepts by assembling them from simpler ones. If you draw a diagram that shows how these concepts are built on top of each other, then the diagram is deep, with many layers. For this reason, this approach is called “deep learning” in artificial intelligence.

It is difficult for a computer to understand the meaning of raw sensory input data, as in handwriting recognition, where a text initially exists only as a collection of pixels. Converting a set of pixels into a sequence of digits and letters is very complicated, because complex patterns have to be extracted from the raw data. Programming this mapping by hand seems insurmountably difficult.

One of the most common techniques in artificial intelligence is machine learning, in which an algorithm adapts itself on the basis of data. Deep learning, a subset of machine learning, uses a series of hierarchical layers, or a hierarchy of concepts, to carry out the machine learning process. The artificial neural networks used here are loosely modeled on the human brain, with the neurons connected to one another like a network. The first layer of the neural network, the visible input layer, processes raw input data, such as the individual pixels of an image. The input data contains variables that are accessible to observation, hence the name "visible layer".

This first layer forwards its output to the next layer. This second layer processes the information from the previous layer and also forwards the result. The next layer receives the information from the second layer and processes it further. These layers are called hidden layers. The features they contain become increasingly abstract. Their values are not given in the original data. Instead, the model must determine which concepts are useful for explaining the relationships in the observed data. This continues across all layers of the artificial neural network. The result is output in the last visible layer. In this way, the desired complex data processing is divided into a series of nested simple mappings, each described by a different layer of the model.
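The layer-by-layer processing described above can be sketched in a few lines of Python. This is only a minimal illustration, not a trained model: the layer sizes, the random weights, the sigmoid activation and the helper names `dense_layer`, `random_layer` and `forward` are all assumptions chosen for the example.

```python
import math
import random


def dense_layer(inputs, weights, biases):
    """Compute one layer: a weighted sum per neuron followed by a sigmoid."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(1.0 / (1.0 + math.exp(-z)))
    return outputs


def random_layer(n_inputs, n_neurons, rng):
    """Create illustrative random weights and zero biases (untrained)."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_inputs)]
               for _ in range(n_neurons)]
    biases = [0.0] * n_neurons
    return weights, biases


def forward(pixels, layers):
    """Pass the input through every layer in turn, keeping each layer's output."""
    activations = [pixels]          # visible input layer
    for weights, biases in layers:  # hidden layers, then the output layer
        activations.append(dense_layer(activations[-1], weights, biases))
    return activations


rng = random.Random(0)
# Hypothetical sizes: 4 input pixels, two hidden layers of 3 neurons, 2 outputs.
sizes = [4, 3, 3, 2]
layers = [random_layer(n_in, n_out, rng) for n_in, n_out in zip(sizes, sizes[1:])]

pixels = [0.0, 0.5, 1.0, 0.25]
activations = forward(pixels, layers)
for depth, values in enumerate(activations):
    print(depth, [round(v, 3) for v in values])
```

Each entry of `activations` corresponds to one layer: index 0 holds the observed input, the middle entries hold hidden-layer values that do not appear in the original data, and the last entry is the output. In a real system the weights would be learned from data rather than drawn at random.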

History, development and use

The Group Method of Data Handling networks (GMDH-ANNs) developed by Oleksiy Ivakhnenko in the 1960s were the first deep learning systems of the feedforward multilayer perceptron type. Further deep learning approaches, especially in the field of machine vision, began with the neocognitron, which was developed by Kunihiko Fukushima in 1980. In 1989, Yann LeCun and colleagues used the backpropagation algorithm to train multi-layer ANNs with the aim of recognizing handwritten postal codes.

The term "deep learning" was first used in the context of machine learning in 1986 by Rina Dechter. She used it to describe a process that records all solutions explored in a search space that did not lead to a desired solution. Analyzing these recorded solutions is meant to make it possible to better guide subsequent attempts and thus to avoid dead ends in the search at an early stage. Nowadays, however, the term is mainly used in connection with artificial neural networks. It first appeared in this context in 2000, in the publication Multi-Valued and Universal Binary Neurons: Theory, Learning and Applications by Igor Aizenberg and colleagues.

Recent successes of deep learning methods, such as the victory of the AlphaGo program over the world's best human Go players, rest on the increased processing speed of the hardware and on the use of deep learning to train the neural network used in AlphaGo. These networks use artificial neurons (perceptrons) to recognize patterns.

Yann LeCun, Yoshua Bengio and Geoffrey Hinton received the Turing Award in 2018 for contributions to neural networks and deep learning.

Complexity and limits of explainability

Deep neural networks can have a complexity of up to a hundred million individual parameters and ten billion arithmetic operations per input. The parameters can be interpreted, and the way the results came about explained, only to a limited extent, and doing so requires special techniques that are grouped under the term Explainable Artificial Intelligence. Another side effect of deep learning is its susceptibility to incorrect results, which can be triggered by subtle manipulations of the input signals that, in images for example, are invisible to humans. This phenomenon is known as adversarial examples.
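The effect of such a manipulation can be illustrated with a deliberately simple stand-in for a deep network. The sketch below assumes a linear classifier with 100 inputs and hypothetical weights; it shifts every input value by at most 0.02 in the direction of the sign of the score's gradient, in the spirit of the fast gradient sign method. The model, the numbers and the function names are assumptions made for illustration only.

```python
def score(weights, bias, x):
    """Linear classifier score; a positive score means class 1."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def sign(value):
    """Return -1, 0 or 1 depending on the sign of value."""
    return (value > 0) - (value < 0)


def adversarial(weights, x, epsilon, towards_positive):
    """Shift every input value by at most epsilon in the direction that
    moves the score towards the other class. For a linear model the
    gradient of the score with respect to the input is the weight vector."""
    direction = 1 if towards_positive else -1
    return [xi + direction * epsilon * sign(w) for w, xi in zip(weights, x)]


# Hypothetical model: 100 inputs with alternating positive and negative weights.
weights = [0.5 if i % 2 == 0 else -0.5 for i in range(100)]
bias = 0.0

# Hypothetical input that the model assigns to class 1.
x = [0.51 if i % 2 == 0 else 0.49 for i in range(100)]
x_adv = adversarial(weights, x, epsilon=0.02, towards_positive=False)

original_score = score(weights, bias, x)
adversarial_score = score(weights, bias, x_adv)
largest_change = max(abs(a - b) for a, b in zip(x, x_adv))

print("original class:", int(original_score > 0))
print("adversarial class:", int(adversarial_score > 0))
print("largest change per input:", round(largest_change, 3))
```

Although no single input value changes by more than 0.02, the many small changes add up across all 100 inputs and flip the predicted class. Deep networks with very high-dimensional inputs, such as images, can be affected in a similar way.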

Program libraries

Besides programming a neural network entirely by hand, which is usually done in teaching examples to convey the internal structure, there are a number of software libraries. They are often open source, usually run on several operating systems, and are written in common programming languages such as C, C++, Java or Python. Some of these libraries support GPUs or TPUs for computing acceleration or provide tutorials on how to use them. Models can be exchanged between some of these tools using ONNX.

- TensorFlow (C++, Python, Swift, JavaScript) from Google

- Keras (Python, from version 1.4.0 also included in the TensorFlow API), a popular framework (2018) alongside TensorFlow

- Caffe from the Berkeley Vision and Learning Center (BVLC)

- PyTorch (Python), developed by the Facebook artificial intelligence research team

- Torch (C, Lua) (community) and the Facebook framework Torchnet, which is based on it

- Microsoft Cognitive Toolkit (C++)

- PaddlePaddle (Python) from the search engine manufacturer Baidu

- OpenNN (C++), implements an artificial neural network

- Theano (Python) from the Université de Montréal

- Deeplearning4j (Java) from Skymind

- MXNet from the Apache Software Foundation

Literature

- François Chollet: Deep Learning with Python and Keras: The practical manual from the developer of the Keras library. mitp, 2018, ISBN 978-3-95845-838-3.

- Ian Goodfellow, Yoshua Bengio, Aaron Courville: Deep Learning: Adaptive Computation and Machine Learning. MIT Press, Cambridge USA 2016, ISBN 978-0-262-03561-3.

- Jürgen Schmidhuber: Deep learning in neural networks: An overview. In: Neural Networks, 61, 2015, p. 85, arXiv:1404.7828 [cs.NE].

Web links

- Luis Serrano: A friendly introduction to Deep Learning and Neural Networks on YouTube, December 26, 2016, accessed November 7, 2018.

- Deep Learning - Introduction. Review article on deep learning

- Topic: Deep Learning. heise online

- Deep learning: how machines learn. Spektrum.de. Translation of the article The learning machines. In: Nature, 505, pp. 146-148, 2014
