Freenet (software)

| Freenet | |
|---|---|
| Basic data | |
| Maintainer | The Freenet Project |
| First released | March 2000 |
| Current version | 0.7.5 build 1485 (January 27, 2020) |
| Operating system | Platform independent |
| Programming language | Java |
| Category | Security software, anonymity, peer-to-peer |
| License | GNU General Public License |
| Available in German | Yes |
| Website | freenetproject.org |

Freenet is peer-to-peer software for building a distributed computer network whose goal is to store data in a distributed way while thwarting censorship and allowing the anonymous exchange of information. This goal is to be achieved through decentralization, redundancy, encryption, and dynamic routing. Freenet is developed as free software under the GPL. In simplified terms, Freenet can be viewed as a roaming data store that all participants can access equally (peer-to-peer). Central name servers, server hard drives, and similar structures are avoided by design. Only the transport functionality of the regular Internet is required. According to the developers, it is still a test version.

History

In 1999, the Irish student Ian Clarke described a "distributed decentralized information storage and retrieval system" in a paper. Shortly after its publication, Clarke and a small number of volunteers began implementing the idea as free software.

In March 2000 the first version was ready for publication. Freenet subsequently received a great deal of coverage, but the press was mainly concerned with the implications for copyright and less with the goal of free communication. Academia also took an interest: according to CiteSeer, Clarke's paper was the most cited computer science paper of 2000.

Freenet is developed in a distributed manner over the Internet. The project founded the non-profit "The Freenet Project Inc." and has employed Matthew Toseland as a full-time programmer since September 2002; he is paid from donations and the proceeds from spin-off products. Others contribute as volunteers.

In January 2005, a complete rewrite was planned for the next version, Freenet 0.7. In mid-April 2005, the Freenet developers began discussing on the open mailing list whether to design Freenet as a so-called darknet, meaning that access is possible only at the "invitation" of existing participants. (See the Darknet section for details.) This element is the most important new feature in 0.7.

The idea behind the planned Freenet 0.7 was then presented by Ian Clarke and the mathematician Oskar Sandberg at two hacker events, on July 29, 2005 at the 13th DEFCON and on December 30, 2005 at the 22nd Chaos Communication Congress (22C3).

At the beginning of April 2006 the first alpha version of Freenet 0.7 was published.

Others

By the end of 2003 a group called Freenet-China had translated the program into Chinese and distributed it in the People's Republic of China on CDs and floppy disks. Chinese computer and Internet filters block the word "Freenet" (as of August 30, 2004). Connections made by Freenet versions prior to 0.7 are blocked. This was possible because, until then, a few predictable bytes were sent at the beginning of connection establishment.

An analysis published in May 2004 states that most of the Freenet users at that time came from the USA (35%), Germany (15%), France (11%), Great Britain (7%) and Japan (7%). This analysis is probably based on the IP address list of the participants, which was provided by the project for versions prior to 0.7, and thus does not take into account separate Freenet networks such as Freenet-China.

Use

Each user provides Freenet with part of their hard drive as storage. Users do not share particular folders or files with others, as in ordinary file sharing; instead they reserve a certain amount of hard disk space (on the order of gigabytes), which Freenet fills on its own with data from the network.

Using Freenet is comparable to using the World Wide Web (WWW). With any browser, you can surf Freenet just as you would the WWW. The FProxy program module works as a local server and can generally be reached at http://localhost:8888/. From this entry page you can request or upload individual Freenet addresses, and it provides links to a small number of Freenet sites that serve as a first point of contact in Freenet.

A freesite is to Freenet roughly what a website is to the WWW. Freesites are documents that are accessible through the Freenet gateway. They can contain links to other freesites or to other data that can be accessed using a Freenet key.

With the help of so-called Activelinks, the distribution of selected freesites can be promoted. If you include a small image from another freesite on your own freesite, the other freesite is automatically spread further whenever your own freesite is loaded together with the image.

Loading content takes a relatively long time because Freenet prioritizes security. It can take several minutes, especially at the beginning, when the node is not yet well integrated into the Freenet network. Only through this integration does the program learn to which other participants requests are best sent.

Field of use

In addition to the simple publication of information, Freenet is suitable for time-delayed communication, which means that both a kind of e-mail system and discussion forums can be built on Freenet.

A very common type of freesite is the blog (weblog), which Freenet users call a "flog" (freelog). A particular incentive for setting one up is the anonymity with which authors can publish their reports on Freenet.

WebOfTrust: Spam-resistant, anonymous communication

With the WebOfTrust plug-in, Freenet offers an anti-spam system that is based on anonymous identities and trust values. Any anonymous identity can give positive or negative trust to any other anonymous identity. The trust values of the known identities are merged in order to be able to assess unknown identities. Only the data of identities with non-negative trust will be downloaded.

New identities can make themselves known via captcha images. A new identity solves captchas published by other identities and receives a trust of 0 from them. As a result, it is visible to those identities but not to any others. If it then writes meaningful posts, it can receive positive trust (> 0), making it visible to all identities that trust the identities trusting it.

Software for Freenet

There is special software for uploading data, which in particular makes it easier to exchange larger files. Internally these are broken down into small pieces (split files) and, if necessary, supplemented with redundant data blocks (error correction).

Technical details

Functionality

All content is stored under so-called keys. The key is derived unambiguously from the hash value of the content and bears no visible relation to the content itself. (For example, a text file containing the German Basic Law could have the key YQL.) The keys are stored in a so-called distributed hash table.

Participants do not store only the content they offer themselves. Instead, all content is distributed across the various computers, the so-called nodes. Routing determines where a file is stored. Over time, each node specializes in certain key values.

Content is stored on each computer in encrypted form and without the knowledge of the respective user. The developers introduced this to give Freenet users the ability to plausibly deny knowledge of the data that happens to be held for the network in the local storage reserved for Freenet. So far, however, no court cases are known, at least in Germany, in which this defense was used, so its effectiveness before German courts has not been established.

When a file is to be downloaded from the Freenet , it is searched for using the routing algorithm. The request is sent to a node whose specialization is as similar as possible to the key sought.

Example: We are looking for the key HGS. We are connected to other Freenet nodes that have the following specializations: ANF, DYL, HFP, HZZ, LMO. We choose HFP as the addressee of our request, as its specialization comes closest to the key we are looking for.
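The choice in this example can be sketched in a few lines of Python. The sketch assumes, purely for illustration, that the three-letter keys are read as base-26 numbers and that "closest" means the smallest absolute numeric difference. The function names are hypothetical, and this is not Freenet's actual distance metric.

```python
def key_to_number(key: str) -> int:
    """Read an uppercase letter key such as 'HGS' as a base-26 number (A=0 ... Z=25)."""
    value = 0
    for letter in key:
        value = value * 26 + (ord(letter) - ord("A"))
    return value


def closest_node(target: str, specializations: list[str]) -> str:
    """Return the specialization whose numeric value is nearest to the target key."""
    target_value = key_to_number(target)
    return min(specializations, key=lambda s: abs(key_to_number(s) - target_value))


neighbours = ["ANF", "DYL", "HFP", "HZZ", "LMO"]
print(closest_node("HGS", neighbours))  # prints HFP
```

Under this assumed metric HFP differs from HGS by 29, whereas the next candidate, HZZ, differs by 501.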

If the addressee does not have the key in its store, it repeats the procedure as if it wanted the key itself: it sends the request on to the node that, in its estimation, is best specialized.

And so it continues. When a node finally has the requested file, the file is transported from where it was found to the original requester. This transport, however, takes place via all nodes involved in the request chain. This design is a central feature of Freenet. It serves to preserve the anonymity of source and recipient: a node that receives a request cannot know whether the requester wants the file for itself or is merely forwarding the request.

When the file is transferred, some computers save a copy in their memory. Popular files reach many computers in the Freenet network. This increases the probability that further requests for this file will be successful more quickly.
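The request chain and the caching on the return path can be illustrated with a small simulation. This is a minimal sketch under several assumptions that are not taken from Freenet itself: keys and specializations are plain integers, every relaying node keeps a copy (the text only says that some do), a hypothetical `hops_left` counter bounds the chain, and already visited nodes are skipped to avoid loops.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    specialization: int                     # key value this node "knows best"
    store: dict = field(default_factory=dict)
    neighbours: list = field(default_factory=list)

    def request(self, key: int, hops_left: int, visited=None):
        """Return the data for `key`, or None if it is not found in time."""
        visited = (visited or set()) | {self.name}
        if key in self.store:               # found locally: answer directly
            return self.store[key]
        if hops_left == 0:
            return None
        # Forward to the unvisited neighbour whose specialization is closest.
        candidates = [n for n in self.neighbours if n.name not in visited]
        if not candidates:
            return None
        best = min(candidates, key=lambda n: abs(n.specialization - key))
        data = best.request(key, hops_left - 1, visited)
        if data is not None:
            self.store[key] = data          # cache a copy on the way back
        return data


a, b, c = Node("a", 10), Node("b", 50), Node("c", 90)
a.neighbours, b.neighbours = [b], [a, c]
c.neighbours = [b]
c.store[88] = b"hello"

print(a.request(88, hops_left=5))   # b'hello'
print(88 in b.store)                # True: b kept a copy while relaying
```

Node `b` only ever sees a request arriving from `a`; nothing in the call tells it whether `a` originated the request or is itself relaying it.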

Uploading a file to Freenet works in a very similar way: Here, too, Freenet looks for the node whose specialization comes closest to the key. This is useful so that the file is where the requests for such keys are sent.

Since the aim of uploading is not to find someone with the data after a few forwardings, a value is set beforehand for how often the data is forwarded.

Problems

In order for Freenet to work, you need the address of at least one other user in addition to the program itself. The project supports the integration of new nodes by offering an up-to-date collection of such addresses ( seednodes ) on its website . However, when this offer disappears or is inaccessible to someone with limited access to the internet, the first connection point becomes a problem. In addition, for reasons of security and network topology, it is more desirable for private seed nodes to be distributed among friends.

Low-speed, asymmetrical, or short-lived nodes can hinder the flow of data. Freenet tries to counteract this with intelligent routing (see routing ).

Freenet cannot guarantee permanent storage of data. Since storage space is finite, there is a trade-off between publishing new content and keeping old content.

Keys

Freenet has two types of keys.

Content-hash key (CHK)

The CHK is the low-level (system-level) data storage key. It is created by hashing the contents of the file to be stored. This gives each file a practically unique, absolute identifier (GUID). Versions up to 0.5/0.6 use SHA-1 for this.

In contrast to URLs , you can now be sure that the CHK reference refers to exactly the file you meant. CHKs also ensure that identical copies that are uploaded to Freenet by different people are automatically merged, because each participant calculates the same key for the file.
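The merging of identical uploads follows directly from hashing the content. The following sketch shows only that property, using SHA-1 from Python's standard library; the function name is hypothetical, and the encryption and key encoding that a real Freenet CHK involves are deliberately left out.

```python
import hashlib


def content_hash_key(content: bytes) -> str:
    """Derive a key from the content alone by hashing it with SHA-1."""
    return hashlib.sha1(content).hexdigest()


alice_upload = b"The same document, byte for byte."
bob_upload = b"The same document, byte for byte."
changed = b"The same document, byte for byte!"

# Identical content yields the identical key, regardless of who uploads it.
print(content_hash_key(alice_upload) == content_hash_key(bob_upload))  # True
# Any change to the content yields a different key.
print(content_hash_key(alice_upload) == content_hash_key(changed))     # False
```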

Signed subspace key (SSK)

The SSK uses an asymmetric cryptosystem to create a personal namespace that everyone can read but only the owner can write to. First a random key pair is generated. To add a file, the owner first chooses a short description, for example politics/germany/scandal. The SSK of the file is then calculated by hashing the public half of the subspace key and the descriptive string independently, concatenating the two results, and hashing the concatenation again. Signing the file with the private half of the key enables verification, because each node that processes the SSK file verifies its signature before accepting it.

To obtain a file from a subspace, you need only the public key of that subspace and the descriptive string, from which the SSK can be reproduced. To add or update a file, you need the private key in order to create a valid signature. SSKs enable trust by guaranteeing that all files in the subspace were created by the same anonymous person. This is what makes Freenet's various practical applications possible (see Field of use).

Traditionally, SSKs are used to indirectly store files by containing pointers that point to CHKs rather than holding the data itself. These "indirect files" combine readability for humans and the authentication of the author with the quick verification of CHKs. They also allow data to be updated while the referential integrity is preserved: in order to update, the owner of the data first uploads a new version of the data, which receives a new CHK, since the contents are different. The owner then updates the SSK so that it points to the new version. The new version will be available under the original SSK, and the old version will remain accessible under the old CHK.
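The update procedure can be traced with a toy key-value store. The sketch below is illustrative only: the dictionary stands in for the network, the `CHK@` and `SSK@` strings are simplified labels, the subspace name is hypothetical, and the signature check that guards writes to an SSK is left out.

```python
import hashlib

store = {}  # toy stand-in for the network: key -> stored value


def insert_chk(content: bytes) -> str:
    """Store content under the hash of its contents and return that key."""
    key = "CHK@" + hashlib.sha1(content).hexdigest()
    store[key] = content
    return key


def fetch_via_ssk(ssk: str) -> bytes:
    """Follow the pointer stored under the SSK to the CHK holding the data."""
    return store[store[ssk]]


ssk = "SSK@example-subspace/news"   # hypothetical, human-readable name

old_chk = insert_chk(b"version 1")
store[ssk] = old_chk                # the SSK holds only a pointer to the CHK

new_chk = insert_chk(b"version 2")  # different content, hence a new CHK
store[ssk] = new_chk                # the owner redirects the pointer

print(fetch_via_ssk(ssk))           # b'version 2'
print(store[old_chk])               # b'version 1' is still reachable
```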

Indirect files can also be used to split large files into many pieces by uploading each part under a different CHK and an indirect file pointing to all of the pieces. In this case, however, CHK is mostly used for the indirect file. Finally, indirect files can also be used to create hierarchical namespaces in which folder files point to other files and folders.

SSK can also be used to implement an alternative domain name system for nodes that frequently change their IP address. Each of these nodes would have their own subspace and could be contacted by retrieving their public key to find the current address. Such address-resolution keys actually existed up to version 0.5 / 0.6, but they have been abolished.

Protection against excessive requests

Requests and uploads carry an HTL (hops to live, modeled on TTL: how many more times may they be forwarded?), which is reduced by 1 at each forwarding. There is an upper limit for the start value so that the network is not burdened by requests with absurdly high values. The current value is 20: if a request does not return a result after that many hops, the content is probably not available, or the routing is not working, in which case higher HTLs would not help either. The same applies to uploading: after 20 hops, the information should be sufficiently disseminated. (It can spread further when the data is requested.)

Routing

In Freenet's routing, the processing node forwards a request to another node whose specialization is, in the forwarder's estimation, as close as possible to the requested key.

Freenet has thus decided against the two main alternatives:

- A central index of all available files - the simplest routing, used for example by Napster - is vulnerable because of the centralization.

- Disseminating the request to all connected nodes - used by Gnutella , for example - wastes resources and is not scalable .

The way in which Freenet decides who to forward it to forms the core of the Freenet algorithm.

Old routing

Freenet's first routing algorithm was relatively simple: if a node forwards a request for a certain key to another node and the latter can fulfill it, the response lists the address of one of the nodes that passed the data back. This may, but need not, be the node that had stored the data locally. The listed node is assumed to be a good address for further requests for similar keys.

An analogy to clarify: Because your friend Heinrich was able to successfully answer a question about France, he should also be a good contact for a question about Belgium.

Despite its simplicity, this approach has proven to be very effective, both in simulations and in practice. One of the expected side effects was that nodes tend to specialize in certain key areas. This can be seen analogously to the fact that people specialize in certain areas. This effect was observed with real Freenet nodes in the network; the following picture represents the keys stored by a node:

The x-axis represents the key space, from key 0 to key 2^160 - 1. The dark stripes show areas where the node has detailed knowledge of where requests for such keys should be routed.

If the node had just been initialized, the keys would be evenly distributed across the key space. This is a good indicator that the routing algorithm is working correctly. Nodes specialize, as in the graphic, through the effect of feedback when a node successfully responds to a request for a particular key - it increases the likelihood that other nodes will forward requests for similar keys to it in the future. Over a longer period of time, this ensures the specialization that is clearly visible in the diagram above.

Next Generation Routing (NGR)

The aim of NGR is to make routing decisions much smarter by collecting extensive information for each node in the routing table, including response time when requesting certain keys, the percentage of requests that successfully found information, and the time it took to establish an initial connection. When a new request is received, this information is used to estimate which node is likely to be able to obtain the data in the least amount of time, and that will be the node to which the request will be forwarded.

DataReply estimate

The most important value is the estimate of how long it will take to get the data when a query is made. The algorithm must meet the following criteria:

- It must be able to make sensible guesses about keys it has not yet seen.

- It has to be progressive: if the performance of a node changes over time, that should be reflected. But it must not be overly sensitive to recent fluctuations that deviate significantly from the average.

- It has to be scale-free : Imagine a naive implementation that divides the key space into a number of sections and has an average for each. Now imagine a node where most of the incoming requests are in a very small section of the key space. Our naive implementation would not be able to represent variations in response time in this small range and would therefore limit the ability of the node to accurately estimate the routing times.

- It must be efficiently programmable.

NGR fulfills these criteria: a number of reference points is maintained (the number is configurable; 10 is a typical value), initially distributed evenly over the key space. When a new routing time is measured for a particular key, the two reference points closest to the new value are moved toward it. The size of this adjustment can be changed to control how "forgetful" the estimator is.

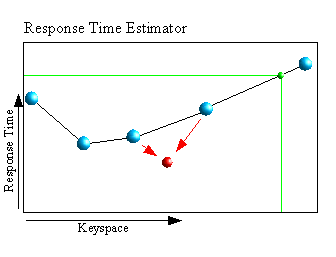

The diagram shows how two reference points (blue) are moved toward the new value (red).

When an estimate is needed for a new key, the green line gives the estimated response time assigned to that key.
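The reference-point scheme can be sketched as a small class. This is one possible reading of the description, not the actual NGR code: the class and parameter names are hypothetical, the key space is treated as a plain interval without wrap-around, each of the two nearest points is moved by a fixed fraction `rate` in both key and time, and estimates are obtained by linear interpolation (the role of the green line).

```python
class ResponseTimeEstimator:
    def __init__(self, key_space: float, points: int = 10,
                 initial_time: float = 1.0, rate: float = 0.25):
        step = key_space / points
        # Reference points start evenly spread over the key space.
        self.points = [[(i + 0.5) * step, initial_time] for i in range(points)]
        self.rate = rate  # larger values make the estimator more "forgetful"

    def report(self, key: float, time: float) -> None:
        """Move the two reference points nearest to `key` toward the sample."""
        nearest = sorted(self.points, key=lambda p: abs(p[0] - key))[:2]
        for point in nearest:
            point[0] += self.rate * (key - point[0])
            point[1] += self.rate * (time - point[1])
        self.points.sort(key=lambda p: p[0])

    def estimate(self, key: float) -> float:
        """Interpolate linearly between the reference points around `key`."""
        pts = self.points
        if key <= pts[0][0]:
            return pts[0][1]
        if key >= pts[-1][0]:
            return pts[-1][1]
        for (k0, t0), (k1, t1) in zip(pts, pts[1:]):
            if k0 <= key <= k1:
                if k1 == k0:
                    return t0
                return t0 + (t1 - t0) * (key - k0) / (k1 - k0)
        return pts[-1][1]


est = ResponseTimeEstimator(key_space=100.0)
before = est.estimate(42.0)
est.report(42.0, 5.0)      # a slow response for key 42
after = est.estimate(42.0)
print(before, after)       # the estimate near key 42 rises above 1.0
```

Because the points also move along the key axis, they gather where samples arrive, which gives finer resolution in heavily used parts of the key space without fixed sections.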

Handling different file sizes

There are two time values that need to be considered when we receive a DataReply:

- The time until the beginning of the answer arrives.

- The time to transfer the data.

To combine these two aspects into one value, both numbers are used to estimate the total time between sending the request and completing the transfer . For the transfer time, it is assumed that the file has the average length of all data in local storage.

Now we have a single value that can be directly compared with other time measurements on requests, even if the files are of different sizes.
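As a small worked example, the combination might look as follows. The sketch assumes that the measured transfer is expressed as a rate in bytes per second so that it can be rescaled to the average file size; the function and parameter names are hypothetical.

```python
def estimated_total_time(time_to_first_byte: float,
                         transfer_rate: float,
                         average_file_size: float) -> float:
    """Seconds from sending the request until the transfer would complete.

    transfer_rate is in bytes per second; average_file_size is the mean size
    (in bytes) of the data in the local store, used for every file.
    """
    return time_to_first_byte + average_file_size / transfer_rate


# A quick-to-answer but slow link versus a slow-to-answer but fast link.
print(estimated_total_time(0.5, 10_000, 200_000))   # 20.5
print(estimated_total_time(3.0, 100_000, 200_000))  # 5.0
```

With a common reference size, the second node comes out ahead even though its answer starts later.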

What to do if the data is not found

When a request has passed through the maximum number of nodes according to HTL (see Protection against excessive requests ), the message “File not found” ( DataNotFound , DNF for short ) is sent back to the requestor.

A DNF can have two causes:

- The data is available in Freenet, but could not be found. This DNF would reveal a deficiency in the routing of the nodes involved. We call this DNF illegitimate in the following .

- The data doesn't even exist. This DNF would not show any shortcomings. We'll call it legitimate below .

There is no practical way to find out whether a DNF is legitimate or illegitimate. Let us assume for a moment that we can identify illegitimate DNFs. Then the cost would be the time to receive the illegitimate DNF plus send a new request elsewhere.

We can estimate the former by looking at how long previous DNFs from the particular node took, in proportion to the HTL of the request: a request with HTL = 10 passes through twice as many nodes as one with HTL = 5 and therefore takes about twice as long to return a DNF. We can estimate the second value by taking the average time it takes to successfully receive data.

Now let us imagine a Freenet node with perfect routing, which would only ever return legitimate DNFs: if the data were in the network, it would find it with its perfect routing. The proportion of DNFs among this node's responses would equal the proportion of legitimate DNFs. Such a node cannot exist in practice, but we can approximate it by looking for the node with the lowest proportion of DNFs in our routing table.

Now we can calculate the time cost of DNFs, and we can also approximate what proportion of DNFs is legitimate - and which is therefore not considered a loss of time. With this we can add estimated routing time costs for each node to account for DNFs.
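One way to turn this reasoning into a number is sketched below. It is an interpretation, not the NGR implementation: it assumes that the DNF rate of the best known node approximates the legitimate share, that the remainder of a node's DNF rate is illegitimate, and that the wait for a DNF grows linearly with the HTL. All names are hypothetical.

```python
def dnf_time_cost(node_dnf_rate: float, best_dnf_rate: float,
                  dnf_time_per_hop: float, htl: int,
                  average_success_time: float) -> float:
    """Expected extra time per request caused by illegitimate DNFs."""
    # DNFs that even the best known node returns are treated as legitimate.
    illegitimate_rate = max(0.0, node_dnf_rate - best_dnf_rate)
    # Waiting for the DNF scales with the HTL; then the request is retried.
    cost_per_dnf = dnf_time_per_hop * htl + average_success_time
    return illegitimate_rate * cost_per_dnf


print(dnf_time_cost(0.5, 0.25, 0.5, 10, 4.0))  # 0.25 * (5.0 + 4.0) = 2.25
```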

Dealing with failed connections

We cannot interact with heavily overloaded nodes. We can account for this possibility by storing the average number of failed connections for each node and how long each one lasted. These values are added to the estimated routing time for each node.

Knowledge gained

One of the problems in Freenet that NGR addresses is the time it takes for a Freenet node to accumulate enough knowledge of the network to route efficiently. This is particularly detrimental to Freenet's ease of use, because first impressions are critical to new users, and the first impression is usually the worst one, since it is formed before the node can route effectively.

The solution is to establish some qualified trust between Freenet nodes and allow them to share the information they have gathered about each other, albeit with due suspicion.

There are two ways in which a Freenet node learns about new nodes.

- When the program starts, it loads a file containing the routing experience of another, more experienced node. With NGR, this information is enriched with statistical data, so that even a node started for the first time already has the knowledge of an experienced node. The node then adjusts this knowledge according to its own experience as it operates.

- The other option is the "DataSource" field, which is sent back with the reply to a successful request. This field contains one of the nodes in the chain and statistical information about it. Because this information could be manipulated, each node that forwards it adjusts it if the node itself knows about the address mentioned.

Benefits of NGR

- Adapts to the network topology. The old routing ignored the underlying Internet topology: nodes with fast and slow connections were treated equally. In contrast, NGR bases its decisions on actual routing times.

- Performance can be evaluated locally. With the old routing, the only way to evaluate its performance was to test it. With NGR, the difference between estimated and actual routing time gives a simple measure of how effective the routing is. If a change to the algorithm results in better estimates, we know that it is better, and vice versa. This greatly accelerates further development.

- Approaches the optimum. In an environment where only one's own node can be trusted, it is reasonable to base all decisions on one's own observations. If the previous observations are used optimally, the routing algorithm is optimal. Of course, there is still room for improvement in how the algorithm estimates routing times.

Security

Darknet

When using Freenet (as of version 0.5/0.6), a node can quickly connect to a large number of other users. An attacker can therefore easily collect the IP addresses of large parts of the Freenet network in a short time. This is called harvesting.

If Freenet is illegal in a country such as China and permits harvesting, the authorities can simply block it: they start a node and collect addresses. This yields a list of nodes inside the country and a list of nodes outside it. They block all nodes outside the country and cut the connection of all nodes inside the country so that they can no longer reach the Internet.

In mid-April 2005, the Freenet developers started talking on the open mailing list about designing Freenet as a so-called Darknet , which means that access can only take place at the “invitation” of existing participants.

With the plan to create a global darknet, however, the developers are breaking new ground. They believe in the small world property of such a darknet - that means that every participant can reach everyone else via a short chain of hops . Every person (social actor) in the world is connected to everyone else via a surprisingly short chain of acquaintance relationships. Because there has to be a certain amount of trust to be invited to Freenet, they hope that the resulting network structure will reflect the network of relationships between people.

Discussions of this approach led to the decision that not only a darknet should be developed, but that there would also continue to be an open network, as the whole of Freenet was before 0.7. There are two ways in which the two could relate to each other:

- There are connections between the open network and the darknet. A so-called hybrid network would be created. Contents that are published in one network can also be called up from the other network. This approach becomes a problem when many small, unconnected darknets depend on the connection through the open network. This corresponds to the current implementation of version 0.7.0.

- The two networks are unconnected. Content that is published in one network is initially not available in the other. However, it would be possible to transfer data without the involvement of the author, even with SSK keys. This option was favored by the main developer in the past (as of May 2005), as it enables the functioning of a stand-alone global darknet to be checked despite the alternative open network.

Possibility of democratic censorship

On July 11, 2005, full-time developer Matthew Toseland presented a proposal for how democratic censorship could be implemented in the darknet. For this, data about uploads would have to be stored for a longer period of time. When a node "complains" about content, the complaint is spread and other nodes can join it. If a sufficient majority of neighboring nodes support a complaint, the stored data is used to trace the upload back to the nodes that do not support the complaint.

Once these have been found, the possible sanctions are (in ascending order of the required majority):

- Reprimand

- Deactivation of premix routing for the node

- Separation of the connection to the node

- Disclosure of the identity of the node

While the main criticism of this proposal was that Freenet was thereby distancing itself from absolute freedom from censorship and thus no longer acceptable to libertarian people, Toseland countered that Freenet in its previous state was not acceptable to all those who did not have libertarian views and would be deterred, for example, by child pornography.

Another point of criticism was the possibility of groupthink behavior in the complaints.

Two days after the presentation, Toseland moved away from his proposal because the extensive data collection could endanger the Darknet if individual nodes were identified and evaluated by an attacker.

Future

HTL

HTL (see Protection against excessive requests) is being redesigned for security reasons. Abolishing HTL altogether was even discussed, since an attacker can extract information from the number. Under that concept, each node would terminate a request with a certain probability. However, some requests would then cover extremely short distances and terminate after a single hop, for example. In addition, the nodes "learn" poorly from each other when requests are this unpredictable. A compromise was found:

- The highest HTL is 11.

- Requests leave a node with HTL = 11.

- There is a 10% chance that HTL is decremented from 11 to 10. This is decided once per connection in order to make "correlation attacks" more difficult.

- HTL is always decremented from 10 to 9, from 9 to 8, and so on down to 1. This ensures that requests always pass through at least ten nodes, so the estimates are not distorted too much. (The nodes can "learn" better about each other.)

- There is a 20% chance that a request with HTL = 1 is terminated. This is meant to prevent certain attacks, for example uploading different content to different nodes or probing a node's store.
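The compromise rules above can be expressed as a single step function. The sketch is one reading of the list, with hypothetical names: the 10% decision at the maximum HTL is passed in as a flag because it is drawn once per connection, and a request at HTL = 1 that is not terminated is assumed to continue with HTL = 1.

```python
import random

MAX_HTL = 11


def next_htl(htl: int, decrement_at_max: bool, rng: random.Random):
    """Return the HTL to forward with, or None if the request ends here.

    decrement_at_max is drawn once per connection (true with 10% probability),
    not once per request.
    """
    if htl >= MAX_HTL:
        return MAX_HTL - 1 if decrement_at_max else MAX_HTL
    if htl > 1:
        return htl - 1                      # always decremented from 10 down to 1
    # At HTL = 1 the request ends with a 20% probability on each hop.
    return None if rng.random() < 0.2 else 1


rng = random.Random(0)
connection_decrements = rng.random() < 0.1   # decided once for this connection
print(next_htl(11, connection_decrements, rng))
print(next_htl(10, connection_decrements, rng))  # 9
```

An observer who sees HTL = 11 therefore cannot tell whether the sender originated the request, and one who sees HTL = 1 cannot tell how many hops remain.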

Protocol

As of version 0.7, Freenet uses UDP. A TCP transport could be implemented later, but UDP is the preferred transport. Older versions use only TCP.

Keys

All content is to be stored in keys of either 32 KB or 1 KB. As before, smaller files are padded with random-looking but deterministically derived data; larger files are split up.

Pre-mix routing

The concept of pre-mix routing serves security only. Before a request is actually processed by other nodes, it is first encrypted and tunneled through a few nodes, so that the first one knows us but not the request, and the last one knows the request but not us. The nodes are selected at random within reasonable limits. The concept of onion routing illustrates this: the request is encrypted several times (like an onion), and each node along the pre-mix route "peels off" one layer of encryption, so that only the last node sees the request, but does not know from whom it comes.
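The layering idea can be demonstrated with a deliberately simple stand-in cipher. Everything here is an assumption for illustration: the XOR keystream built from SHA-256 is a toy and not secure, the per-node keys are hypothetical, and key exchange and routing information inside each layer are omitted. Because XOR layers commute, this toy also does not enforce the peeling order the way a real layered cipher would.

```python
import hashlib


def keystream_xor(key: bytes, data: bytes) -> bytes:
    """Toy cipher: XOR data with a SHA-256-derived keystream. Not secure."""
    stream = b""
    counter = 0
    while len(stream) < len(data):
        stream += hashlib.sha256(key + counter.to_bytes(4, "big")).digest()
        counter += 1
    return bytes(a ^ b for a, b in zip(data, stream))


def wrap(request: bytes, route_keys: list[bytes]) -> bytes:
    """Encrypt for the last node first, so the first node peels the outer layer."""
    onion = request
    for key in reversed(route_keys):
        onion = keystream_xor(key, onion)
    return onion


route_keys = [b"key-node-1", b"key-node-2", b"key-node-3"]  # hypothetical keys
onion = wrap(b"GET some-key", route_keys)

for key in route_keys:            # each node removes exactly one layer
    onion = keystream_xor(key, onion)
print(onion)                      # b'GET some-key'
```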

I2P

In January 2005 it was discussed to use the related project I2P as a transport layer. However, this consideration was rejected.

Passive and inverse passive requests

Passive requests are requests that remain in the network until the file being searched for can be reached. Inverse passive requests are supposed to provide content more or less permanently using a mechanism similar to passive requests.

The security aspects are not yet fully understood. They will be clarified before implementation. Passive and inverse passive requests will probably only come after version 1.0.

Steganography

Steganography will only come after 1.0. The Transport Plugin Framework from Google Summer of Code 2012 was a first approach but did not reach production maturity.

Related projects

- GNUnet - another program with similar objectives (mainly file sharing)

- I2P - related project, but with a different objective (implementation of a complete system similar to the Internet, including all known areas of application such as e-mail, IRC or BitTorrent )

- RetroShare - another program with a similar objective, but more intended for file sharing, messengers and newsgroups.

- Tor - related network based on onion routing

Awards

- 2014: SUMA Award

Web links

- Freenet Project - the official project website

- A Distributed Anonymous Information Storage and Retrieval System (PDF, English; 246 kB)

- More beautiful swapping - a detailed article among other things about Freenet in the online magazine Telepolis

- Freenet - An anonymous network against censorship and surveillance, article in the Computerwoche

- Anonymous, secure file sharing? - detailed article about the security of one-click-hosts and file-sharing networks, especially Freenet.
