Pivot procedure

Pivot methods (also called basis exchange methods) are algorithms of mathematical optimization, in particular of linear optimization. For a given system of linear equations in nonnegative variables (essentially the same as a system of linear inequalities), they search for the best of many alternative solutions (a so-called optimal solution), converting the system of equations step by step without changing its solution set. Important pivot methods are the simplex methods and the criss-cross methods.

Pivot methods play a role in the treatment of linear inequalities that is analogous to, and as important as, that of Gaussian elimination in the solution of systems of linear equations in unrestricted variables. The main area of application of pivot methods is linear optimization: they are among the most widely used solution methods in operations research, economics and freight transport, and they are increasingly used in many other areas such as civil engineering (structural optimization), statistics (regression analysis) and game theory. Problems with tens of thousands of variables and inequalities are commonplace.

Pivot approach

Problem

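A pivot method always starts from a special kind of system of linear equations in which all variables, except perhaps one, are required to take nonnegative values. Every system of linear inequalities or equations, and every linear optimization problem, can be brought into the following book form (called a dictionary in English):

$$
\begin{matrix}
z & = & f & + & d_1 \, x_1 & + & \cdots & + & d_n \, x_n \\
x_{n+1} & = & b_{n+1} & + & G_{n+1,1} \, x_1 & + & \cdots & + & G_{n+1,n} \, x_n \\
\vdots & & \vdots & & \vdots & & & & \vdots \\
x_{n+m} & = & b_{n+m} & + & G_{n+m,1} \, x_1 & + & \cdots & + & G_{n+m,n} \, x_n
\end{matrix}
$$

Here $f$, $d_j$, $b_i$ and $G_{i,j}$ are real (in practice mostly rational) numbers. The notation indicates that a solution in the unknowns $x_1, \dots, x_{n+m} \ge 0$ is sought that satisfies the equations and inequalities and makes the so-called target variable $z$ as large as possible.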

- Remark:

- When a problem is transformed into the above form, its inequalities do not disappear: at least as many of them remain, and they now appear as nonnegativity conditions on variables. An ordinary linear inequality such as $a_1 x_1 + \cdots + a_n x_n \le b$

- is transformed into $x_{n+1} = b - a_1 x_1 - \cdots - a_n x_n$

- with $x_{n+1} \ge 0$.
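As a rough illustration of this transformation, the following Python sketch builds the book form of a problem "maximize $c \cdot x$ subject to $A x \le b$, $x \ge 0$" by introducing one slack variable per inequality. The function name `to_book_form`, the use of key `0` for the row of the target variable, and the layout `(constant, coefficients)` are assumptions made for this example, not notation from the article; the data reproduce the coefficients of the article's small example system.

```python
def to_book_form(A, b, c):
    """Turn  max c.x  s.t.  A x <= b, x >= 0  into a dictionary.

    Returns {row index: (constant, {column index: coefficient})}.
    Row 0 is the target variable z; row n+k is the slack of inequality k.
    """
    n = len(c)
    system = {0: (0, {j + 1: c[j] for j in range(n)})}
    for k, (row, rhs) in enumerate(zip(A, b), start=1):
        # a.x <= rhs   becomes   x_{n+k} = rhs - a.x   with x_{n+k} >= 0
        system[n + k] = (rhs, {j + 1: -row[j] for j in range(n)})
    return system


# Three inequalities in two unknowns (illustrative data).
A = [[1, 1], [-2, 4], [-3, -1]]
b = [3, 8, -1]
c = [-3, 1]
book = to_book_form(A, b, c)
for i, (const, coeffs) in book.items():
    print(i, const, coeffs)
```

Each inequality contributes exactly one new nonnegative variable, which is why the number of sign conditions never decreases.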

With the help of the index sets $B = \{n+1, \dots, n+m\}$ and $D = \{1, \dots, n\}$, this task can also be expressed in compact form as follows:

$$
\begin{matrix}
& & z & = & f & + & \sum_{j \in D} d_j \, x_j \\
\forall \, i \in B & & x_i & = & b_i & + & \sum_{j \in D} G_{i,j} \, x_j
\end{matrix}
$$

In each step of a pivot method, as above, a subset of the variables is singled out as independent, while the remaining variables, called basic variables, are expressed as affine-linear functions of the independent variables. In successive steps, one variable changes from independent to basic and a second one changes in the opposite direction; such pairs of variables are called pivots.

Optimum conditions

If the following two optimum conditions are met in the system of linear equations set up above,

- $b_i \ge 0$ for all $i \in B$ (admissibility) and

- $d_j \le 0$ for all $j \in D$ (target restriction),

then a solution to the above problem is obtained by setting the independent variables to the values $x_j = 0$. On the one hand, the values $x_i = b_i$ of the basic variables are then nonnegative, as required. On the other hand, every other possible solution can only contain independent variables with nonnegative values, so that the inequality $z \le f$ applies to each of these solutions.

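In the following example system,

$$
\begin{matrix}
z & = & 0 & -\,3x_1 & +\,\mathbf{x_2} \\
x_3 & = & 3 & -\,x_1 & -\,x_2 \\
x_4 & = & 8 & +\,2x_1 & -\,4x_2 \\
x_5 & = & -\mathbf{1} & +\,3x_1 & +\,x_2
\end{matrix}
$$

the optimum conditions are violated in two places, since $b_5 < 0$ and $d_2 > 0$. First, the trial solution would contain the negative value $x_5 = -1$, and second, its target value $z = 0$ could possibly be increased in solutions with $x_2 > 0$.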

Exchange of the basic variables

If the optimum conditions are not met, which is usually the case, the above system of linear equations can also be expressed differently by selecting another, equally large subset of the unknowns instead of $x_{n+1}, \dots, x_{n+m}$ and solving for them. This is a change of unknowns. Based on a partition $\pi$ of the variables

into new independent variables $x_j$ with $j \in D(\pi)$ and new basic variables $x_i$ with $i \in B(\pi)$, the system of equations is converted to

$$
\begin{matrix}
& & z & = & f^{\pi} & + & \sum_{j \in D(\pi)} d_j^{\pi} \, x_j \\
\forall \, i \in B(\pi) & & x_i & = & b_i^{\pi} & + & \sum_{j \in D(\pi)} G_{i,j}^{\pi} \, x_j
\end{matrix}
$$

Note that the entries $G_{i,j}^{\pi}$ are only defined for index pairs $(i, j)$ with $i \in B(\pi)$ and $j \in D(\pi)$. The entries of the converted system can now be checked again for the optimum conditions,

- $b_i^{\pi} \ge 0$ for all $i \in B(\pi)$ (admissibility) and

- $d_j^{\pi} \le 0$ for all $j \in D(\pi)$ (target restriction),

which in turn may lead to a solution of the problem.

A standard result of linear optimization says that for every solvable problem there is a set of basic variables that lead to a solution. If the optimum conditions are met, the basic variables form a so-called optimum basis of the system.

Pivots and pivot elements

Each non-vanishing entry $G_{r,s} \ne 0$ of the above system of equations, the pivot system, is called a pivot element and allows the independent variable $x_s$ to be solved for instead of the basic variable $x_r$ in order to continue the search for a solution. This is how a general pivot method proceeds, except that not just any pivot elements are selected, but only allowed (admissible) pivots $(r, s)$, which must satisfy the following:

- Either $b_r < 0$ and $G_{r,s} > 0$ hold simultaneously (admissibility pivot),

- or $d_s > 0$ and $G_{r,s} < 0$ hold simultaneously (target progress pivot).

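In the example system above,

$$
\begin{matrix}
z & = & 0 & -\,3x_1 & +\,\mathbf{x_2} \\
x_3 & = & 3 & -\,x_1 & -\,\underline{\mathbf{x_2}} \\
x_4 & = & 8 & +\,2x_1 & -\,\underline{\mathbf{4x_2}} \\
x_5 & = & -\mathbf{1} & +\,\underline{\mathbf{3x_1}} & +\,\underline{\mathbf{x_2}}
\end{matrix}
$$

the pivots $(5, 1)$ and $(5, 2)$ are allowed because of the violation $b_5 < 0$. Because of the violation $d_2 > 0$, the pivots $(3, 2)$ and $(4, 2)$ are allowed as well.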
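The two conditions for allowed pivots translate directly into a small filter. The following Python sketch is only an illustration under assumed names (`allowed_pivots`, plain dictionaries for $b$, $d$ and $G$); it lists the allowed pivots of the example system together with the reason why each is allowed.

```python
def allowed_pivots(b, d, G):
    """List the allowed pivots (r, s) of a dictionary.

    b: {i: b_i} for basic indices, d: {j: d_j} for independent indices,
    G: {(i, j): G_ij}.
    """
    pivots = []
    for (r, s), g in sorted(G.items()):
        if b[r] < 0 and g > 0:
            pivots.append((r, s, "admissibility"))
        elif d[s] > 0 and g < 0:
            pivots.append((r, s, "target progress"))
    return pivots


# Entries of the example system: z = -3 x1 + x2, x3 = 3 - x1 - x2, ...
b = {3: 3, 4: 8, 5: -1}
d = {1: -3, 2: 1}
G = {(3, 1): -1, (3, 2): -1, (4, 1): 2, (4, 2): -4, (5, 1): 3, (5, 2): 1}
pivots = allowed_pivots(b, d, G)
print(pivots)
```

For this system the filter reports two target progress pivots in column 2 and two admissibility pivots in row 5.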

The restriction to allowed pivots prevents the same pivot from being selected twice in a row. The rules by which one of the allowed pivot elements is selected in each step depend on the particular method; a minimum requirement is, of course, that the method terminates after a finite number of steps, which is not the case with an unsuitable selection of allowed pivots. Fukuda & Terlaky proved in 1999 that for every solvable problem and every starting basis there is a short sequence of allowed pivots that leads to an optimal basis. Unfortunately, their proof does not provide a procedure for finding these pivots in each optimization step.

As can be seen from the definition, optimal bases have no allowed pivots; in such a case, the method cannot be continued. On the other hand, using the arguments of the section above, it is easy to show that a non-optimal basis without allowed pivots always belongs to a problem that has no solution: either because the system of equations and inequalities has no solution at all (infeasible problem), or because solutions with arbitrarily large $z$ can be found (unbounded problem).

Examples

A direct implementation

To avoid rounding errors, we work with fractions in the following and choose a common denominator for all entries. Finding a common denominator for the whole system in each step does not require any additional examination of the entries. If the starting system is integral (which can usually be achieved by scaling), the following rule applies:

- The numerator of the chosen pivot element is a common denominator for the following system.

If the entries of the following system are multiplied by this common denominator, integer values are obtained. When the following system is set up, the common denominator of the preceding system becomes obsolete, which is why all entries of the following system can be divided by this obsolete denominator without further checking.

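A table with the entries of a pivot system is often called a tableau. The following scheme shows how the entries of the pivot systems change from one step to the next. Before the pivot step:

$$
\begin{matrix}
\delta \, x_i & = & (\alpha) \, x_j & + & (\sigma) \, x_s \\
\delta \, x_r & = & (\zeta) \, x_j & + & (p) \, x_s
\end{matrix}
$$

After the pivot step:

$$
\begin{matrix}
p \, x_i & = & \left(\tfrac{\alpha p \, - \, \zeta \sigma}{\delta}\right) x_j & + & (\sigma) \, x_r \\
p \, x_s & = & (-\zeta) \, x_j & + & (\delta) \, x_r
\end{matrix}
$$

The symbol $\delta$ stands for the common denominator of the equation system, $p$ for the numerator of the pivot element, $\zeta$ for another entry in the pivot row, $\sigma$ for another entry in the pivot column, and $\alpha$ for any entry outside the pivot row and pivot column. Entries in the target row and in the column of basic values are converted according to the same rules.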
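The exchange scheme can be tried out with plain integer arithmetic. The following Python sketch is one possible reading of the rules, not a reference implementation: the function name `integer_pivot`, the nested-dictionary layout and the use of column key `0` for the constants are assumptions. It checks that the division by the old denominator is exact and keeps the new denominator positive; the data are the integral starting system of the article's first worked example.

```python
def integer_pivot(rows, delta, r, s):
    """One pivot step on integer numerators with common denominator delta.

    rows: {i: {col: numerator}}, where column 0 holds the constants b_i.
    Returns (new_rows, new_delta); the new denominator is the pivot numerator.
    """
    p = rows[r][s]
    if p == 0:
        raise ValueError("pivot element must not vanish")
    # Pivot row:  p * x_s = -zeta * x_j + delta * x_r
    new_rows = {s: {j: -zeta for j, zeta in rows[r].items() if j != s}}
    new_rows[s][r] = delta
    for i, row in rows.items():
        if i == r:
            continue
        sigma = row[s]
        new_row = {}
        for j, alpha in row.items():
            if j == s:
                continue
            # (alpha * p - zeta * sigma) / delta, with an exactness check
            q, rem = divmod(alpha * p - rows[r][j] * sigma, delta)
            assert rem == 0, "division by the old denominator must be exact"
            new_row[j] = q
        new_row[r] = sigma
        new_rows[i] = new_row
    if p < 0:  # keep the common denominator positive
        new_rows = {i: {j: -v for j, v in row.items()}
                    for i, row in new_rows.items()}
        p = -p
    return new_rows, p


# x3 = -2 - 7 x1 + 2 x2,  x4 = -4 - 5 x1 + 2 x2,  x5 = 9 + 2 x1 - 3 x2
rows = {3: {0: -2, 1: -7, 2: 2}, 4: {0: -4, 1: -5, 2: 2}, 5: {0: 9, 1: 2, 2: -3}}
step1, delta1 = integer_pivot(rows, 1, 3, 2)      # exchange x3 and x2
step2, delta2 = integer_pivot(step1, delta1, 4, 1)  # exchange x4 and x1
step3, delta3 = integer_pivot(step2, delta2, 5, 3)  # exchange x5 and x3
print(delta3, step3)
```

All intermediate values stay integers, so no rounding can occur; only the final division by the common denominator produces fractions.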

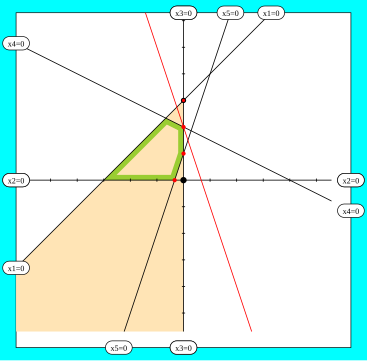

The pictures for the steps in the following examples all show the same system of equations in different orthogonal coordinates, where:

- The area outlined in green is the feasible region, in which all variables have nonnegative values.

- The coordinate axes correspond to the equations of the independent variables; the other straight lines describe basic variables.

- Intersections that belong to allowed pivots are marked in red; the intersection with a black border shows the selected pivot.

- The yellow area becomes the nonnegative quadrant in the next step.

A sure-fire pivot selection rule

For now we choose an example without a target variable, that is, with $z = 0$. In such a case, none of the variables is maximized; we only look for arbitrary (nonnegative) values of the unknowns that satisfy a given system of equations. In each step we choose the allowed pivot $(r, s)$ according to the following rule:

- Choose $r = \min\{i \in B : b_i < 0\}$,

- then choose $s = \min\{j \in D : G_{r,j} > 0\}$.

This (not particularly efficient) selection rule coincides with the smallest-index pivot selection given below; it can be proven that this selection leads to an optimal basis for every solvable problem.

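We are now looking for values of the unknowns $x_1, \dots, x_5 \ge 0$ that satisfy the system of equations

$$
\begin{matrix}
x_3 & = & (\,-\mathbf{2} - 7x_1 + \underline{\mathbf{2x_2}}\,)\,/\,1 \\
x_4 & = & (\,-4 - 5x_1 + 2x_2\,)\,/\,1 \\
x_5 & = & (\,9 + 2x_1 - 3x_2\,)\,/\,1
\end{matrix}
$$

The allowed pivots of this system are $(3, 2)$ and $(4, 2)$; by the selection rule above, we solve for the independent variable $x_2$ instead of the basic variable $x_3$.

We now get the following converted system of equations:

$$
\begin{matrix}
x_2 & = & (\,2 + 7x_1 + x_3\,)\,/\,2 \\
x_4 & = & (\,-\mathbf{4} + \underline{\mathbf{4x_1}} + 2x_3\,)\,/\,2 \\
x_5 & = & (\,12 - 17x_1 - 3x_3\,)\,/\,2
\end{matrix}
$$

In the new system the allowed pivots are $(4, 1)$ and $(4, 3)$; this time we solve for $x_1$ instead of $x_4$.

We now get the following system:

$$
\begin{matrix}
x_2 & = & (\,18 + 7x_4 - 5x_3\,)\,/\,4 \\
x_1 & = & (\,4 + 2x_4 - 2x_3\,)\,/\,4 \\
x_5 & = & (\,-\mathbf{10} - 17x_4 + \underline{\mathbf{11x_3}}\,)\,/\,4
\end{matrix}
$$

The only allowed pivot here is $(5, 3)$; therefore we can only solve for $x_3$ instead of $x_5$.

Now we get

$$
\begin{matrix}
x_2 & = & (\,37 - 2x_4 - 5x_5\,)\,/\,11 \\
x_1 & = & (\,6 - 3x_4 - 2x_5\,)\,/\,11 \\
x_3 & = & (\,10 + 17x_4 + 4x_5\,)\,/\,11
\end{matrix}
$$

This system fulfills the optimality conditions and accordingly has no allowed pivots. By setting all independent variables to zero, we get the following solution: $x_1 = 6/11$, $x_2 = 37/11$, $x_3 = 10/11$, $x_4 = x_5 = 0$.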
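The whole calculation can be replayed mechanically. The following Python sketch uses exact fractions instead of a common denominator; the names `pivot` and `smallest_index_feasibility` and the layout `{i: (b_i, {j: G_ij})}` are assumptions made for this illustration. It applies the smallest-index rule until no basic value is negative.

```python
from fractions import Fraction


def pivot(system, r, s):
    """Solve row r for x_s and substitute the result into all other rows.

    system maps each basic index i to (b_i, {j: G_ij}).
    """
    b_r, g_r = system[r]
    p = Fraction(g_r[s])
    if p == 0:
        raise ValueError("pivot element must not vanish")
    # x_s = -b_r/p + (1/p) x_r - sum over j != s of (G_rj/p) x_j
    new_b = -b_r / p
    new_g = {j: -g / p for j, g in g_r.items() if j != s}
    new_g[r] = 1 / p
    result = {s: (new_b, new_g)}
    for i, (b_i, g_i) in system.items():
        if i != r:
            sigma = g_i[s]
            row = {j: g_i.get(j, 0) + sigma * new_g[j] for j in new_g}
            result[i] = (b_i + sigma * new_b, row)
    return result


def smallest_index_feasibility(system):
    """Repeat smallest-index admissibility pivots until all b_i >= 0."""
    pivots = []
    while True:
        infeasible = [i for i, (b, _) in system.items() if b < 0]
        if not infeasible:
            return system, pivots
        r = min(infeasible)
        columns = [j for j, g in system[r][1].items() if g > 0]
        if not columns:
            # x_r = b_r + (nonpositive terms) < 0 for every x >= 0
            raise ValueError("row %d shows that no solution exists" % r)
        s = min(columns)
        pivots.append((r, s))
        system = pivot(system, r, s)


start = {3: (-2, {1: -7, 2: 2}), 4: (-4, {1: -5, 2: 2}), 5: (9, {1: 2, 2: -3})}
final, pivots = smallest_index_feasibility(start)
solution = {i: b for i, (b, _) in final.items()}
print(pivots, solution)
```

The pivot sequence and the basic values agree with the hand calculation above; variables that do not appear in `solution` are independent and equal to zero.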

Cycling pivot selection rules

The following is an example of an unsuitable pivot selection: with it, a pivot method can get caught in an infinite cycle (an endless loop). As in the following rule, one might succumb to the temptation to select the pivot row only from the "most violated" constraints, understanding "most violated" as having the most negative constant:

- Choose $r \in B$ with $b_r = \min\{b_i : i \in B\} < 0$,

- then choose $s = \min\{j \in D : G_{r,j} > 0\}$.

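To show that something like this can go wrong, let's start with the system:

$$
\begin{matrix}
x_3 & = & (\,-\mathbf{2} + \underline{\mathbf{x_1}} + x_2\,)\,/\,2 \\
x_4 & = & (\,6 - 7x_1 - 3x_2\,)\,/\,2 \\
x_5 & = & (\,0 - 3x_1 - x_2\,)\,/\,2 \\
x_6 & = & (\,4 + 7x_1 + x_2\,)\,/\,2
\end{matrix}
$$

We choose $r = 3$ here and solve for $x_1$ instead of $x_3$. This gives us the system:

$$
\begin{matrix}
x_1 & = & (\,2 + 2x_3 - x_2\,)\,/\,1 \\
x_4 & = & (\,-\mathbf{4} - 7x_3 + \underline{\mathbf{2x_2}}\,)\,/\,1 \\
x_5 & = & (\,-3 - 3x_3 + x_2\,)\,/\,1 \\
x_6 & = & (\,9 + 7x_3 - 3x_2\,)\,/\,1
\end{matrix}
$$

We choose the basic variable $x_4$, solve for $x_2$ in its place, and get:

$$
\begin{matrix}
x_1 & = & (\,0 - 3x_3 - x_4\,)\,/\,2 \\
x_2 & = & (\,4 + 7x_3 + x_4\,)\,/\,2 \\
x_5 & = & (\,-\mathbf{2} + \underline{\mathbf{x_3}} + x_4\,)\,/\,2 \\
x_6 & = & (\,6 - 7x_3 - 3x_4\,)\,/\,2
\end{matrix}
$$

The entries of this system are the same as in the starting system, which is why the entries of the following systems repeat every two steps as long as the pivot sequence is analogous. After selecting the basic variable $x_5$ and solving for $x_3$ in its place, we get:

$$
\begin{matrix}
x_1 & = & (\,-3 - 3x_5 + x_4\,)\,/\,1 \\
x_2 & = & (\,9 + 7x_5 - 3x_4\,)\,/\,1 \\
x_3 & = & (\,2 + 2x_5 - x_4\,)\,/\,1 \\
x_6 & = & (\,-\mathbf{4} - 7x_5 + \underline{\mathbf{2x_4}}\,)\,/\,1
\end{matrix}
$$

According to the cycle-free rule of the previous example, we would now have to choose $x_1$ as the basic variable to be exchanged. Instead, we follow the modified rule and choose the basic variable $x_6$, which leads to the following system:

$$
\begin{matrix}
x_1 & = & (\,-\mathbf{2} + \underline{\mathbf{x_5}} + x_6\,)\,/\,2 \\
x_2 & = & (\,6 - 7x_5 - 3x_6\,)\,/\,2 \\
x_3 & = & (\,0 - 3x_5 - x_6\,)\,/\,2 \\
x_4 & = & (\,4 + 7x_5 + x_6\,)\,/\,2
\end{matrix}
$$

This system again has the same entries as the starting system; but because they are still assigned to other variables, the cycle is not yet complete after these 4 steps. Nevertheless, it is easy to check that the algorithm returns to the equations of the starting system, listed in a different order, after a total of 6 steps, and to the exact starting system after 12 steps. The whole system of equations and inequalities actually has no solution at all, but the pivot method cannot detect this with the above pivot selection.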
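The cycle can be reproduced with a few lines of code. In the following Python sketch the names `pivot` and `most_negative_rule` and the dictionary layout `{i: (b_i, {j: G_ij})}` are assumptions for this illustration. Because Python dictionaries compare equal regardless of the order in which rows and columns are listed, the repetition already shows up after 6 pivots.

```python
from fractions import Fraction


def pivot(system, r, s):
    """Solve row r for x_s and substitute the result into all other rows."""
    b_r, g_r = system[r]
    p = Fraction(g_r[s])
    new_b = -b_r / p
    new_g = {j: -g / p for j, g in g_r.items() if j != s}
    new_g[r] = 1 / p
    result = {s: (new_b, new_g)}
    for i, (b_i, g_i) in system.items():
        if i != r:
            sigma = g_i[s]
            row = {j: g_i.get(j, 0) + sigma * new_g[j] for j in new_g}
            result[i] = (b_i + sigma * new_b, row)
    return result


def most_negative_rule(system):
    """Row with the most negative constant, then smallest column with G > 0."""
    r = min((i for i in system if system[i][0] < 0),
            key=lambda i: (system[i][0], i), default=None)
    if r is None:
        return None
    columns = [j for j, g in system[r][1].items() if g > 0]
    return (r, min(columns)) if columns else None


def row(const, c1, c2, denom=2):
    """Row (const + c1*x1 + c2*x2) / denom of the starting system."""
    return (Fraction(const, denom),
            {1: Fraction(c1, denom), 2: Fraction(c2, denom)})


start = {3: row(-2, 1, 1), 4: row(6, -7, -3), 5: row(0, -3, -1), 6: row(4, 7, 1)}

system, seen, pivots = start, [], []
while system not in seen:          # stop as soon as a system repeats
    seen.append(system)
    choice = most_negative_rule(system)
    if choice is None:
        break
    pivots.append(choice)
    system = pivot(system, *choice)

print(len(pivots), pivots, system == start)
```

Recording the systems already visited, as done here with the list `seen`, is a simple way to detect such a loop, but it does not repair the selection rule.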

The order in which the variables and equations of a pivot system are listed is basically arbitrary. Nevertheless, the first pivot selection strategies that treat variables and equations independently of their representation in the pivot system (and that were also easy to implement) were only introduced by Bland in 1977.

In the early days of pivot methods (1950–1970), when no strict distinction was made between algorithms and data structures, pivot selection strategies tended to be described in terms of data structures (so-called tableaus), and with this type of strategy the finiteness of the method usually could not be guaranteed without additional calculations. If, for example, the pivot selection rule considered here is changed in the sense of the originally used Dantzig selection, in which simply the first of the rows and columns in question is selected, that does not help either. The selection rule would then be

- Choose the smallest with ,

- Then choose the smallest with ,

- The Pivot is ,

but in the above example this leads into exactly the same endless loop.

Duality

Dual task

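Each linear optimization problem, which in this context is also called the primal problem, can be assigned a second optimization problem that depends on the above book form; the coefficient matrix of this so-called dual problem is the negative transpose of the coefficient matrix of the original problem:

$$
\begin{matrix}
w & = & -f & - & b_{n+1} \, y_{n+1} & - & \cdots & - & b_{n+m} \, y_{n+m} \\
y_1 & = & -d_1 & - & G_{n+1,1} \, y_{n+1} & - & \cdots & - & G_{n+m,1} \, y_{n+m} \\
\vdots & & \vdots & & \vdots & & & & \vdots \\
y_n & = & -d_n & - & G_{n+1,n} \, y_{n+1} & - & \cdots & - & G_{n+m,n} \, y_{n+m}
\end{matrix}
$$

In compact form, this becomes:

$$
\begin{matrix}
& & w & = & (-f) & + & \sum_{i \in B} (-b_i) \, y_i \\
\forall \, j \in D & & y_j & = & (-d_j) & + & \sum_{i \in B} (-G_{i,j}) \, y_i
\end{matrix}
$$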

(Caution: When deriving from this formulation, it must not be replaced by! Often the dual task is also defined with the objective function instead of , which is feasible but also more confusing.)

As will be shown in a moment, the maximum of the dual problem (if any) is exactly the negative of the maximum of the primal problem.

Gradual conversion

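The above relationship between the coefficients of the primal problem and the dual problem holds not only for the starting basis; it is retained as long as the basic variables of both problems are exchanged by the same pivots. For every basis $\pi$ we have

$$
\begin{matrix}
& & w & = & (-f^{\pi}) & + & \sum_{i \in B(\pi)} (-b_i^{\pi}) \, y_i \\
\forall \, j \in D(\pi) & & y_j & = & (-d_j^{\pi}) & + & \sum_{i \in B(\pi)} (-G_{i,j}^{\pi}) \, y_i
\end{matrix}
$$

This duality relationship is most easily seen in a pivot system that contains only two independent unknowns and two basic unknowns. We get the same system whether we first exchange two of the unknowns and then derive the dual problem, or perform these steps in reverse order. In the following commutative diagram, the primal system is on the left and the dual system on the right; the two sides are connected by the negative transposition $-[\cdots]^{T}$, and the two rows by the pivot step.

Before the pivot step:

$$
\begin{matrix}
\delta \, x_i & = & (\alpha) \, x_j & + & (\sigma) \, x_s \\
\delta \, x_r & = & (\zeta) \, x_j & + & (p) \, x_s
\end{matrix}
\qquad \xrightarrow{\;-[\cdots]^{T}\;} \qquad
\begin{matrix}
\delta \, y_j & = & (-\alpha) \, y_i & + & (-\zeta) \, y_r \\
\delta \, y_s & = & (-\sigma) \, y_i & + & (-p) \, y_r
\end{matrix}
$$

After the pivot step:

$$
\begin{matrix}
p \, x_i & = & \left(\tfrac{\alpha p \, - \, \zeta \sigma}{\delta}\right) x_j & + & (\sigma) \, x_r \\
p \, x_s & = & (-\zeta) \, x_j & + & (\delta) \, x_r
\end{matrix}
\qquad \xrightarrow{\;-[\cdots]^{T}\;} \qquad
\begin{matrix}
p \, y_j & = & \left(\tfrac{\zeta \sigma \, - \, \alpha p}{\delta}\right) y_i & + & (\zeta) \, y_s \\
p \, y_r & = & (-\sigma) \, y_i & + & (-\delta) \, y_s
\end{matrix}
$$

From the duality relationship it follows that an optimal system for the primal problem also provides an optimal system for the dual problem.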
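The commutativity of pivoting and dualizing can also be checked numerically. The following Python sketch is an illustration only: the names `pivot` and `dual_of`, the key `0` for the rows of $z$ and $w$, and the layout `{i: (constant, {j: coefficient})}` are assumptions. It uses the small example system with target variable from the section on optimum conditions and compares both orders of operations.

```python
from fractions import Fraction

Z = 0  # assumed key of the target row (z in the primal, w in the dual)


def pivot(system, r, s):
    """Solve row r for variable s and substitute into all other rows."""
    b_r, g_r = system[r]
    p = Fraction(g_r[s])
    new_b = -b_r / p
    new_g = {j: -g / p for j, g in g_r.items() if j != s}
    new_g[r] = 1 / p
    result = {s: (new_b, new_g)}
    for i, (b_i, g_i) in system.items():
        if i != r:
            sigma = g_i[s]
            row = {j: g_i.get(j, 0) + sigma * new_g[j] for j in new_g}
            result[i] = (b_i + sigma * new_b, row)
    return result


def dual_of(system):
    """Negative transpose: constants and target coefficients swap roles."""
    columns = list(next(iter(system.values()))[1])
    basics = [i for i in system if i != Z]
    dual = {Z: (-system[Z][0], {i: -system[i][0] for i in basics})}
    for j in columns:
        dual[j] = (-system[Z][1][j], {i: -system[i][1][j] for i in basics})
    return dual


# z = -3 x1 + x2, x3 = 3 - x1 - x2, x4 = 8 + 2 x1 - 4 x2, x5 = -1 + 3 x1 + x2
primal = {
    Z: (0, {1: -3, 2: 1}),
    3: (3, {1: -1, 2: -1}),
    4: (8, {1: 2, 2: -4}),
    5: (-1, {1: 3, 2: 1}),
}

# Exchange x5 and x1 in the primal, then dualize ...
left = dual_of(pivot(primal, 5, 1))
# ... or dualize first and exchange y1 and y5 in the dual.
right = pivot(dual_of(primal), 1, 5)
print(left == right)
```

Note that the roles of row and column index are swapped in the dual: the primal pivot $(r, s)$ corresponds to exchanging the basic dual variable $y_s$ with the independent dual variable $y_r$.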

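The problem in the first worked example has the following dual problem (the zeros come from $z = 0$):

$$
\begin{matrix}
w & = & 0 & +\,2y_3 & +\,4y_4 & -\,9y_5 \\
y_1 & = & 0 & +\,7y_3 & +\,5y_4 & -\,2y_5 \\
y_2 & = & 0 & -\,2y_3 & -\,2y_4 & +\,3y_5
\end{matrix}
$$

The above algorithm then leads to the optimal system, which by the duality relationship is the negative transpose of the optimal primal system:

$$
\begin{matrix}
w & = & (\,0 - 6y_1 - 37y_2 - 10y_3\,)\,/\,11 \\
y_4 & = & (\,0 + 3y_1 + 2y_2 - 17y_3\,)\,/\,11 \\
y_5 & = & (\,0 + 2y_1 + 5y_2 - 4y_3\,)\,/\,11
\end{matrix}
$$

and the corresponding optimal solution is of course $y_j = 0$ for all $j$. The primal problem had the implicit objective function $z = 0$; all optimal solutions of the primal and of the dual problem would therefore have the target value $0$, if any exist. This is the same value that the initial solution of the dual problem had, but the existence of an optimal solution is not evident from the first system of equations: there could have been solutions with arbitrarily large $w$ and thus no optimal solution at all.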

Solution pairs

A theoretically significant consequence of duality theory is that we do not necessarily need a maximization algorithm to solve linear optimization problems; any algorithm that solves systems of linear inequalities is sufficient. From the duality relationship it follows that every optimal basis of the original problem also directly provides an optimal basis of the dual problem; the optimal value of the target variable $w$ is then the negative of the optimal value of $z$. Accordingly, for feasible pairs of solutions of the two problems we have

$$z + w \le 0$$

and for optimal pairs of solutions

$$z + w = 0$$

From this it follows that the optimal solutions of both problems are exactly the solutions of the above systems of equations together with the following inequalities:

$$x_1, \dots, x_{n+m} \ge 0, \qquad y_1, \dots, y_{n+m} \ge 0, \qquad z + w \ge 0$$

Written out, the last inequality is

$$\sum_{j \in D} d_j \, x_j \; - \; \sum_{i \in B} b_i \, y_i \;\ge\; 0$$

In practice, such a procedure is of course only competitive if the common data structure of both tasks is also used.
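The inequality between the two target values can be derived directly from the compact forms of the primal and the dual problem. The following short calculation is a sketch under the notation used above; it only assumes that $x$ and $y$ satisfy the respective systems of equations.

```latex
\begin{aligned}
z + w &= \sum_{j \in D} d_j \, x_j \;-\; \sum_{i \in B} b_i \, y_i \\
      &= \sum_{j \in D} \Bigl( -y_j - \sum_{i \in B} G_{i,j} \, y_i \Bigr) x_j
         \;-\; \sum_{i \in B} \Bigl( x_i - \sum_{j \in D} G_{i,j} \, x_j \Bigr) y_i \\
      &= -\sum_{j \in D} x_j \, y_j \;-\; \sum_{i \in B} x_i \, y_i
       \;=\; -\sum_{k=1}^{n+m} x_k \, y_k \;\le\; 0
       \qquad \text{for } x, y \ge 0 .
\end{aligned}
```

The second line substitutes $d_j = -y_j - \sum_{i} G_{i,j} y_i$ from the dual equations and $b_i = x_i - \sum_{j} G_{i,j} x_j$ from the primal equations; the mixed terms cancel. Equality $z + w = 0$ therefore holds exactly when $x_k \, y_k = 0$ for every index $k$.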

Special pivot methods

Among the simplest of all pivot methods are the criss-cross methods, which were developed in the 1980s for problems in the context of oriented matroids. The considerably more complex simplex methods were published by George Dantzig as early as 1947 for the solution of linear optimization problems and, thanks to their widespread use, were a major motivation for the search for criss-cross methods. Further pivot methods have been developed for the linear complementarity problem with sufficient matrices (including quadratic programming) and for linear-fractional optimization problems.

When different pivot methods are designed, the main aim is to keep the number of pivot steps, and thus the running time of the method, low. While all currently known simplex methods have a superpolynomial worst-case running time, that is, a running time that cannot be bounded by a polynomial in the storage size of the data, running time bounds for criss-cross methods were an open research topic (as of 2010). In summary, criss-cross methods have more degrees of freedom than simplex methods, and precisely for this reason a criss-cross method can be faster than simplex methods with a good pivot selection and slower with a poor one.

Primal simplex method

Primal simplex methods (mostly just called simplex methods) start from a so-called feasible basis with $b_i \ge 0$ for all $i \in B$ and examine only feasible bases until an optimal basis is found. An important property of primal simplex methods is that the value of the target variable, that is $f$, increases monotonically with each step; if it increased strictly, the finiteness of the method would be assured.

A primal simplex method has to choose its pivots $(r, s)$ as follows:

- Pick any $s \in D$ that satisfies $d_s > 0$. For example, find the smallest $s$ with this property (Bland's rule).

- Pick any $r \in B$ with $G_{r,s} < 0$ that satisfies $\frac{b_r}{-G_{r,s}} = \min\left\{ \frac{b_i}{-G_{i,s}} : i \in B, \; G_{i,s} < 0 \right\}$. For example, find the smallest $r$ with this property (Bland's rule).
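The two selection steps can be sketched in a few lines of Python. Everything named here is an assumption for the illustration: the functions `pivot` and `primal_simplex_bland`, the key `0` for the target row, and the small starting system (maximize $z = 3x_1 + 2x_2$ with $x_3 = 4 - x_1 - x_2$ and $x_4 = 2 - x_1$), which is not taken from the article.

```python
from fractions import Fraction

Z = 0  # assumed key of the target row


def pivot(system, r, s):
    """Solve row r for x_s and substitute the result into all other rows."""
    b_r, g_r = system[r]
    p = Fraction(g_r[s])
    new_b = -b_r / p
    new_g = {j: -g / p for j, g in g_r.items() if j != s}
    new_g[r] = 1 / p
    result = {s: (new_b, new_g)}
    for i, (b_i, g_i) in system.items():
        if i != r:
            sigma = g_i[s]
            row = {j: g_i.get(j, 0) + sigma * new_g[j] for j in new_g}
            result[i] = (b_i + sigma * new_b, row)
    return result


def primal_simplex_bland(system):
    """Primal simplex steps with Bland's rule; needs a feasible start."""
    pivots = []
    while True:
        improving = [j for j, dj in system[Z][1].items() if dj > 0]
        if not improving:
            return system, pivots              # optimal basis reached
        s = min(improving)                     # smallest index with d_s > 0
        ratios = {i: Fraction(b) / -g[s]
                  for i, (b, g) in system.items() if i != Z and g[s] < 0}
        if not ratios:
            raise ValueError("problem is unbounded")
        smallest = min(ratios.values())
        r = min(i for i, q in ratios.items() if q == smallest)
        pivots.append((r, s))
        system = pivot(system, r, s)


start = {Z: (0, {1: 3, 2: 2}), 3: (4, {1: -1, 2: -1}), 4: (2, {1: -1, 2: 0})}
final, pivots = primal_simplex_bland(start)
print(pivots, final[Z][0], {i: b for i, (b, _) in final.items() if i != Z})
```

The ratio test keeps every basis feasible: the chosen row is the one whose basic variable reaches zero first when $x_s$ is increased.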

To obtain a feasible starting basis, an auxiliary problem must be solved in a so-called phase 1. This can be done by inserting a new objective function with arbitrary non-positive entries and maximizing the dual problem, for which the starting basis is now feasible. From a statistical point of view, it is advantageous to choose the entries of the new objective function as random negative numbers.

A standard result of linear optimization says that for every solvable problem and every feasible basis there is a sequence of allowed pivots that leads to an optimal basis using only feasible bases; however, it is not known whether there is such a sequence whose length can be bounded polynomially in the storage size of the data.

Dual simplex processes

Dual simplex methods are pivot methods that start from a so-called dual-feasible basis with $d_j \le 0$ for all $j \in D$ and, in their search for an optimal basis, examine only dual-feasible bases; the value of the target variable decreases monotonically.

A dual simplex method chooses its pivots $(r, s)$ as follows:

- Pick any $r \in B$ that satisfies $b_r < 0$. For example, find the smallest $r$ with this property (Bland's rule).

- Pick any $s \in D$ with $G_{r,s} > 0$ that satisfies $\frac{-d_s}{G_{r,s}} = \min\left\{ \frac{-d_j}{G_{r,j}} : j \in D, \; G_{r,j} > 0 \right\}$. For example, find the smallest $s$ with this property (Bland's rule).
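A dual simplex step mirrors the primal one with the roles of rows and columns exchanged. In the following Python sketch the names `pivot` and `dual_simplex_bland`, the key `0` for the target row and the starting system (maximize $z = -x_1 - 2x_2$ with $x_3 = -2 + x_1 + x_2$ and $x_4 = -3 + x_1 + 3x_2$) are assumptions made for this illustration and are not taken from the article.

```python
from fractions import Fraction

Z = 0  # assumed key of the target row


def pivot(system, r, s):
    """Solve row r for x_s and substitute the result into all other rows."""
    b_r, g_r = system[r]
    p = Fraction(g_r[s])
    new_b = -b_r / p
    new_g = {j: -g / p for j, g in g_r.items() if j != s}
    new_g[r] = 1 / p
    result = {s: (new_b, new_g)}
    for i, (b_i, g_i) in system.items():
        if i != r:
            sigma = g_i[s]
            row = {j: g_i.get(j, 0) + sigma * new_g[j] for j in new_g}
            result[i] = (b_i + sigma * new_b, row)
    return result


def dual_simplex_bland(system):
    """Dual simplex steps with Bland's rule; needs d_j <= 0 at the start."""
    pivots, values = [], [system[Z][0]]
    while True:
        infeasible = [i for i, (b, _) in system.items() if i != Z and b < 0]
        if not infeasible:
            return system, pivots, values      # optimal basis reached
        r = min(infeasible)                    # smallest index with b_r < 0
        d = system[Z][1]
        ratios = {j: Fraction(-d[j]) / g
                  for j, g in system[r][1].items() if g > 0}
        if not ratios:
            raise ValueError("problem is infeasible")
        smallest = min(ratios.values())
        s = min(j for j, q in ratios.items() if q == smallest)
        pivots.append((r, s))
        system = pivot(system, r, s)
        values.append(system[Z][0])


start = {Z: (0, {1: -1, 2: -2}), 3: (-2, {1: 1, 2: 1}), 4: (-3, {1: 1, 2: 3})}
final, pivots, values = dual_simplex_bland(start)
print(pivots, values, {i: b for i, (b, _) in final.items() if i != Z})
```

The list `values` records the target value after each step and shows the monotone decrease mentioned above.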

Dual simplex methods generate the same pivot sequences as the primal simplex methods applied to the dual problem and therefore basically have the same properties as the primal methods. That they are nevertheless preferred to the primal methods for solving many applied problems is due to the fact that a dual-feasible starting basis is easier to find for many applied problems.

Criss-cross method

Criss-cross methods are general pivot methods that start from an arbitrary basis; as a rule, the name is used for combinatorial pivot methods, that is, for pivot methods that take into account only the signs of the system coefficients, and not the coefficients themselves, for pivot selection.

The best known of all criss-cross methods extends the smallest-index pivot selection from the first example. To do this, the unknowns are arranged in a more or less fixed order and the pivots are selected as follows (as usual, the minimum of the empty set is infinitely large):

- Find the indices $r = \min\{i \in B : b_i < 0\}$ and $s = \min\{j \in D : d_j > 0\}$.

- If $r < s$, choose the pivot $(r, s')$ with $s' = \min\{j \in D : G_{r,j} > 0\}$.

- If $s < r$, choose the pivot $(r', s)$ with $r' = \min\{i \in B : G_{i,s} < 0\}$.
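The three steps above can be sketched as follows. The Python names `pivot` and `criss_cross` and the key `0` for the target row are assumptions for this illustration; the starting system is the small example with target variable from the section on optimum conditions, which is neither primal nor dual feasible, so neither simplex variant could start from it directly.

```python
import math
from fractions import Fraction

Z = 0  # assumed key of the target row


def pivot(system, r, s):
    """Solve row r for x_s and substitute the result into all other rows."""
    b_r, g_r = system[r]
    p = Fraction(g_r[s])
    new_b = -b_r / p
    new_g = {j: -g / p for j, g in g_r.items() if j != s}
    new_g[r] = 1 / p
    result = {s: (new_b, new_g)}
    for i, (b_i, g_i) in system.items():
        if i != r:
            sigma = g_i[s]
            row = {j: g_i.get(j, 0) + sigma * new_g[j] for j in new_g}
            result[i] = (b_i + sigma * new_b, row)
    return result


def criss_cross(system):
    """Smallest-index criss-cross method starting from an arbitrary basis."""
    pivots = []
    while True:
        r = min((i for i, (b, _) in system.items() if i != Z and b < 0),
                default=math.inf)
        s = min((j for j, dj in system[Z][1].items() if dj > 0),
                default=math.inf)
        if r == math.inf and s == math.inf:
            return system, pivots              # both optimum conditions hold
        if r < s:
            columns = [j for j, g in system[r][1].items() if g > 0]
            if not columns:
                raise ValueError("problem is infeasible")
            choice = (r, min(columns))
        else:
            rows = [i for i, (_, g) in system.items() if i != Z and g[s] < 0]
            if not rows:
                raise ValueError("problem has no optimal solution")
            choice = (min(rows), s)
        pivots.append(choice)
        system = pivot(system, *choice)


start = {
    Z: (0, {1: -3, 2: 1}),
    3: (3, {1: -1, 2: -1}),
    4: (8, {1: 2, 2: -4}),
    5: (-1, {1: 3, 2: 1}),
}
final, pivots = criss_cross(start)
print(pivots, final[Z][0], {i: b for i, (b, _) in final.items() if i != Z})
```

Only comparisons with zero enter the pivot selection, which is what makes the rule combinatorial; the coefficients themselves are needed only to carry out the exchange.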

This of course leaves the question of how the variables should be arranged.

Example of a criss-cross method

In the following example we are using the Smallest Index Pivot Selection above. The aim is to find values for the variables that make up the system of equations

meet and thereby bring the additional target variable to a maximum. We use the pivot selection of the smallest index mentioned above.

All pivots are allowed in our original system; However, the selection rule stipulates that we expose and exchange for:

This leads to the new system of equations:

Here the pivots , and , are allowed; based on the selection rule, we disclose instead of :

We get the system:

The allowed pivots of this system of equations are and ; we therefore disclose instead of :

Now we get the system:

This system of equations is optimal; are the values of the unknowns for the corresponding solution

Large problems

Implementing a pivot method for practical problems is often far from trivial. The entries of large systems of equations, with tens of thousands of variables, almost always have some structure that must be exploited to perform the calculation quickly and with few rounding errors:

- In the starting system of large problems (not in the converted systems of equations), the overwhelming majority of the entries are zero (the system is sparse), which makes it possible to save a large part of the calculations if later conversions also partly start from the starting system.

- In methods with delayed evaluation (by modifying the starting matrix, partial LU decomposition of the coefficient matrix, product form of the inverse matrix and other techniques), an entry is calculated only when it is actually needed to find the pivot. In doing so, however, one often has to fall back on entries from older systems of equations, so that the formulas for the calculation become more complicated and more varied.

- For some special structures, such as the network flow problem, particularly efficient implementations have been developed, and these special structures are often embedded in larger systems.

Nevertheless, in practice there are also smaller problems for which the direct implementation described above makes sense.

Literature

- George B. Dantzig: Lineare Programmierung und Erweiterungen (= Ökonometrie und Unternehmensforschung. Volume 2). Springer, Berlin et al. 1966 (Original edition: Linear Programming and Extensions. Princeton University Press, Princeton NJ 1963).

- Vašek Chvátal: Linear Programming. Freeman and Company, New York NY 1983, ISBN 0-7167-1587-2.

- Robert J. Vanderbei: Linear Programming. Foundations and Extensions (= International Series in Operations Research & Management Science. Volume 114). 3rd edition. Springer, New York NY 2007, ISBN 978-0-387-74387-5 (alternative edition: Linear Programming. Foundations and Extensions, Kluwer, ISBN 978-0-7923-9804-2).

References

- Robert Vanderbei: Linear Programming. Foundations and Extensions (= International Series in Operations Research & Management Science. Vol. 114). 3rd edition. Springer, New York NY 2007, ISBN 978-0-387-74387-5.

- Robert Vanderbei: Linear Programming. Foundations and Extensions (= International Series in Operations Research & Management Science. Vol. 114). 3rd edition. Springer, New York NY 2007, ISBN 978-0-387-74387-5, Chapter 21.4: Simplex Method vs Interior-Point Methods.

- Vašek Chvátal: Linear Programming. Freeman and Company, New York NY 1983, ISBN 0-7167-1587-2.

- Komei Fukuda, Tamás Terlaky: On the Existence of a Short Admissible Pivot Sequences for Feasibility and Linear Optimization Problems. In: Pure Mathematics and Applications. Vol. 10, 1999, ISSN 1218-4586, pp. 431–447.

- Komei Fukuda, Tamás Terlaky: Criss-cross methods: A fresh view on pivot algorithms. In: Mathematical Programming. Vol. 79, No. 1/3, 1997, ISSN 0025-5610, pp. 369–395, doi:10.1007/BF02614325.

- Robert G. Bland: New finite pivoting rules for the simplex method. In: Mathematics of Operations Research. Vol. 2, No. 2, 1977, pp. 103–107, doi:10.1287/moor.2.2.103.

- Shuzhong Zhang: New variants of finite criss-cross pivot algorithms for linear programming. In: European Journal of Operational Research. Vol. 116, No. 3, 1999, ISSN 0377-2217, pp. 607–614, doi:10.1016/S0377-2217(98)00026-5.

- Komei Fukuda, Bohdan Kaluzny: The criss-cross method can take Ω(n^d) pivots. In: Proceedings of the Twentieth Annual Symposium on Computational Geometry (SCG '04). June 9–11, 2004, Brooklyn, New York, USA. ACM Press, New York NY 2004, ISBN 1-58113-885-7, pp. 401–408, doi:10.1145/997817.997877.

- Robert Vanderbei: Linear Programming. Foundations and Extensions (= International Series in Operations Research & Management Science. Vol. 114). 3rd edition. Springer, New York NY 2007, ISBN 978-0-387-74387-5, Chapter 8: Implementation Issues.

- Robert Vanderbei: Linear Programming. Foundations and Extensions (= International Series in Operations Research & Management Science. Vol. 114). 3rd edition. Springer, New York NY 2007, ISBN 978-0-387-74387-5, Chapter 13: Network Flow Problems.

Web links

- Interactive pivot method applet by Robert Vanderbei from 1997. The applet allows the user to set up a linear system of equations with exposed basic variables and then to rearrange any variables of this system of equations. Although the applet is called "Simplex Pivot Tool", it is designed for very general pivot methods. The coefficients can also be viewed as fractions without rounding, but are not brought to a common denominator.

- Additional information (English) on the criss-cross method .

![{\ begin {matrix} z & = & f & + & ~~~~~ d_ {1} \, x_ {1} & + & \ cdots & + & ~~~~~ d_ {n} \, x_ {n} \ \ [3pt] x _ {{n + 1}} & = & b _ {{n + 1}} & + & G _ {{n + 1,1}} \, x_ {1} & + & \ cdots & + & G _ {{ n + 1, n}} \, x_ {n} \\\ vdots && \ vdots && \ vdots &&&& \ vdots \\ x _ {{n + m}} & = & b _ {{n + m}} & + & G_ { {n + m, 1}} \, x_ {1} & + & \ cdots & + & G _ {{n + m, n}} \, x_ {n} \ end {matrix}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/f09bbf72db1fcfbcd303a394cd2b9d896d89c800)

![{\ begin {matrix} && z & = & f & + & \ sum _ {{j \ in D}} \, d_ {j} \, x_ {j} \\ [6pt] \ forall ~ i \ in B && x_ {i} & = & b_ {i} & + & \ sum _ {{j \ in D}} \, G _ {{i, j}} \, x_ {j} \ end {matrix}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/794d9b6a3388e029e919524b86bb0475a800bf25)

![{\ begin {matrix} z & = & ~~~ 0 & - ~ 3x_ {1} & + ~~ {\ mathbf {x_ {2}}} \\ [2pt] x_ {3} & = & ~~~ 3 & - ~~ x_ {1} & - ~~ x_ {2} \\ [2pt] x_ {4} & = & ~~~ 8 & + ~ 2x_ {1} & - ~ 4x_ {2} \\ [2pt] x_ { 5} & = & - ~ {\ mathbf {1}} & + ~ 3x_ {1} & + ~~ x_ {2} \ ,, \ end {matrix}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/83ee0a46614d4a24aa32b5c07167f58b0d55db10)

![{\ displaystyle {\ begin {matrix} && z & = & f ^ {\ pi} & + & \ sum _ {j \ in D (\ pi)} \, d_ {j} ^ {\ pi} \, x_ {j} \\ [6pt] \ forall ~ i \ in B (\ pi) && x_ {i} & = & b_ {i} ^ {\ pi} & + & \ sum _ {j \ in D (\ pi)} \, G_ {i, j} ^ {\ pi} \, x_ {j} \ end {matrix}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/a94ebfb6ee88e4f6b35e70a0a1e7a810390755e1)

![{\ begin {matrix} z & = & ~~~ 0 & - ~ 3x_ {1} & + ~~~ {\ mathbf {x_ {2}}} \\ [2pt] x_ {3} & = & ~~~ 3 & - ~~ x_ {1} & - ~~~ \ underline {{\ mathbf {x_ {2}}}} \\ [2pt] x_ {4} & = & ~~~ 8 & + ~ 2x_ {1} & - ~ \ underline {{\ mathbf {4x_ {2}}}} \\ [2pt] x_ {5} & = & - ~ {\ mathbf {1}} & + ~ \ underline {{\ mathbf {3x_ {1} }}} & + ~~~ \ underline {{\ mathbf {x_ {2}}}} \ ,, \ end {matrix}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/8843209cdeaf836610ea941fe382442894fe35aa)

![{\ begin {matrix} \ delta \, x_ {i} & \! = \! & (~~ \ alpha) & \! \! \! x_ {j} & \! + \! & (~~ \ sigma ) & \! \! \! x_ {s} \\ [6pt] \ delta \, x_ {r} & \! = \! & (~~ \ zeta) & \! \! \! x_ {j} & \! + \! & (~~ p) & \! \! \! x_ {s} \ end {matrix}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/6d0c16c9d414104fbef3eece921fac518843e3e8)

![{\ begin {matrix} p \, x_ {i} & \! = \! & (\ textstyle {\ frac {\ alpha p \, - \, \ zeta \ sigma} {\ delta}}) & \! \ ! \! x_ {j} & \! + \! \! & (~ \ sigma) & \! \! \! x_ {r} \\ [6pt] p \, x_ {s} & \! = \! & (~ - \ zeta ~) & \! \! \! x_ {j} & \! + \! \! & (~ \ delta) & \! \! \! x_ {r} \\\ end {matrix }}](https://wikimedia.org/api/rest_v1/media/math/render/svg/de60e809db57a4b70121bd2c1b367a6d9cafae7a)

![{\ begin {matrix} x_ {3} & = & (& - ~ {\ mathbf {2}} & - ~ 7x_ {1} & + ~ \ underline {{\ mathbf {2x_ {2}}}} &) ~ / ~ 1 \\ [2pt] x_ {4} & = & (& - ~ 4 & - ~ 5x_ {1} & + ~ 2x_ {2} &) ~ / ~ 1 \\ [2pt] x_ {5} & = & (& ~~~ 9 & + ~ 2x_ {1} & - ~ 3x_ {2} &) ~ / ~ 1 \ end {matrix}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/d756f762e61c4a8d485295c611df8c1dfdc1d13c)

![{\ begin {matrix} x_ {2} & = & (& ~~~ 2 & + ~~ 7x_ {1} & + ~~~~ x_ {3} &) ~ / ~ 2 \\ [2pt] x_ {4 } & = & (& - ~ {\ mathbf {4}} & + ~~ \ underline {{\ mathbf {4x_ {1}}}} & + ~~~ 2x_ {3} &) ~ / ~ 2 \\ [2pt] x_ {5} & = & (& ~~ 12 & - ~ 17x_ {1} & - ~~~ 3x_ {3} &) ~ / ~ 2 \ end {matrix}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/b989c48f52ce7c22d6a52b0687d34abed75ecd75)

![{\ begin {matrix} x_ {2} & = & (& ~~ 18 & + ~~ 7x_ {4} & - ~~ 5x_ {3} &) ~ / ~ 4 \\ [2pt] x_ {1} & = & (& ~~~ 4 & + ~~ 2x_ {4} & - ~~ 2x_ {3} &) ~ / ~ 4 \\ [2pt] x_ {5} & = & (& - {\ mathbf {10}} & - ~ 17x_ {4} & + ~ \ underline {{\ mathbf {11x_ {3}}}} &) ~ / ~ 4 \ end {matrix}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/3a751d58c9c09942b25037b36dba943c0ed6a049)

![{\ begin {matrix} x_ {2} & = & (& ~~ 37 & - ~~ 2x_ {4} & - ~~~ 5x_ {5} &) ~ / ~ 11 \\ [2pt] x_ {1} & = & (& ~~~ 6 & - ~~ 3x_ {4} & - ~~~ 2x_ {5} &) ~ / ~ 11 \\ [2pt] x_ {3} & = & (& ~~ 10 & + ~ 17x_ {4} & + ~~~ 4x_ {5} &) ~ / ~ 11 \ end {matrix}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/5659c33c9714cce76035a79f2832736b8dc06fb3)

![{\ displaystyle {\ begin {matrix} x_ {3} & = & (& - ~ \ mathbf {2} & + ~~ {\ underline {\ mathbf {x_ {1}}}} & + ~~ x_ {2 } &) ~ / ~ 2 \\ [2pt] x_ {4} & = & (& ~~~ 6 & - ~ 7x_ {1} & - ~ 3x_ {2} &) ~ / ~ 2 \\ [2pt] x_ {5} & = & (& ~~~ 0 & - ~ 3x_ {1} & - ~~ x_ {2} &) ~ / ~ 2 \\ [2pt] x_ {6} & = & (& ~~~ 4 & + ~ 7x_ {1} & + ~~ x_ {2} &) ~ / ~ 2 \ end {matrix}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/f3e08ba99842d1c0bf118ad3e24560626005ddf0)

![{\ displaystyle {\ begin {matrix} x_ {1} & = & (& ~~~ 2 & + ~ 2x_ {3} & - ~~ x_ {2} &) ~ / ~ 1 \\ [2pt] x_ {4 } & = & (& - ~ \ mathbf {4} & - ~ 7x_ {3} & + ~ {\ underline {\ mathbf {2x_ {2}}}} &) ~ / ~ 1 \\ [2pt] x_ { 5} & = & (& - ~~ 3 & - ~ 3x_ {3} & + ~~ x_ {2} &) ~ / ~ 1 \\ [2pt] x_ {6} & = & (& ~~~ 9 & + ~ 7x_ {3} & - ~ 3x_ {2} &) ~ / ~ 1 \ end {matrix}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/4404997705ea0deb944cb8483e14a899e122e98e)

![{\ displaystyle {\ begin {matrix} x_ {1} & = & (& ~~~ 0 & - ~ 3x_ {3} & - ~~ x_ {4} &) ~ / ~ 2 \\ [2pt] x_ {2 } & = & (& ~~~ 4 & + ~ 7x_ {3} & + ~~ x_ {4} &) ~ / ~ 2 \\ [2pt] x_ {5} & = & (& - ~ \ mathbf {2 } & + ~~ {\ underline {\ mathbf {x_ {3}}}} & + ~~ x_ {4} &) ~ / ~ 2 \\ [2pt] x_ {6} & = & (& ~~~ 6 & - ~ 7x_ {3} & - ~ 3x_ {4} &) ~ / ~ 2 \ end {matrix}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/d89bbc937f287b16f0b778ebbee70ce21694b407)

![{\ displaystyle {\ begin {matrix} x_ {1} & = & (& - ~~ 3 & - ~ 3x_ {5} & + ~~ x_ {4} &) ~ / ~ 1 \\ [2pt] x_ {2 } & = & (& ~~~ 9 & + ~ 7x_ {5} & - ~ 3x_ {4} &) ~ / ~ 1 \\ [2pt] x_ {3} & = & (& ~~~ 2 & + ~ 2x_ {5} & - ~~ x_ {4} &) ~ / ~ 1 \\ [2pt] x_ {6} & = & (& - ~ \ mathbf {4} & - ~ 7x_ {5} & + ~ {\ underline {\ mathbf {2x_ {4}}}} &) ~ / ~ 1 \ end {matrix}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/0fbc097ee338568de5244e3429720f4237861e51)

![{\ displaystyle {\ begin {matrix} x_ {1} & = & (& - ~ \ mathbf {2} & + ~~ {\ underline {\ mathbf {x_ {5}}}} & + ~~ x_ {6 } &) ~ / ~ 2 \\ [2pt] x_ {2} & = & (& ~~~ 6 & - ~ 7x_ {5} & - ~ 3x_ {6} &) ~ / ~ 2 \\ [2pt] x_ {3} & = & (& ~~~ 0 & - ~ 3x_ {5} & - ~~ x_ {6} &) ~ / ~ 2 \\ [2pt] x_ {4} & = & (& ~~~ 4 & + ~ 7x_ {5} & + ~~ x_ {6} &) ~ / ~ 2 \ end {matrix}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/7d3df86e20144c7af36b4ce61a75fc56d64942bd)

![{\begin{matrix}w&=&-\;f&-&b_{1}\,y_{n+1}&-&\cdots &-&b_{m}\,y_{n+m}\\[3pt]y_{1}&=&-\;d_{1}&-&G_{n+1,1}\,y_{n+1}&-&\cdots &-&G_{n+m,1}\,y_{n+m}\\\vdots &&\vdots &&\vdots &&&&\vdots \\y_{n}&=&-\;d_{n}&-&G_{n+1,n}\,y_{n+1}&-&\cdots &-&G_{n+m,n}\,y_{n+m}\end{matrix}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/b69740dc2bd698664779fec772dc9aed203779e8)

![{\begin{matrix}&&w&=&(-f)&+&\sum_{i\in B}\,(-b_{i})\,y_{i}\\[6pt]\forall ~j\in D&&y_{j}&=&(-d_{j})&+&\sum_{i\in B}\,(-G_{i,j})\,y_{i}\end{matrix}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/97cfb41d976d5ca7639dc59afeacdb2b9f21e7a3)

![{\begin{matrix}&&w&=&(-f^{\pi})&+&\sum_{i\in B(\pi)}\,(-b_{i}^{\pi})\,y_{i}\\[6pt]\forall ~j\in D(\pi)&&y_{j}&=&(-d_{j}^{\pi})&+&\sum_{i\in B(\pi)}\,(-G_{i,j}^{\pi})\,y_{i}\end{matrix}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/dc04bdbd82b7b93d78f6266c3f911ef2f00c1b18)

![-\,[\cdots]^{\,T}](https://wikimedia.org/api/rest_v1/media/math/render/svg/2331baecd2351bac704e20ba3665c9d89f7cf742)

![{\begin{matrix}\delta \,y_{j}&\!=\!&(-\alpha)&\!\!\!y_{i}&\!+\!&(-\zeta)&\!\!\!y_{r}\\[6pt]\delta \,y_{s}&\!=\!&(-\sigma)&\!\!\!y_{i}&\!+\!&(-p)&\!\!\!y_{r}\\\end{matrix}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/ca3a749640a8ec5915c25e4f9ce875b77e426708)

![{\begin{matrix}p\,y_{j}&\!=\!&(\textstyle {\frac{\zeta \sigma \,-\,\alpha p}{\delta}})&\!\!\!y_{i}&\!+\!\!&(~~\zeta)&\!\!\!y_{s}\\[6pt]p\,y_{r}&\!=\!&(~-\sigma ~)&\!\!\!y_{i}&\!+\!\!&(-\delta)&\!\!\!y_{s}\\\end{matrix}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/3c489d21e80a4c5509a6ff8dde5f8c1d495082b9)
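
The two row pairs directly above appear to describe a single exchange of y_r against y_s with pivot element p and common denominator δ. As a sketch, assuming p ≠ 0 and δ ≠ 0, the second pair follows from the first by solving the y_s row for y_r and substituting the result into the y_j row:

```latex
\begin{aligned}
\delta\,y_s &= -\sigma\,y_i - p\,y_r
  &&\Longrightarrow\quad p\,y_r = -\sigma\,y_i - \delta\,y_s,\\
\delta\,y_j &= -\alpha\,y_i - \zeta\,y_r
  = -\alpha\,y_i + \frac{\zeta}{p}\,\bigl(\sigma\,y_i + \delta\,y_s\bigr)
  &&\Longrightarrow\quad
  p\,y_j = \frac{\zeta\sigma - \alpha p}{\delta}\,y_i + \zeta\,y_s .
\end{aligned}
```

After the exchange the pivot element p takes over the role of the common denominator, which is consistent with the denominators shown to the right of the numerical tableaux.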

![{\displaystyle {\begin{matrix}~w~&=&(&0&+~~2y_{3}&+~~4y_{4}&-~~9y_{5}&)~/~1\\[2pt]y_{1}&=&(&0&+~~7y_{3}&+~~5y_{4}&-~~2y_{5}&)~/~1\\[2pt]y_{2}&=&(&0&-~~2y_{3}&-~~2y_{4}&+~~3y_{5}&)~/~1\end{matrix}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/00761975e87bc2c13f59b2048169bb46766caeb7)

![{\displaystyle {\begin{matrix}~w~&=&(&0&-~37y_{2}&-~~6y_{1}&-~10y_{3}&)~/~11\\[2pt]y_{4}&=&(&0&+~~2y_{2}&+~~3y_{1}&-~17y_{3}&)~/~11\\[2pt]y_{5}&=&(&0&+~~5y_{2}&+~~2y_{1}&-~~4y_{3}&)~/~11\end{matrix}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/a6d31ca0cbfa88fec9ad8c5ab0dae7f7b7fbedb9)

![{\displaystyle {\begin{matrix}\sum_{j\in D}\,d_{j}\,x_{j}~~+~~\sum_{i\in B}\,(-b_{i})\,y_{i}~~\geq ~~0\\[6pt]\forall ~j\in D\quad y_{j}~=~(-d_{j})~+~\sum_{i\in B}\,(-G_{i,j})\,y_{i}\\[6pt]\forall ~i\in B\quad x_{i}~=~b_{i}~+~\sum_{j\in D}\,G_{i,j}\,x_{j}\\[6pt]\forall ~k\in D\cup B\qquad x_{k}\geq 0,\quad y_{k}\geq 0\end{matrix}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/a807537746eba2740941f39ffd167af0ceb104d4)

![{\begin{matrix}z&=&(&~~~0&+~{\mathbf{3x_{1}}}&+~2x_{2}&)~/~1\\[2pt]x_{3}&=&(&~~~3&-~\underline{\mathbf{2x_{1}}}&-~~x_{2}&)~/~1\\[2pt]x_{4}&=&(&~~~7&-~2x_{1}&-~3x_{2}&)~/~1\\[2pt]x_{5}&=&(&~~~4&-~3x_{1}&-~~x_{2}&)~/~1\end{matrix}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/9bcc63ee2d5f4d8bb3003f632c6dbe3ea4541810)

![{\begin{matrix}z&=&(&~~~9&-~3x_{3}&+~~{\mathbf{x_{2}}}&)~/~2\\[2pt]x_{1}&=&(&~~~3&-~~x_{3}&-~~\underline{\mathbf{x_{2}}}&)~/~2\\[2pt]x_{4}&=&(&~~~8&+~2x_{3}&-~4x_{2}&)~/~2\\[2pt]x_{5}&=&(&-~1&+~3x_{3}&+~~x_{2}&)~/~2\end{matrix}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/7a285d35f38bfe29a9baca8d5b20cec6888440d5)

![{\begin{matrix}z&=&(&~~~6&-~2x_{3}&-~~x_{1}&)~/~1\\[2pt]x_{2}&=&(&~~~3&-~~x_{3}&-~2x_{1}&)~/~1\\[2pt]x_{4}&=&(&-~{\mathbf{2}}&+~3x_{3}&+~\underline{\mathbf{4x_{1}}}&)~/~1\\[2pt]x_{5}&=&(&~~~1&+~~x_{3}&-~~x_{1}&)~/~1\end{matrix}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/5dff1b909d574f9103748c18887d53444e59bf8a)

![{\begin{matrix}z&=&(&~~22&-~5x_{3}&-~~x_{4}&)~/~4\\[2pt]x_{2}&=&(&~~~8&+~2x_{3}&-~2x_{4}&)~/~4\\[2pt]x_{1}&=&(&~~~2&-~3x_{3}&+~~x_{4}&)~/~4\\[2pt]x_{5}&=&(&~~~2&+~7x_{3}&-~~x_{4}&)~/~4\end{matrix}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/b7b5b1771b7e0080cfc88f743adb231e1b5a51d6)
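
The four tableaux in z above can be reproduced mechanically. The following is a minimal sketch, not the article's own procedure: it stores each row as a mapping from terms to exact fractions instead of integers over a common denominator, and the names `pivot`, `rows`, `step1`, `step2` and `step3` are hypothetical. The leaving and entering variables are read off the underlined entries of the tableaux.

```python
from fractions import Fraction


def pivot(rows, leaving, entering):
    """Exchange the basic variable `leaving` with the nonbasic `entering`.

    `rows` maps each basic variable (and the objective "z") to a dict
    {term: coefficient}; the term "1" holds the constant of the row.
    A new dictionary is returned, the input is left unchanged.
    """
    row = rows[leaving]
    p = Fraction(row[entering])  # pivot element
    if p == 0:
        raise ValueError("pivot element must be nonzero")

    # Solve the leaving row for the entering variable.
    solved = {t: -Fraction(c) / p for t, c in row.items() if t != entering}
    solved[leaving] = Fraction(1) / p

    new_rows = {}
    for name, r in rows.items():
        if name == leaving:
            continue
        factor = Fraction(r.get(entering, 0))
        # Drop the entering variable and substitute its solved expression.
        new_r = {t: Fraction(c) for t, c in r.items() if t != entering}
        for t, c in solved.items():
            new_r[t] = new_r.get(t, Fraction(0)) + factor * c
        new_rows[name] = new_r
    new_rows[entering] = solved
    return new_rows


# First tableau: z = 3*x1 + 2*x2 with three rows for x3, x4, x5.
rows = {
    "z": {"1": 0, "x1": 3, "x2": 2},
    "x3": {"1": 3, "x1": -2, "x2": -1},
    "x4": {"1": 7, "x1": -2, "x2": -3},
    "x5": {"1": 4, "x1": -3, "x2": -1},
}

step1 = pivot(rows, "x3", "x1")   # x1 enters, x3 leaves
step2 = pivot(step1, "x1", "x2")  # x2 enters, x1 leaves
step3 = pivot(step2, "x4", "x1")  # x1 enters, x4 leaves

for name, r in step3.items():
    print(name, "=", {t: str(c) for t, c in r.items()})
```

In the third tableau the constant of the x4 row is negative, so that intermediate basic solution is not feasible; the last exchange removes the negative constant. In the final dictionary all constants are nonnegative and both objective coefficients are negative, so setting x3 = x4 = 0 gives x1 = 1/2, x2 = 2 and z = 11/2, matching the last tableau.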