Speech synthesis

Speech synthesis is the artificial production of the human speaking voice. A text-to-speech (TTS) system (or reading machine) converts running text into acoustic speech output.

Two basic approaches to generating speech signals can be distinguished. On the one hand, voice recordings (samples) can be used, an approach known as signal modeling. On the other hand, the signal can be generated entirely in the computer by so-called physiological (articulatory) modeling. While the first systems were based on formant synthesis, the systems currently in industrial use are predominantly based on signal modeling.

A particular problem for speech synthesis is generating a natural speech melody (prosody).

History

Long before the invention of electronic signal processing, scientists tried to construct machines that could generate human speech. Gerbert of Aurillac (d. 1003) is credited with a “speaking head” made of bronze, which was reported to be able to say “yes” and “no”. The devices attributed to Albertus Magnus (1198–1280) and Roger Bacon (1214–1294) belong more to the realm of legend.

In 1779, the German scientist Christian Kratzenstein, who worked in Copenhagen, built a "speech organ" in response to a competition held by the St. Petersburg Academy. It was able to synthesize five long vowels (a, e, i, o and u) using free reed pipes with resonators modeled on the human vocal tract. Wolfgang von Kempelen began developing a speaking machine as early as 1760 and presented it in 1791 in his publication "Mechanism of Human Speech, Together with a Description of His Speaking Machine". Like Kratzenstein's, this synthesizer used a bellows as the equivalent of the lungs, but the actual excitation came from a single beating reed pipe, which was much closer to human anatomy. This made some vowels and plosives possible. In addition, a number of fricatives could be produced using various mechanisms. A leather tube adjoined the artificial vocal cords; it could be deformed with one hand and thus reproduced the variable geometry and resonance behavior of the vocal tract. Von Kempelen wrote:

"[One can] achieve an admirable skill in playing within a period of three weeks, especially if one turns to the Latin, French or Italian language, for German is much more difficult [due to its frequent consonant clusters]."

Charles Wheatstone built a speaking machine based on this design in 1837; a replica can be found in the Deutsches Museum. In 1857 Joseph Faber built the Euphonia, which also follows this principle.

At the end of the 19th century, interest shifted away from replicating the human speech organs (genetic speech synthesis) toward simulating the acoustic space (genematic speech synthesis). Hermann von Helmholtz, for example, was the first to synthesize vowels with the help of tuning forks that were tuned to the resonance frequencies of the vocal tract in certain vowel positions. These resonance frequencies are called formants. Speech synthesis by combining formants was the technical mainstream until the mid-1990s.

The Vocoder, a keyboard-controlled electronic speech synthesizer that was said to be clearly intelligible, was developed at Bell Labs in the 1930s. Homer Dudley developed this machine further into the Voder, which was presented at the 1939 World's Fair. The Voder used electrical oscillators to generate the formant frequencies.

The first computer-based speech synthesis systems were developed in the late 1950s, and the first complete text-to-speech system was completed in 1968. In 1961 the physicist John Larry Kelly, Jr. used an IBM 704 at Bell Labs for speech synthesis and had it sing the song Daisy Bell. Director Stanley Kubrick was so impressed by this that he incorporated it into the film 2001: A Space Odyssey.

Present

While early electronic speech synthesizers still sounded very robotic and were sometimes difficult to understand, since the turn of the millennium they have reached a quality that is sometimes hard to distinguish from human speakers. This is mainly because the technology has turned away from actually synthesizing the speech signal and instead concentrates on optimally concatenating recorded speech segments.

Synthesis

Speech synthesis presupposes an analysis of human language with regard to its phonemes, but also its prosody, because the sentence melody alone can give a sentence different meanings.

As for the synthesis process itself, there are different methods. What all of them have in common is that they use a database storing characteristic information about speech segments. Elements from this inventory are concatenated to form the desired utterance. Speech synthesis systems can be classified by the inventory of the database and, in particular, by the concatenation method. Signal synthesis tends to be simpler the larger the database is, since it then already contains elements that are closer to the desired utterance and less signal processing is needed. For the same reason, a large database usually allows more natural-sounding synthesis.

One difficulty in synthesis lies in joining inventory elements together. Since they originate from different utterances, they differ in volume, fundamental frequency and formant positions. When preprocessing the database or when joining the inventory elements, these differences must be evened out as far as possible (normalization) so as not to impair the quality of the synthesis.
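The volume part of this normalization can be illustrated with a minimal sketch. It assumes segments are plain lists of float samples; the function names, the target level of 0.1 and the linear crossfade at the join are illustrative choices, not something the text prescribes. Fundamental frequency and formant differences are not handled here.

```python
import math

def rms(samples):
    """Root-mean-square level of a list of float samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))

def normalize_rms(samples, target_rms=0.1):
    """Scale a segment so that its RMS level equals target_rms (assumed value)."""
    level = rms(samples)
    if level == 0.0:
        return list(samples)  # silent segment: nothing to scale
    gain = target_rms / level
    return [s * gain for s in samples]

def crossfade_join(left, right, overlap):
    """Concatenate two segments, blending `overlap` samples linearly at the join."""
    overlap = max(0, min(overlap, len(left), len(right)))
    if overlap == 0:
        return list(left) + list(right)
    start = len(left) - overlap
    blended = []
    for i in range(overlap):
        w = (i + 1) / (overlap + 1)  # weight of the right-hand segment
        blended.append((1.0 - w) * left[start + i] + w * right[i])
    return list(left[:start]) + blended + list(right[overlap:])

# Two synthetic "inventory elements" recorded at very different levels.
loud = [0.8 * math.sin(2 * math.pi * 100 * n / 8000) for n in range(800)]
quiet = [0.1 * math.sin(2 * math.pi * 100 * n / 8000) for n in range(800)]
joined = crossfade_join(normalize_rms(loud), normalize_rms(quiet), overlap=80)
```

After normalization both segments have the same RMS level, so the join no longer produces an audible jump in loudness.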

Unit selection

Unit selection delivers the best quality, especially within a restricted domain. The synthesis uses a large speech database in which each recorded utterance is segmented into units of several types.

These segments are stored together with an index of a number of acoustic and phonetic properties such as the fundamental frequency contour, duration or neighboring segments.

For synthesis, special search algorithms (weighted decision trees) are used to determine a sequence of segments, each as large as possible, that comes as close as possible to the utterance to be synthesized with regard to these properties. Since this sequence is output with little or no signal processing, the naturalness of the spoken language is retained as long as few concatenation points are required.
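The search can be sketched as follows. Note that this sketch does not use the weighted decision trees named above; it swaps in a dynamic-programming (Viterbi) search over a target cost and a join cost, which is a common way to formulate the same selection problem. The unit fields (`f0`, `utt`, `pos`) and both cost functions are hypothetical and chosen only for illustration.

```python
def select_units(targets, candidates, target_cost, join_cost):
    """Pick one candidate per target position so that the summed cost is minimal."""
    # best[i][j] = (cost of the cheapest path ending in candidates[i][j], backpointer)
    best = [[(target_cost(targets[0], c), None) for c in candidates[0]]]
    for i in range(1, len(targets)):
        row = []
        for c in candidates[i]:
            cost, back = min(
                (best[i - 1][k][0] + join_cost(p, c), k)
                for k, p in enumerate(candidates[i - 1])
            )
            row.append((cost + target_cost(targets[i], c), back))
        best.append(row)
    # Trace the cheapest path back from the last position.
    j = min(range(len(best[-1])), key=lambda k: best[-1][k][0])
    total = best[-1][j][0]
    path = []
    for i in range(len(targets) - 1, -1, -1):
        path.append(candidates[i][j])
        j = best[i][j][1]
    path.reverse()
    return path, total

def target_cost(target, unit):
    """Hypothetical cost: distance between desired and stored fundamental frequency."""
    return abs(target["f0"] - unit["f0"]) / 10.0

def join_cost(prev, unit):
    """Hypothetical cost: neighbors from the same recording join for free."""
    if prev["utt"] == unit["utt"] and prev["pos"] + 1 == unit["pos"]:
        return 0.0
    return 1.0 + abs(prev["f0"] - unit["f0"]) / 10.0

targets = [{"f0": 120}, {"f0": 120}, {"f0": 120}]
candidates = [
    [{"id": "h1", "f0": 120, "utt": 1, "pos": 0}, {"id": "h2", "f0": 110, "utt": 2, "pos": 4}],
    [{"id": "a1", "f0": 100, "utt": 3, "pos": 7}, {"id": "a2", "f0": 112, "utt": 2, "pos": 5}],
    [{"id": "l1", "f0": 118, "utt": 1, "pos": 9}, {"id": "l2", "f0": 114, "utt": 2, "pos": 6}],
]
path, total = select_units(targets, candidates, target_cost, join_cost)
chosen = [unit["id"] for unit in path]
```

In this toy inventory the search prefers the three units that were recorded consecutively in utterance 2, even though other candidates match the desired fundamental frequency more closely, because the contiguous stretch needs no concatenation points.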

Diphone synthesis

Experiments carried out at the beginning of the 21st century have shown that correct reproduction of sound transitions is essential for the intelligibility of synthesized speech. A database with around 2500 entries is used to store all sound transitions. Each entry covers the time span from the stationary part (the phoneme center) of one phoneme to the stationary part of the following phoneme. For synthesis, these entries are joined together (concatenated) accordingly.
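How a phoneme sequence maps onto such transition entries can be shown with a short sketch. The naming scheme `a-b`, the silence symbol `_` and the dictionary-based inventory are assumptions made for illustration. An inventory of roughly 50 phonemes would give 50 × 50 = 2500 possible transitions, which matches the size quoted above, although the phoneme count itself is an assumption and not stated in the text.

```python
def to_diphones(phonemes, silence="_"):
    """Turn a phoneme sequence into the names of the diphones that span it."""
    padded = [silence] + list(phonemes) + [silence]
    return [f"{a}-{b}" for a, b in zip(padded, padded[1:])]

def synthesize(phonemes, inventory):
    """Concatenate the stored diphone waveforms (lists of samples)."""
    signal = []
    for name in to_diphones(phonemes):
        signal.extend(inventory[name])  # raises KeyError if a transition is missing
    return signal

print(to_diphones(["h", "a", "l", "o"]))
# ['_-h', 'h-a', 'a-l', 'l-o', 'o-_']
```

Each diphone starts and ends in the stationary middle of a phoneme, so the joins fall where the signal changes least.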

Further coarticulation effects, which contribute a great deal to the naturalness of speech, can be taken into account through more extensive databases. One example is Hadifix, which contains half-syllables (Halbsilben), diphones (Diphone) and suffixes (Suffixe).

Signal generation

Signal generation reproduces the desired segments from the database with the specified fundamental frequency contour. This contour can be imposed in different ways, and this is where the following methods differ.

Source filter model

Synthesis methods based on source-filter separation use a signal source with a periodic waveform. Its period length is adjusted to match the fundamental frequency of the utterance to be synthesized. Depending on the phoneme type, noise is added to this excitation. The final filtering imposes the spectrum characteristic of each sound. The advantage of this class of methods is the simple control of the fundamental frequency at the source. One disadvantage arises from the filter parameters stored in the database, which are difficult to determine from speech samples. Depending on the type of filter or the underlying view of speech production, a distinction is made between the following methods:
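The source side of this model can be sketched in a few lines. The sketch assumes the simplest possible periodic waveform, a unit pulse train, and uniform white noise as the noise component; the function name, the sample rate and the noise levels are illustrative assumptions.

```python
import random

def excitation(f0_hz, duration_s, sample_rate=16000, noise_level=0.0, seed=0):
    """Pulse train with fundamental frequency f0_hz, optionally mixed with noise.

    f0_hz <= 0 produces no pulses (unvoiced sound); noise_level scales
    uniform white noise in the range [-1, 1].
    """
    n_samples = int(duration_s * sample_rate)
    signal = [0.0] * n_samples
    if f0_hz > 0:
        period = sample_rate / f0_hz  # period length in samples, may be fractional
        t = 0.0
        while int(t) < n_samples:
            signal[int(t)] = 1.0
            t += period
    if noise_level > 0.0:
        rng = random.Random(seed)
        signal = [s + noise_level * rng.uniform(-1.0, 1.0) for s in signal]
    return signal

voiced = excitation(100.0, 0.1)                        # vowel-like source
unvoiced = excitation(0.0, 0.1, noise_level=0.3)       # noise only
mixed = excitation(100.0, 0.1, noise_level=0.05)       # voiced source with added noise
```

Changing the fundamental frequency only requires a different pulse spacing, which is the simple source control mentioned above. The sound-specific spectrum is then imposed by whichever filter follows.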

Formant synthesis

Formant synthesis is based on the observation that, to distinguish the vowels, it is sufficient to reproduce the first two formants accurately. Each formant is simulated by a bandpass, a second-order pole filter (resonator) whose center frequency and quality factor can be controlled. Formant synthesis can be implemented comparatively easily using analog electronic circuits.
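A digital counterpart of such a formant filter can be sketched as a two-pole resonator. The coefficient formulas below follow the commonly used digital resonator design with unity gain at 0 Hz; the bandwidth is derived from the quality factor as center frequency divided by Q. The formant values of 700 Hz and 1200 Hz, the Q of 10 and the sample rate are illustrative assumptions, not values from the text.

```python
import math

def pulse_train(f0_hz, n_samples, sample_rate):
    """Unit pulses spaced one fundamental period apart."""
    period = int(round(sample_rate / f0_hz))
    return [1.0 if n % period == 0 else 0.0 for n in range(n_samples)]

def resonator(signal, center_hz, q, sample_rate=16000):
    """Second-order all-pole bandpass: y[n] = a*x[n] + b*y[n-1] + c*y[n-2]."""
    bandwidth = center_hz / q
    t = 1.0 / sample_rate
    c = -math.exp(-2.0 * math.pi * bandwidth * t)
    b = 2.0 * math.exp(-math.pi * bandwidth * t) * math.cos(2.0 * math.pi * center_hz * t)
    a = 1.0 - b - c  # normalizes the gain at 0 Hz to 1
    y1 = y2 = 0.0
    out = []
    for x in signal:
        y = a * x + b * y1 + c * y2
        out.append(y)
        y2, y1 = y1, y
    return out

def synthesize_vowel(formants, f0_hz=120.0, n_samples=4800, sample_rate=16000, q=10.0):
    """Filter a pulse train through one resonator per formant (cascade)."""
    signal = pulse_train(f0_hz, n_samples, sample_rate)
    for center_hz in formants:
        signal = resonator(signal, center_hz, q, sample_rate)
    return signal

vowel = synthesize_vowel([700.0, 1200.0])  # assumed formants, roughly an "a"-like vowel
```

Choosing a different pair of center frequencies changes the vowel quality, while the pulse spacing alone determines the pitch.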

Acoustic model

The acoustic model reproduces the entire resonance properties of the vocal tract using a suitable filter. For this purpose, the vocal tract is often viewed in simplified form as a tube of variable cross-section, with transverse modes being neglected because the lateral extent of the vocal tract is small. Furthermore, the changes in cross-section are approximated by equidistant steps in cross-section. A frequently chosen filter type is the lattice filter, in which there is a direct relationship between cross-section and filter coefficient.
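The direct relationship between cross-section and filter coefficient can be made concrete with a small sketch. It assumes the usual lossless-tube relation, in which the reflection coefficient at each step depends only on the two adjacent cross-sectional areas. The sign convention used here (next area minus current area) is one of two in common use, and the area values are made up for illustration.

```python
def reflection_coefficients(areas):
    """Reflection coefficient at each step between adjacent tube sections."""
    return [(a_next - a) / (a_next + a) for a, a_next in zip(areas, areas[1:])]

def areas_from_reflection(first_area, coeffs):
    """Invert the relation: rebuild the area function from the coefficients."""
    areas = [first_area]
    for k in coeffs:
        areas.append(areas[-1] * (1.0 + k) / (1.0 - k))
    return areas

tube = [1.0, 2.0, 2.0, 0.5]          # assumed cross-sectional areas in cm^2
ks = reflection_coefficients(tube)   # approximately [0.333, 0.0, -0.6]
rebuilt = areas_from_reflection(tube[0], ks)
```

A uniform tube yields coefficients of zero, and for positive areas every coefficient lies strictly between -1 and 1.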

These filters are closely related to linear predictive coding (LPC), which is also used for speech synthesis. LPC likewise takes the entire resonance properties into account, but there is no direct relationship between the filter coefficients and the cross-sectional shape of the vocal tract.

Articulatory synthesis

In contrast to the acoustic model, articulatory synthesis establishes a relationship between the position of the articulators and the resulting cross-sectional shape of the vocal tract. To simulate the resonance characteristics, solutions of the continuous-time horn equation, from which the time signal is obtained by Fourier transformation, are used in addition to discrete-time lattice filters.

Overlap Add

Pitch Synchronous Overlap Add, abbreviated to PSOLA, is a synthesis method in which the database contains recordings of the speech signal. If the signals are periodic, they are annotated with the fundamental frequency (pitch), and the beginning of each period is marked. During synthesis, these periods are cut out together with a certain amount of surrounding signal using a window function and added to the signal to be synthesized at a suitable position. Depending on whether the desired fundamental frequency is higher or lower than that of the database entry, they are placed correspondingly closer together or further apart than in the original. Periods can be omitted or output twice to adjust the duration of a sound. This method is also known as TD-PSOLA or PSOLA-TD (TM), where TD stands for Time Domain and emphasizes that the method works in the time domain.
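The procedure can be sketched in a strongly simplified form. The sketch assumes that the period marks are already known, uses a Hann window two periods long, and keeps the duration constant by reusing or skipping periods as needed. The function names and the synthetic three-harmonic test signal, which stands in for a voiced recording, are assumptions for illustration.

```python
import math

def hann(length):
    """Hann window that is zero at both ends."""
    return [0.5 - 0.5 * math.cos(2.0 * math.pi * i / (length - 1)) for i in range(length)]

def td_psola(signal, marks, pitch_factor):
    """Shift the pitch by pitch_factor (> 1 raises it) at constant duration.

    marks: sorted sample indices of the period starts (at least two).
    """
    out = [0.0] * len(signal)
    t = float(marks[0])  # position of the next output period
    while t < marks[-1]:
        # Use the recorded period closest in time; periods are thereby
        # output twice or omitted as required.
        i = min(range(len(marks)), key=lambda k: abs(marks[k] - t))
        period = marks[i + 1] - marks[i] if i + 1 < len(marks) else marks[i] - marks[i - 1]
        window = hann(2 * period + 1)
        for j, w in enumerate(window):
            src = marks[i] - period + j
            dst = int(round(t)) - period + j
            if 0 <= src < len(signal) and 0 <= dst < len(out):
                out[dst] += w * signal[src]
        t += period / pitch_factor  # denser spacing for a higher pitch
    return out

period = 80  # samples per period, i.e. 100 Hz at an assumed 8000 Hz sample rate
signal = [
    math.sin(2 * math.pi * n / period)
    + 0.5 * math.sin(4 * math.pi * n / period)
    + 0.25 * math.sin(6 * math.pi * n / period)
    for n in range(1680)
]
marks = list(range(0, 1600, period))
higher = td_psola(signal, marks, pitch_factor=2.0)     # periods now 40 samples apart
unchanged = td_psola(signal, marks, pitch_factor=1.0)  # reproduces the input away from the edges
```

With a factor of 1 the overlapping windows add up to one, so the signal passes through unchanged. With a factor of 2 the same windowed periods are placed twice as densely and the output repeats every 40 samples.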

A further development is the Multi Band Resynthesis OverLap Add method, or MBROLA for short. Here the segments in the database are preprocessed to a uniform fundamental frequency, and the phase of the harmonics is normalized. As a result, transitions from one segment to the next produce fewer perceptible artifacts, and the speech quality achieved is higher.

These synthesis methods are related to granular synthesis, which is used for sound generation and sound manipulation in electronic music production.

Parametric speech synthesis from hidden Markov models (HMM) and/or stochastic Markov graphs (SMG)

Parametric speech synthesis is a group of methods based on stochastic models. These models are either hidden Markov models (HMM), stochastic Markov graphs (SMG), or, more recently, a combination of the two. The basic principle is that the symbolic phoneme sequences obtained from text preprocessing undergo statistical modeling: they are first broken down into segments, and each segment is then assigned a specific model from an existing database. Each of these models is in turn described by a set of parameters and is finally concatenated with the other models. Processing these parameters into an artificial speech signal then completes the synthesis. When the more flexible stochastic Markov graphs are used, such a model can even be optimized by training it in advance on examples of natural speech so that it acquires a certain basic naturalness. Statistical methods of this kind originate from the opposite field, speech recognition, and are motivated by knowledge about the relationship between the probability of a certain spoken word sequence and the approximate speaking rate to be expected, or its prosody.
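The step from models to a parameter sequence can be illustrated with a heavily simplified sketch. It assumes that each phoneme model is a short chain of states and that each state stores only a mean parameter value (here a fundamental frequency in Hz) and a fixed duration in frames. Real systems store probability distributions and use more elaborate trajectory generation. The moving average is merely a stand-in for that step, and all names and numbers are hypothetical.

```python
def generate_track(phonemes, models):
    """Concatenate the per-state mean values of the models for each phoneme."""
    track = []
    for phoneme in phonemes:
        for mean_value, n_frames in models[phoneme]:
            track.extend([mean_value] * n_frames)
    return track

def smooth(track, width=3):
    """Moving average as a crude substitute for proper trajectory smoothing."""
    half = width // 2
    out = []
    for i in range(len(track)):
        window = track[max(0, i - half): i + half + 1]
        out.append(sum(window) / len(window))
    return out

# Hypothetical models: phoneme -> list of (mean F0 in Hz, duration in frames) per state
MODELS = {
    "a": [(110.0, 2), (120.0, 3), (115.0, 2)],
    "o": [(105.0, 2), (100.0, 4), (95.0, 2)],
}

f0_track = smooth(generate_track(["a", "o"], MODELS))
```

The resulting frame-by-frame parameter track would then drive a signal generator such as a source-filter synthesizer.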

Possible uses of text-to-speech software

Using speech synthesis software does not have to be an end in itself. People with visual impairments, e.g. cataracts or age-related macular degeneration, use TTS software to have texts read aloud directly on the screen. Blind people can operate a computer using screen reader software, which reads control elements and text content aloud to them. Lecturers also use speech synthesis to record lectures, and authors use TTS software to check texts they have written for errors and clarity.

Another area of application is software that allows the creation of MP3 files. This means that speech synthesis software can be used to generate simple podcasts or audio blogs. Experience has shown that producing podcasts or audio blogs can be very time-consuming.

When working with US software, it should be noted that the available voices differ in quality. English voices are of higher quality than German ones. Copying texts 1:1 into TTS software is not recommended; post-processing is necessary in any case. This involves not only replacing abbreviations: inserting punctuation marks, even grammatically incorrect ones, can also help to influence the pacing of a sentence. German "translations" containing Anglicisms generally pose an insurmountable problem for speech synthesis.
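The abbreviation part of such post-processing can be sketched as a simple text substitution. The abbreviation table and the function name are hypothetical, and a real text would need a much larger, language-specific table. Inserting extra punctuation to steer the pacing is not covered here.

```python
import re

# Hypothetical table: written abbreviation -> spoken form
ABBREVIATIONS = {
    "e.g.": "for example",
    "etc.": "et cetera",
    "Dr.": "Doctor",
}

def preprocess(text, abbreviations=ABBREVIATIONS):
    """Expand abbreviations and collapse whitespace before passing text to TTS."""
    for short, spoken in abbreviations.items():
        # Only match at the start of a word, so "etc." inside a longer token is left alone.
        text = re.sub(r"(?<!\w)" + re.escape(short), spoken, text)
    return re.sub(r"\s+", " ", text).strip()

print(preprocess("Dr. Smith uses TTS, e.g.  for podcasts etc."))
# Doctor Smith uses TTS, for example for podcasts et cetera
```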

Frequent applications are announcements in telephone and navigation systems.

Speech synthesis software

- Aristech

- Balabolka (Freeware, 26 languages, SAPI4 and SAPI5)

- BOSS, developed at the Institute for Communication Studies at the University of Bonn

- Audiodizer

- AnalogX SayIt

- Browsealoud from textHELP

- Cepstral Text-to-Speech

- CereProc

- DeskBot

- espeak (open source, many languages, SAPI5)

- Festival

- Festvox

- FreeTTS (Open Source)

- GhostReader

- Gnuspeech

- Infovox

- IVONA text-to-speech

- Linguatec Voice Reader 15

- Loquendo TTS

- Logox clip reader

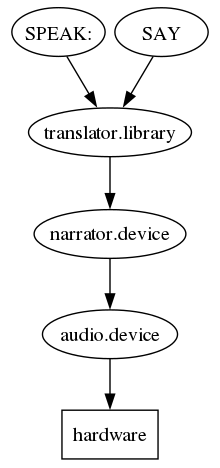

- MacinTalk and narrator.device from SoftVoice

- MARY Text-To-Speech developed by the DFKI Language Technology Lab

- MBROLA

- Modulate voice conversion software, uses Generative Adversarial Networks

- NaturalReader from NaturalSoft

- OnScreenVoices from tom weber software

- ReadSpeaker: Reading websites and podcasting

- Realspeak from Nuance (formerly ScanSoft), now Kobaspeech 3

- SAM from Don't Ask Software

- SVOX

- SpeechConcept

- Speech machine

- SYNVO

- Tacotron (Google)

- Text Aloud MP3

- Toshiba ToSpeak

- virsyn CANTOR vowel synthesis

- Virtual Voice

Speech synthesis hardware

- Votrax

- SC-01A (analog formant)

- SC-02 / SSI-263 / "Arctic 263"

- General Instrument Speech Processor

- SP0250

- SP0256-AL2 "Orator" (CTS256A-AL2)

- SP0264

- SP1000

- Mullard MEA8000

- National Semiconductor DT1050 Digitalker (Mozer)

- Silicon Systems SSI 263 (analog formant)

- Texas Instruments

- Oki Semiconductor

- MSM5205

- MSM5218RS (ADPCM)

- Toshiba T6721A C²MOS Voice Synthesizing LSI

See also

- Speech recognition

- Prosody recognition

- Voice encryption

- Speech dialogue system

- Phonetics

- Intonation (phonetics)

Literature

- Karlheinz Stöber, Bernhard Schröder, Wolfgang Hess: From text to spoken language. In: Henning Lobin, Lothar Lemnitzer (eds.): Texttechnologie. Perspectives and Applications. Stauffenburg, Tübingen 2004, ISBN 3-86057-287-3, pp. 295–325.

- Jessica Riskin: Eighteenth-Century Wetware. In: Representations. Vol. 83, No. 1, 2003, ISSN 0734-6018, pp. 97–125, doi:10.1525/rep.2003.83.1.97.

- James L. Flanagan: Speech Analysis, Synthesis and Perception (= Communication and cybernetics in individual representations. Vol. 3). 2nd edition. Springer, Berlin et al. 1972, ISBN 3-540-05561-4. 1st edition 1965, 3rd edition 2008.

Web links

History

- History of speech synthesis based on examples. Student thesis (PDF; 480 kB).

- Magic Voice Speech Module for the C64

Systems

- Product tests and detailed information on reading systems in INCOBS

- Product tests and detailed information on screen readers in INCOBS

- List of speech synthesis systems with examples

Web interfaces

- Pediaphon - voice output for German Wikipedia articles

- Online demo of the text-to-speech speech synthesis program MARY - text-to-speech output in various formats.

- Online demo "Text to Speech" via Google Chrome.
