Citation analysis

Citation analysis is a field of bibliometrics that deals with the study of citations, that is, the relations between cited and citing works, and with its application as a bibliometric research method.

Various indicators are used as bibliometric parameters, which are usually calculated with the help of statistical methods, with certain assumptions being made with regard to the citation behavior of authors:

- In general, an academic paper is considered influential if it is often cited by other authors. The citation value is used as an indicator here; it sets citation frequencies in relation to the total number of cited articles, and its development over time can also be taken into account.

- If one assumes a relationship between several works that are cited together or that cite the same work, cluster analysis can be used to form groups of authors and/or publications that may deal with the same sub-area of science.

- If the content of publications can be evaluated, correspondence analysis is a suitable tool for creating science maps.

Because the structure of scientific publications is largely standardized and contains other data such as abstracts, full text, descriptors and author addresses in addition to references, citation analysis is being used more and more frequently. For researching and analyzing citations, there are citation databases that provide the necessary information in an open format. Since the results of citation analyses often cannot be expressed in simple numbers, methods of information visualization are also used to map the complex citation relationships.

The goals of citation analysis are to identify or support:

- Subject-matter relationships between persons, institutions, publications and disciplines, and their scientific influence (impact)

- Research focus of scientific work and its development over time

- Activities and topicality of research groups within the sciences, as well as "hot" topics and discussions

- Groundbreaking publications that form the basis for further research

- Relations between research and industry (citations of scientific literature in patents)

- Use as an evaluation tool for peer reviews

- Assessment of the quality of scientific work, as one input among others, for decisions by public research institutions

History

In 1927, Paul L. K. Gross and Elsie M. Gross began to use citations as a bibliometric data source. They tallied the chemical journals named in footnotes; the more often a journal was named, the higher its relevance was rated. Gross and Gross noticed an unequal distribution: a few journals were cited far more often than the rest.
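The counting approach attributed to Gross and Gross can be illustrated with a minimal sketch. The journal names and footnote data below are invented for illustration and are not taken from the 1927 study; `rank_journals` is a hypothetical helper name.

```python
from collections import Counter

# Hypothetical sample: the journal named in each footnote (invented data).
footnote_journals = [
    "Journal A", "Journal B", "Journal A", "Journal C",
    "Journal A", "Journal B", "Journal A", "Journal D",
]


def rank_journals(cited_journals):
    """Return (journal, count) pairs, most frequently named journal first."""
    return Counter(cited_journals).most_common()


ranking = rank_journals(footnote_journals)
for journal, count in ranking:
    print(f"{journal}: {count}")
```

In this toy sample one journal receives half of all mentions, which mirrors the kind of unequal distribution described above.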

In 1955, Eugene Garfield suggested in his article "Citation indexes for science" that citations from scientific publications be recorded systematically in order to make citation contexts clear. In 1963 the first printed Science Citation Index appeared, which analyzed 562 journals from 1961 and published 2 million citations. In collaboration with Irving H. Sher, the "Journal Impact Factor" was created. Looking back over the two previous years, it determines how often the articles of a journal were cited. This number of citations is divided by the total number of articles the journal published in those two years, which gives the corresponding factor.
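As a rough sketch of the arithmetic, the two-year impact factor as conventionally defined is the number of citations received in a given year by a journal's articles from the two preceding years, divided by the number of articles it published in those two years. The function name and the figures below are hypothetical and chosen only to make the division concrete.

```python
def journal_impact_factor(citations, citable_items):
    """Two-year impact factor.

    citations:     citations received in the census year by articles
                   the journal published in the two preceding years
    citable_items: number of articles published in those two years
    """
    if citable_items <= 0:
        raise ValueError("citable_items must be positive")
    return citations / citable_items


# Hypothetical figures for an imaginary journal.
citations_in_census_year = 600    # citations to articles from the two prior years
articles_in_prior_two_years = 240

jif = journal_impact_factor(citations_in_census_year, articles_in_prior_two_years)
print(jif)  # 2.5
```

An impact factor of 2.5 would mean that, on average, each article from the two preceding years was cited 2.5 times in the census year.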

Karl Erik Rosengren developed the co-citation relationship in 1966. If an author is cited several times in the course of a debate, that author's citation count increases. If two authors are named together, the relationship between the two is strengthened. A high value indicates that both authors evidently work in a common field. This procedure can also be applied to documents and terms.

Robert K. Merton described the Matthew effect in 1968. It states that well-known authors are cited more often than unknown ones. Merton also observed that as a cited passage becomes better known, later uses no longer mention the source, or mention only the author.

Alan Pritchard coined the term bibliometrics for the quantitative measurement of scientific publications in 1969. Books, articles and journals are measured using mathematical and statistical methods. For example, bibliometrics determines how often a scientist publishes articles in journals. The methods presented so far are also used in bibliometrics. Bibliometrics is a sub-discipline of scientometrics.

The first Social Sciences Citation Index was published in 1973. This is an interdisciplinary, fee-based citation database developed by the Institute for Scientific Information, which covers more than 3,100 mostly English-language journals from more than 50 social science disciplines.

Henry Small and Irina Marshakova developed co-citation analysis in 1973.

In 1976 the Journal Citation Reports was published by the Institute for Scientific Information. With this application it is possible to search various literature and citation databases for relevant scientific literature. This service exists today under the name "Web of Science" and is operated by Clarivate Analytics as a paid website.

The term "scientometrics" comes from the book of the same name published by Vasily Nalimov in 1969. Derek de Solla Price established this approach together with Eugene Garfield in 1978. In addition to bibliometric data, other information such as the number of university graduates can also be measured. Scientometrics is regarded as part of informetrics and often also of the science of science.

In the same year the Arts and Humanities Citation Index was created, a fee-based citation database that lists more than 1,100 journals from the arts and humanities.

In 1985, Terrence A. Brooks published a study in which he presented the various ways of citing and the motivations for using citations.

In 1988, China launched the China Scientific and Technical Papers and Citations database (CSTPC), which indexes the articles published in Chinese scientific journals.

Citation graph

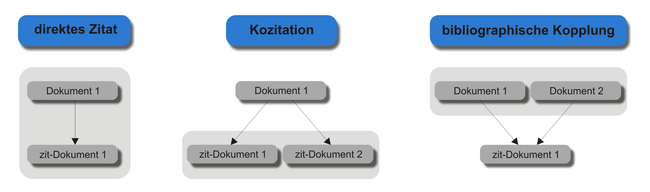

A set of citations and the publications they connect can be described mathematically as a graph, which is then called a citation graph (also citespace). Methods of network analysis are used to analyze it. In a citation graph, publications (usually scientific articles) form the nodes, and citations form the edges between them. Alternatively, all articles in a journal can be combined into one node in order to compare different journals. Graphs can also be constructed from citation data using information on co-authorship and co-citation. The main citation-based relationships between two publications A and B are:

- Citation: an article A cites an article B.

- Co-citation: two articles A and B are cited together by one article C.

- Bibliographic coupling: two articles A and B cite a common article C.
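The three relationships can be made concrete with a small sketch. The graph below is hypothetical, stored as a plain dictionary that maps each citing article to the articles it cites; the function names are illustrative and not taken from any particular library.

```python
from collections import defaultdict
from itertools import combinations

# Hypothetical citation graph: each key cites every article in its list.
cites = {
    "C": ["A", "B"],
    "D": ["A", "B", "E"],
    "A": ["E"],
    "B": ["E"],
}


def cocitation_counts(cites):
    """Count, for each pair of articles, how many articles cite both."""
    counts = defaultdict(int)
    for refs in cites.values():
        # Every unordered pair in one reference list is co-cited once.
        for pair in combinations(sorted(set(refs)), 2):
            counts[pair] += 1
    return dict(counts)


def coupling_strength(cites, a, b):
    """Bibliographic coupling: number of references shared by a and b."""
    return len(set(cites.get(a, [])) & set(cites.get(b, [])))


cocited = cocitation_counts(cites)
print(cocited[("A", "B")])                 # A and B are cited together by C and by D
print(coupling_strength(cites, "A", "B"))  # A and B both cite E
```

Co-citation is computed from the reference lists of the citing articles, while bibliographic coupling compares the reference lists of the two articles themselves. A co-citation count can therefore grow as new citing articles appear, whereas the coupling strength of two published articles stays fixed.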

Studies of citation graphs reveal various regularities and structures in the publication behavior of authors. It turns out that citation graphs form scale-free and small-world networks. Through cluster analysis and other network analysis techniques, communities of researchers who often cite each other can be found.

In webometrics, link graphs of websites and hyperlinks (sitations) are examined analogously to citation graphs.

Sources of error

The results of citation analysis can be distorted by skewed indicators such as the publication rate of authors. Authors with a large number of publications are cited more often, which is reflected in the statistics, but this does not necessarily mean that they or their contributions are more important than others. Some authors publish the same scientific result several times under different titles or in different languages.

Statistical artifacts arise from the fact that not all authors are taken into account in publications with several authors. Weighting the individual authors according to their share of the work is hardly feasible in the analysis, since author names are often listed alphabetically for technical reasons. Typing and transcription errors that occur when names are entered in systems with heterogeneous character sets also fall into this category.

Invalid comparisons arise if, for example, journal rankings are created that neglect available information such as publication cycle and publication rate. Citations of review articles, which only offer an overview, can hardly be filtered out by automated statistical procedures without an evaluation of the content.

Inadmissible use of mathematical or statistical methods whose requirements are not met, or are violated without appropriate corrections, leads to unreliable results. Examples are an insufficient number of documents, as in the case of multidimensional scaling, or the lack of a normal distribution or a violated independence assumption in regression analysis.

Citing one's own works, or citing out of courtesy, is considered dubious, but it is often difficult to determine whether the reasons are objective or rather strategic.

Differences in referencing practice between disciplines are not taken into account.

Problem

Since it is assumed that good work will be cited more often, the number of citations a scientific article receives is often used as a measure of its quality. However, this common practice is not without its problems, as the assessment of the content sometimes threatens to be neglected in favor of purely statistical criteria. The fact that a certain author is often cited can have various reasons and does not always allow a statement about the quality of the contribution. So-called citation circles (Zitierzirkel) can also form ("you cite me, I'll cite you"), which distort the result (also: citation carousel, Zitierkarussell; pejoratively: citation cartel, Zitierkartell).

Specific criticisms of citation analyses

- The impact of a work, measured by the number of citations in scientific articles, ignores the influence that a work may have in other areas (for example in the industry that does not publish its results).

- Due to the Matthew effect, frequently cited works are cited even more often without regard to their content. A study by M. V. Simkin and V. P. Roychowdhury indicates that only around a quarter of the cited works are even read by the citing authors.

- Some citations are only added to increase the impact factor of an author or a journal. Publications are also optimized to achieve a high number of citations instead of optimizing quality.

- The relative importance of journal articles, proceedings and monographs varies significantly depending on the subject. These differences are not taken into account in citation databases, so that individual subject areas are underestimated or overestimated.

- The most frequently cited papers are often exceptions that are mainly cited because it is customary to cite these papers. Other works with just as much influence, however, are no longer explicitly cited because their content has become a matter of course.

- The calculation of the impact factor is always tied to a time window, with a maximum period of 6 to 8 years (two PhD periods); the citation peak is reached on average after the first two years. However, depending on the activity of the research area, this value can vary greatly, which can lead to errors in the calculation of the impact factor and of the half-life of the citation frequency. Similar errors can arise if the publication frequency of the journals is not taken into account.

- Different citation styles mean that individual works are treated as different publications in citation databases. For example, the famous 2001 paper "Initial sequencing and analysis of the human genome" by the Human Genome Project was initially listed by ISI under different authors, so it did not show up among the most cited papers.

- Some non-English-language journals also appear in an English edition. However, this edition is often incorrectly counted as an independent journal.

- The measured impact does not take into account the purpose for which the publication is cited. For example, a citation can be used to point out scientific errors in the cited publication.

The Science Impact Index (SII) tries to come to an objective measure of the research quality of scientists by considering many of these aspects through weighting.

Literature

Monographs

- Eugene Garfield: Citation Indexing - Its Theory and Application in Science, Technology, and Humanities. Wiley, New York NY 1979, ISBN 0-471-02559-3.

- Otto Nacke (ed.): Quotation analysis and related procedures. IDIS - Institute for Documentation and Information on Social Medicine and Public Health, Bielefeld 1980, ISBN 3-88139-024-3.

- Ирина В. Маршакова: Система цитирования научной литературы как средство слежения за развитием науки. Наука, Москва 1988, ISBN 5-02-013311-6.

- Henk F. Moed: Citation Analysis in Research Evaluation (= Information Science and Knowledge Management. Vol. 9). Springer, Dordrecht et al. 2005, ISBN 1-4020-3713-9.

Individual representations

- Henry Small: Co-citation in the scientific literature: A new measure of the relationship between two documents. In: Journal of the American Society for Information Science. Vol. 24, No. 4, 1973, pp. 265-269, doi:10.1002/asi.4630240406.

- Henry Small, E. Sweeney: Clustering the Science Citation Index® using co-citations. I. A comparison of methods. In: Scientometrics. Vol. 7, No. 3-6, 1985, pp. 391-409, doi:10.1007/BF02017157.

- Henry Small, E. Sweeney, Edward Greenlee: Clustering the Science Citation Index using co-citations. II. Mapping science. In: Scientometrics. Vol. 8, No. 5-6, 1985, pp. 321-340, doi:10.1007/BF02018057.

- Philip Ball: Index aims for fair ranking of scientists. In: Nature. Vol. 436, No. 7053, 2005, p. 900, doi:10.1038/436900a.

- Jorge E. Hirsch: An index to quantify an individual's scientific research output. In: Proceedings of the National Academy of Sciences of the United States of America. Vol. 102, No. 46, 2005, pp. 16569-16572, doi:10.1073/pnas.0507655102.

- Paul L. K. Gross, Elsie M. Gross: College libraries and chemical education. In: Science. Vol. 66, No. 1713, 1927, pp. 385-389, doi:10.1126/science.66.1713.385.

- Alan Pritchard: Statistical Bibliography or Bibliometrics? In: Journal of Documentation. Vol. 25, No. 4, 1969, pp. 348-349, doi:10.1108/eb026482.

- Jürgen Rauter: Citation Analysis and Intertextuality. Intertextual citation analysis and citation-analytical intertextuality (= Poetica series of publications. Vol. 91). Kovač, Hamburg 2006, ISBN 3-8300-2383-9 (also: Düsseldorf, University, dissertation, 2005).

Web links

- CiteSpace - Tool by Chaomei Chen (English)

- Scientometrics: Citation Analysis - Lecture Notes (Memento from May 7, 2010 in the Internet Archive)

- University and State Library Tyrol: Citation analysis

- CiteSeerX Scientific Literature Digital Library and Search Engine

- What can citation comparisons ... not necessarily? Examples of the problem of citation comparisons

References

- O. Nacke: Quotation analysis and related procedures. 1980.

- Dirk Tunger: Bibliometric procedures and methods as a contribution to trend observation and recognition in the natural sciences. (PDF) Forschungszentrum Jülich, 2007, p. 33, accessed on November 10, 2018.

- Eugene Garfield: Citation indexes for science. In: Science. Vol. 122, No. 3159, 1955, pp. 108-111.

- Frank Havemann: Introduction to Bibliometrics. (PDF) Gesellschaft für Wissenschaftsforschung Berlin, 2009, p. 9, accessed on November 10, 2018.

- Frank Havemann: Introduction to Bibliometrics. Cocitation analysis. (PDF) Gesellschaft für Wissenschaftsforschung Berlin, 2009, pp. 32-33, accessed on November 10, 2018.

- Databases - Clarivate. In: Clarivate. (clarivate.com [accessed November 10, 2018]).

- Terrence A. Brooks: Private acts and public objects: An investigation of citer motivations. In: Journal of the American Society for Information Science. Vol. 36, No. 4, July 1985, ISSN 0002-8231, pp. 223-229, doi:10.1002/asi.4630360402 (wiley.com [PDF; accessed November 10, 2018]).

- Yishan Wu, Yuntao Pan, Yuhua Zhang, Zheng Ma, Jingan Pang: China Scientific and Technical Papers and Citations (CSTPC): History, impact and outlook. In: Scientometrics. Vol. 60, No. 3, 2004, ISSN 0138-9130, pp. 385-397, doi:10.1023/b:scie.0000034381.64865.2b (springer.com [accessed November 10, 2018]).

- Mikhail V. Simkin, Vwani P. Roychowdhury: Read before you cite! In: Complex Systems. Vol. 14, No. 3, 2003, ISSN 0891-2513, pp. 269-274, arXiv:cond-mat/0212043.