Audio data compression

Audio data compression (often loosely shortened to audio compression) refers either to data reduction (a “lossy” algorithm) or to data compression in the strict sense (a “lossless” algorithm).

Audio data compression covers specialized types of data compression designed to reduce the size of digital audio data effectively. As with other specialized types of data compression (especially video and image compression), specific properties of the signals in question are exploited in various ways to achieve the reduction.

This type of compression is not to be confused with dynamic range compression (also called dynamic compression), which is normally used to raise quieter passages or lower louder passages in an audio signal and does not save any data (see also Compressor).

Lossless audio data compression

Lossless audio data compression, or lossless audio compression for short, is the lossless compression of audio data: it produces packed data from which the original signal can be reconstructed bit for bit.

Lossless audio codecs differ from generic data compression methods in that they are specially adapted to the typical structure of audio data and therefore compress it better than generic methods such as the Lempel-Ziv-based algorithms Deflate/ZIP and RAR. The compression rate that can be achieved with today's methods is usually between 25 and 70 percent for content typical of audio CDs (music, 16 bit/44100 Hz).

Use

The methods are used in recording studios, on newer sound carriers such as SACD and DVD-Audio, and increasingly in private music archives by quality-conscious listeners who want to avoid generation loss, for example. In addition, many data compression methods from the audio field are also of interest for other signals such as biological data, medical curves or seismic data.

Problem

The majority of sound recordings are natural sounds recorded from the real world, and such data is difficult to compress, much as photos cannot be compressed as well as computer-generated images. Computer-generated sound sequences, however, can also contain very complicated waveforms that are difficult to reduce with many compression algorithms.

In addition, the values of audio samples change very quickly and sequences of identical bytes are rare, which is why general data compression algorithms do not work well.
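The effect can be tried out with a small experiment. The sketch below is only an illustration under stated assumptions: the test signal is synthetic (a 440 Hz sine with a little seeded noise, not real music), the helper names `synthetic_pcm`, `second_difference` and `deflate_size` are made up for this example, and the differencing step is a crude stand-in for the decorrelation described later in this article.

```python
import math
import random
import struct
import zlib

def synthetic_pcm(num_samples=44_100, seed=0):
    """One second of a hypothetical 16-bit test signal: 440 Hz sine plus slight noise."""
    rng = random.Random(seed)
    return [round(20_000 * math.sin(2 * math.pi * 440 * n / 44_100)) + rng.randint(-2, 2)
            for n in range(num_samples)]

def second_difference(samples):
    """Keep the first two samples, then store x[n] - 2*x[n-1] + x[n-2]."""
    out = list(samples[:2])
    out += [samples[n] - 2 * samples[n - 1] + samples[n - 2]
            for n in range(2, len(samples))]
    return out

def deflate_size(values):
    """Size in bytes after packing as little-endian 16-bit integers and applying Deflate."""
    raw = struct.pack(f"<{len(values)}h", *values)
    return len(zlib.compress(raw, 9))

pcm = synthetic_pcm()
raw_size = deflate_size(pcm)
residual_size = deflate_size(second_difference(pcm))
print(f"uncompressed: {2 * len(pcm)} bytes")
print(f"Deflate on raw PCM: {raw_size} bytes")
print(f"Deflate on differenced PCM: {residual_size} bytes")
```

On this signal the generic compressor has little to work with in the raw samples, while the differenced samples are small numbers whose high bytes repeat, which suits a byte-oriented method better.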

Finding more economical representations

The PCM representation of sound waves is generally difficult to simplify without an inevitably lossy conversion into frequency components, similar to the analysis that takes place in the human ear.

In the case of audio data, the following can be exploited:

- similarities between the (stereo) channels,

- dependencies between successive samples (through decorrelation), and afterwards

- the entropy of the samples of the residual signal.

Technology

Channel coupling

By coupling channels, dependencies between channels can be exploited. If a channel is described by its difference from an existing channel or from a newly formed center channel, the shared content does not have to be described repeatedly.

The difference signals can be stored without loss, quantized and coded in a lossy manner, or, for example, abstracted into a parametric description.
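For the lossless case, the idea can be sketched with a mid/side transform on integer samples. This is a minimal illustration, not the scheme of any particular codec; the function names `to_mid_side` and `from_mid_side` are chosen for this example. The mid channel is the floor of the average, and the bit lost by that rounding is recovered from the parity of the side channel.

```python
def to_mid_side(left, right):
    """Convert integer left/right samples into a mid (average) and side (difference) channel."""
    mid = [(l + r) >> 1 for l, r in zip(left, right)]   # floor of the average
    side = [l - r for l, r in zip(left, right)]
    return mid, side

def from_mid_side(mid, side):
    """Invert to_mid_side exactly."""
    left, right = [], []
    for m, s in zip(mid, side):
        # l + r and l - r have the same parity, so the bit dropped by the
        # floor average is the lowest bit of the side value.
        total = 2 * m + (s & 1)
        left.append((total + s) >> 1)
        right.append((total - s) >> 1)
    return left, right

left = [100, -3, 7, 0, 32767]
right = [98, -4, -7, 1, -32768]
mid, side = to_mid_side(left, right)
assert from_mid_side(mid, side) == (left, right)
```

When both channels carry similar content, the side values stay close to zero and need fewer significant bits than either original channel.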

Prediction

To exploit dependencies between successive sample values, the signal is decorrelated by attempting to predict the course of the waveform. From the prediction, a residual (difference) signal is calculated which, if the prediction is good, is correspondingly weak (that is, it has few significant digits) and can then be compressed using an entropy coding method. In most cases, samples are extrapolated from other samples using sophisticated, adaptive prediction methods.
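The principle can be shown with a deliberately simple predictor. The sketch below does not use an adaptive method as described above; it substitutes a fixed second-order predictor that extrapolates a straight line through the two previous samples. The function names are made up for this example.

```python
def encode_residual(samples):
    """Replace each sample by its deviation from a straight-line extrapolation."""
    residual = []
    for n, x in enumerate(samples):
        if n < 2:
            residual.append(x)  # warm-up samples are stored verbatim
        else:
            prediction = 2 * samples[n - 1] - samples[n - 2]
            residual.append(x - prediction)
    return residual

def decode_residual(residual):
    """Rebuild the samples by repeating the same prediction and adding the residual."""
    samples = []
    for n, e in enumerate(residual):
        if n < 2:
            samples.append(e)
        else:
            samples.append(e + 2 * samples[n - 1] - samples[n - 2])
    return samples

samples = [0, 10, 19, 27, 34, 40, 45]
residual = encode_residual(samples)
print(residual)  # [0, 10, -1, -1, -1, -1, -1]
assert decode_residual(residual) == samples
```

Because the decoder forms exactly the same prediction from already reconstructed samples, the step is lossless; only the small residual values need to be passed on to the entropy coder.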

Entropy coding

The entropy coding of the decorrelated residual signal exploits the differing probabilities of occurrence of its sample values and the similarities between them. Rice codes, for example, are often used for this.
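A Rice code with parameter k writes the upper part of a value in unary and the lowest k bits in binary, so small values get short code words. The following is a minimal sketch that represents the bitstream as a string of "0" and "1" characters for readability; the signed-to-unsigned mapping (zigzag) and all function names are assumptions of this example, and real codecs differ in such details.

```python
def zigzag(v):
    """Map signed to unsigned: 0, -1, 1, -2, 2, ... -> 0, 1, 2, 3, 4, ..."""
    return 2 * v if v >= 0 else -2 * v - 1

def unzigzag(u):
    return u >> 1 if u % 2 == 0 else -((u + 1) >> 1)

def rice_encode(values, k):
    """Encode signed integers as a string of bits using Rice parameter k."""
    bits = []
    for v in values:
        u = zigzag(v)
        quotient, remainder = u >> k, u & ((1 << k) - 1)
        bits.append("1" * quotient + "0")        # unary part, terminated by 0
        if k:
            bits.append(format(remainder, f"0{k}b"))  # k low-order bits
    return "".join(bits)

def rice_decode(bits, k, count):
    """Decode `count` signed integers from a bit string produced by rice_encode."""
    values, pos = [], 0
    for _ in range(count):
        quotient = 0
        while bits[pos] == "1":
            quotient += 1
            pos += 1
        pos += 1  # skip the terminating 0
        remainder = int(bits[pos:pos + k], 2) if k else 0
        pos += k
        values.append(unzigzag((quotient << k) | remainder))
    return values

residual = [0, -1, 1, -2, 5, -7, 3]
encoded = rice_encode(residual, k=2)
assert rice_decode(encoded, 2, len(residual)) == residual
print(len(encoded), "bits instead of", 16 * len(residual))
```

The parameter k would normally be chosen per block to match the typical magnitude of the residual.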

A method is symmetrical if, for decoding, the signal passes through the same steps as for encoding in reverse order, so that the computational effort for encoding is tied to the computational effort for decoding.

Procedural features

In the case of lossless codecs, differences in the quality of the audio signal are ruled out by definition; the methods differ in the following features:

- Compression ratio

- Direct playback of the compressed data

- Jumping to any position in an audio stream

- Resource requirements for compression and decompression

- Software and hardware support

- Flexibility in dealing with metadata

- Type of license

- Cross-platform availability

- Support for multi-channel signals

- Support for different resolutions, both in time (sampling frequency) and in amplitude (bit depth)

- Possibly additional lossy or even hybrid modes (lossy + correction file)

- Streaming support

- Fault tolerance / correction mechanisms

- Embedded checksums to quickly check a file for completeness

- Symmetrical and asymmetrical coding options (independence / dependency of the decoding speed on the coding speed)

- Support for creating self-extracting files

- Compatibility with the ReplayGain standard

- Support for embedded cue sheets

- Possible storage of the header data of the original format

Lossless audio formats

Lossless audio formats are:

- Adaptive Transform Acoustic Coding Advanced Lossless (ATRAC Advanced Lossless, AAL)

- Apple Lossless, also Apple Lossless Encoding or Apple Lossless Audio Codec (ALAC)

- Free Lossless Audio Codec (FLAC)

- Lossless Audio (LA)

- Meridian Lossless Packing (MLP)

- Monkey's Audio (APE)

- MPEG-4 Audio Lossless Coding (ALS)

- mp3HD (lossless extension of MPEG-1 Audio Layer 3)

- OptimFROG

- Shorten

- TAK (Tom's lossless Audio Kompressor)

- The True Audio (TTA)

- WavPack (WV / WVC)

- Windows Media Audio Lossless (WMA Lossless)

- Emagic ZAP

Lossy audio data compression

Lossy audio data compression, or lossy audio compression for short, refers to methods that perform data reduction: signal components considered less relevant are deliberately stored with reduced precision or discarded irretrievably.

Simple methods such as μ-law and A-law merely quantize the individual samples of the PCM data stream using a logarithmic characteristic curve that depends on the level. Methods such as ADPCM additionally exploit the correlation between successive samples. Modern methods are mostly based on frequency transforms combined with psychoacoustic models that simulate the properties of the human (inner) ear and reduce the precision with which masked signal components are represented, in line with the ear's limitations. Specialized methods also use models that simulate the sound source and thus allow the sound to be synthesized in the receiver or decoder, so that a large part of the signal can be described by parameters that control the synthesizer.
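The logarithmic characteristic can be illustrated with the continuous μ-law formula, F(x) = sgn(x) · ln(1 + μ|x|) / ln(1 + μ) with μ = 255. The sketch below is an assumption-laden illustration: it quantizes the companded value uniformly to 8 bits and does not reproduce the segmented bit layout used by actual telephony codecs. The function names are invented for this example.

```python
import math

MU = 255.0

def mu_law_compress(x):
    """Map a sample in [-1, 1] onto the logarithmic mu-law curve."""
    return math.copysign(math.log1p(MU * abs(x)) / math.log1p(MU), x)

def mu_law_expand(y):
    """Inverse of mu_law_compress."""
    return math.copysign(math.expm1(abs(y) * math.log1p(MU)) / MU, y)

def encode_8bit(x):
    """Compand, then quantize uniformly to one of 256 codes (0..255)."""
    return round((mu_law_compress(x) + 1.0) / 2.0 * 255)

def decode_8bit(code):
    """Map a code back to an approximate sample value."""
    return mu_law_expand(code / 255 * 2.0 - 1.0)

for x in (0.01, 0.1, 0.9):
    approx = decode_8bit(encode_8bit(x))
    print(f"{x:5.2f} -> code {encode_8bit(x):3d} -> {approx:.5f}")
```

Quiet samples are reproduced with a smaller absolute error than loud ones, which is the point of the level-dependent curve.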

Psychoacoustics

Most modern methods do not try to minimize the mathematical error but to optimize the result as subjectively perceived by human listeners. Since the human ear cannot analyze all the information in an incoming sound, a sound file can be changed considerably without impairing the listener's subjective perception. For example, a codec can store sound components in the very high and very low frequency ranges at the edge of the audible range with less precision or, in exceptional cases, discard them completely. Quiet sounds can also be reproduced with less accuracy when they are covered (“masked”) by loud sounds at neighboring frequencies. A further kind of masking is that a soft sound cannot be perceived if it comes immediately before or after a loud sound (temporal masking). A model of the ear-brain system that accounts for these effects is often called a psychoacoustic model (also “psycho-model” or “psy-model”). It draws on properties of the human ear such as the formation of critical bands, the limits of the audible range, masking effects, and the signal processing of the inner ear.

Most lossy compression algorithms that work according to a psychoacoustic model are based on simple transforms, such as the modified discrete cosine transform (MDCT), which convert the recorded waveform into its frequency components and thus yield approximate representations of the source material that can be quantized efficiently, since this representation is closer to human perception. Some modern algorithms use wavelets, but it is not yet certain whether such algorithms work better than those based on the MDCT.
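The MDCT step itself can be sketched in a few lines. The following is a direct, unoptimized implementation in pure Python with a sine window and 50 percent overlap; the function names and the block size are assumptions of this example, and it contains neither quantization nor a psychoacoustic model. A lossy codec would quantize the coefficients between analysis and synthesis.

```python
import math

def sine_window(n_half):
    """Window of length 2N with w[n]^2 + w[n+N]^2 = 1 (Princen-Bradley condition)."""
    return [math.sin(math.pi * (n + 0.5) / (2 * n_half)) for n in range(2 * n_half)]

def mdct(frame):
    """2N windowed samples -> N coefficients."""
    n_half = len(frame) // 2
    return [sum(x * math.cos(math.pi / n_half * (n + 0.5 + n_half / 2) * (k + 0.5))
                for n, x in enumerate(frame))
            for k in range(n_half)]

def imdct(coeffs):
    """N coefficients -> 2N time-aliased samples (scaled for the windowed case)."""
    n_half = len(coeffs)
    return [2.0 / n_half * sum(c * math.cos(math.pi / n_half * (n + 0.5 + n_half / 2) * (k + 0.5))
                               for k, c in enumerate(coeffs))
            for n in range(2 * n_half)]

def analyze(signal, n_half):
    """Split into overlapping windowed frames; len(signal) must be a multiple of n_half."""
    window = sine_window(n_half)
    padded = [0.0] * n_half + list(signal) + [0.0] * n_half
    return [mdct([w * x for w, x in zip(window, padded[start:start + 2 * n_half])])
            for start in range(0, len(padded) - n_half, n_half)]

def synthesize(frames, n_half):
    """Window the inverse transforms again and overlap-add; the aliasing cancels."""
    window = sine_window(n_half)
    out = [0.0] * (n_half * (len(frames) + 1))
    for i, coeffs in enumerate(frames):
        for n, (w, y) in enumerate(zip(window, imdct(coeffs))):
            out[i * n_half + n] += w * y
    return out[n_half:-n_half]  # drop the padding

signal = [math.sin(0.3 * n) + 0.5 * math.cos(1.1 * n) for n in range(32)]
restored = synthesize(analyze(signal, 8), 8)
assert all(abs(a - b) < 1e-9 for a, b in zip(signal, restored))
```

Each frame of 2N samples yields only N coefficients, so the overlap does not increase the amount of data, and without quantization the overlap-add restores the input up to rounding error.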

Quality

By their very principle, lossy compression methods only allow the reconstruction of an approximately similar signal. Many methods can achieve transparency, that is, a degree of similarity at which human hearing can no longer perceive any difference from the original. Transparency marks the upper end of the quality scale and can be determined in blind listening tests; below the transparency threshold, the compression artifacts introduced into the signal become audible.

Usually an approximate bit rate is given as the threshold above which transparency becomes possible, with a greater or lesser risk of exceptional passages that cannot (yet) be encoded transparently. This risk usually decreases as the bit rate increases further and depends, among other things, on the architecture of the method in question; more modern methods often have better mechanisms for handling problem cases. Below the transparency threshold of a compression method, its artifacts may still be masked to some extent by the disturbances that inferior playback devices introduce.

Once compression artifacts are perceptible, an objective comparison of different methods is much more difficult, as the result often depends largely on the subjective preferences of the listener. One possible criterion is the naturalness of the sound, for example whether the artifacts resemble naturally occurring disturbances such as noise. At the lower end of the quality scale, speech codecs usually also take into account the intelligibility threshold, below which speech content can no longer be reproduced intelligibly.

Compression artifacts

In compression methods based on frequency transforms, typical artifacts include a noticeably thinned-out, impoverished spectrum, which leads, for example, to chirping artifacts (“birdie artifacts”) or to a characteristically dull, bubbling or gurgling sound, as well as pre-echoes (“pre-echo artifacts”) ahead of sharp, high-energy sound events (transients).

Generation loss

Since the lossy stages of a compression process usually introduce further loss on every pass, so-called generation loss occurs when, for example during transcoding, a file is compressed, decompressed, and then compressed again. In practice, this mainly happens when an audio CD is burned from lossy audio files (audio CDs are uncompressed) and the material is later ripped and compressed again. This makes lossy files unsuitable for professional sound processing (“data reduction is audio destruction”). Such files are nevertheless very popular with end users, since one megabyte, depending on the complexity of the material, is enough for about one minute of music of acceptable quality, which corresponds to a compression ratio of about 11:1.
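The ratio can be checked with a short calculation. The sketch assumes CD audio with 44100 Hz, 16 bit and two channels, and takes one megabyte as 10^6 bytes; with 2^20 bytes the result would be slightly lower.

```python
# Assumed CD audio parameters: 44100 Hz, 16 bit, stereo.
SAMPLE_RATE_HZ = 44_100
BITS_PER_SAMPLE = 16
CHANNELS = 2

def cd_bit_rate():
    """Bit rate of uncompressed CD audio in bit/s."""
    return SAMPLE_RATE_HZ * BITS_PER_SAMPLE * CHANNELS

def bit_rate_from_size(bytes_per_minute):
    """Average bit rate in bit/s of a file that needs this many bytes per minute."""
    return bytes_per_minute * 8 / 60

def compression_ratio(compressed_bit_rate):
    """How many times smaller the compressed stream is than CD audio."""
    return cd_bit_rate() / compressed_bit_rate

lossy_rate = bit_rate_from_size(1_000_000)  # one megabyte (10**6 bytes) per minute
print(f"CD audio:        {cd_bit_rate() / 1000:.1f} kbit/s")   # 1411.2 kbit/s
print(f"1 MB per minute: {lossy_rate / 1000:.1f} kbit/s")      # 133.3 kbit/s
print(f"ratio:           {compression_ratio(lossy_rate):.1f}:1")  # 10.6:1
```

One megabyte per minute therefore corresponds to roughly 133 kbit/s, and the resulting ratio of about 10.6:1 matches the rounded figure given above.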

Exceptions include lossy pre-filters intended for combination with lossless methods, such as lossyWAV, which process the PCM data so that a (particular) lossless compression method subsequently achieves greater compression. The data produced by the pre-filter can then be compressed and decompressed with the lossless method as often as desired without suffering further losses, as long as it is not modified in between.

Quality assessment

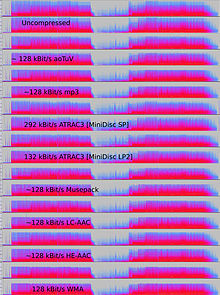

The following assessments are based on various listening tests from hydrogenaudio.org. This forum is a platform frequented by interested and experienced users as well as by developers of various audio compression methods such as MP3 (LAME encoder), Vorbis or Nero AAC. The large number of participating test subjects yields statistically sound statements about quality.

From the development of MP3 (around 1987) through the initial use of the codec (around 1997-2000) to the world's most widely used audio format (since around 2003), the output quality has been steadily improved. Other formats such as Vorbis, WMA or AAC were also developed to represent an alternative to MP3 or to replace it in the long term. These formats have also been continuously developed.

An MP3 file with a bit rate of about 128 kbit/s sounded rather poor in 1997; the promised CD-like quality had not yet been achieved. In 2005, according to listening tests, the LAME encoder for the same format at about 128 kbit/s already offered transparent quality for the clear majority of listeners, that is, quality that cannot be distinguished from the original recording.

According to a listening test from August 2007, comparable quality can be achieved with the AAC format at 96 kbit/s.

The latest listening tests at bit rates of 48 and 64 kbit/s show that even at these low bit rates a quality can be achieved that is suitable for portable devices or web radio.

As of August 2007, it can be said that with a good encoder and the right format, a quality can be achieved at 96 to 128 kbit/s that the clear majority of users cannot distinguish from the CD.

Lossy audio formats

Where known, the examples also give the bit rate at which most people can no longer distinguish a compressed file from the original, that is, at which it sounds transparent, assuming careful listening with good equipment and a mature encoder for the respective format; the figure also depends on the type of music. It must be noted, however, that not everyone perceives transparency at the same bit rate. The quality of the D/A converters, amplifiers and loudspeakers plays an important role here. While lossy compression is usually clearly audible on studio equipment, even to laypeople, it cannot be distinguished from the original on inferior playback devices, even by professionals. The figures are therefore a reference value for the average listener with average equipment. The bit rate of CDs is 1411.2 kbit/s (kilobits per second).

For comparisons of various audio codecs, see Web links.

- AC-3, also called Dolby Digital or similar

- AAC (MPEG-2, MPEG-4): 96–320 kbit/s

- ATRAC (MiniDisc): 292 kbit/s

- ATRAC3 (MiniDisc in MDLP mode): 66–132 kbit/s

- ATRAC3plus (for Hi-MD and other portable audio devices from Sony): 48–352 kbit/s

- DTS

- MP2: MPEG-1 Layer 2 Audio Codec (MPEG-1, MPEG-2): 280–400 kbit/s

- MP3: MPEG-1 Layer 3 Audio Codec (MPEG-1, MPEG-2, LAME): 180–250 kbit/s

- mp3PRO

- Musepack: 160–200 kbit/s (open source)

- Ogg Vorbis: 160–220 kbit/s (open source)

- Opus

- WMA

- LPEC

- TwinVQ

See also

- Gapless playback

- Mean Opinion Score (assessment of the sound quality of compression methods)

- Spectral band replication (SBR)

- Subband coding

Literature

- Roland Enders: The home recording manual. 3rd edition. Carstensen, Munich 2003, ISBN 3-910098-25-8.

- Thomas Görne: Sound engineering. 1st edition. Carl Hanser, Leipzig 2006, ISBN 3-446-40198-9.

- R. Beckmann: Manual of PA technology, basic component practice. 2nd edition. Elektor, Aachen 1990, ISBN 3-921608-66-X.

- A. Lerch: Bit rate reduction. In: Stefan Weinzierl (Ed.): Manual of audio technology. 1st edition. Springer, Berlin 2008, ISBN 978-3-540-34300-4, pp. 849–884.

Web links

- Compare lossless audio codecs on Speek (English)

- Comparison of lossless audio codecs by Josef Pohm (English)

References

- http://wiki.hydrogenaudio.org/?title=lossyWAV

- Results of Public, Multiformat Listening Test @ 128 kbps (December 2005) (Memento from June 5, 2008 in the Internet Archive)

- Results of Public, Multiformat Listening Test @ 48 kbps (November 2006) (Memento from June 5, 2008 in the Internet Archive), on www.listening-tests.info, November 2006 (English).

- Results of Public, Multiformat Listening Test @ 64 kbps (July 2007) (Memento from June 5, 2008 in the Internet Archive)