Convolutional Neural Network

A convolutional neural network (CNN or ConvNet) is an artificial neural network. It is a concept in the field of machine learning that is inspired by biological processes. Convolutional neural networks are used in numerous artificial intelligence technologies, primarily in the machine processing of image or audio data.

Yann LeCun is considered the founder of CNNs.

Structure

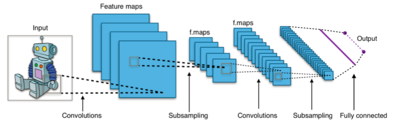

The structure of a classic convolutional neural network consists of one or more convolutional layers followed by a pooling layer. In principle, this unit can be repeated any number of times; with enough repetitions, the network is called a deep convolutional neural network, which falls into the field of deep learning. Architecturally, three essential differences from the multi-layer perceptron can be noted (for details, see Convolutional Layer):

- 2D or 3D arrangement of neurons

- Shared weights

- Local connectivity

Convolutional Layer

As a rule, the input is available as a two- or three-dimensional matrix (e.g. the pixels of a grayscale or color image). The neurons are arranged accordingly in the convolutional layer.

The activity of each neuron is calculated using a discrete convolution (hence the name convolutional). A comparatively small convolution matrix (filter kernel) is moved step by step over the input. The input of a neuron in the convolutional layer is calculated as the inner product of the filter kernel with the image section currently underneath it. Correspondingly, neighboring neurons in the convolutional layer react to overlapping areas (similar frequencies in audio signals or local surroundings in images).
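The sliding inner product described above can be sketched in a few lines of plain Python. This is only an illustration: the function name `conv2d_valid`, the 4 × 4 input and the 2 × 2 kernel are assumptions made for the example, no padding or stride is used, and the kernel is not flipped (which is how most deep learning libraries implement "convolution").

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (both lists of rows) without padding."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Inner product of the kernel with the image patch under it.
            total = 0.0
            for di in range(kh):
                for dj in range(kw):
                    total += kernel[di][dj] * image[i + di][j + dj]
            row.append(total)
        output.append(row)
    return output


# Hypothetical grayscale input with a vertical edge between columns 1 and 2.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hypothetical kernel that responds to dark-to-bright transitions.
kernel = [
    [-1, 1],
    [-1, 1],
]
feature_map = conv2d_valid(image, kernel)
# Each row of feature_map is [0.0, 2.0, 0.0]: only the patch on the edge responds.
```

Neighboring output values are computed from overlapping patches, which is the overlap of receptive areas mentioned in the text.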

It should be emphasized that a neuron in this layer only reacts to stimuli in a local neighborhood of the previous layer. This follows the biological model of the receptive field. In addition, the weights of all neurons of a convolutional layer are identical (shared weights). As a result, for example, each neuron in the first convolutional layer encodes the intensity with which an edge is present in a specific local area of the input. Edge recognition as the first step in image recognition has a high level of biological plausibility. It follows directly from the shared weights that translational invariance is an inherent property of CNNs (strictly speaking, the convolution itself is translation-equivariant: a shifted input produces a correspondingly shifted output).
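The effect of shared weights on shifted inputs can be illustrated with a minimal one-dimensional sketch. The helper `correlate1d` and the example signals are assumptions chosen for illustration. Because every position uses the same kernel, moving the pattern in the input moves the response by the same amount, while the strongest response stays the same.

```python
def correlate1d(signal, kernel):
    """Apply the same kernel at every position of a 1D signal (no padding)."""
    k = len(kernel)
    return [
        sum(kernel[d] * signal[i + d] for d in range(k))
        for i in range(len(signal) - k + 1)
    ]


kernel = [-1, 1]                       # responds to rising edges
signal = [0, 0, 1, 1, 0, 0, 0]
shifted = [0, 0, 0, 0, 1, 1, 0]        # same pattern, moved two steps right

response = correlate1d(signal, kernel)
response_shifted = correlate1d(shifted, kernel)
# The response pattern is shifted by two steps as well;
# its maximum (the detected edge strength) is unchanged.
```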

The input of each neuron, determined by means of discrete convolution, is then converted by an activation function, usually the Rectified Linear Unit, ReLU for short ($f(x) = \max(0, x)$), into the output, which is supposed to model the relative firing rate of a real neuron. Since backpropagation requires the calculation of gradients, a differentiable approximation of ReLU is used in practice:

$$f(x) = \ln(1 + e^{x})$$
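As a minimal sketch, ReLU can be compared with softplus, $\ln(1 + e^{x})$, which is one common differentiable approximation of it. The function names are illustrative, and the numerically stable formulations are a choice made for this example.

```python
import math


def relu(x):
    """Rectified Linear Unit: negative inputs are mapped to zero."""
    return max(0.0, x)


def softplus(x):
    """Smooth approximation of ReLU, ln(1 + e^x), written to avoid overflow."""
    return max(0.0, x) + math.log1p(math.exp(-abs(x)))


def softplus_derivative(x):
    """Derivative of softplus: the logistic sigmoid, defined for every x."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


for x in (-5.0, -1.0, 0.0, 1.0, 5.0):
    print(x, relu(x), round(softplus(x), 4), round(softplus_derivative(x), 4))
```

For large positive or negative inputs the two functions nearly coincide; they differ mainly around zero, where ReLU has a kink and softplus is smooth.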

Analogous to the visual cortex, both the size of the receptive fields (see section Pooling Layer) and the complexity of the recognized features (e.g. parts of a face) increase in deeper convolutional layers.

Pooling layer

In the next step, pooling, superfluous information is discarded. For object recognition in images, for example, the exact position of an edge is of negligible interest; the approximate localization of a feature is sufficient. There are different types of pooling. By far the most widespread is max pooling, in which only the activity of the most active (hence "max") neuron of each 2 × 2 square of neurons in the convolutional layer is retained for the further calculation steps; the activity of the other neurons is discarded (see picture). Despite the data reduction (75% in the example), network performance is usually not reduced by pooling. On the contrary, it offers some significant advantages:

- Reduced space requirements and increased calculation speed

- The resulting possibility of creating deeper networks that can solve more complex tasks

- Automatic growth of the size of the receptive fields in deeper convolutional layers (without the need to explicitly increase the size of the convolution matrices)

- Preventive measure against overfitting

Alternatives such as mean pooling have proven to be less efficient in practice.
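A minimal sketch of 2 × 2 pooling with non-overlapping blocks follows. The helper names and the 4 × 4 feature map are assumptions chosen for illustration; the same routine yields max pooling or mean pooling depending on the reduction function passed in.

```python
def pool2x2(feature_map, reduce):
    """Apply `reduce` to every non-overlapping 2x2 block of the feature map."""
    pooled = []
    for i in range(0, len(feature_map) - 1, 2):
        row = []
        for j in range(0, len(feature_map[0]) - 1, 2):
            block = [
                feature_map[i][j], feature_map[i][j + 1],
                feature_map[i + 1][j], feature_map[i + 1][j + 1],
            ]
            row.append(reduce(block))
        pooled.append(row)
    return pooled


def mean(values):
    return sum(values) / len(values)


feature_map = [
    [1, 3, 2, 0],
    [4, 2, 1, 1],
    [0, 0, 5, 6],
    [1, 2, 7, 8],
]
max_pooled = pool2x2(feature_map, max)    # [[4, 2], [2, 8]]
mean_pooled = pool2x2(feature_map, mean)  # [[2.5, 1.0], [0.75, 6.5]]
```

The 16 input activities are reduced to 4, which is the 75% data reduction mentioned above.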

The biological counterpart to pooling is lateral inhibition in the visual cortex.

Fully-connected layer

After a few repeated units consisting of convolutional and pooling layers, the network can end with one (or more) fully-connected layers, following the architecture of the multi-layer perceptron. This is mainly used in classification. The number of neurons in the last layer then usually corresponds to the number of (object) classes that the network is supposed to distinguish. This very redundant representation, known as one-hot encoding, has the advantage that no implicit assumptions are made about similarities between classes.

The output of the last layer of the CNN is usually converted into a probability distribution by a softmax function, a normalization over all neurons in the last layer that is translation-invariant but not scale-invariant.
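The following sketch illustrates softmax and one-hot encoding and checks the invariance properties numerically. The function names and the example activations are assumptions made for the illustration: adding a constant to all inputs leaves the softmax output unchanged, whereas multiplying them by a constant does not.

```python
import math


def softmax(logits):
    """Normalize raw layer outputs into a probability distribution."""
    shift = max(logits)  # subtracting a constant does not change the result
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def one_hot(index, num_classes):
    """Target vector with a single 1 at the position of the correct class."""
    return [1.0 if k == index else 0.0 for k in range(num_classes)]


logits = [2.0, 1.0, 0.1]                            # hypothetical last-layer activities
probs = softmax(logits)
translated = softmax([z + 5.0 for z in logits])     # same distribution as probs
scaled = softmax([z * 2.0 for z in logits])         # a different, sharper distribution
target = one_hot(0, 3)                              # [1.0, 0.0, 0.0]
```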

Training

CNNs are usually trained in a supervised manner. During training, the appropriate one-hot vector is provided for each input shown. The gradient of each neuron is calculated via backpropagation, and the weights are adjusted in the direction of steepest descent on the error surface.
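A single gradient-descent step can be sketched for the simplest possible case: one fully-connected softmax layer trained with cross-entropy against a one-hot target. This is not a complete CNN; in a real network, backpropagation applies the chain rule through all convolutional layers as well. The input, the learning rate and the function names are assumptions made for the example.

```python
import math


def softmax(logits):
    shift = max(logits)
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def forward(weights, x):
    """One fully-connected layer followed by softmax (biases omitted)."""
    return softmax([sum(w * xi for w, xi in zip(row, x)) for row in weights])


def cross_entropy(probs, target):
    return -sum(t * math.log(p) for p, t in zip(probs, target) if t > 0)


def sgd_step(weights, x, target, learning_rate):
    """Move each weight against its gradient, (p_k - t_k) * x_i."""
    probs = forward(weights, x)
    return [
        [w - learning_rate * (p - t) * xi for w, xi in zip(row, x)]
        for row, p, t in zip(weights, probs, target)
    ]


x = [1.0, 2.0]                       # hypothetical input features
target = [0.0, 1.0, 0.0]             # one-hot vector for class 1
weights = [[0.0, 0.0] for _ in target]

loss_before = cross_entropy(forward(weights, x), target)
weights = sgd_step(weights, x, target, learning_rate=0.1)
loss_after = cross_entropy(forward(weights, x), target)
# loss_after is smaller than loss_before: the step went downhill on the error surface.
```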

Interestingly, three simplifications that considerably reduce the computational effort of the network, and thus allow deeper networks, have contributed significantly to the success of CNNs:

- Pooling - Here, most of the activity in a layer is simply discarded.

- ReLU - The common activation function that projects any negative input to zero.

- Dropout - A regularization method in training that prevents overfitting . For each training step, randomly selected neurons are removed from the network.
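Of the three simplifications listed above, dropout can be sketched as follows. The sketch uses the "inverted dropout" variant, in which surviving activities are rescaled so that their expected value is unchanged; this rescaling, the function name and the example values are assumptions that go beyond the description in the text.

```python
import random


def dropout(activations, drop_probability, rng):
    """Zero out randomly selected activities for one training step."""
    keep = 1.0 - drop_probability
    # Inverted dropout (an assumption of this sketch): survivors are divided
    # by the keep probability so the expected activity stays the same.
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


rng = random.Random(0)               # fixed seed only to make the sketch repeatable
activations = [0.5, 1.0, 1.5, 2.0, 2.5, 3.0]
dropped = dropout(activations, drop_probability=0.5, rng=rng)
```

At inference time no neurons are removed; with this variant the activations are then used unchanged.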

Expressiveness and necessity

Since CNNs are a special form of multilayer perceptron, the two are in principle identical in terms of their expressiveness.

The success of CNNs can be explained by their compact representation of the weights to be learned ("shared weights"). The underlying assumption is that a potentially interesting feature (e.g. edges in object recognition) is interesting at every point of the input signal (the image). While a classic two-layer perceptron with 1000 neurons per layer requires a total of 2 million weights to process an image in the format 32 × 32, a CNN with two repeating units, consisting of a total of 13,000 neurons, requires only 160,000 (shared) learnable weights, most of which are located in the rear part (fully-connected layer).
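The order of magnitude of the perceptron figure can be checked with simple arithmetic. The sketch below ignores bias terms and assumes that "two-layer" means two layers of 1000 neurons stacked on the 32 × 32 input. The convolutional layer shown for comparison (six 5 × 5 kernels on a single-channel image) is a hypothetical example; it is not the architecture behind the 160,000 figure, which the text does not specify.

```python
def dense_weights(n_in, n_out):
    """Weights of a fully-connected layer: every input connects to every output."""
    return n_in * n_out


def conv_weights(kernel_h, kernel_w, in_channels, out_channels):
    """Weights of a convolutional layer: one shared kernel per output feature map."""
    return kernel_h * kernel_w * in_channels * out_channels


inputs = 32 * 32                                        # 1024 pixels

# Two fully-connected layers with 1000 neurons each.
mlp_total = dense_weights(inputs, 1000) + dense_weights(1000, 1000)

# Hypothetical convolutional layer: 6 feature maps, 5x5 kernels, 1 input channel.
conv_total = conv_weights(5, 5, 1, 6)

# The same 6 x 28 x 28 output neurons without weight sharing or local connectivity.
dense_equivalent = dense_weights(inputs, 6 * 28 * 28)

print(mlp_total)         # 2024000, roughly the 2 million weights quoted
print(conv_total)        # 150, independent of the image size
print(dense_equivalent)  # 4816896
```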

In addition to the significantly reduced main memory requirement, shared weights have proven to be robust against variance in translation, rotation, scale and luminance.

In order to achieve a similar performance in image recognition with a multi-layer perceptron, that network would have to learn each feature independently for each area of the input signal. Although this works adequately for heavily downscaled images (about 32 × 32), MLPs fail on higher-resolution images because of the curse of dimensionality.

Biological plausibility

CNNs can be understood as a concept inspired by the visual cortex, but they are far from being a plausible model of neural processing.

On the one hand, the core of CNNs, the backpropagation learning mechanism, is considered biologically implausible, since, despite intensive efforts, no neural correlates of backpropagation-like error signals have been found so far. In addition to the strongest counter-argument against biological plausibility, namely the question of how the cortex gets access to the target signal (label), Bengio et al. list other reasons, including the binary, time-continuous communication of biological neurons and the calculation of non-linear derivatives of the forward neurons.

On the other hand, fMRI studies have shown that the activation patterns of individual layers of a CNN correlate with neuron activity in certain areas of the visual cortex when both the CNN and human test subjects are confronted with similar image processing tasks. Neurons in the primary visual cortex, called "simple cells", respond to activity in a small area of the retina. This behavior is modeled in CNNs by the discrete convolution in the convolutional layers. Functionally, these biological neurons are responsible for recognizing edges in certain orientations. This property of simple cells can in turn be modeled precisely with the help of Gabor filters. If a CNN is trained to recognize objects, the weights in the first convolutional layer converge, without any "knowledge" of the existence of simple cells, toward filter matrices that come astonishingly close to Gabor filters, which can be understood as an argument for the biological plausibility of CNNs. This is to be expected, given that a comprehensive statistical analysis of images found corners and edges in different orientations to be the most independent components of images, and thus the most fundamental building blocks for image analysis.

Thus, the analogies between neurons in CNNs and biological neurons appear primarily at the behavioral level, i.e. in the comparison of two functional systems, whereas the development of an "ignorant" neuron into a (for example) face-recognizing neuron follows diametrically opposed principles in the two systems.
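For orientation, a Gabor filter is a sinusoidal wave multiplied by a Gaussian envelope. The sketch below builds the real part of such a kernel using the common parameterization by wavelength, orientation `theta`, envelope width `sigma`, aspect ratio `gamma` and phase `psi`. The function name and all parameter values are assumptions chosen for illustration, not values learned by any network.

```python
import math


def gabor_kernel(size, wavelength, theta, sigma, gamma=1.0, psi=0.0):
    """Real part of a 2D Gabor filter on a size x size grid (size should be odd)."""
    half = size // 2
    kernel = []
    for y in range(-half, half + 1):
        row = []
        for x in range(-half, half + 1):
            # Rotate the coordinates so the wave runs along direction theta.
            x_rot = x * math.cos(theta) + y * math.sin(theta)
            y_rot = -x * math.sin(theta) + y * math.cos(theta)
            envelope = math.exp(-(x_rot ** 2 + (gamma * y_rot) ** 2) / (2 * sigma ** 2))
            carrier = math.cos(2 * math.pi * x_rot / wavelength + psi)
            row.append(envelope * carrier)
        kernel.append(row)
    return kernel


# Hypothetical 7x7 filter with vertical stripes (theta = 0).
kernel = gabor_kernel(size=7, wavelength=4.0, theta=0.0, sigma=2.0)
```

Changing `theta` rotates the stripes, which corresponds to the orientation selectivity of simple cells described above.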

Applications

The use of graphics processor (GPU) programming has made it possible to train CNNs efficiently for the first time. They are considered the state-of-the-art method for numerous applications in the field of classification.

Image recognition

CNNs achieve an error rate of 0.23% on MNIST, one of the most frequently used image databases, which (as of 2016) is the lowest error rate of all algorithms tested.

In 2012, a CNN (AlexNet) improved the error rate in the annual competition on the benchmark database ImageNet (ILSVRC) from the previous record of 25.8% to 16.4%. Since then, all top-ranked algorithms have used CNN structures. In 2016, an error rate of less than 3% was achieved.

Groundbreaking results have also been achieved in the field of face recognition.

Speech recognition

CNNs are successfully used for speech recognition and have achieved excellent results in the following areas:

- Semantic parsing

- Search query recognition

- Sentence modeling

- Sentence classification

- Part-of-speech tagging

- Machine translation (e.g. used in the online service DeepL)

Reinforcement learning

CNNs can also be used in the area of reinforcement learning, in which a CNN is combined with Q-learning. The network is trained to estimate which actions in a given state lead to which future reward. By using a CNN, complex, higher-dimensional state spaces can also be handled, such as the screen output of a video game.

Literature

- Ian Goodfellow, Yoshua Bengio, Aaron Courville: Deep Learning (= Adaptive Computation and Machine Learning). MIT Press, 2016, ISBN 978-0-262-03561-3, Chapter 9: Convolutional Networks (deeplearningbook.org).
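The Q-learning update itself can be sketched with a small table. In the combination described above, a CNN would replace the table and estimate the values directly from the screen image; the table, the state and action names, and the parameter values here are assumptions made to keep the example short.

```python
def q_update(q_table, state, action, reward, next_state, alpha, gamma):
    """One Q-learning step: move Q(state, action) toward reward + gamma * best next value."""
    best_next = max(q_table[next_state].values())
    td_target = reward + gamma * best_next
    q_table[state][action] += alpha * (td_target - q_table[state][action])
    return q_table[state][action]


# Hypothetical two-state problem with two actions per state.
q_table = {
    "s0": {"left": 0.0, "right": 0.0},
    "s1": {"left": 0.0, "right": 1.0},
}
new_value = q_update(
    q_table, "s0", "right",
    reward=0.5, next_state="s1",
    alpha=0.1,   # learning rate
    gamma=0.9,   # discount factor for future reward
)
# new_value is approximately 0.14, i.e. 0.1 * (0.5 + 0.9 * 1.0).
```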

Web links

- TED Talk: How we are teaching computers to understand pictures - Fei-Fei Li, March 2015, accessed on November 17, 2016.

- 2D visualization of the activity of a two-layer CNN, accessed November 17, 2016.

- Tutorial for implementing a CNN using the TensorFlow Python library

- Stanford University CNN tutorial, including visualization of learned convolution matrices, accessed on November 17, 2016.

- Gradient-Based Learning Applied to Document Recognition, Y. LeCun et al. (PDF), first successful application of a CNN, accessed on November 17, 2016.

- ImageNet Classification with Deep Convolutional Neural Networks, A. Krizhevsky, I. Sutskever and G. E. Hinton, AlexNet - breakthrough in image recognition, winner of the ILSVRC Challenge 2012.

References

- Masakazu Matsugu, Katsuhiko Mori, Yusuke Mitari, Yuji Kaneda: Subject independent facial expression recognition with robust face detection using a convolutional neural network. In: Neural Networks. 16, No. 5, 2003, pp. 555-559. doi: 10.1016/S0893-6080(03)00115-1. Retrieved May 28, 2017.

- Convolutional Neural Networks (LeNet). Retrieved November 17, 2016.

- D. H. Hubel, T. N. Wiesel: Receptive fields and functional architecture of monkey striate cortex. In: The Journal of Physiology. 195, No. 1, March 1, 1968, ISSN 0022-3751, pp. 215-243. doi: 10.1113/jphysiol.1968.sp008455. PMID 4966457. PMC 1557912 (free full text).

- Dominik Scherer, Andreas C. Müller, Sven Behnke: Evaluation of Pooling Operations in Convolutional Architectures for Object Recognition. In: Artificial Neural Networks (ICANN), 20th International Conference on. Springer, 2010, pp. 92-101.

- Yann LeCun: LeNet-5, convolutional neural networks. Retrieved November 17, 2016.

- A more biological plausible learning rule than Backpropagation applied to a network model of cortical area 7d (PDF).

- Yoshua Bengio: Towards Biologically Plausible Deep Learning. Retrieved December 29, 2017.

- Haiguang Wen: Neural Encoding and Decoding with Deep Learning for Dynamic Natural Vision. Retrieved September 17, 2017.

- Sandy Wiraatmadja: Modeling the Visual Word Form Area Using a Deep Convolutional Neural Network. Retrieved September 17, 2017.

- J. G. Daugman: Uncertainty relation for resolution in space, spatial frequency, and orientation optimized by two-dimensional visual cortical filters. In: Journal of the Optical Society of America A. 2, No. 7, July 1985, pp. 1160-1169.

- S. Marcelja: Mathematical description of the responses of simple cortical cells. In: Journal of the Optical Society of America. 70, No. 11, 1980, pp. 1297-1300. doi: 10.1364/JOSA.70.001297.

- A. Krizhevsky, I. Sutskever, G. E. Hinton: ImageNet Classification with Deep Convolutional Neural Networks (PDF).

- A. Bell, T. Sejnowski: The "Independent Components" of Scenes are Edge Filters (PDF). 1997. Retrieved November 17, 2016.

- ImageNet Classification with Deep Convolutional Neural Networks (PDF).

- Dan Ciresan, Ueli Meier, Jürgen Schmidhuber: Multi-column deep neural networks for image classification. In: Institute of Electrical and Electronics Engineers (IEEE) (Ed.): 2012 IEEE Conference on Computer Vision and Pattern Recognition. New York, NY, June 2012, pp. 3642-3649. arXiv: 1202.2745v1. doi: 10.1109/CVPR.2012.6248110. Retrieved December 9, 2013.

- ILSVRC 2016 Results.

- Improving multiview face detection with multi-task deep convolutional neural networks.

- A Deep Architecture for Semantic Parsing. Retrieved November 17, 2016.

- Learning Semantic Representations Using Convolutional Neural Networks for Web Search - Microsoft Research. Retrieved November 17, 2016.

- A Convolutional Neural Network for Modeling Sentences. November 17, 2016.

- Convolutional Neural Networks for Sentence Classification. Retrieved November 17, 2016.

- Natural Language Processing (almost) from Scratch. Retrieved November 17, 2016.

- heise online: Machine translators: DeepL competes with Google Translate. August 29, 2017. Retrieved September 18, 2017.

- Volodymyr Mnih, Koray Kavukcuoglu, David Silver, Andrei A. Rusu, Joel Veness: Human-level control through deep reinforcement learning. In: Nature. Vol. 518, No. 7540, February 2015, ISSN 0028-0836, pp. 529-533, doi: 10.1038/nature14236 (nature.com [accessed June 13, 2018]).