Eye tracking

Eye tracking (in medicine also called oculography) refers to recording a person's eye movements, which consist mainly of fixations (points that are looked at closely), saccades (rapid eye movements) and regressions (backward movements of the gaze).

Eye trackers are the devices and systems that record eye movements and enable their analysis.

Eye tracking is used as a scientific method in the neurosciences, in perceptual, cognitive and advertising psychology, in cognitive and clinical linguistics, in usability tests, in product design and in reading research. It is also used in animal studies, especially in research on animals' cognitive abilities.

History

Eye movements were already recorded through direct observation in the 19th century. One of the first to do so was the Frenchman Émile Javal, who described eye movements during reading.

The invention of the film camera made it possible to record such observations and analyze them afterwards. This was done in 1905 by Judd, McAllister and Steel.

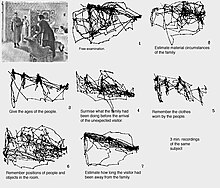

The Russian scientist A. L. Yarbus is considered the real pioneer of high-accuracy gaze recording; in particular, he demonstrated how the viewing task influences eye movements when looking at pictures.

New methods were then developed in the 1970s:

- Afterimages: a series of strong light stimuli generates afterimages on the retina, whose positions allow conclusions to be drawn about eye movements.

- Electrooculography: electrooculograms measure the electrical voltage between the retina (negative pole) and the cornea (positive pole).

- Contact lens method: mirrored contact lenses are used, and their reflection is recorded by a camera.

- Search coil method: this also uses contact lenses, which are fitted with coils and exposed to a magnetic field. The eye movement can then be calculated from the induced voltage.

- Corneal reflex method: this uses the reflection of one or more light sources (infrared or special lasers) on the cornea and its position relative to the pupil. The eye image is recorded with a suitable camera. The method is also known as "video-based eye tracking".
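The quantity that video-based (corneal reflex) trackers usually work with can be illustrated briefly. A minimal sketch, assuming the pupil center and the corneal reflections ("glints") have already been located in the eye image: the vector from the glint center to the pupil center changes when the eye rotates, but stays approximately constant when the whole eye image shifts slightly, e.g. after a small head movement. The names `Point` and `pupil_glint_vector` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Point:
    x: float
    y: float


def pupil_glint_vector(pupil: Point, glints: list[Point]) -> Point:
    """Vector from the center of the corneal reflections (glints) to the pupil center.

    Coordinates are eye-camera pixels; detecting pupil and glints in the image
    is assumed to have happened already (hypothetical upstream step).
    """
    if not glints:
        raise ValueError("at least one glint is required")
    gx = sum(g.x for g in glints) / len(glints)
    gy = sum(g.y for g in glints) / len(glints)
    return Point(pupil.x - gx, pupil.y - gy)


# Same eye orientation, but the whole eye image shifted by (10, 5) pixels:
# pupil and glint move together, so the difference vector is unchanged.
before = pupil_glint_vector(Point(320, 240), [Point(315, 238)])
after = pupil_glint_vector(Point(330, 245), [Point(325, 243)])
print(before, after)  # Point(x=5.0, y=2.0) Point(x=5.0, y=2.0)
```

The invariance only holds approximately in practice, which is why trackers still need a calibration that maps this vector to a point of gaze.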

Recording devices

Basically, eye trackers come in two designs: mobile eye trackers, which are firmly attached to the subject's head, and externally installed systems. The latter can in turn be divided into two categories: remote devices and devices that require the subject's head to be fixed.

Mobile systems

Structure and application

Mobile systems, so-called head-mounted eye trackers, essentially consist of three components: a) an infrared light source for the corneal reflex method, b) one or more cameras that record the reflection pattern on the cornea, and c) a field-of-view camera that records the area the subject is looking at. These components used to be built into a special device mounted on the subject's head. Today they are so small that they can be integrated into a glasses frame, and such glasses often weigh less than 50 g. The associated control computer, formerly the size of a notebook, can now be housed in a device the size of a mobile phone. Unlike external devices, which can only record gaze on a screen, mobile eye trackers are therefore also suitable for field studies outside the laboratory. This is mainly because the infrared emitter and the cameras are attached directly to the glasses, so the gaze direction can be recorded regardless of superimposed head movements. The disadvantage of mobile eye trackers is that their data have so far not been parameterizable: the test person's gaze is merely shown as a marker in the video from the field-of-view camera. For a statistical evaluation, these videos still have to be reviewed manually, which takes great effort. It can be assumed that this problem will be solved in the future by automated video analysis.
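One way such automated analysis can work, assuming the viewed object is flat (a poster, a shelf front, a screen) and its four corners can be located in every scene-camera frame, for example with printed markers: a homography maps the gaze point from scene-camera pixels into a fixed reference image of the object, so that gaze from different frames and test persons ends up in one coordinate system. This is a sketch of that general technique, not the method of any particular product; corner detection is not shown, and all names and coordinates are hypothetical.

```python
Point = tuple[float, float]
Matrix = list[list[float]]


def _solve(a: Matrix, b: list[float]) -> list[float]:
    """Solve the square system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate corner configuration")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def homography(src: list[Point], dst: list[Point]) -> Matrix:
    """3x3 homography (normalized so H[2][2] == 1) mapping 4 src points onto 4 dst points."""
    rows, rhs = [], []
    for (x, y), (u, v) in zip(src, dst):
        # u * (h6*x + h7*y + 1) = h0*x + h1*y + h2, rearranged to be linear in h.
        rows.append([x, y, 1.0, 0.0, 0.0, 0.0, -u * x, -u * y])
        rhs.append(u)
        rows.append([0.0, 0.0, 0.0, x, y, 1.0, -v * x, -v * y])
        rhs.append(v)
    h = _solve(rows, rhs) + [1.0]
    return [h[0:3], h[3:6], h[6:9]]


def map_point(h: Matrix, p: Point) -> Point:
    """Apply a homography to one point, e.g. a gaze point in scene-camera pixels."""
    x, y = p
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return ((h[0][0] * x + h[0][1] * y + h[0][2]) / w,
            (h[1][0] * x + h[1][1] * y + h[1][2]) / w)


# Hypothetical poster corners in one scene-camera frame, and the same corners
# in a 1000 x 700 px reference image of the poster.
frame_corners = [(100, 50), (500, 70), (480, 400), (120, 380)]
ref_corners = [(0, 0), (1000, 0), (1000, 700), (0, 700)]
H = homography(frame_corners, ref_corners)
gaze_on_poster = map_point(H, (300, 220))  # gaze point expressed in poster coordinates
```

Once gaze is in reference-image coordinates, fixations on the object can be counted per region automatically instead of by watching the video.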

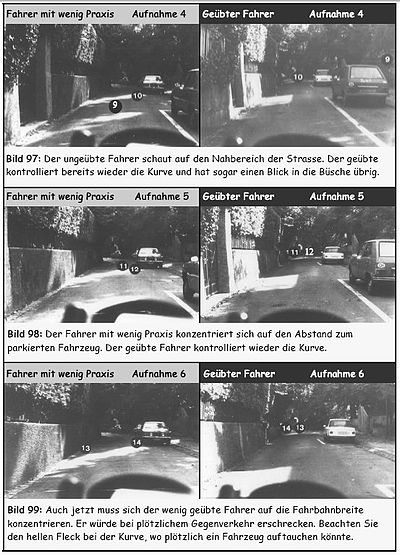

Applications of mobile eye trackers include market research (where does a test person look when walking through a supermarket?) and usability research (how long does a test person look at the road while driving, and how long at the navigation device?). Evaluations and analyses are essentially individual and user-centered. The method allows online or offline tracking of the gaze path and of the time the gaze rests (fixation) on certain objects while the subject moves freely. Generalizations across several test persons and attention analyses for specific objects can be realized technically with 3D/6D position detection systems (so-called head trackers).

Early examples of mobile eye trackers

The "EyeLink II (EL II)"

The "EL II" system consists of two computers running the appropriate software, a "program computer" and an associated "stimulus computer", together with monitors and a headset. Two cameras attached to the side arms of the headset record the eye movements. A third camera, mounted on top, is aligned to four infrared markers attached to the monitor of one of the two computers. This makes it possible to record fixations of any kind on images or during actions. The system already uses the corneal reflection method. Interfering factors such as muscle tremor can also be excluded.

Assembling the headset

The headband with the cameras is placed on the subject's head. The top camera is then brought into position: it is aligned as precisely as possible with the vertical and horizontal center of one of the two monitors, the so-called stimulus monitor. The infrared reference markers used for adjusting this camera are also mounted on this monitor. For correct adjustment, either the monitor itself is moved or the height of the subject's chair is changed.

The two eye cameras on the side holding arms are swiveled so that they do not distract the subject in their field of vision. They should sit roughly at the level of the tip of the nose and can already be brought into approximately the position needed for the later recording. The cameras can be adjusted in three dimensions with the swivel arm itself as well as with clamps and locking screws.

Fine tuning

The supplied software is used for fine adjustment, i.e. camera alignment and focus setting. Focusing works via the automatic threshold setting for corneal reflection and pupil illumination that is integrated in the system.

During calibration, the test person is presented, in an automatic sequence, with points at random positions on the stimulus screen, which move across the screen. The test person is instructed to follow the points with both eyes. Successful calibration is followed by validation, which works like calibration but additionally measures the maximum deviation of the eye movements in degrees. The experimenter must decide whether the setting can be used or whether it should be readjusted. Once a setting has been accepted, it can still be readjusted repeatedly during the experiment, since a neutral screen with a fixation point appears between the individual stimuli. The system itself suggests which eye is better adjusted.
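The text does not specify how the EyeLink II converts eye-camera measurements into screen coordinates. A common textbook approach, used here purely as an assumption, fits a second-order polynomial per screen axis to the calibration points by least squares and then reports the validation error as a visual angle. The sketch further assumes that the eye feature is a pupil-glint vector, that the eye sits `distance_mm` in front of the screen point `center`, and that pixels are square with size `pixel_mm`; all of these names and parameters are hypothetical.

```python
import math

Point = tuple[float, float]


def _terms(vx: float, vy: float) -> list[float]:
    # Second-order polynomial terms of one eye-feature sample (e.g. a pupil-glint vector).
    return [1.0, vx, vy, vx * vy, vx * vx, vy * vy]


def _solve(a: list[list[float]], b: list[float]) -> list[float]:
    """Solve the square system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("calibration points do not determine the model")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def _fit_axis(features: list[Point], targets: list[float]) -> list[float]:
    """Least-squares polynomial coefficients for one screen axis (normal equations)."""
    rows = [_terms(vx, vy) for vx, vy in features]
    k = len(rows[0])
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    atb = [sum(r[i] * t for r, t in zip(rows, targets)) for i in range(k)]
    return _solve(ata, atb)


def calibrate(features: list[Point], targets: list[Point]) -> tuple[list[float], list[float]]:
    """Fit the mapping eye feature -> screen pixel from calibration samples."""
    return (_fit_axis(features, [t[0] for t in targets]),
            _fit_axis(features, [t[1] for t in targets]))


def predict(model: tuple[list[float], list[float]], feature: Point) -> Point:
    terms = _terms(*feature)
    cx, cy = model
    return (sum(c * t for c, t in zip(cx, terms)),
            sum(c * t for c, t in zip(cy, terms)))


def angle_deg(p: Point, q: Point, pixel_mm: float, distance_mm: float, center: Point) -> float:
    """Visual angle between screen points p and q for an eye distance_mm in front of center."""
    def ray(s: Point) -> tuple[float, float, float]:
        return ((s[0] - center[0]) * pixel_mm, (s[1] - center[1]) * pixel_mm, distance_mm)
    a, b = ray(p), ray(q)
    cross = (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])
    dot = sum(x * y for x, y in zip(a, b))
    return math.degrees(math.atan2(math.hypot(*cross), dot))  # stable for tiny angles


def validate(model, features, targets, pixel_mm, distance_mm, center) -> tuple[float, float]:
    """Mean and maximum angular deviation (degrees) over the validation points."""
    errors = [angle_deg(predict(model, f), t, pixel_mm, distance_mm, center)
              for f, t in zip(features, targets)]
    return sum(errors) / len(errors), max(errors)


# Hypothetical 3 x 3 calibration grid of eye features and the screen targets (px) shown.
grid = [(vx, vy) for vx in (-10.0, 0.0, 10.0) for vy in (-10.0, 0.0, 10.0)]
targets = [(960 + 40 * vx + 0.5 * vx * vx, 540 + 35 * vy + 0.2 * vx * vy) for vx, vy in grid]
model = calibrate(grid, targets)
mean_err, max_err = validate(model, grid, targets, 0.25, 600.0, (960.0, 540.0))
```

In a real session, validation would use fresh samples recorded at new target positions, and the maximum error would be compared against an acceptance threshold chosen by the experimenter.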

System configuration

The program computer and the stimulus computer are connected by a network cable. The program computer is also connected to the eye cameras and the infrared markers. The stimulus computer runs the software for presenting the stimuli and Visual C++, which is used to program the experiments. The monitor on which the stimuli are presented should be 17 inches in size, since this appears to be the most suitable size for reading experiments. Using a joystick, the test person can request the next stimulus.

The program and stimulus computers should be positioned so as to prevent reflections and unwanted light stimuli during the recording. In addition, the program computer and its control monitor should be outside the subject's field of vision so that the subject is not distracted from the stimuli presented.

The "Eye Tracking Device (ETD)"

This eye tracker was originally developed by the German Aerospace Center (DLR) for use on the International Space Station (ISS). In early 2004 it was brought to the ISS as part of the Euro-Russian space program. The device was developed by Andrew H. Clarke of the Vestibular Laboratory of the Charité in Berlin in collaboration with the Berlin companies Chronos Vision and Mtronix, and was adapted for use in space by Kayser-Threde in Munich.

In the first experiments, carried out by Clarke's team in cooperation with the Moscow Institute of Biomedical Problems (IBMP), the ETD was used to measure Listing's plane, a head-fixed reference plane used to describe eye movements relative to the head. The scientific goal was to determine how Listing's plane changes under different gravitational conditions. In particular, the influence of long-term microgravity on board the ISS and of the subsequent return to Earth's gravity was examined.
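How Listing's plane can be obtained from measured eye positions may be sketched under stated assumptions. Eye orientations are commonly expressed as rotation vectors (rotation axis scaled by tan(θ/2), relative to a reference position); by Listing's law these vectors lie approximately in one plane. The sketch fits that plane by least squares, writing the torsional component as a linear function of the components about the vertical and horizontal head axes, and takes the standard deviation of the residuals as the plane's "thickness". The axis naming, the thickness convention and all function names are assumptions; the ETD's actual analysis pipeline is not described in the text.

```python
import math
from statistics import pstdev

# One eye position as a rotation vector: (torsional, vertical-axis, horizontal-axis) components.
RotVec = tuple[float, float, float]


def _det3(m: list[list[float]]) -> float:
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def _solve3(a: list[list[float]], b: list[float]) -> list[float]:
    """Solve a 3x3 linear system with Cramer's rule (replace column k by b)."""
    d = _det3(a)
    if abs(d) < 1e-15:
        raise ValueError("eye positions do not span a plane")
    return [_det3([row[:k] + [bk] + row[k + 1:] for row, bk in zip(a, b)]) / d
            for k in range(3)]


def fit_listings_plane(vectors: list[RotVec]) -> tuple[float, float, float, float]:
    """Least-squares plane torsion = a + b*vert + c*horiz; returns (a, b, c, thickness)."""
    rows = [(1.0, v, h) for _, v, h in vectors]
    tors = [t for t, _, _ in vectors]
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    atb = [sum(r[i] * t for r, t in zip(rows, tors)) for i in range(3)]
    a, b, c = _solve3(ata, atb)
    residuals = [t - (a + b * v + c * h) for t, (_, v, h) in zip(tors, rows)]
    return a, b, c, pstdev(residuals)  # thickness: SD of torsional deviations


def plane_tilt_deg(b: float, c: float) -> tuple[float, float]:
    """Tilt of the fitted plane relative to the vertical/horizontal plane, per axis."""
    return math.degrees(math.atan(b)), math.degrees(math.atan(c))


# Hypothetical noise-free eye positions lying on a slightly tilted plane.
positions = [(0.01 + 0.1 * v - 0.05 * h, v, h) for v in (-0.2, 0.0, 0.2) for h in (-0.2, 0.0, 0.2)]
a, b, c, thickness = fit_listings_plane(positions)
```

Repeating such a fit at intervals during a mission would show whether the plane's tilt changes as the vestibular system adapts, which is the kind of question the experiment addresses.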

The results contribute to the understanding of neural plasticity in vestibular and oculomotor systems.

These experiments began in the spring of 2004 and were continued until the end of 2008 by a number of cosmonauts and astronauts who each spent six months on the ISS.

Operations

Investigating the orientation of Listing's plane during a long-term space mission is of special interest because, on Earth, Listing's plane appears to be influenced by the vestibular system. This influence can be determined by measuring at different head positions relative to gravity. The experiment examines how the astronaut's vestibular system adapts during the stay in weightlessness and after the return to Earth. It should also clarify to what extent the orientation of Listing's plane changes as the vestibular system adapts to altered gravitational conditions, in particular to weightlessness. A further question is whether the human body uses other mechanisms to compensate for the lack of vestibular input during a long space flight.

Missions

The ETD was used for this study between 2004 and 2008. During each six-month stay, the measurements were repeated every three weeks, so that adaptation to microgravity could be evaluated. In addition, equivalent measurements were carried out on every cosmonaut or astronaut in the first few weeks after their return to Earth. The ETD system has since become a general-purpose instrument on the ISS. It is currently used by a group of Russian scientists from the Institute of Biomedical Problems who are studying the coordination of eye and head movements in microgravity.

Technology

The digital cameras are equipped with high-performance CMOS image sensors and are connected to the corresponding processor board in the host PC via bidirectional high-speed digital links (400 Mbit/s). The front-end control architecture, consisting of a digital signal processor (DSP) and programmable integrated circuits (FPGAs), is located on this PCI processor board. These components are programmed to enable pixel-oriented online acquisition and measurement of the 2D pupil coordinates.
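The ETD performs this measurement in dedicated hardware, and its exact algorithm is not described here. As an illustration of what pixel-level measurement of the 2D pupil coordinates can mean, the following software sketch assumes dark-pupil illumination, so that the pupil is the darkest region of the eye image, and takes the centroid of all pixels below a threshold. The threshold value and function name are hypothetical; practical systems typically add steps such as glint removal and ellipse fitting.

```python
from typing import Optional


def pupil_center(image: list[list[int]], threshold: int = 50) -> Optional[tuple[float, float]]:
    """Centroid (x, y) of all pixels darker than `threshold` in a grey-scale image.

    Assumes dark-pupil illumination (hypothetical), so the pupil is the darkest blob
    in the (cropped) eye image. Returns None if no pixel is below the threshold.
    """
    count = sum_x = sum_y = 0
    for y, row in enumerate(image):
        for x, value in enumerate(row):
            if value < threshold:
                count += 1
                sum_x += x
                sum_y += y
    if count == 0:
        return None
    return sum_x / count, sum_y / count


# Synthetic 10 x 10 eye image: bright background with a 3 x 3 dark "pupil".
img = [[200] * 10 for _ in range(10)]
for y in range(4, 7):
    for x in range(2, 5):
        img[y][x] = 20
print(pupil_center(img))  # (3.0, 5.0)
```

Because each pixel is inspected once and only running sums are kept, this kind of computation suits streaming hardware such as an FPGA, which processes pixels as they arrive from the sensor.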

For eye movement registration, the sensors and the front-end control architecture carry out a substantial data reduction. Only preselected data are transferred from the image sensor to the host PC, on which the final algorithms run and the data are stored. This design eliminates the bottleneck of conventional frame-by-frame image processing and enables substantially higher frame rates.
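Why data reduction raises the achievable frame rate can be shown with a back-of-the-envelope calculation. Only the 400 Mbit/s link speed comes from the text; the sensor and window sizes below are hypothetical, and protocol overhead and sensor read-out limits are ignored, so the results are upper bounds.

```python
def max_frame_rate(link_bit_s: float, width: int, height: int, bits_per_pixel: int = 8) -> float:
    """Frames per second at which transferring width x height pixels saturates the link.

    Ignores protocol overhead and sensor read-out limits, so this is an upper bound.
    """
    return link_bit_s / (width * height * bits_per_pixel)


LINK = 400e6  # 400 Mbit/s camera link, as stated for the ETD
full = max_frame_rate(LINK, 640, 480)  # hypothetical full sensor frame
roi = max_frame_rate(LINK, 128, 128)   # hypothetical window around the pupil
print(f"full frame: {full:.0f} fps, pupil window: {roi:.0f} fps")
# full frame: 163 fps, pupil window: 3052 fps
```

With these assumed numbers, sending full frames would cap the rate at roughly 160 frames per second, whereas transferring only a small window around the pupil would allow about 3000.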

The control architecture is integrated into a PC and allows the eyes and the corresponding signals or data to be visualized. An important design feature is that all image sequences from the cameras are stored as digital files on exchangeable hard drives. This way, the hard drive with the recorded eye data can be sent back to Earth after the ISS mission is complete. This allows extensive and reliable analysis of the image data in the research laboratory and minimizes the time required for the experiment on the ISS.

ETD on earth

Parallel to the version of the ETD used in space, Chronos Vision also developed a commercially applicable version that is used in many European, North American and Asian laboratories for neurophysiological research.

External systems

External systems, so-called remote eye trackers, allow contact-free measurements. Mechanical components such as transmission cables or chin rests are not required. After successful calibration, the test person can move freely within a certain range. An important aspect here is the compensation of head movements: a person can keep fixating a point while moving the head without the fixation being lost.

To register eye movements on a computer screen, the components of the device can either be built directly into a screen or attached below or next to it. The eye camera automatically detects the eye and "tracks" it. There is no contact between the test subject and the device. In contrast, in eye trackers of the "tower" design the subject's head is fixed in the device. This design is usually more expensive to purchase and rather uncomfortable for the test subject, but it usually delivers more precise results at a higher frame rate.

Different techniques are used:

- Pan-tilt systems:

Mechanically movable components make the camera and its optics follow the test subject's head movements. Current systems achieve measurement rates of up to 120 Hz.

- Tilting mirror systems:

While the camera and optics remain fixed in space, servo-driven mirrors allow the eye to be tracked when the head moves.

- Fixed camera systems:

These systems dispense with any mechanically moving components and achieve freedom of movement using image processing methods.

Compared with the mobile design, these variants have the advantage that they record data that can be clearly parameterized and therefore fed into a statistical evaluation: it is possible to specify exactly at which point in time the person looked at which area of the screen. While remote eye trackers are mainly used in market and media research (e.g. use of websites, eye movements when watching films), tower eye trackers are used in the neurosciences (e.g. research on microsaccades).

Special forms

Simple direct recordings

Before the advent of complex recording devices, simple film recordings were used; evaluating the individual frames made it possible to analyze eye movements in certain cases. This approach could be used where it was sufficient to distinguish gaze directions up, down, right, left and center. It also had the advantage that hand movements and facial expressions were visible as well.

Recording with the webcam

Nowadays, webcams are also widely used for cost-effective eye tracking. One of the first commercial providers in this area was GazeHawk, which was bought by Facebook in 2013. Since then, other providers have established themselves in this area, e.g. Sticky.

Recording with the computer mouse

Modern methods such as attention tracking allow gaze to be recorded with the help of a computer mouse or a comparable pointing device. The points of attention are recorded as mouse clicks.

Components of eye trackers

The technical design of an eye tracker varies somewhat between models. Nevertheless, there are fundamental similarities: eye trackers typically have an eye camera and an infrared light source, and head-mounted systems additionally have a field-of-view camera.

Forms of representation

Various visualization methods are used to represent eye tracking data visually and to graphically analyze recordings of individual persons or aggregated groups of persons. The following visualizations are the most common:

- Gaze plot (alternatively scanpath): A gaze plot visualizes the sequence and duration of an individual person's fixations. The sequence is shown by numbering, the duration of each fixation by the size of its circle. A gaze plot can be viewed statically over a certain time window or as an animation (see illustration).

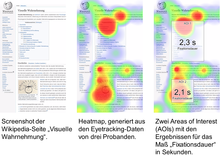

- Heat map (alternatively attention map): A heat map is a static visualization that is mainly used to represent aggregated data from several people. Color coding highlights where people fixated for a long time and often. This gives a good overview of which regions attract a lot of attention and which are ignored.

The heat map is the most frequently used display for eye tracking data, but it is often no longer sufficient for deeper analyses. Other visualizations or statistical methods are used for these, such as parallel scanpaths, visualizations for specific areas (areas of interest) or space-time cubes in 3D space.
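The aggregation behind a heat map can be computed by spreading each fixation's duration over its neighborhood with a Gaussian kernel and summing over all fixations and participants. A minimal sketch; the grid cell size, the kernel width `sigma` and the choice to weight by fixation duration (rather than by fixation count) are assumptions, and the final color mapping is left to a plotting library.

```python
import math

# One fixation: (x, y, duration_ms) in screen pixels, possibly pooled from several people.
Fixation = tuple[float, float, float]


def heat_map(fixations: list[Fixation], width: int, height: int,
             cell: int = 10, sigma: float = 30.0) -> list[list[float]]:
    """Duration-weighted attention map on a grid of cell x cell pixel bins, scaled to 0..1."""
    cols, rows = math.ceil(width / cell), math.ceil(height / cell)
    grid = [[0.0] * cols for _ in range(rows)]
    for fx, fy, duration in fixations:
        for r in range(rows):
            cy = (r + 0.5) * cell  # y coordinate of the bin center
            for c in range(cols):
                cx = (c + 0.5) * cell
                dist2 = (cx - fx) ** 2 + (cy - fy) ** 2
                # Each fixation spreads its duration with a Gaussian kernel of width sigma.
                grid[r][c] += duration * math.exp(-dist2 / (2 * sigma ** 2))
    peak = max(max(row) for row in grid)
    if peak > 0:
        grid = [[value / peak for value in row] for row in grid]
    return grid


# Two hypothetical fixations: a long one top left, a shorter one further right and down.
hm = heat_map([(105, 55, 300), (505, 305, 100)], width=600, height=400)
```

Normalizing to the peak makes maps from different stimuli comparable in color, at the cost of hiding absolute viewing times; weighting by count instead of duration would emphasize how often, rather than how long, a region was looked at.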

Analysis of eye tracking data based on areas of interest (AOIs)

Eye tracking data describe when a person fixates which point. During the analysis, the data are related to the stimulus under consideration. In usability research, for example, one analyzes which elements of a website were viewed, for how long, and when they were first viewed.
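Before fixations can be related to a stimulus, the raw gaze samples delivered by the tracker have to be grouped into fixations. The text does not say how this is done; one widely used approach is dispersion-threshold identification (I-DT), sketched below: a time window is accepted as a fixation as long as its points stay within a spatial dispersion limit, and the samples in between are treated as saccades. The threshold values in the example are hypothetical and depend on the tracker and the viewing distance.

```python
from dataclasses import dataclass

# One raw gaze sample: (timestamp_ms, x, y)
Sample = tuple[float, float, float]


@dataclass
class Fixation:
    start_ms: float
    end_ms: float
    x: float
    y: float

    @property
    def duration_ms(self) -> float:
        # Time from the first to the last sample of the fixation.
        return self.end_ms - self.start_ms


def _dispersion(window: list[Sample]) -> float:
    xs = [s[1] for s in window]
    ys = [s[2] for s in window]
    return (max(xs) - min(xs)) + (max(ys) - min(ys))


def detect_fixations_idt(samples: list[Sample], max_dispersion: float,
                         min_duration_ms: float) -> list[Fixation]:
    """Dispersion-threshold identification (I-DT) of fixations in time-ordered samples."""
    fixations: list[Fixation] = []
    i, n = 0, len(samples)
    while i < n:
        # Smallest window starting at sample i that spans min_duration_ms.
        j = i
        while j < n and samples[j][0] - samples[i][0] < min_duration_ms:
            j += 1
        if j >= n:
            break  # not enough data left for another fixation
        if _dispersion(samples[i:j + 1]) <= max_dispersion:
            # Grow the window while the points stay close together.
            while j + 1 < n and _dispersion(samples[i:j + 2]) <= max_dispersion:
                j += 1
            window = samples[i:j + 1]
            fixations.append(Fixation(
                start_ms=window[0][0], end_ms=window[-1][0],
                x=sum(s[1] for s in window) / len(window),
                y=sum(s[2] for s in window) / len(window)))
            i = j + 1
        else:
            i += 1  # first sample belongs to a saccade: drop it and retry
    return fixations


# Synthetic 100 Hz recording: 200 ms on one point, then 200 ms on another.
samples = ([(t * 10.0, 100.0, 100.0) for t in range(20)]
           + [(t * 10.0, 400.0, 300.0) for t in range(20, 40)])
fixes = detect_fixations_idt(samples, max_dispersion=25, min_duration_ms=100)
```

The resulting list of fixations, each with position, start time and duration, is the input that gaze plots, heat maps and AOI measures build on.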

Areas of Interest (AOIs) are used for this. AOIs are areas that are manually marked in the stimulus and for which various eye tracking measures are then calculated. There is a variety of such measures. Some only take into account the position of the fixations, such as the “Number of Fixations”, which expresses how many fixations fell within an AOI. Measures are also often expressed as a percentage of the total number of fixations on a stimulus.
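Counting fixations per AOI, and their share of all fixations on the stimulus, takes only a few lines. A sketch assuming rectangular AOIs in stimulus pixel coordinates and fixations given as (x, y) points; the AOI names and coordinates are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rect:
    """Rectangular AOI in stimulus pixel coordinates (right/bottom edges exclusive)."""
    left: float
    top: float
    right: float
    bottom: float

    def contains(self, x: float, y: float) -> bool:
        return self.left <= x < self.right and self.top <= y < self.bottom


def fixation_counts(fixations: list[tuple[float, float]],
                    aois: dict[str, Rect]) -> dict[str, tuple[int, float]]:
    """Number of fixations per AOI and its share (%) of all fixations on the stimulus."""
    total = len(fixations)
    result = {}
    for name, rect in aois.items():
        n = sum(1 for x, y in fixations if rect.contains(x, y))
        result[name] = (n, 100.0 * n / total if total else 0.0)
    return result


aois = {"header": Rect(0, 0, 1000, 100), "navigation": Rect(0, 100, 200, 700)}
counts = fixation_counts([(50, 50), (500, 80), (100, 300), (600, 400)], aois)
# {'header': (2, 50.0), 'navigation': (1, 25.0)}
```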

For the measure “Total dwell time”, also known as fixation time, the durations of the fixations within an AOI are added up, giving the total time the AOI was looked at. Other measures take into account the timing of certain fixations. The measure “Time to First Fixation” gives the time of the first fixation within an AOI, i.e. how long it took until a certain area was looked at for the first time. AOI-based measures can be calculated for the data of a single subject as well as for the data of several subjects; in the latter case, averages are formed across all subjects.
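The time-based measures can be sketched the same way. This assumes each fixation is stored as (start time relative to stimulus onset, duration, x, y) and that an AOI is any function telling whether a point lies inside it; participants who never fixated the AOI are simply left out of the averaged time to first fixation, which is one possible convention. All names and data are hypothetical.

```python
from statistics import mean
from typing import Callable, Optional

# One fixation: (start_ms relative to stimulus onset, duration_ms, x, y)
Fixation = tuple[float, float, float, float]
InAoi = Callable[[float, float], bool]


def total_dwell_time(fixations: list[Fixation], in_aoi: InAoi) -> float:
    """Sum of the durations of all fixations inside the AOI."""
    return sum(dur for _, dur, x, y in fixations if in_aoi(x, y))


def time_to_first_fixation(fixations: list[Fixation], in_aoi: InAoi) -> Optional[float]:
    """Start time of the earliest fixation inside the AOI, or None if it was never fixated."""
    starts = [start for start, _, x, y in fixations if in_aoi(x, y)]
    return min(starts) if starts else None


def average_over_subjects(values: list[Optional[float]]) -> Optional[float]:
    """Mean over participants; participants without a value (None) are left out."""
    present = [v for v in values if v is not None]
    return mean(present) if present else None


def in_logo(x: float, y: float) -> bool:
    return x < 100 and y < 100  # hypothetical rectangular AOI in the top-left corner


p1 = [(0, 200, 50, 50), (250, 300, 500, 500), (600, 150, 60, 40)]
p2 = [(0, 250, 500, 500), (300, 400, 20, 20)]
dwell = [total_dwell_time(p, in_logo) for p in (p1, p2)]        # [350, 400]
ttff = [time_to_first_fixation(p, in_logo) for p in (p1, p2)]   # [0, 300]
```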

AOI analysis allows eye tracking data to be evaluated quantitatively. It is used, for example, to assess the distribution of attention within a stimulus such as a website. It is also particularly suitable for comparing eye tracking recordings in A/B tests, for example for different stimuli or when two groups of test subjects are to be compared.
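For an A/B comparison, a measure such as total dwell time can be compared between two groups with a statistical test. The text does not prescribe one; as an assumption, the sketch uses a simple two-sided permutation test on the difference of the group means, which requires no distributional assumptions. The dwell times are invented for illustration.

```python
import random


def permutation_test(group_a: list[float], group_b: list[float],
                     n_permutations: int = 10_000, seed: int = 0) -> tuple[float, float]:
    """Two-sided permutation test for a difference in mean values between two groups.

    Returns (observed mean difference a - b, p-value).
    """
    def mean(values):
        return sum(values) / len(values)

    rng = random.Random(seed)
    observed = mean(group_a) - mean(group_b)
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)  # randomly reassign participants to the two groups
        diff = mean(pooled[:n_a]) - mean(pooled[n_a:])
        if abs(diff) >= abs(observed) - 1e-12:  # small tolerance for rounding
            extreme += 1
    # The +1 terms count the observed assignment itself, so p is never exactly 0.
    return observed, (extreme + 1) / (n_permutations + 1)


# Hypothetical total dwell times (ms) on the same AOI for two page layouts.
layout_a = [1000, 1100, 1050, 980, 1020, 1070]
layout_b = [400, 450, 430, 410, 390, 420]
diff, p = permutation_test(layout_a, layout_b)
```

With small groups like these, exact enumeration of all group assignments would also be feasible; random permutations keep the sketch usable for larger samples.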

Application areas

Usability

The use of eye tracking analyses in software ergonomics is widespread. In usability tests, the perception of test subjects is analyzed while they perform tasks with software or on a website. In this way, problems, for example when searching for information or interacting, can be identified and the software products optimized.

Medicine

The success of laser treatment for refractive errors (ametropia) is due, among other things, to the use of eye trackers, which ensure that the laser treats the cornea at the planned location and which monitor eye movements. If necessary, the laser is readjusted, or switched off until the eye is back in the correct position. Other systems are used in neurology, balance research, and the study of oculomotor function and misalignments of the eyes. Eye trackers are also becoming increasingly important in psychiatry, where they raise hopes of more reliable and earlier diagnoses in the future (e.g. for autism or ADHD).
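The monitoring logic described here can be illustrated with a deliberately simplified sketch: the tracker reports the current eye displacement, the laser spot is shifted by that displacement, and firing pauses when the displacement exceeds a tolerance. The 1 mm tolerance and all names are hypothetical; clinical systems are considerably more involved.

```python
import math
from typing import Optional

Vec = tuple[float, float]


def laser_target(planned: Vec, eye_offset: Vec, max_offset_mm: float = 1.0) -> Optional[Vec]:
    """Compensated laser target in mm, or None if firing should pause.

    planned: intended spot relative to the eye's reference position (mm).
    eye_offset: current eye displacement reported by the tracker (mm).
    max_offset_mm: hypothetical tolerance beyond which the laser is held.
    """
    dx, dy = eye_offset
    if math.hypot(dx, dy) > max_offset_mm:
        return None  # eye too far off: hold the laser until it returns
    return planned[0] + dx, planned[1] + dy  # shift the spot along with the eye
```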

Neuroscience

Eye trackers are used as comparison systems in functional magnetic resonance imaging (fMRI) and in studies with magnetoencephalography (MEG) or electroencephalography (EEG) systems.

Psychology

- Image perception

- Movement perception

- Analysis of learning progress (training analysis)

Market research

- Package design

- Point of Sale (POS)

- Advertising (print, online analysis, etc.)

Communication science

- Selection and reception behavior of users on websites and when searching for information online

Computer science

- Activity detection, such as reading or searching

- User authentication via Windows Hello

- Virtual reality and augmented reality applications and games; in the latest generations of VR headsets (e.g. HTC Vive Pro Eye) and AR/MR headsets (e.g. Microsoft HoloLens 2), eye trackers are integrated directly for the first time.

Human-machine interaction

- Computer control for people with physical disabilities via an eye mouse. Usually the cursor position represents the point of gaze. An interaction is generally triggered by fixating an object for a certain time (dwell time). Since Windows 10, eye control has been integrated for users with disabilities, on the one hand to control the mouse pointer, on the other hand to operate the built-in on-screen keyboard or to communicate with other people using a text-to-speech function.

- Adapting autostereoscopic displays to the observer's viewpoint. The screen content and/or the beam splitter in front of the screen is changed so that the appropriate partial stereo image reaches the left or right eye.

- Interaction in computer games. More than 100 games (including Tom Clancy's The Division and Far Cry) already support eye tracking functions, such as gaze-controlled aiming, shifting the field of view depending on the point of gaze, or sharp rendering of the area around the point of gaze while the rest of the screen remains slightly blurred (foveated rendering).
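Two of the mechanisms in this list can be sketched briefly: dwell-time selection for an eye mouse, and the resolution choice behind foveated rendering. Both are minimal sketches under assumptions: the 800 ms default dwell time, the conversion of 40 pixels per degree (a small-angle approximation) and the 5° and 15° eccentricity bands are hypothetical values, not taken from Windows or from any particular game.

```python
import math
from typing import Optional


class DwellSelector:
    """Dwell-time selection: report a target once gaze has stayed on it for dwell_ms."""

    def __init__(self, dwell_ms: float = 800.0) -> None:
        self.dwell_ms = dwell_ms
        self._target: Optional[str] = None
        self._since = 0.0
        self._fired = False

    def update(self, t_ms: float, target: Optional[str]) -> Optional[str]:
        """Feed the object currently under the gaze point; returns it when selected."""
        if target != self._target:
            # Gaze moved to a different object (or to empty space): restart the timer.
            self._target, self._since, self._fired = target, t_ms, False
            return None
        if target is not None and not self._fired and t_ms - self._since >= self.dwell_ms:
            self._fired = True  # select only once per continuous dwell
            return target
        return None


def render_scale(tile_center: tuple[float, float], gaze: tuple[float, float],
                 px_per_deg: float = 40.0) -> float:
    """Fraction of full resolution for a screen tile, by its eccentricity from the gaze point."""
    eccentricity_deg = math.dist(tile_center, gaze) / px_per_deg
    if eccentricity_deg < 5.0:
        return 1.0   # around the point of gaze: full resolution
    if eccentricity_deg < 15.0:
        return 0.5
    return 0.25      # far periphery: coarsest rendering


sel = DwellSelector(dwell_ms=500)
events = [(0, "OK"), (300, "OK"), (500, "OK"), (700, "OK"), (800, "Cancel")]
print([sel.update(t, obj) for t, obj in events])  # [None, None, 'OK', None, None]
```

Firing only once per continuous dwell avoids repeated clicks while the user keeps looking at the same button, which is a common usability concern with gaze input.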

Literature

- Alan Dix, Janet Finlay, Gregory Abowd, Russell Beale: Man, Machine, Method. Prentice Hall, New York NY et al. 1995, ISBN 3-930436-10-8.

- Andrew Duchowski: Eye Tracking Methodology. Theory and Practice. 2nd edition. Springer, London 2007, ISBN 978-1-84628-608-7.

- Frank Lausch: Splitscreen as a special form of advertising on TV. An exploratory study on the reception of TV content with the help of eye tracking. AV Akademikerverlag, Saarbrücken 2012, ISBN 978-3-639-39093-3 (also: Ilmenau, Technical University, Master's thesis, 2011).
