Moore's Law

Moore's law ("law" in the sense of an observed regularity rather than a law of nature) states that the complexity of integrated circuits with minimum component cost doubles at regular intervals; depending on the source, the period given is 12, 18 or 24 months.

Gordon Moore, who formulated the law in 1965, understood complexity to mean the number of circuit components on an integrated circuit. Occasionally the law is also stated as a doubling of the integration density, i.e. the number of transistors per unit area. This technical development forms an essential basis of the "digital revolution".

History

Gordon Moore made his observation in an article in Electronics magazine on April 19, 1965, just a few years after the invention of the integrated circuit. The name "Moore's law" was coined around 1970 by Carver Mead. Moore originally predicted an annual doubling, but in 1975, in a speech to the Society of Photo-Optical Instrumentation Engineers (SPIE), he revised this to a doubling every two years (see also economic law). The reason was that the rapid pace of semiconductor development in the early years had slowed. Besides the shrinking of components and the enlargement of wafers, what Moore called "cleverness" played a role in the early years, namely the art of integrating components on the chip intelligently. By the 1970s, the possibilities of this cleverness had largely been exhausted. Moore's then Intel colleague David House brought an estimate of 18 months into play; this is the most widespread variant of Moore's law today and also forms the framework on which the semiconductor industry bases its development plans several years ahead. In reality, the performance of new computer chips doubles on average about every 20 months. In the media, however, a doubling of the integration density every 18 months is still the figure most often cited.

However, this value refers to data from the mass production of the technology generation that was current at the time. In 2005, for example, chips for the world market were manufactured competitively with structure sizes between 130 and 90 nm. The 65 nm technology (gate length approx. 30 to 50 nm, depending on the technology) was being prepared for mass production, and laboratories were already working on even smaller structures. The first prototype transistors with a gate length of 10 nm had already been manufactured.
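A back-of-the-envelope sketch can connect these structure sizes to the doubling of integration density. It assumes, as an idealization not stated in the text, that transistor density scales with the inverse square of the structure size; real layouts deviate from this, and the function name `density_gain` is hypothetical.

```python
def density_gain(old_nm: float, new_nm: float) -> float:
    """Ideal transistor-density gain when the structure size shrinks from old_nm to new_nm.

    Assumption: density scales with 1 / (structure size)**2. This ignores
    layout, design-rule and cell-height effects of real processes.
    """
    return (old_nm / new_nm) ** 2


# Technology generations named for 2005 in the text
nodes = [130, 90, 65]
for old, new in zip(nodes, nodes[1:]):
    print(f"{old} nm -> {new} nm: density x{density_gain(old, new):.2f}")
```

Under this assumption, each step from 130 nm to 90 nm to 65 nm roughly doubles the density, which is consistent with successive generations being spaced about a factor of 0.7 apart in structure size.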

At the Intel Developer Forum (IDF) in fall 2007, Moore predicted the end of his law: it would probably hold for another 10 to 15 years before a fundamental limit was reached. Only six months later, however, Pat Gelsinger, head of Intel's Digital Enterprise Group, predicted that Moore's law would remain valid until 2029. In October 2009, Martin Strobel, press spokesman for Intel Germany, explained in detail why the company was confident that "Moore's law will be fulfilled for a long time".

Aside from all these technical questions, a closer reading of the original 1965 article reveals that Moore does not actually give any real reason why development should proceed at this speed and in this form. At that time, semiconductor technology was still so new that an extrapolation (e.g. a linear one) of its previous course into the future could hardly have been called serious. Instead, Moore outlines in detail, and with obvious enthusiasm, a very broad scenario of possible future applications in business, administration (and the military), which at the time existed, if at all, only as plans or rough ideas. Right at the beginning of his article he claims that economic pressure would lead to as many as 65,000 components being squeezed onto a single chip by 1975 ("... by 1975 economics may dictate squeezing as many as 65,000 components on a single silicon chip ..."), but he gives no further indication of where, in what form or by whom such economic pressure should be exerted. In retrospect, now that information technology has actually developed this rapidly over several decades, it is hardly possible to adequately appreciate the visionary power Gordon Moore needed to make this forecast.
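The 65,000 figure can be read backwards as plain arithmetic: under the annual doubling Moore originally assumed, the ten years from 1965 to 1975 amount to ten doublings, a factor of 1,024. The sketch below only works through that arithmetic; the variable names are illustrative, and it makes no claim about how Moore actually arrived at his figure.

```python
# Moore's 1965 forecast: up to 65,000 components per chip by 1975,
# combined with the annual doubling he originally assumed.
TARGET_1975 = 65_000
YEARS = 1975 - 1965
DOUBLING_TIME_YEARS = 1.0  # Moore's original 1965 assumption

doublings = YEARS / DOUBLING_TIME_YEARS
growth = 2 ** doublings
implied_1965 = TARGET_1975 / growth  # starting value consistent with the forecast

print(f"{doublings:.0f} doublings -> growth factor {growth:.0f}")
print(f"implied starting point in 1965: about {implied_1965:.0f} components")
```

The forecast therefore corresponds to a starting point of roughly 60 components per chip in 1965, which shows how strongly the prediction hinged on the assumed doubling period.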

Interpretation

Moore's law is not a scientific law of nature but a rule of thumb based on an empirical observation. At the same time, it can be described as a "self-fulfilling prophecy", since various branches of industry are involved in developing better microchips. They have to agree on common milestones (e.g. the optical industry with improved lithographic methods) in order to work economically. The formulation of Moore's law has changed significantly over time. While Moore spoke of the number of components on an integrated circuit, today the law usually refers to the number of transistors on an integrated circuit, sometimes even to the number of transistors per unit area.

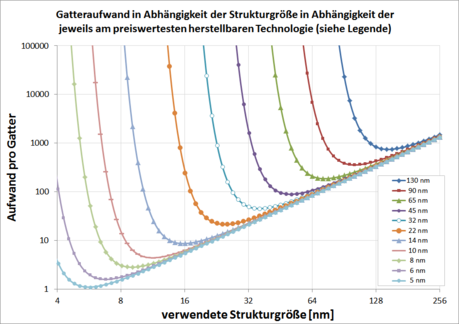

Moore found that, for a given manufacturing process, the cost per circuit component rose both when the number of components per circuit decreased and when it increased. With few components, the available material was not fully used; with many components, experimental methods were needed that were not yet economically viable. He therefore related his observation exclusively to the respective cost optimum, i.e. the manufacturing process and the number of components per circuit for which the cost per circuit component was lowest. In theory, this clearly specifies which manufacturing process and which computer chip would have to be examined each year to check Moore's law.
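To make the idea of a cost optimum concrete, the following toy model (an assumption for illustration, not a formula from Moore's article) combines the two effects described above: a fixed per-circuit cost spread over more components, and a yield that falls as circuits grow. With yield modeled as exp(-n / n0), the cost per working component is proportional to exp(n / n0) / n, which has its minimum at n = n0. All parameter values are hypothetical.

```python
import math


def cost_per_component(n: int, circuit_cost: float = 1.0, yield_scale: float = 50.0) -> float:
    """Hypothetical cost per working component for a circuit with n components.

    Toy model (assumption, not Moore's own):
    - circuit_cost / n: a fixed per-circuit cost spread over n components,
      so very small circuits leave material unused and are expensive per component;
    - yield exp(-n / yield_scale): larger circuits fail more often, so each
      working chip costs more attempts.
    """
    yield_fraction = math.exp(-n / yield_scale)
    return circuit_cost / (n * yield_fraction)


# Scan candidate circuit sizes and pick the one with the lowest cost per component.
candidates = range(1, 501)
best_n = min(candidates, key=cost_per_component)
print(f"cost optimum at n = {best_n} components")
for n in (10, best_n, 200):
    print(n, round(cost_per_component(n), 4))
```

In this model the optimum moves to larger circuits as `yield_scale` grows, which is one way to picture process improvements shifting the cost optimum toward more complex chips over time.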

The different formulations sometimes distort Moore's original observation beyond recognition. Even the varying interpretation of the doubling period as 12, 18 or 24 months causes considerable differences. Since computer chips vary greatly in size, it also makes a difference whether one considers the number of transistors per chip or per unit area. Omitting the cost optimum ultimately detaches the law from its original meaning altogether: without cost optimization, any manufacturing process and any circuit can be used to confirm Moore's law, and in this loose formulation it is irrelevant whether the chip is a commercially available processor, extremely expensive high technology or an experimental circuit not yet on the market. Because of the different versions in circulation, Moore's law has lost much of its objective meaning.
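How large the differences between the three doubling periods are becomes clear from the growth each one implies over ten years. This is simple arithmetic on the periods named in the text; the function name is illustrative.

```python
DECADE_MONTHS = 120


def growth_factor(months: float, doubling_months: float) -> float:
    """Factor by which complexity grows over `months` for a given doubling period."""
    return 2 ** (months / doubling_months)


for period in (12, 18, 24):
    factor = growth_factor(DECADE_MONTHS, period)
    print(f"doubling every {period} months: x{factor:,.0f} per decade")
```

Over a single decade, the 12-month and 24-month readings already differ by a factor of 32 (1,024 versus 32), so the same "law" can predict very different chips.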

Notation

In Moore's law, the complexity $N$ follows an exponential function of time $t$:

$$N(t) = N(t_0) \cdot 2^{(t - t_0)/T_2}$$

The growth rate $r$ is the reciprocal of the doubling time $T_2$, multiplied by the constant $\ln 2$:

$$r = \frac{\ln 2}{T_2}$$

This relationship can also be written in the abbreviated form

$$N(t) = N(t_0) \cdot e^{r (t - t_0)}$$

Various assumptions are used for the doubling time $T_2$. A frequently used value is $T_2 = 2$ years, in which case $r \approx 0.35$ per year.
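The two forms of the growth law, $N(t) = N(t_0) \cdot 2^{(t - t_0)/T_2}$ and $N(t) = N(t_0) \cdot e^{r (t - t_0)}$ with $r = \ln 2 / T_2$, can be checked against each other numerically. The sketch assumes $T_2 = 2$ years and a hypothetical starting value of 1,000 components; the function names are illustrative.

```python
import math


def growth_rate(doubling_time: float) -> float:
    """Growth rate r = ln(2) / T_2, in the time unit used for T_2."""
    return math.log(2) / doubling_time


def complexity_pow2(n0: float, t: float, t0: float, doubling_time: float) -> float:
    """N(t) = N(t0) * 2**((t - t0) / T_2)."""
    return n0 * 2 ** ((t - t0) / doubling_time)


def complexity_exp(n0: float, t: float, t0: float, doubling_time: float) -> float:
    """N(t) = N(t0) * exp(r * (t - t0)), with r = ln(2) / T_2."""
    return n0 * math.exp(growth_rate(doubling_time) * (t - t0))


T2 = 2.0  # doubling time in years
print(f"r = {growth_rate(T2):.4f} per year")
# Both forms agree, e.g. for a hypothetical chip with 1,000 components at t0 = 0:
print(complexity_pow2(1000, 10, 0, T2), complexity_exp(1000, 10, 0, T2))
```

With $T_2 = 2$ years, the rate comes out at about 0.3466 per year, matching the rounded value of 0.35, and after ten years both forms give a factor of 32.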

Computing power

Moore's law does not imply that the computing power of a computer increases linearly with the number of transistors on its chip. In modern processors, more and more transistors are used for integrated memory (cache), which contributes to computing power only passively, by accelerating access to frequently needed data. As an example, consider two processors from the Pentium III series: a "Katmai" with a clock frequency of 500 MHz and an external L2 cache, and a "Coppermine" in the 1 GHz version with an integrated L2 cache. The 1 GHz Coppermine has twice the clock frequency of the 500 MHz Katmai and even three times as many transistors, yet these otherwise comparable processors differ in performance only by a factor of 2.2 to 2.3.

| Processor | Transistors | SPEC score (integer) | SPEC score (floating point) |
|---|---|---|---|
| Pentium III 500 MHz (external L2 cache) | 9.5 million | 20.6 | 14.7 |
| Pentium III 1000 MHz (internal L2 cache) | 28.5 million | 46.8 | 32.2 |
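The ratios quoted in the text can be recomputed directly from the table. The snippet only restates the table's figures and the two clock frequencies; the dictionary keys are illustrative.

```python
# Figures from the table (Pentium III "Katmai" 500 MHz vs. "Coppermine" 1 GHz)
katmai = {"clock_mhz": 500, "transistors_m": 9.5, "spec_int": 20.6, "spec_fp": 14.7}
coppermine = {"clock_mhz": 1000, "transistors_m": 28.5, "spec_int": 46.8, "spec_fp": 32.2}

ratios = {key: coppermine[key] / katmai[key] for key in katmai}
for key, ratio in ratios.items():
    print(f"{key:>14}: x{ratio:.2f}")
```

The transistor count triples and the clock doubles, yet the SPEC scores rise only by a factor of about 2.2 (floating point) to 2.3 (integer).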

In multi-core processors, several processor cores that work in parallel are combined on one chip to deliver more performance. Here, the number of transistors is doubled mainly by doubling the number of processor cores. Again, computing power does not double, because running the cores in parallel requires additional coordination, which in turn reduces performance (see scalability). In addition, not all parts of the operating system and of applications can be parallelized, so it is difficult to fully utilize all cores at the same time. Amdahl's law provides an introduction to this topic.
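Amdahl's law quantifies the effect of program parts that cannot be parallelized. The sketch implements its standard formula $S = 1 / ((1 - p) + p / n)$, where $p$ is the parallelizable fraction of the run time and $n$ the number of cores; the 90 % workload is a hypothetical value. It does not model the coordination overhead mentioned above, so real speedups would be lower still.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Amdahl's law: S = 1 / ((1 - p) + p / n)."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / cores)


# Hypothetical workload: 90 % of the run time parallelizes perfectly.
for n in (1, 2, 4, 8, 64):
    print(f"{n:>2} cores: speedup {amdahl_speedup(0.9, n):.2f}")
```

Doubling from one to two cores yields a speedup of only about 1.82 here, and even 64 cores stay below the upper bound of $1 / (1 - p) = 10$.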

Limits

Besides criticism of misinterpretations of the law itself, there have always been doubts that the trend would continue for long; so far, these doubts have not been confirmed. Rather, from the 1990s onward Moore's law began to act as a self-fulfilling prophecy, since plans coordinating the development work of hundreds of suppliers were based on it. Technological hurdles were overcome just in time, at ever-increasing capital expenditure (see technology nodes). If the cost of developing and manufacturing integrated circuits grows faster than the integration density, the investments may soon no longer pay off. This is likely as physical limits are approached; because of the quantum-mechanical tunneling current, these limits lie at 2 to 3 nm. As of 2016, Intel manufactures processors with 14 nm structures. The company had expected to bring 10 nm technology to market by the end of 2015, but delivery is likely to be delayed by two years. The roadmap published in 2016 no longer follows Moore's law.

Numerous approaches to replacing traditional semiconductor technology are currently being investigated. Candidates for fundamentally new technologies include research into nanomaterials such as graphene, three-dimensional integrated circuits (which increase the number of transistors per volume rather than just per area), spintronics and other forms of multi-valued logic, as well as low-temperature and superconducting computers, optical computers and quantum computers. With all these technologies, computing power or storage density would increase without increasing transistor density in the conventional sense, so Moore's law would formally lose its validity, though not necessarily in terms of its effects.

Limits of user benefit

On the application side, as integration density increases, bottlenecks become apparent elsewhere that cannot be resolved through further integration. In the most demanding computing applications (especially numerical flow simulation on computers with many cores), a clear departure from Moore's law has been observed since around 2003 (see speedup): the time required per finite volume (cell) and per solver iteration has decreased only marginally, if at all, since then. The reason is the von Neumann bottleneck. In fact, many integrated circuits are not even operated at this limit, so higher computing power would not immediately translate into a benefit for the user.

Literature

- Scott Hamilton: Taking Moore's law into the next century. In: Computer. Vol. 32, no. 1, 1999, pp. 43-48, doi:10.1109/2.738303 (English).

- The Technical Impact of Moore's Law. In: M. Y. Lanzerotti (ed.): IEEE Solid-State Circuits Society Newsletter. Vol. 20, no. 3, 2006 (English, ieee.org [PDF; 1.3 MB]).

- R. R. Schaller: Moore's law: past, present and future. In: IEEE Spectrum. Vol. 34, no. 6, 1997, pp. 52-59, doi:10.1109/6.591665 (English).

- Christoph Drösser: The how-quickly-technology-scrap-formula. In: Die Zeit. No. 16/2005, April 14, 2005.

Web links

- The Silicon Engine | 1965: "Moore's Law" Predicts the Future of Integrated Circuits. In: Computer History Museum (English).

- Ray Kurzweil: The Law of Accelerating Returns. In: kurzweilai.net. March 7, 2001 (English; article in which Kurzweil predicts the acceleration of technological development based on Moore's law).

- Jürgen Kuri: 40 years ago: Electronics printed Moore's law. In: Heise online. April 19, 2005 (article about the background and circumstances of the publication).

- Ilkka Tuomi: The Lives and Death of Moore's Law. In: First Monday. Volume 7, number 11, November 4, 2002 (peer-reviewed, English).

- Bernd Kling: Nvidia CEO: Moore's Law has come to an end. In: ZDNET. January 10, 2019.

References

- R. Hagelauer, A. Bode, H. Hellwagner, W. Proebster, D. Schwarzstein, J. Volkert, B. Plattner, P. Schulthess: Informatik-Handbuch. 2nd edition. Pomberger, Munich 1999, ISBN 3-446-19601-3, pp. 298-299.

- Robert Schanze: Moore's Law: Definition and End of Moore's Law - Simply Explained. In: GIGA. February 25, 2016, accessed October 15, 2019.

- G. E. Moore: Cramming more components onto integrated circuits. In: Electronics. Vol. 38, no. 8, 1965, pp. 114-117 (intel.com [PDF]).

- Michael Kanellos: Moore's Law to roll on for another decade. In: CNET News. February 11, 2003.

- R. Chau, B. Doyle, M. Doczy, S. Datta, S. Hareland, B. Jin, J. Kavalieros, M. Metz: Silicon nano-transistors and breaking the 10 nm physical gate length barrier. In: Device Research Conference, 2003. 2003, pp. 123-126, doi:10.1109/DRC.2003.1226901.

- We are confident that we will be able to comply with Moore's Law for a long time to come. In: MacGadget. October 19, 2009, accessed June 20, 2011.

- Gordon Moore describes his law as a "self-fulfilling prophecy", see Gordon Moore says aloha to Moore's Law. In: The Inquirer. April 13, 2005, accessed March 4, 2009.

- M. Mitchell Waldrop: The chips are down for Moore's law. News feature, Nature 530, February 2016, doi:10.1038/530144a.

- Stefan Betschon: Computer science needs a fresh start. In: Neue Zürcher Zeitung. International edition, March 2, 2016, p. 37.

- Moore's Law, Part 2: More Moore and More than Moore (Memento of the original dated November 14, 2013 in the Internet Archive).

- Rainald Löhner, Joseph D. Baum: On maximum achievable speeds for field solvers. In: International Journal of Numerical Methods for Heat & Fluid Flow. 2014, pp. 1537-1544, doi:10.1108/HFF-01-2013-0016 (English).
