Computer vision

Computer vision is a discipline at the boundary between computer science and engineering. It seeks to process and analyze images recorded by cameras in a wide variety of ways in order to understand their content or to extract geometric information. The English term is a technical term that is usually left untranslated in German, where it means roughly "computer-based vision". Typical areas of application include the autonomous navigation of robots (driver assistance systems), the film industry for creating virtual worlds (virtual reality), the games industry for immersion and interaction in virtual spaces (augmented reality), the detection and tracking of objects (e.g. pedestrians), and the registration of medical CT images and the detection of diseased tissue. The field draws on statistical (probabilistic) methods as well as methods from image processing, projective geometry, artificial intelligence and computer graphics. It is also closely related to neighboring fields such as photogrammetry, remote sensing and cartography.

History

The first attempts to understand a scene by extracting edges and analyzing their topological structure date back to around the 1960s. The extraction of various features, such as edges and corners, was an active area of research from the 1970s to the 1980s. In the early 1980s, researchers investigated how variations in shading are caused by topographic (height) changes, laying the foundation for photometry and for 3D reconstruction from shading. At the same time, the first feature-based stereo correspondence algorithms and intensity-based algorithms for computing optical flow were developed. In 1979, the first work began on simultaneously recovering the 3D structure and the camera motion (structure from motion).

With the advent of digital cameras in the 1980s, more and more applications were researched and developed. Image pyramids were first used by Rosenfeld in 1980 as a coarse-to-fine strategy for finding homologous pixels (correspondence search). The concept of scale space also builds on image pyramids; it has been studied extensively and forms the basis of modern methods such as SIFT (Scale-Invariant Feature Transform).

In the 1990s, projective invariants began to be investigated in order to solve problems such as structure-from-motion and projective 3-D reconstruction without knowledge of camera calibration. At the same time, efficient algorithms such as factorization techniques and global optimization algorithms were developed.

The field received an enormous boost as cameras became inexpensive and PCs became more and more powerful.

Complexity

The tasks are often inverse problems in which one attempts to recover the complexity of the three-dimensional world from two-dimensional images. Computer vision tries to reconstruct properties such as color, lighting or shape from images in order, for example, to recognize faces, to classify agricultural areas or to recognize complex objects (cars, bicycles, pedestrians). A person does all this effortlessly, but it is extremely difficult to teach a computer to do the same.

Modeling our visible world in its entirety is much more difficult than, for example, generating an artificial voice by computer (Szeliski 2010, p. 3). Scientists who do not work in this field often underestimate how difficult the problems are and how error-prone their solutions can be. On the one hand, this means that problems often require tailor-made solutions. On the other hand, this severely limits the versatility of those solutions. This is one reason why no single solution exists for any given task, only many different solutions depending on the requirements, and it also explains why so many competing solutions exist in the professional world.

Overview of the methodology

The actual task of computer vision is to teach a camera connected to a computer to see and understand. This requires various steps, and different methods exist depending on the task. They are briefly outlined here.

First, a recorded image is needed (section Image creation), which usually has to be improved (e.g. brightness and contrast compensation). Next, features such as edges or corner points are usually extracted (section Feature extraction). Depending on the task, corner points are used, for example, for the correspondence search in stereo images. Other geometric elements such as straight lines and circles can be recognized by means of the Hough transformation (section Hough transformation). Certain applications use image segmentation to mask out uninteresting image components such as the sky or a static background (section Image segmentation).

If a camera is to be used for measurement, the parameters of the camera model (interior orientation) are usually determined by a camera calibration (section Camera calibration). To estimate the relative position of the two images of a stereo pair from the image content, various algorithms are used to compute the fundamental matrix (section Epipolar geometry).

A 3D reconstruction first requires homologous (corresponding) pixels (section Correspondence problem). The 3D points can then be determined by forward intersection (triangulation) (section 3D reconstruction). There are also various other ways to determine the three-dimensional shape of an object. In English usage, the term Shape-from-X has become established for these; the X stands for one of the methods (section Shape-from-X).

Image creation

Image creation describes the complex process of image acquisition, starting with the electromagnetic radiation, its interaction with the surface (absorption and reflection), the optical imaging, and the detection by camera sensors.

Pinhole camera model

Of the various ways to model a camera, the pinhole camera is the most commonly used. It is an idealized camera model that realizes the geometric model of central projection. The mapping formulas follow directly from the intercept theorem (similar triangles).
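The following minimal sketch illustrates the central projection of the pinhole model. It assumes points given in a camera coordinate system whose Z-axis points along the viewing direction and an image plane at distance `c` (the camera constant); the function name and the sample values are chosen for illustration only.

```python
import numpy as np

def project_pinhole(points_cam, c):
    """Project 3D points (camera frame, Z along the viewing axis) onto the image plane at distance c."""
    points_cam = np.asarray(points_cam, dtype=float)
    X, Y, Z = points_cam[:, 0], points_cam[:, 1], points_cam[:, 2]
    if np.any(Z <= 0):
        raise ValueError("all points must lie in front of the camera (Z > 0)")
    # Similar triangles: x / c = X / Z and y / c = Y / Z
    return np.column_stack((c * X / Z, c * Y / Z))

# Hypothetical sample points in metres; the first two lie on the same projection ray.
points = np.array([[1.0, 2.0, 10.0],
                   [2.0, 4.0, 20.0],
                   [-1.0, 0.5, 5.0]])
image_points = project_pinhole(points, c=0.05)
print(image_points)
```

Points on the same projection ray map to the same image point, which is why depth cannot be recovered from a single image without further information.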

Real camera

A real camera differs from the pinhole camera model in many ways. Lenses are needed to capture more light, and a light-sensitive sensor is needed to capture and store the image. This leads to various deviations, some of which have physical causes while others result from unavoidable manufacturing inaccuracies. Both lead to distortions in the recorded image, caused partly by the sensor and partly by the lens.

The sensor introduces color deviations (radiometric or photometric deviations) and geometric deviations (distortion). Deviations caused by the objective, i.e. by its individual lenses, are called aberrations. They likewise lead to color deviations (e.g. color fringes) and geometric deviations (distortion).

Atmospheric refraction causes further deviations. At close range, however, the effect is so small that it can usually be neglected.

Digital sensors

Detecting light requires light-sensitive sensors that convert light into electric current. As early as 1970, a CCD sensor (charge-coupled device) was developed for image recording. Arranging the sensor elements in a row yields a line sensor; arranging them in a plane yields an area sensor. Each individual element is referred to as a pixel (picture element).

An alternative area sensor is the CMOS sensor (complementary metal-oxide-semiconductor).

Such a sensor is usually sensitive beyond the spectrum of visible light, into the ultraviolet range and far into the infrared range. To record a color image, a separate sensor is needed for each of the primary colors red, green and blue (RGB). This can be done by splitting the light onto three different sensor surfaces (see figure). Another possibility is to give neighboring pixels different color filters. The pattern developed by Bayer (Bayer pattern) is usually used for this.

In addition, other color channels are in use, mostly for scientific purposes.

Camera calibration

In a narrower sense, camera calibration means determining the interior orientation, i.e. all model parameters that describe the camera geometry. These usually include the coordinates of the principal point, the camera constant and the distortion parameters. In a broader sense, camera calibration also includes the simultaneous determination of the exterior orientation. Since both usually have to be determined anyway, at least when calibrating with known 3D coordinates, the two senses are often used synonymously in computer vision. In photogrammetry, on the other hand, it is still quite common to carry out a laboratory calibration (e.g. using a goniometer), in which the interior orientation can be determined directly.

Most often, a camera is calibrated using a known test field or calibration frame. The 3D coordinates are given, and the corresponding image coordinates are measured. Using the known mapping relationships, a system of equations can then be set up to determine the parameters of the mapping model. A suitable camera model is chosen depending on the accuracy requirements. An exact model is shown in the figure.

Optical terms in cameras

A real camera differs in many ways from the pinhole camera model. It is therefore necessary to define some optical terms.

A lens usually contains a diaphragm (or the lens mount, which has the same effect), which raises the question of where the projection center lies. Depending on the side from which one looks into the lens, one sees a different image of the diaphragm. Both images can be constructed according to the rules of geometric optics. Light enters the lens from the object space (from the left in the figure) and produces the entrance pupil (EP) as an image of the diaphragm. It exits toward the image space and produces the exit pupil (AP). The centers of the entrance pupil and the exit pupil lie on the optical axis and are the points through which the chief ray (which corresponds to the projection ray in the pinhole camera model) passes unrefracted. The center of the EP is therefore the projection center, and the center of the AP is the image-side projection center.

The image-side projection center is used to relate the camera coordinate system to the image coordinate system. Projected perpendicularly into the image plane, it yields the principal point. The distance between the image-side projection center and the principal point is defined as the camera constant. Because of unavoidable manufacturing inaccuracies, the extension of the optical axis is not exactly perpendicular to the image plane; its intersection with the image plane is the point of symmetry of the distortion (also called the center of distortion). In practice, however, the center of distortion is often equated with the principal point in the computation. The two points usually lie close together, so estimating them separately leads to a strong correlation between them, and the precision of the camera calibration suffers as a result.

To define the recording direction, imagine the principal point projected back into the object space. Because this ray passes through the image-side projection center, it must also pass through the projection center. It is thus effectively a chief ray and, moreover, the only ray that meets the image plane perpendicularly. This ray corresponds to the recording axis and is at the same time the Z-axis of the camera coordinate system.

The angle between the recording axis and the ray to an object point changes as the ray exits into the image space and forms the image point. This change in angle is an expression of distortion.

Distortion correction

Distortion comprises all deviations from the ideal pinhole camera model that are caused by the lens. The error must therefore be corrected so that the images appear as if taken by a perfect linear camera (pinhole camera). Since lens distortion occurs when the object point is originally mapped onto the image, the resulting error is modeled with the following equation:

$$\begin{pmatrix} x_d \\ y_d \end{pmatrix} = L(\tilde{r}) \begin{pmatrix} \tilde{x} \\ \tilde{y} \end{pmatrix}$$

Here

- $(\tilde{x}, \tilde{y})$ are the ideal image points without distortion,

- $(x_d, y_d)$ are the recorded image coordinates,

- $\tilde{r} = \sqrt{\tilde{x}^2 + \tilde{y}^2}$ is the radial distance from the center of the distortion (usually the center of the image) and

- $L(\tilde{r})$ is the distortion factor, which depends only on $\tilde{r}$.

The correction is then made using

$$\hat{x} = x_c + L(r)\,(x - x_c), \qquad \hat{y} = y_c + L(r)\,(y - y_c)$$

where $(x, y)$ and $(\hat{x}, \hat{y})$ are the measured and corrected image coordinates and $(x_c, y_c)$ is the center of the distortion, with $r^2 = (x - x_c)^2 + (y - y_c)^2$. $L(r)$ is only defined for positive $r$. It is usually approximated by a Taylor expansion. Because of the symmetry of the distortion curve with respect to the center of the distortion, only odd powers are necessary (hence also called the Seidel series). $L(r)$ is then

$$L(r) = 1 + \kappa_1 r + \kappa_3 r^3 + \kappa_5 r^5 + \dots$$

In addition, there is a close correlation between the first term $\kappa_1 r$ and the camera constant $c$, because $r = c \cdot \tan\tau$, where $\tau$ is the angle between the recording axis and the imaging ray. The first term is therefore often removed, which can significantly increase the precision of the adjustment.

The distortion coefficients are part of the camera's interior calibration. They are usually determined with iterative adjustment methods.

One possibility is to use straight lines, for example suspended plumb lines. After correction, these must be imaged as straight lines. The solution is then found by minimizing a cost function (for example, the distance between the line joining the end points of an imaged line and its midpoint). This method is also known as plumb-line calibration.

Within the accuracy requirements, the principal point is usually taken as the center of the distortion. The distortion correction together with the camera calibration matrix thus completely describes the mapping of an object point onto an image point.
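The radial correction described in this section can be sketched as follows. The sketch assumes that a corrected point is obtained by scaling the offset from the center of distortion with a factor L(r), and that L(r) is 1 plus a polynomial in the radial distance r. The function names, the dictionary of coefficients and all numeric values are hypothetical and serve only as an illustration; real coefficients come from a calibration.

```python
import numpy as np

def distortion_factor(r, kappas):
    """L(r) = 1 + sum of kappa * r**power over the given (power, kappa) pairs."""
    L = np.ones_like(r)
    for power, kappa in kappas.items():
        L = L + kappa * r**power
    return L

def correct_points(xy, center, kappas):
    """Scale each offset from the center of distortion by L(r)."""
    xy = np.asarray(xy, dtype=float)
    center = np.asarray(center, dtype=float)
    offset = xy - center
    r = np.linalg.norm(offset, axis=1)
    return center + distortion_factor(r, kappas)[:, None] * offset

# Hypothetical values: center of distortion in pixels and a single cubic coefficient.
# The linear term is left out, as is often done because it correlates with the camera constant.
center = np.array([320.0, 240.0])
kappas = {3: 1e-9}
measured = np.array([[320.0, 240.0], [420.0, 240.0], [320.0, 40.0]])
corrected = correct_points(measured, center, kappas)
print(corrected)
```

A point at the center of distortion stays where it is, and the correction grows with the distance from the center, which is the behavior expected of a purely radial model.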

Image processing (filtering, smoothing, noise reduction)

Objective: exposure correction, color balancing, suppression of image noise, improvement of sharpness

Principle: linear filters that convolve a signal with a kernel (e.g. forming differences between neighboring points)

Different kernels and their effects (difference, Gaussian)
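As an illustration of linear filtering, the sketch below convolves a small image with two kernels: a difference kernel that responds to brightness changes and a 3x3 binomial kernel as a simple approximation of a Gaussian. The function `convolve2d`, the edge-replicating border handling and the test image are assumptions made for this example; the kernels must have odd side lengths.

```python
import numpy as np

def convolve2d(image, kernel):
    """Same-size 2D convolution with replicated borders (odd kernel sizes only)."""
    image = np.asarray(image, dtype=float)
    kernel = np.asarray(kernel, dtype=float)
    kh, kw = kernel.shape
    ph, pw = kh // 2, kw // 2
    padded = np.pad(image, ((ph, ph), (pw, pw)), mode="edge")
    flipped = kernel[::-1, ::-1]  # convolution flips the kernel
    out = np.zeros_like(image)
    for i in range(kh):
        for j in range(kw):
            out += flipped[i, j] * padded[i:i + image.shape[0], j:j + image.shape[1]]
    return out

# Difference kernel: central difference along the column direction.
difference = np.array([[1.0, 0.0, -1.0]]) / 2.0
# Binomial kernel: a small approximation of a Gaussian; its weights sum to 1.
gaussian = np.outer([1.0, 2.0, 1.0], [1.0, 2.0, 1.0]) / 16.0

# Test image with a vertical step edge between columns 2 and 3.
image = np.zeros((5, 6))
image[:, 3:] = 1.0

gradient = convolve2d(image, difference)
smoothed = convolve2d(image, gaussian)
print(gradient[0])
print(smoothed[0])
```

The difference kernel is non-zero only around the edge, while the smoothing kernel spreads the step over neighboring pixels and leaves constant regions unchanged.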

Feature extraction and pattern recognition

Edge detection

Various image processing algorithms are used to extract edges, for example in order to derive geometric models.

Corner detection (point detection)

Image processing methods can also be used to extract points that stand out clearly from their surroundings. To find such points, gradient operators are used that examine neighboring pixels along two principal directions for changes in brightness. A good point is characterized by a gradient that is as large as possible along both principal directions. Mathematically, this can be described by an error ellipse, which should be as small as possible. The axes of the error ellipse are determined by computing the eigenvalues of the covariance matrix (see Förstner operator). Points identified in this way serve a variety of purposes, including the estimation of the fundamental matrix (see section Epipolar geometry).
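The idea of requiring a large gradient in both principal directions can be sketched with the matrix of summed gradient products in a small window. This is not the Förstner operator itself but a simplified relative of it: the sketch uses the smaller eigenvalue of that matrix as the point strength, which is large exactly when the corresponding error ellipse is small. The function name, the window size and the synthetic image are assumptions for illustration.

```python
import numpy as np

def corner_strength(image, window=1):
    """Smaller eigenvalue of the summed gradient products in a (2*window+1)^2 neighborhood."""
    image = np.asarray(image, dtype=float)
    gy, gx = np.gradient(image)  # derivatives along rows (y) and columns (x)
    products = {"xx": gx * gx, "xy": gx * gy, "yy": gy * gy}
    size = 2 * window + 1
    sums = {}
    for key, p in products.items():
        padded = np.pad(p, window, mode="constant")
        acc = np.zeros_like(p)
        for dy in range(size):
            for dx in range(size):
                acc += padded[dy:dy + p.shape[0], dx:dx + p.shape[1]]
        sums[key] = acc
    # Closed-form eigenvalues of the symmetric 2x2 matrix [[a, b], [b, c]].
    a, b, c = sums["xx"], sums["xy"], sums["yy"]
    half_trace = (a + c) / 2.0
    root = np.sqrt(((a - c) / 2.0) ** 2 + b ** 2)
    return half_trace - root

# Synthetic image: a bright quadrant whose corner lies at row 10, column 10.
image = np.zeros((20, 20))
image[10:, 10:] = 1.0

strength = corner_strength(image)
best = tuple(int(v) for v in np.unravel_index(np.argmax(strength), strength.shape))
print(best)
```

Along a straight edge only one gradient direction is present, so the smaller eigenvalue vanishes there; it becomes large only where both directions contribute.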

Image segmentation

Image segmentation tries to identify coherent image regions. Methods of feature extraction are combined with image regions that have approximately the same color. A prominent example is the watershed transformation, which can be used, for example, to extract individual bricks from a house wall. Image segmentation is used, among other things, to classify different areas in remote sensing, where it makes it possible, for example, to distinguish different stages of plant growth. In medicine, it can support the detection of diseased tissue in X-ray or CT images.

Hough transformation

The Hough transformation makes it possible to detect lines and circles. It is used, for example, to identify lane markings (lane departure warning) or street signs.
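A minimal sketch of line detection with the Hough transformation follows. It assumes a binary edge mask as input and uses the normal form of a line, rho = x cos(theta) + y sin(theta); every edge pixel votes for all lines passing through it, and the strongest cell of the accumulator is taken as the detected line. The function name and the resolution of one degree and one pixel are choices made for this example.

```python
import numpy as np

def hough_lines(edge_mask, n_theta=180):
    """Accumulate votes in (rho, theta) space for all set pixels of a binary mask."""
    ys, xs = np.nonzero(edge_mask)
    height, width = edge_mask.shape
    diag = int(np.ceil(np.hypot(height, width)))  # largest possible |rho|
    thetas = np.deg2rad(np.arange(n_theta) * 180.0 / n_theta)
    accumulator = np.zeros((2 * diag + 1, n_theta), dtype=int)
    columns = np.arange(n_theta)
    for x, y in zip(xs, ys):
        rhos = np.round(x * np.cos(thetas) + y * np.sin(thetas)).astype(int)
        accumulator[rhos + diag, columns] += 1  # one vote per theta for this pixel
    return accumulator, thetas, diag

# Synthetic edge mask containing one horizontal line at row 20.
mask = np.zeros((50, 50), dtype=bool)
mask[20, :] = True

accumulator, thetas, diag = hough_lines(mask)
rho_index, theta_index = np.unravel_index(np.argmax(accumulator), accumulator.shape)
rho = int(rho_index) - diag
theta_deg = float(np.rad2deg(thetas[theta_index]))
print(rho, theta_deg, accumulator.max())
```

Circles can be handled in the same way with a three-dimensional accumulator over center coordinates and radius, at correspondingly higher cost.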

Object detection

Object recognition is a complex interplay of feature extraction, pattern recognition and self-learning decision algorithms from artificial intelligence. For driver assistance systems, for example, one would like to distinguish pedestrians from other road users such as cars, bicycles, motorcycles and trucks.

Basics of projective geometry

Concept of homogeneous coordinates

Homogeneous coordinates are advantageous for the mathematical description of projective processes. Adding a further component to a two-dimensional point vector produces a three-dimensional vector, so that addition (translation) and multiplication (e.g. rotation and scaling) can both be expressed in a single transformation matrix. Successive transformations can thus be combined into one overall transformation matrix. Besides the compact representation, this avoids rounding errors from intermediate results.
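As a small example of combining successive transformations, the sketch below rotates a 2D point about an arbitrary center by chaining three 3x3 matrices in homogeneous coordinates. The helper functions and the sample values are illustrative assumptions.

```python
import numpy as np

def translation(tx, ty):
    """Homogeneous 3x3 matrix for a 2D translation."""
    return np.array([[1.0, 0.0, tx],
                     [0.0, 1.0, ty],
                     [0.0, 0.0, 1.0]])

def rotation(angle_rad):
    """Homogeneous 3x3 matrix for a 2D rotation about the origin."""
    c, s = np.cos(angle_rad), np.sin(angle_rad)
    return np.array([[c, -s, 0.0],
                     [s, c, 0.0],
                     [0.0, 0.0, 1.0]])

# Rotate by 90 degrees about the point (2, 1):
# shift the center to the origin, rotate, shift back (applied right to left).
T = translation(2.0, 1.0) @ rotation(np.pi / 2) @ translation(-2.0, -1.0)

p = np.array([3.0, 1.0, 1.0])  # the point (3, 1) with the added third component 1
q = T @ p
print(q[:2] / q[2])
```

The single matrix `T` can be applied to any number of points, so the three individual steps never have to be evaluated separately.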

Projective Transformation (Homography)

A projective transformation is often used to map one plane onto another. In English usage, this is known as a homography. A square 3x3 matrix of full rank describes such a one-to-one (invertible) mapping.
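The following sketch applies a homography to the corners of a square and maps them back with the inverse matrix, which illustrates the invertibility that follows from full rank. The matrix entries are arbitrary values chosen for the example, not taken from any real camera setup.

```python
import numpy as np

def apply_homography(H, points):
    """Map 2D points with a 3x3 homography and divide by the third component."""
    points = np.asarray(points, dtype=float)
    homogeneous = np.column_stack((points, np.ones(len(points))))
    mapped = homogeneous @ H.T
    return mapped[:, :2] / mapped[:, 2:3]

# Hypothetical full-rank homography.
H = np.array([[1.0, 0.2, 5.0],
              [0.1, 1.1, -3.0],
              [0.001, 0.002, 1.0]])

square = np.array([[0.0, 0.0], [100.0, 0.0], [100.0, 100.0], [0.0, 100.0]])
warped = apply_homography(H, square)
restored = apply_homography(np.linalg.inv(H), warped)
print(warped)
```

Because the last row of `H` is not (0, 0, 1), parallel sides of the square are generally no longer parallel after the mapping, which distinguishes a projective from an affine transformation.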

Standard imaging model (central projection)

This describes the mapping of an object point into the image.

Correspondence problem (pixel assignment)

The search for associated (homologous) image points between stereo images is referred to in computer vision as the correspondence problem. In English technical usage it is also known as image matching. It is a core problem, and a particularly difficult one, because conclusions about the three-dimensional scene are drawn from its two-dimensional images. There are therefore many reasons why the search for corresponding pixels can fail:

- Perspective distortion causes a surface patch to be imaged with different geometric shapes in the two images.

- Occlusions mean that the corresponding point cannot be found.

- Differences in lighting conditions (brightness and contrast) can also make the assignment more difficult.

- The different viewpoints also lead to differences in how much of the light hitting the surface is reflected toward each camera.

- Repeating patterns can lead to incorrectly assigned pixels.

Accordingly, there are a number of very different methods. A distinction is made between intensity-based (area-based) and feature-based methods. Area-based methods examine small image patches and compare their gray values (brightness values). Feature-based methods first extract features (e.g. corner points) and then compare feature vectors derived from them.
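An area-based method can be sketched as a comparison of small patches using the sum of squared gray-value differences (SSD). The sketch makes two simplifying assumptions that are not stated in the text: the images are rectified, so the search runs along the same image row, and the matching point in the right image lies at the same or a smaller column index. The function name and the synthetic image pair are illustrative.

```python
import numpy as np

def match_along_row(left, right, row, col, half=2, max_disparity=10):
    """Find the column in `right` whose patch best matches the patch around (row, col) in `left`."""
    patch = left[row - half:row + half + 1, col - half:col + half + 1]
    best_col, best_cost = None, np.inf
    for disparity in range(max_disparity + 1):
        c = col - disparity
        if c - half < 0:
            break  # candidate patch would leave the image
        candidate = right[row - half:row + half + 1, c - half:c + half + 1]
        cost = np.sum((patch - candidate) ** 2)  # SSD of the gray values
        if cost < best_cost:
            best_col, best_cost = c, cost
    return best_col, best_cost

# Synthetic pair: random texture, with the right image shifted by 4 columns.
rng = np.random.default_rng(0)
left = rng.random((20, 40))
right = np.roll(left, -4, axis=1)

best_col, best_cost = match_along_row(left, right, row=10, col=20)
print(best_col, best_cost)
```

On a textureless or repetitive image the cost would have several near-equal minima, which is the failure case described in the list above.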

Stereo image processing

Epipolar geometry

Epipolar geometry describes the imaging geometry of a 3D object point in a stereo image pair. The relationship between the image coordinates of corresponding points is described by the fundamental matrix. For a given point in the first image, it can be used to determine the associated epipolar line in the second image, on which the corresponding image point lies.

The fundamental matrix can be estimated from a number of corresponding image points. Two calculation methods are widely used for this purpose: the minimal 7-point algorithm and the 8-point algorithm.
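A minimal sketch of the 8-point algorithm is given below, in the commonly used variant with coordinate normalization. It assumes the convention that a point x1 in the first image and its partner x2 in the second image satisfy x2^T F x1 = 0, so that F x1 is the epipolar line in the second image. The synthetic cameras, the random points and all function names are made up for the example.

```python
import numpy as np

def normalize(points):
    """Shift points to zero mean and scale them to a mean distance of sqrt(2)."""
    centroid = points.mean(axis=0)
    scale = np.sqrt(2) / np.mean(np.linalg.norm(points - centroid, axis=1))
    T = np.array([[scale, 0.0, -scale * centroid[0]],
                  [0.0, scale, -scale * centroid[1]],
                  [0.0, 0.0, 1.0]])
    homogeneous = np.column_stack((points, np.ones(len(points))))
    return homogeneous @ T.T, T

def eight_point(x1, x2):
    """Estimate F with x2^T F x1 = 0 from at least 8 correspondences."""
    n1, T1 = normalize(x1)
    n2, T2 = normalize(x2)
    # Each correspondence contributes one row of the linear system A f = 0.
    A = np.column_stack([n2[:, i] * n1[:, j] for i in range(3) for j in range(3)])
    _, _, Vt = np.linalg.svd(A)
    F = Vt[-1].reshape(3, 3)
    # Enforce rank 2 by zeroing the smallest singular value.
    U, S, Vt = np.linalg.svd(F)
    S[2] = 0.0
    F = U @ np.diag(S) @ Vt
    F = T2.T @ F @ T1  # undo the normalization
    return F / np.linalg.norm(F)

def project(K, R, t, X):
    x = (X @ R.T + t) @ K.T
    return x[:, :2] / x[:, 2:3]

# Synthetic scene: 20 random points seen by two hypothetical cameras.
rng = np.random.default_rng(1)
X = np.column_stack((rng.uniform(-1, 1, 20), rng.uniform(-1, 1, 20), rng.uniform(4, 8, 20)))
K = np.array([[800.0, 0.0, 320.0], [0.0, 800.0, 240.0], [0.0, 0.0, 1.0]])
angle = 0.1
R = np.array([[np.cos(angle), 0.0, np.sin(angle)],
              [0.0, 1.0, 0.0],
              [-np.sin(angle), 0.0, np.cos(angle)]])
t = np.array([-0.5, 0.0, 0.1])

x1 = project(K, np.eye(3), np.zeros(3), X)
x2 = project(K, R, t, X)
F = eight_point(x1, x2)

h1 = np.column_stack((x1, np.ones(len(x1))))
h2 = np.column_stack((x2, np.ones(len(x2))))
residuals = np.abs(np.sum(h2 * (h1 @ F.T), axis=1))  # |x2^T F x1| per pair
epipolar_line = F @ h1[0]  # line (a, b, c) in image 2 with a*x + b*y + c = 0
print(residuals.max())
```

With noisy measurements the residuals no longer vanish, and the linear estimate is typically used as a starting value for a robust or non-linear refinement.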

Image sequence processing (structure from motion)

Based on sparsely distributed pairs of corresponding points (sparse image matching), the fundamental matrix can be estimated in order to determine the relative orientation of the images. This is usually followed by a dense correspondence search (dense image matching). Alternatively, the corresponding points are estimated with the help of global optimization methods.

Shape-from-X

Shape-from-Stereo

This is a brief summary of stereo reconstruction, included to give a complete overview of all methods. For a detailed treatment, see the section 3D reconstruction.

Stereo reconstruction uses two images taken from different viewpoints. Human spatial vision (stereoscopic vision) serves as the model. If the relative orientation of the images is known, corresponding (homologous) pairs of image points can be used to compute the 3D object points by triangulation. The difficult part is finding the correspondences, especially for surfaces with little texture or for occluded areas.
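Triangulation from a pair of corresponding image points can be sketched with the linear (DLT) method. The sketch assumes that both cameras are described by known 3x4 projection matrices; the calibration matrix, the baseline of 0.2 and the test point are hypothetical values.

```python
import numpy as np

def triangulate(P1, P2, x1, x2):
    """Linear triangulation of one 3D point from two 3x4 projection matrices."""
    # Each image coordinate gives one linear equation in the homogeneous 3D point.
    A = np.vstack([
        x1[0] * P1[2] - P1[0],
        x1[1] * P1[2] - P1[1],
        x2[0] * P2[2] - P2[0],
        x2[1] * P2[2] - P2[1],
    ])
    _, _, Vt = np.linalg.svd(A)
    X = Vt[-1]
    return X[:3] / X[3]

def project(P, X):
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]

# Hypothetical stereo rig: identical cameras, the second one shifted by 0.2 along X.
K = np.array([[800.0, 0.0, 320.0], [0.0, 800.0, 240.0], [0.0, 0.0, 1.0]])
P1 = K @ np.hstack((np.eye(3), np.zeros((3, 1))))
P2 = K @ np.hstack((np.eye(3), np.array([[-0.2], [0.0], [0.0]])))

X_true = np.array([0.3, -0.1, 5.0])
X_est = triangulate(P1, P2, project(P1, X_true), project(P2, X_true))
print(X_est)
```

With exact correspondences the two rays intersect and the point is recovered; with measurement noise the SVD yields a least-squares compromise in the algebraic sense.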

Shape-from-Silhouette / Shape-from-Contour

This method uses several images that show the object from different directions in order to derive its geometric shape from its outline (the silhouette). The contour is carved out of a coarse volume, much as a sculptor carves a bust out of a rough block of wood. In English usage, this is also referred to as shape-from-contour or space carving.

The prerequisite for this technique is that the object to be reconstructed (foreground) can be separated from the background. Image segmentation techniques are used for this. The result is a volume represented by voxels, which is called the visual hull.

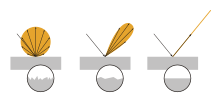

Shape-from-Shading / Photometric Stereo

This method tries to determine the shape of an object from its shading. It is based on two effects: first, the reflection of parallel radiation hitting a surface depends on the surface normal and on the nature of the surface (especially its roughness); second, the brightness seen by the observer (camera) depends on the perspective, more precisely on the angle at which the surface is viewed.

Reflection from a rough surface is called diffuse reflection and is described by Lambert's cosine law (see figure). The direction of the illumination source matters only insofar as the total radiant energy is reduced depending on the angle of incidence. The reflection (the angle of reflection), however, is completely independent of the angle of incidence; it depends only on the angle to the surface normal. Assuming diffuse reflection, the radiation reflected toward the viewer (camera) therefore depends only on the cosine of the angle to the surface normal. If the illuminance is known, this can be exploited to compute the direction of the surface normals.
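The computation of a surface normal from known illumination can be sketched for photometric stereo. The sketch assumes the usual Lambertian image formation model, in which the measured brightness under a distant light source with unit direction l is albedo times the dot product of l and the unit normal n, and it assumes at least three known, non-coplanar light directions. The light directions, the albedo and the normal used to generate the data are invented for the example.

```python
import numpy as np

def estimate_normal(light_dirs, intensities):
    """Solve I_k = albedo * (l_k . n) for the normal and the albedo by least squares."""
    g, *_ = np.linalg.lstsq(light_dirs, intensities, rcond=None)
    albedo = np.linalg.norm(g)  # g is the normal scaled by the albedo
    return g / albedo, albedo

# Three hypothetical unit light directions (one row per light source).
lights = np.array([[0.0, 0.0, 1.0],
                   [0.6, 0.0, 0.8],
                   [0.0, 0.6, 0.8]])

# Synthetic measurement for one pixel, generated from a known normal and albedo.
true_normal = np.array([0.2, -0.1, 1.0])
true_normal /= np.linalg.norm(true_normal)
true_albedo = 0.7
intensities = true_albedo * (lights @ true_normal)

normal, albedo = estimate_normal(lights, intensities)
print(normal, albedo)
```

Repeating this for every pixel gives a field of surface normals, from which the surface itself can be obtained by integration.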

Shape-from-motion / optical flow

With optical flow, a sequence of images is examined to determine whether and how the image content (or the camera) has moved. For this purpose, local changes in brightness between consecutive images are examined. Various methods of feature extraction and correspondence analysis are used to identify corresponding points. The displacement between corresponding points then represents the local motion.

From these points, the shape of the object can be determined by 3D reconstruction (see section Image sequence processing). Because only a few points are used, however, the result is very coarse; it is suitable for detecting obstacles to support navigation but not for accurate 3D modeling.

Shape-from-texture

If the texture applied to a surface is known, for example a piece of fabric with a repeating pattern, then local unevenness changes the appearance of the pattern. More precisely, the angle at which the surface (and thus the surface normal) is viewed changes and distorts the visible geometric shape of the texture. In this respect, the method is similar to shape-from-shading. Deriving the shape requires many steps, including extracting the repeating patterns, measuring local frequencies in order to compute local affine deformations, and finally deriving the local orientation of the surface.

In contrast to the structured-light method (see section Structured coded light), the texture is actually present on the surface and is not created artificially by a projector.

Structured coded light

If one camera of a stereo camera system is replaced by a projector that emits structured (coded) light, triangulation can likewise be performed and the three-dimensional shape of the object reconstructed. The structured light produces a known texture, which appears distorted on the surface because of the relief. The camera "recognizes" the local coded structure from this texture and can compute the 3D position by ray intersection (see also stripe light scanning and the light-section method). This is sometimes mistakenly equated with shape-from-texture.

Shape-from-(de)focus

The lens equation describes the basic relationship between an object point and its sharply imaged image point for a camera with a lens (see geometric optics). The diameter of the blur is proportional to the change in the focus setting (which corresponds to the change in the image distance). Provided that the distance to the object is fixed, the object distance can be calculated from a series of blurred images by measuring the diameter of the blurred points.
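The relationships above can be illustrated with the thin-lens equation 1/f = 1/g + 1/b, where f is the focal length, g the object distance and b the image distance. The sketch additionally assumes a circular aperture of diameter D, for which similar triangles give a blur diameter of D * |s - b| / b when the sensor sits at distance s from the lens. All function names and numeric values (in metres) are hypothetical.

```python
import math

def image_distance(focal_length, object_dist):
    """Image distance b of the sharp image, from 1/f = 1/g + 1/b."""
    return 1.0 / (1.0 / focal_length - 1.0 / object_dist)

def object_distance(focal_length, image_dist):
    """Object distance g for a given sharp image distance b."""
    return 1.0 / (1.0 / focal_length - 1.0 / image_dist)

def blur_diameter(focal_length, aperture_diameter, object_dist, sensor_dist):
    """Diameter of the blur circle when the sensor is at sensor_dist instead of b."""
    b = image_distance(focal_length, object_dist)
    return aperture_diameter * abs(sensor_dist - b) / b

f = 0.05    # focal length
D = 0.025   # aperture diameter
g = 2.0     # object distance

b = image_distance(f, g)
print(b, blur_diameter(f, D, g, sensor_dist=0.052))
print(object_distance(f, b))
```

In a focus series, the sensor position with the smallest blur gives the image distance b, from which the object distance follows through the lens equation.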

Active and other sensors

LiDAR

LiDAR (light detection and ranging) is an active method for contactless distance measurement. The measuring principle is based on measuring the transit time of an emitted laser signal. The method is used, among other things, in robotics for navigation.
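The transit-time principle amounts to a single formula: the signal travels to the surface and back, so the distance is half the transit time multiplied by the speed of light. The sketch below assumes propagation at the vacuum speed of light and ignores atmospheric effects; the function name and the sample time are illustrative.

```python
SPEED_OF_LIGHT = 299_792_458.0  # metres per second (vacuum)

def distance_from_transit_time(seconds):
    """Distance to the reflecting surface for a measured round-trip time."""
    return SPEED_OF_LIGHT * seconds / 2.0

# A round trip of one microsecond corresponds to roughly 150 metres.
print(distance_from_transit_time(1e-6))
```

The example also shows why precise timing matters: an error of one nanosecond in the transit time shifts the result by about 15 centimetres.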

3D ToF camera

A 3D ToF (time-of-flight) camera is a camera with an active sensor. It differs from other methods such as laser scanning or LiDAR in that it uses a two-dimensional sensor. As in a normal digital camera, the image plane contains evenly arranged light sensors, together with tiny LEDs (or laser diodes) that emit a pulse of infrared light. The light reflected from the surface is captured by the optics and imaged onto the sensor. A filter ensures that only the emitted wavelength is let through. This allows the distance to an entire surface patch to be determined at once. ToF cameras are used in autonomous navigation for object recognition.

Kinect

Kinect is a camera system with structured light for object reconstruction.

Omnidirectional cameras

An omnidirectional camera can capture an image from all directions (360°). This is usually achieved with a camera pointed at a conical mirror, so that the environment reflected by the mirror is recorded. Depending on the orientation, a complete horizontal or vertical all-round image can thus be captured in a single exposure.

Other methods

SLAM

SLAM (Simultaneous Localization and Mapping) is a method used primarily for autonomous navigation. A mobile robot is equipped with various sensors in order to capture its surroundings in three dimensions. What is special about the method is that localization and map building are carried out simultaneously. Determining the absolute position is actually only possible if a map already exists and the robot can locate itself within it using landmarks that it identifies. However, maps are often not detailed enough, so a mobile robot cannot find any of the landmarks present on the map. In addition, identifying such landmarks is extremely difficult because the perspective of a map is completely different from the perspective of the robot. SLAM attempts to solve such problems.

References

- Forsyth, Ponce: Computer Vision - A Modern Approach. 2nd edition. Pearson Education (Prentice Hall), New Jersey, USA 2012, ISBN 978-0-13-608592-8 (ISBN-10: 0-13-608592-X).

- Reinhard Klette: Concise Computer Vision - An Introduction into Theory and Algorithms. Springer-Verlag, London 2014, ISBN 978-1-4471-6320-6 (eBook), ISBN 978-1-4471-6319-0, doi:10.1007/978-1-4471-6320-6.

- Richard Szeliski: Computer Vision - Algorithms and Applications. Springer-Verlag London Limited, 2011, ISBN 978-1-84882-934-3, e-ISBN 978-1-84882-935-0, doi:10.1007/978-1-84882-935-0, http://szeliski.org/Book

- Richard Szeliski: Computer Vision (= Texts in Computer Science). Springer London, London 2011, ISBN 978-1-84882-934-3, doi:10.1007/978-1-84882-935-0 (springer.com, accessed May 25, 2020).

- Karl Kraus: Photogrammetry (Volume 1, Fundamentals and Standard Methods). With contributions by P. Waldhäusl. 6th edition. Dümmler, Bonn 1997, ISBN 3-427-78646-3.

- Ludwig Seidel: About the theory of the errors with which the images seen through optical instruments are afflicted, and about the mathematical conditions for their removal. In: Royal Bavarian Academy of Sciences in Munich (ed.): Treatises of the scientific-technical commission. Volume 1. Munich 1857, pp. 227–267 (OPACplus Bayerische Staatsbibliothek).

- J. Chris McGlone, Edward M. Mikhail, James S. Bethel, Roy Mullen, American Society for Photogrammetry and Remote Sensing: Manual of photogrammetry. 5th edition. American Society for Photogrammetry and Remote Sensing, Bethesda, Md. 2004, ISBN 1-57083-071-1.

- Thomas Luhmann: Nahbereichsphotogrammetrie. Wichmann, Heidelberg 2003, ISBN 3-87907-398-8.

- Volker Rodehorst: Photogrammetric 3D reconstruction at close range by auto-calibration with projective geometry. Wvb, Wiss. Verl, Berlin 2004, ISBN 3-936846-83-9.

- Anke Bellmann, Olaf Hellwich, Volker Rodehorst, Ulas Yilmaz: A Benchmark Dataset for Performance Evaluation of Shape-from-X Algorithms. (PDF) In: The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences. Vol. XXXVII, Part B3b. Beijing, July 2008, https://www.isprs.org/proceedings/XXXVII/congress/tc3b.aspx (accessed June 6, 2020).

- Tobias Dierig: Obtaining depth maps from focus series. 2002 (accessed June 6, 2020).

- Rongxing Li, Kaichang Di, Larry H. Matthies, William M. Folkner, Raymond E. Arvidson: Rover Localization and Landing Site Mapping Technology for the 2003 Mars Exploration Rover mission. January 2004 (accessed June 11, 2020).