Semantic Web

The Semantic Web extends the Web so that computers can exchange and use data more easily. For example, the word “Bremen” in a web document can be supplemented with information stating whether it refers to a ship, a family name or the city. This additional information makes explicit what is otherwise unstructured data. The Semantic Web is implemented using standards for publishing and using machine-readable data, in particular RDF.

While people can infer such information from context (the text as a whole, the type of publication, the category it is filed under, images and so on) and build such links unconsciously, machines must first be given this context; for this purpose, the content is linked with further information. Conceptually, the Semantic Web describes a “Giant Global Graph”. Every thing of interest is identified by a unique address and becomes a node, and nodes are connected to one another by edges, each of which is also uniquely named. Individual documents on the web each describe a set of edges, and the totality of all these edges forms the global graph.

Example

In the following example, in the text "Paul Schuster was born in Dresden" on a website, the name of a person is linked to their place of birth. The fragment of an HTML document shows how a small graph is described in RDFa syntax using the schema.org vocabulary and a Wikidata ID:

<div vocab="http://schema.org/" typeof="Person">

<span property="name">Paul Schuster</span> was born in

<span property="birthPlace" typeof="Place" href="http://www.wikidata.org/entity/Q1731">

<span property="name">Dresden</span>

</span>.

</div>

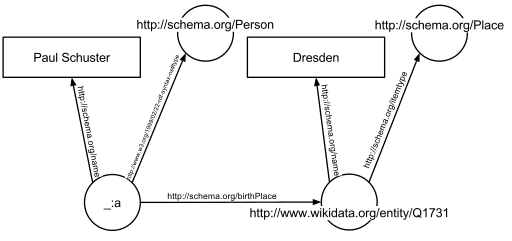

The example defines the following five triples (shown in Turtle format). Each triple represents one edge in the resulting graph: the first part (the subject) names the node where the edge starts, the second part (the predicate) gives the type of the edge, and the third part (the object) is either the name of the node where the edge ends or a literal value (e.g. a text or a number).

_:a <http://www.w3.org/1999/02/22-rdf-syntax-ns#type> <http://schema.org/Person> .

_:a <http://schema.org/name> "Paul Schuster" .

_:a <http://schema.org/birthPlace> <http://www.wikidata.org/entity/Q1731> .

<http://www.wikidata.org/entity/Q1731> <http://www.w3.org/1999/02/22-rdf-syntax-ns#type> <http://schema.org/Place> .

<http://www.wikidata.org/entity/Q1731> <http://schema.org/name> "Dresden" .

The triples result in the graph opposite (top figure).
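To make the correspondence between triples and graph edges concrete, the following is a minimal sketch in plain Python, assuming triples are modelled as tuples and the blank node as the string "_:a". The helper names `outgoing_edges` and `objects` and the `("literal", value)` wrapper are illustrative choices, not part of any RDF library. The type statement for Dresden uses rdf:type, which is what the RDFa typeof attribute produces.

```python
from collections import defaultdict

RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"
SCHEMA = "http://schema.org/"
DRESDEN = "http://www.wikidata.org/entity/Q1731"

# Each triple is (subject, predicate, object). "_:a" stands for the blank node;
# literals are wrapped in a ("literal", value) pair to tell them apart from URIs.
triples = {
    ("_:a", RDF_TYPE, SCHEMA + "Person"),
    ("_:a", SCHEMA + "name", ("literal", "Paul Schuster")),
    ("_:a", SCHEMA + "birthPlace", DRESDEN),
    (DRESDEN, RDF_TYPE, SCHEMA + "Place"),
    (DRESDEN, SCHEMA + "name", ("literal", "Dresden")),
}


def outgoing_edges(graph):
    """Group the triples by subject: node -> list of (predicate, object)."""
    edges = defaultdict(list)
    for subject, predicate, obj in graph:
        edges[subject].append((predicate, obj))
    return edges


def objects(graph, subject, predicate):
    """Return all objects reached from `subject` along edges named `predicate`."""
    return {o for s, p, o in graph if s == subject and p == predicate}


# Follow two edges: person -> birthPlace -> name.
birthplaces = objects(triples, "_:a", SCHEMA + "birthPlace")
place_names = {
    name for place in birthplaces for name in objects(triples, place, SCHEMA + "name")
}
print(place_names)
```

Storing the graph as a set of tuples mirrors the definition of an RDF graph as a set of triples; an adjacency view such as `outgoing_edges` is derived from it only when needed.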

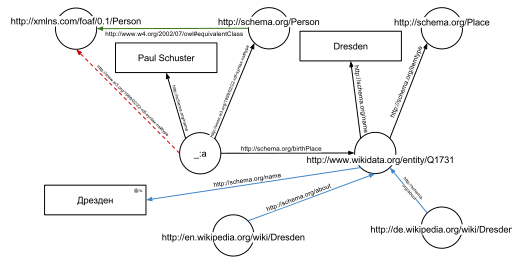

One advantage of using URIs is that they can be resolved over HTTP and often return a document that further describes the thing identified by the URI (this is the Linked Open Data principle). In the example given, the URIs of the nodes and edges (e.g. http://schema.org/Person, http://schema.org/birthPlace, http://www.wikidata.org/entity/Q1731) can all be resolved and then yield further descriptions, for example that Dresden is a city in Germany, or that a person can also be fictional.
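Resolving such a URI is an ordinary HTTP request. The sketch below uses only the Python standard library and assumes that the server supports content negotiation, i.e. that it answers an Accept header listing RDF media types with an RDF document; whether a particular server does so is not guaranteed. The function names and the chosen media-type preferences are illustrative.

```python
import urllib.request

# Media types for common RDF serializations, in order of preference (an assumption).
RDF_MEDIA_TYPES = "text/turtle, application/ld+json;q=0.9, application/rdf+xml;q=0.8"


def build_request(uri):
    """Prepare (but do not send) an HTTP GET that asks for an RDF representation."""
    return urllib.request.Request(uri, headers={"Accept": RDF_MEDIA_TYPES})


def fetch_description(uri, timeout=10):
    """Resolve the URI and return (content type, body). Requires network access."""
    with urllib.request.urlopen(build_request(uri), timeout=timeout) as response:
        body = response.read().decode("utf-8", errors="replace")
        return response.headers.get("Content-Type"), body


request = build_request("http://www.wikidata.org/entity/Q1731")
print(request.get_method(), request.full_url)
```

Calling `fetch_description` on one of the URIs from the example would then return whatever description the server chooses to publish for it.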

The adjacent graph (lower figure) shows the previous example, enriched with a few exemplary triples from the documents obtained by resolving http://schema.org/Person (green edge) and http://www.wikidata.org/entity/Q1731 (blue edges).

In addition to the edges explicitly given in the documents, further edges can be inferred automatically: the triple

_:a <http://www.w3.org/1999/02/22-rdf-syntax-ns#type> <http://schema.org/Person> .

from the original RDFa fragment, along with the triple

<http://schema.org/Person> <http://www.w3.org/2002/07/owl#equivalentClass> <http://xmlns.com/foaf/0.1/Person> .

from the document found at http://schema.org/Person (the green edge in the figure), allow the following triple to be inferred under the OWL semantics (the dashed red edge in the figure):

_:a <http://www.w3.org/1999/02/22-rdf-syntax-ns#type> <http://xmlns.com/foaf/0.1/Person> .
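The inference step can be sketched as a single rule applied until nothing new is derived: if x has type C and C is equivalent to D, then x also has type D. The code below is a simplified illustration in plain Python that covers only this one rule, not the full OWL semantics; the function name `infer_equivalent_types` is hypothetical.

```python
RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"
OWL_EQUIVALENT_CLASS = "http://www.w3.org/2002/07/owl#equivalentClass"
SCHEMA_PERSON = "http://schema.org/Person"
FOAF_PERSON = "http://xmlns.com/foaf/0.1/Person"

graph = {
    ("_:a", RDF_TYPE, SCHEMA_PERSON),
    (SCHEMA_PERSON, OWL_EQUIVALENT_CLASS, FOAF_PERSON),
}


def infer_equivalent_types(triples):
    """Add 'x type D' for every 'x type C' where C and D are equivalent classes."""
    inferred = set(triples)
    changed = True
    while changed:
        changed = False
        # equivalentClass is symmetric, so collect the pairs in both directions.
        pairs = {(s, o) for s, p, o in inferred if p == OWL_EQUIVALENT_CLASS}
        pairs |= {(d, c) for c, d in pairs}
        for s, p, o in list(inferred):
            if p != RDF_TYPE:
                continue
            for c, d in pairs:
                if c == o and (s, RDF_TYPE, d) not in inferred:
                    inferred.add((s, RDF_TYPE, d))
                    changed = True
    return inferred


new_triples = infer_equivalent_types(graph) - graph
print(new_triples)
```

The only new triple is the one stating that the blank node is also a foaf:Person, which corresponds to the dashed edge described in the text.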

Terminology

The name Semantic Web has often led to misunderstandings and discussions. Numerous other terms have been proposed, all of which ultimately pursue the same goal:

- Building on the term Web 2.0, John Markoff speaks of Web 3.0 when the concepts of the Semantic Web are added to those of Web 2.0.

- In contrast to the term WWW, Tim Berners-Lee, the inventor of the WWW, introduced the term Giant Global Graph (GGG), which emphasizes the structure of the Semantic Web as a global, linked graph.

- The term Linked Open Data was introduced around 2007, partly to set it apart from the Semantic Web, which is perceived as heavyweight and complex.

- The W3C itself adopted the new term Web of Data in December 2013.

Basics

The concept is based on a proposal by Tim Berners-Lee, the inventor of the World Wide Web: the Semantic Web is an extension of the conventional Web in which information is given well-defined meaning in order to make it easier for people and machines to work together (“The Semantic Web is an extension of the current web in which information is given well-defined meaning, better enabling computers and people to work in cooperation”). The Semantic Web builds on existing web standards and on work in the field of knowledge representation.

"Classic" web

The "classic", text-based web, the web of documents as introduced by Tim Berners-Lee and continuously extended since then, is based on a number of standards:

- HTML as a markup language for the content of usually text documents,

- URLs as notation for addresses of any documents (i.e. files) on the web and

- HTTP as the protocol to access and edit these documents.

Very soon, images in formats like GIF and JPEG were also embedded in HTML documents and exchanged. In the original proposal by Tim Berners-Lee from 1989, he pointed out that these standards can be used not only for the exchange of entire documents, but also for the exchange of networked data. However, like other parts of the proposal (e.g. that all pages on the web should be easy to edit), this was initially lost.

As a result, most content on the web today (natural-language text, images, videos) is largely unstructured, in the sense that the structure of a (classic) HTML document does not state explicitly whether a piece of text is a first name or surname, the name of a city or a company, or an address. This makes machine processing of the content difficult, although such processing would be desirable given the rapidly growing amount of available information.

The standards of the Semantic Web are intended to address this problem: individual parts of a text can be marked up not only for their appearance but also for their content and meaning, and entire texts can be structured so that computers can easily process the data read from the documents.

By using the common RDF data model and a standardized ontology language, the data can also be integrated worldwide, and even implicit knowledge can be derived from it.

Metadata

HTML documents already offered a way to store a limited amount of metadata, in this case data about the documents themselves.

In the mid-1990s, Ramanathan V. Guha (a student of McCarthy and Feigenbaum and an employee of the Cyc project) began work on the Meta Content Framework (MCF), initially at Apple and from 1997 at Netscape. The aim of MCF was to create a general basis for metadata. At the same time, the W3C web standards consortium was working on XML. The idea of MCF was then combined with the syntax of XML to produce the first version of RDF.

RDF first saw widespread use in RSS, a standard for publishing and subscribing to feeds. It was mainly used by blogs, to which RSS readers could then subscribe.

Although initially only metadata was envisaged, especially metadata about documents available on the web that could then be evaluated by indexing and search engines, this restriction disappeared with the development of RDF, and at the latest with the 2001 article in Scientific American. RDF is a standard for exchanging data and is by no means restricted to metadata. Nevertheless, many texts on the Semantic Web still speak, outdatedly, only of metadata.

Most syntaxes for exchanging RDF (N-Triples, N3, RDF/XML, JSON-LD) cannot be used directly within running text to mark up individual passages, in contrast to RDFa.

Accordingly, Uniform Resource Locators (URLs), which are used to address documents on the web, were generalized to Uniform Resource Identifiers (URIs), which can identify anything, in particular things that exist in the world (e.g. houses, people, books) or that are purely abstract (e.g. ideas, religions, relationships).

Knowledge representation

The origins of the Semantic Web lie, among other things, in the research field of artificial intelligence, in particular the subfield of knowledge representation. MCF was already systematically based on predicate logic.

Originally, the attributes available for metadata in documents were strictly limited: in HTML it was possible to specify keywords, publication dates, authors and so on. The Dublin Core group then extended this set considerably and systematically, drawing on a great deal of experience from library science. But that too resulted in a limited vocabulary, i.e. a small set of usable attributes and types. Such a small vocabulary can be processed by a computer program with comparatively little effort.

One goal of the Semantic Web was to be able to represent any data. This required an extensible vocabulary, i.e. the ability to declare arbitrary relationships, attributes and types. To put the declaration of these vocabularies, also called ontologies, on a solid formal basis, two languages were developed independently: the DARPA Agent Markup Language (DAML), funded by DARPA in the US, and the Ontology Inference Layer (OIL), funded by the EU Framework Programme in Europe. Both built on earlier results in knowledge representation, in particular frames, semantic networks, conceptual graphs and description logics. Around 2000 the two languages were combined in a joint project under the leadership of the W3C, initially as DAML+OIL, which eventually led to the Web Ontology Language (OWL), published in 2004.

Standards

Standards for the publication and use of machine-readable data are used to implement the Semantic Web. Central standards are:

- URIs in their dual role of identifying entities and of pointing to further information about them

- RDF as a common data model for the representation of statements

- RDFS to declare the vocabulary used in RDF

- OWL for the formal definition of the vocabulary declared in RDFS in an ontology

- RIF for the representation of rules

- SPARQL as query language and protocol

- a number of different syntaxes for exchanging RDF graphs, such as RDF/XML, Turtle, RDFa and JSON-LD

Identifier: URIs

URIs (Uniform Resource Identifiers) fulfill a twofold task in the Semantic Web. On the one hand, they serve as unique, globally valid names for all things referred to in the Semantic Web. This means that the same URI denotes the same thing in different documents, which makes it possible to combine data easily and to interpret it unambiguously. On the other hand, a URI can also serve as an address from which further data about the designated resource can be retrieved; if the resource is a document, this is the document itself. In that case the URI cannot be distinguished from a URL.

Although every URI identifies exactly one thing worldwide, the reverse does not hold: a thing is not identified by exactly one URI worldwide. On the contrary, things like the city of Bremen, the person Angela Merkel or the film Rear Window often have many different URIs. To make it easier to relate these different URIs, there are several ways to state that two URIs denote the same thing, for example by means of keys or by explicitly linking the two URIs with the sameAs relation from the OWL vocabulary.
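One way to work with several URIs for the same thing is to group them into equivalence classes and rewrite all statements to a single representative. The following sketch does this with a small union-find structure in plain Python. All example.org and example.com URIs are hypothetical, and choosing the alphabetically smallest URI as the representative is an arbitrary assumption made to keep the result deterministic.

```python
OWL_SAME_AS = "http://www.w3.org/2002/07/owl#sameAs"

# Two hypothetical URIs for the city of Bremen, published by different sources.
BREMEN_A = "http://example.org/a/Bremen"
BREMEN_B = "http://example.com/b/bremen"
COUNTRY = "http://example.org/vocab/country"
LOCATED_ON = "http://example.org/vocab/locatedOn"

graph = {
    (BREMEN_A, COUNTRY, "http://example.org/a/Germany"),
    (BREMEN_B, LOCATED_ON, "http://example.org/a/Weser"),
    (BREMEN_A, OWL_SAME_AS, BREMEN_B),
}


def canonicalize(triples):
    """Rewrite all terms linked by owl:sameAs to one representative per group."""
    parent = {}

    def find(term):
        parent.setdefault(term, term)
        while parent[term] != term:
            parent[term] = parent[parent[term]]  # path halving
            term = parent[term]
        return term

    def union(a, b):
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            smaller, larger = sorted((root_a, root_b))
            parent[larger] = smaller  # the smaller URI becomes the representative

    for s, p, o in triples:
        if p == OWL_SAME_AS:
            union(s, o)
    return {(find(s), p, find(o)) for s, p, o in triples if p != OWL_SAME_AS}


merged = canonicalize(graph)
print(sorted(merged))
```

After canonicalization, both statements about Bremen share the same subject, so a query against either source's URI can be answered from the combined data.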

Data model: RDF

RDF as a data model is based on triples of subject, predicate and object. A set of RDF triples forms an RDF graph, in which subjects and objects are the nodes and each predicate names a directed edge from subject to object. Predicates are always URIs; subjects are usually URIs but can also be unnamed nodes (blank nodes); objects are URIs, blank nodes or literals. Literals are, for example, texts, numbers or dates.
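The rules about which kind of term may appear in which position can be written down as a small type check. This is a minimal sketch in plain Python; the classes `URI`, `BlankNode` and `Literal` and the function `is_valid_triple` are illustrative names, not the API of an existing RDF library.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class URI:
    value: str


@dataclass(frozen=True)
class BlankNode:
    label: str


@dataclass(frozen=True)
class Literal:
    value: object  # e.g. a text, a number or a date


def is_valid_triple(subject, predicate, obj):
    """Check the positional rules: URI or blank node, URI, any of the three kinds."""
    return (
        isinstance(subject, (URI, BlankNode))
        and isinstance(predicate, URI)
        and isinstance(obj, (URI, BlankNode, Literal))
    )


name = URI("http://schema.org/name")
person = BlankNode("a")

print(is_valid_triple(person, name, Literal("Paul Schuster")))  # literal as object
print(is_valid_triple(Literal("Paul Schuster"), name, person))  # literal as subject
```

The first call satisfies the rules, while the second does not, because a literal may only appear in the object position.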

Unlike nodes named with URIs, blank nodes are named only locally; they have no globally unique name. If two different RDF graphs each contain a node with the URI http://www.wikidata.org/entity/Q42, then by default this node denotes the same thing in both. A second graph can thus make further statements about the same things as the first graph, which allows anyone to say anything about anything. However, if a blank node is used in an RDF graph, a second graph cannot make direct statements about that blank node.

RDF graphs have the advantage of being very regular (they are just sets of triples) and very easy to combine: two graphs are merged into one by simply taking the union of their sets of triples. In some triple-based syntaxes such as N-Triples, this means the files can simply be concatenated.
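Combining two graphs can be sketched as a set union, with one precaution for blank nodes: because their labels are only local, equal labels in different graphs are renamed apart before the union so that they are not confused. The code below is a simplified illustration in plain Python; the suffix scheme and the example statements (including the placeholder literal) are assumptions made for the example.

```python
Q42 = "http://www.wikidata.org/entity/Q42"
RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"
NAME = "http://schema.org/name"
AUTHOR = "http://schema.org/author"
PERSON = "http://schema.org/Person"

# Both graphs talk about the same URI, and both happen to use the label "_:x".
graph_1 = {(Q42, NAME, "Douglas Adams"), ("_:x", AUTHOR, Q42)}
graph_2 = {(Q42, RDF_TYPE, PERSON), ("_:x", NAME, "some other resource")}


def rename_blank_nodes(graph, suffix):
    """Make blank node labels unique by appending a per-graph suffix."""

    def rename(term):
        if isinstance(term, str) and term.startswith("_:"):
            return f"{term}_{suffix}"
        return term

    return {(rename(s), p, rename(o)) for s, p, o in graph}


def merge(first, second):
    """Union of two graphs; URIs are shared, blank nodes stay separate."""
    return rename_blank_nodes(first, "g1") | rename_blank_nodes(second, "g2")


merged = merge(graph_1, graph_2)
about_q42 = {t for t in merged if t[0] == Q42}
print(len(merged), len(about_q42))
```

The statements about the URI end up attached to one node, whereas the two blank nodes remain distinct even though they carried the same local label.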

Definition of vocabularies: RDFS and OWL

RDF Schema (RDFS, initially called "RDF Vocabulary Description Language" and renamed "RDF Schema" in 2014) was defined to declare classes of things and their properties and to set them in formal relationships with one another. For example, RDFS can be used to state that the property http://purl.org/dc/elements/1.1/title is labelled "title" in English and "Titel" in German. A description can additionally state that this property should be used for the title of a book. Besides these natural-language descriptions, RDFS also allows formal statements: for example, that everything that has the named property belongs to the class http://example.org/buch, or that everything that belongs to this class also belongs to the class http://example.org/Medium.
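The two formal statements in this example correspond to rdfs:domain and rdfs:subClassOf. The sketch below applies just these two inference rules in plain Python until no new triples appear; it is not a complete RDFS reasoner, and the resource http://example.org/book1 is a hypothetical name introduced for the illustration.

```python
RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"
RDFS_DOMAIN = "http://www.w3.org/2000/01/rdf-schema#domain"
RDFS_SUBCLASS_OF = "http://www.w3.org/2000/01/rdf-schema#subClassOf"
DC_TITLE = "http://purl.org/dc/elements/1.1/title"
BOOK_CLASS = "http://example.org/buch"
MEDIUM_CLASS = "http://example.org/Medium"
BOOK_1 = "http://example.org/book1"  # hypothetical resource

graph = {
    (DC_TITLE, RDFS_DOMAIN, BOOK_CLASS),            # whatever has a title is a book
    (BOOK_CLASS, RDFS_SUBCLASS_OF, MEDIUM_CLASS),   # every book is a medium
    (BOOK_1, DC_TITLE, "Weaving the Web"),
}


def rdfs_closure(triples):
    """Apply the domain rule and the subclass rule until a fixpoint is reached."""
    inferred = set(triples)
    while True:
        domains = {(s, o) for s, p, o in inferred if p == RDFS_DOMAIN}
        superclasses = {(s, o) for s, p, o in inferred if p == RDFS_SUBCLASS_OF}
        new = set()
        for s, p, o in inferred:
            # Domain rule: the subject of a property belongs to the property's domain.
            new |= {(s, RDF_TYPE, cls) for prop, cls in domains if prop == p}
            # Subclass rule: members of a class are members of its superclasses.
            if p == RDF_TYPE:
                new |= {(s, RDF_TYPE, sup) for sub, sup in superclasses if sub == o}
        if new <= inferred:
            return inferred
        inferred |= new


derived = rdfs_closure(graph) - graph
print(sorted(derived))
```

From the single statement that the resource has a title, the closure derives that it is a book and, through the subclass relationship, also a medium.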

The Web Ontology Language (OWL) extends RDFS with much more expressive elements to specify the relationships between classes and properties more precisely. For example, OWL can state that two classes have no elements in common, that a property is transitive, or that a property can only have a certain number of distinct values. This increased expressiveness is used primarily in biology and medicine. The terms vocabulary and ontology are often used interchangeably, although ontologies tend to be more formalized than vocabularies.
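Two of these constructs, transitive properties and disjoint classes, can be illustrated with a few lines of plain Python. This is a sketch under simplifying assumptions: it handles only owl:TransitiveProperty and owl:disjointWith, and all http://example.org/ names are hypothetical.

```python
from collections import defaultdict

RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"
OWL_TRANSITIVE = "http://www.w3.org/2002/07/owl#TransitiveProperty"
OWL_DISJOINT_WITH = "http://www.w3.org/2002/07/owl#disjointWith"
EX = "http://example.org/"  # hypothetical namespace

graph = {
    (EX + "locatedIn", RDF_TYPE, OWL_TRANSITIVE),
    (EX + "Dresden", EX + "locatedIn", EX + "Saxony"),
    (EX + "Saxony", EX + "locatedIn", EX + "Germany"),
    (EX + "Person", OWL_DISJOINT_WITH, EX + "Place"),
    (EX + "Bremen", RDF_TYPE, EX + "Person"),
    (EX + "Bremen", RDF_TYPE, EX + "Place"),
}


def apply_transitivity(triples):
    """For transitive p: from (a p b) and (b p c) derive (a p c), until stable."""
    inferred = set(triples)
    transitive = {s for s, p, o in triples if p == RDF_TYPE and o == OWL_TRANSITIVE}
    changed = True
    while changed:
        changed = False
        for a, p, b in list(inferred):
            if p not in transitive:
                continue
            for b2, p2, c in list(inferred):
                if p2 == p and b2 == b and (a, p, c) not in inferred:
                    inferred.add((a, p, c))
                    changed = True
    return inferred


def disjointness_violations(triples):
    """Return (node, class, class) for nodes typed with two disjoint classes."""
    types = defaultdict(set)
    for s, p, o in triples:
        if p == RDF_TYPE:
            types[s].add(o)
    return {
        (node, c, d)
        for c, p, d in triples if p == OWL_DISJOINT_WITH
        for node, classes in types.items() if c in classes and d in classes
    }


new_triples = apply_transitivity(graph) - graph
conflicts = disjointness_violations(graph)
print(new_triples, conflicts)
```

Transitivity yields new knowledge (Dresden is located in Germany), while disjointness is used the other way round, to detect that a node has been given two types that cannot hold together.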

These terms are not defined by the W3C in one universally valid vocabulary; rather, anyone can publish their own vocabulary, in the same way the data itself is published. Consequently, there is no central institution that defines all vocabularies. The vocabularies are self-describing in that, like the data, they can be published in RDF and as Linked Open Data and are thus part of the Semantic Web themselves.

Numerous vocabularies have been developed over the years, but only a few have gained wider influence. Worth mentioning are Dublin Core for metadata about books and other media, Friend of a Friend (FOAF) for describing social networks, Creative Commons for expressing licenses, and some versions of RSS for describing feeds. Schema.org, launched in 2011 as a collaboration between the largest search engines and portals and covering many different domains, has become particularly widespread.

Serializations

RDF is a data model, not a specific serialization (i.e. the exact syntax in which the data is exchanged). For a long time RDF/XML was the only standardized serialization format, but it soon became clear that RDF, with its graph model built on triples, and XML, which is based on a tree model, do not fit together very well. Over the years other serialization formats have spread, such as the related N3 and Turtle, which are much closer to the triple model.

Two serialization formats are particularly worth mentioning because they pragmatically opened up new fields of application: RDFa and JSON-LD.

RDFa is an extension of HTML syntax that allows data to be embedded directly in a web page. This makes it possible, for example, to mark up a person with their address, a concert with its place and time, or a book with its author and publisher on the page itself. Owing to its use with Schema.org and its support by most search engines, the amount of RDF on the web grew enormously within two years: in 2013, more than four million domains had RDF content.

JSON-LD tries to stay as close as possible to the way web developers already use JSON as a data exchange format. Most of the data is exchanged as plain JSON, and a context defines how the JSON data is to be converted to RDF. JSON-LD is widely used today to embed data in other formats, for example in e-mails or in HTML documents.
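How a context turns plain JSON into triples can be sketched for a very small subset of JSON-LD. The code below uses only the Python standard library and handles flat documents whose context maps each key either to a property URI or to a definition with "@id" and an optional "@type": "@id" marker; it is not an implementation of the JSON-LD processing algorithms. The subject URI http://example.org/people/paul and the function name `to_triples` are hypothetical.

```python
import json

document = json.loads("""
{
  "@context": {
    "name": "http://schema.org/name",
    "birthPlace": {"@id": "http://schema.org/birthPlace", "@type": "@id"}
  },
  "@id": "http://example.org/people/paul",
  "name": "Paul Schuster",
  "birthPlace": "http://www.wikidata.org/entity/Q1731"
}
""")


def to_triples(doc):
    """Convert a flat JSON-LD-style document to (subject, predicate, object) triples."""
    context = doc["@context"]
    subject = doc["@id"]
    triples = set()
    for key, value in doc.items():
        if key.startswith("@"):
            continue  # keywords such as @context and @id are not properties
        definition = context[key]
        if isinstance(definition, str):
            # Simple term: the key maps to a property URI, the value is a literal.
            triples.add((subject, definition, ("literal", value)))
        elif definition.get("@type") == "@id":
            # The value is itself a URI, i.e. the edge points to another node.
            triples.add((subject, definition["@id"], value))
        else:
            triples.add((subject, definition["@id"], ("literal", value)))
    return triples


triples = to_triples(document)
print(sorted(triples, key=str))
```

Without the context, the document is ordinary JSON that existing tools can consume; with it, the same keys are interpreted as globally named properties.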

Comparable technologies

The ISO standard Topic Maps is a comparable technology for knowledge representation. A main difference between RDF and Topic Maps lies in the associations: while associations in RDF are always directed, in Topic Maps they are undirected and role-based.

Microformats and Microdata emerged as alternative, lightweight data models and serializations alongside the standards of the Semantic Web. Microformats continued the line of very specific standards for exchanging, for example, address data in vCard or calendar data in vCalendar.

Criticism

The Semantic Web is often described as too complicated and too academic. Well-known critiques are:

- Clay Shirky, Ontology is Overrated (archived in the Internet Archive, July 29, 2013): Ontologies already fail to work for libraries, and extending them to the whole of the web is hopeless. Ontologies are too strongly tied to one particular point of view, are created too top-down (in contrast to the folksonomies that emerged in Web 2.0), and their formal basis is too strict and inflexible. Since the Semantic Web is based on ontologies, it cannot escape the problems of ontologies.

- Aaron Swartz, The Programmable Web: Swartz attributes the failure of the Semantic Web to the premature standardization of insufficiently mature technology and to the excessive complexity of the standards; he attacks XML in particular and contrasts it with the simplicity of JSON. What makes Swartz's criticism notable is that he understands the technologies extremely well and shares the goals of the Semantic Web, but regards the standards actually used, and the processes that led to them, as inadequate.

Semantic Web as a research area

Unlike many other web technologies, the Semantic Web has been the subject of lively research activity. Annual academic conferences have been held since 2001 (in particular the International Semantic Web Conference and the Asian Semantic Web Conference), and research on the Semantic Web has accounted for a noticeable share of the results presented at the most important academic conference on the web, the International World Wide Web Conference. Researchers in the field came mainly from knowledge representation, logic (in particular description logics), web services and ontologies.

The research questions are diverse and often interdisciplinary. They include, for example: the decidability of combinations of certain language elements in ontology languages; how data described in different vocabularies can be automatically integrated and queried together; what user interfaces to the Semantic Web can look like (numerous browsers for linked data were created in this context); how the entry of Semantic Web data can be simplified; how Semantic Web Services, i.e. web services with semantically described interfaces, can cooperate automatically to achieve complex goals; how Semantic Web data can be published and used effectively; and much more.

Relevant journals are primarily the Journal of Web Semantics at Elsevier, and the Journal of Applied Ontologies and the Semantic Web Journal at IOS Press. Prominent researchers in the Semantic Web field include, among others, Wendy Hall, Jim Hendler, Rudi Studer, Ramanathan Guha, Asunción Gómez-Pérez, Mark Musen, Frank van Harmelen, Natasha Noy, Ian Horrocks, Deborah McGuinness, John Domingue, Carole Goble, Nigel Shadbolt, David Karger, Dieter Fensel, Steffen Staab, Chris Bizer, Chris Welty and Nicola Guarino. Research projects with a focus on the Semantic Web are or have been, among others, GoPubMed, Greenpilot, Medpilot, NEPOMUK, SemanticGov and Theseus.

This lively research has certainly contributed to the Semantic Web's reputation for being academic and complex. It has also produced numerous results.

See also

- DBpedia

- F-Logic

- FOAF

- Semantic Grid

- Semantic MediaWiki

- Semantic Publishing

- Semantic Web Services

- Semantic search

- Semantic search engine

- Semantic desktop

- Semantic Wiki

- Social Semantic Web

- Swoogle

- Wikidata

Literature

- Aaron Swartz: A Programmable Web: An Unfinished Work. Donated by the publisher Morgan & Claypool Publishers after Aaron Swartz's death in January 2013.

- Tim Berners-Lee with Mark Fischetti: Weaving the Web: The Original Design and Ultimate Destiny of the World Wide Web by Its Inventor. 1st edition. HarperCollins, San Francisco 1999, ISBN 0-06-251586-1; current edition: HarperBusiness, New York 2006, ISBN 0-06-251587-X. German edition: Tim Berners-Lee with Mark Fischetti: The Web Report: the creator of the World Wide Web about the unlimited potential of the Internet. Econ, Munich 1999, ISBN 3-430-11468-3

- Tim Berners-Lee, James Hendler, Ora Lassila: The Semantic Web: a new form of Web content that is meaningful to computers will unleash a revolution of new possibilities. In: Scientific American, 284 (5), May 2001, pp. 34–43 (German: My computer understands me. In: Spektrum der Wissenschaft, August 2001, pp. 42–49)

- Lee Feigenbaum, Ivan Herman, Tonya Hongsermeier, Eric Neumann, Susie Stephens: My computer understands me - gradually. In: Spektrum der Wissenschaft, November 2008, pp. 92–99.

- Toby Segaran, Colin Evans, Jamie Taylor: Programming the Semantic Web. O'Reilly & Associates, Sebastopol CA 2009, ISBN 978-0-596-15381-6.

- Grigoris Antoniou, Frank van Harmelen: A Semantic Web Primer. 2nd edition. The MIT Press, 2008, ISBN 0-262-01242-1

- Dieter Fensel, Wolfgang Wahlster, Henry Lieberman, James Hendler: Spinning the Semantic Web: Bringing the World Wide Web to Its Full Potential. The MIT Press, 2003, ISBN 0-262-06232-1

- Bo Leuf: The Semantic Web. Crafting Infrastructure for Agents. Wiley, 2006, ISBN 0-470-01522-5

- Vladimir Geroimenko, Chaomei Chen: Visualizing the Semantic Web. Springer Verlag, 2003, ISBN 1-85233-576-9

- Jeffrey T. Pollock: Semantic Web For Dummies. For Dummies, 2009, ISBN 978-0-470-39679-7

- Pascal Hitzler, Markus Krötzsch, Sebastian Rudolph, York Sure: Semantic Web: Grundlagen. Springer Verlag, 2008, ISBN 978-3-540-33993-9. English edition: Pascal Hitzler, Markus Krötzsch, Sebastian Rudolph: Foundations of Semantic Web Technologies. CRC Press, 2009, ISBN 978-1-4200-9050-5

Web links

- Andy Carvin: Tim Berners-Lee: Weaving a Semantic Web. Published by the Digital Divide Network, October 2004 (archived in the Internet Archive, February 8, 2009)

- W3C Semantic Web Initiative

- Linked Data - Connect Distributed Data across the Web

- Tim Berners-Lee: Semantic Web Future

Webcast video

- Tim Berners-Lee: The next Web of open, linked data (2009)

- Tim Berners-Lee: The Future of the Web (2006)

References and comments

- John Markoff: Entrepreneurs See a Web Guided by Common Sense. In: New York Times, September 12, 2006

- Robert Tolksdorf: Web 3.0 - the dimension of the future. In: Der Tagesspiegel, August 31, 2007

- Giant Global Graph. Decentralized Information Group (DIG) Breadcrumbs, November 21, 2007, accessed March 10, 2019.

- Tim Berners-Lee, James Hendler, Ora Lassila: The Semantic Web: a new form of Web content that is meaningful to computers will unleash a revolution of new possibilities. In: Scientific American, 284 (5), May 2001, pp. 34–43 (German: My computer understands me. In: Spektrum der Wissenschaft, August 2001, pp. 42–49)

- A revised version of the lectures from 2005 can also be found on the author's website.