RAID

A RAID system is used to organize several physical mass storage devices (usually hard disk drives or solid-state drives) into a logical drive that offers greater reliability or higher data throughput than a single physical storage medium. The term is an acronym for "redundant array of independent disks" (originally "redundant array of inexpensive disks", a reading that was abandoned for marketing reasons).

While most techniques and applications used in computing aim to avoid redundancy (in the form of multiple occurrences of the same data), RAID systems deliberately generate redundant information so that the array as a whole retains its integrity and functionality if individual storage media fail. After the failed component has been replaced, the original state can be restored by a rebuild. Under no circumstances should this redundancy be equated with data backup.

History

The acronym RAID was first defined in 1987 by David Patterson, Garth A. Gibson and Randy H. Katz of the University of California, Berkeley, USA, as an abbreviation for "Redundant Array of Inexpensive Disks". In the paper "A case for redundant arrays of inexpensive disks (RAID)", published for the International Conference on Management of Data (1988, Chicago, Illinois, USA), the authors develop proposals to accelerate slow disk access and to improve the mean time between failures (MTBF). To this end, data was to be stored on many smaller (cheaper) disks instead of a few large (expensive) ones; hence the original reading "Redundant Arrays of Inexpensive Disks", in contrast to the SLEDs (Single Large Expensive Disks) of that time. In their work, the authors investigate operating inexpensive, smaller hard drives (actually accessories for personal computers) together as one larger logical drive in order to save the cost of a large SLED hard drive (mainframe technology), which was disproportionately expensive at the time. The increased risk of failure in such an array was to be countered by storing redundant data. The individual arrangements were designated as RAID levels.

“A case for redundant arrays of inexpensive disks (RAID)”

ABSTRACT: “Increasing performance of CPUs and memories will be squandered if not matched by a similar performance increase in I/O. While the capacity of Single Large Expensive Disks (SLED) has grown rapidly, the performance improvement of SLED has been modest. Redundant Arrays of Inexpensive Disks (RAID), based on the magnetic disk technology developed for personal computers, offers an attractive alternative to SLED, promising improvements of an order of magnitude in performance, reliability, power consumption, and scalability. This paper introduces five levels of RAIDs, giving their relative cost/performance, and compares RAID to an IBM 3380 and a Fujitsu Super Eagle.”

The variants RAID 0 and RAID 6 were coined only later by industry. Since 1992, standardization has been handled by the RAB (RAID Advisory Board), which consists of about 50 manufacturers. Further development of the RAID concept increasingly led to its use in server applications that exploit the increased data throughput and reliability; the cost-saving aspect thus fell away. The ability to swap individual hard drives in such a system during operation corresponds to the expansion common today: Redundant Array of Independent Disks.

Structure and connection - hardware RAID, software RAID

Operating a RAID system requires at least two storage media. These are operated together and form an array that outperforms the individual media in at least one respect. RAID systems can offer the following advantages (although some are mutually exclusive):

- Increased reliability (redundancy)

- Increased data transfer rate (performance)

- Building large logical drives

- Replacement of storage media (also during operation)

- Expansion of storage capacity (also during operation)

- Cost reduction through the use of several small, inexpensive media

The exact way in which the individual storage media interact is specified by the RAID level. The most common RAID levels are RAID 0, RAID 1 and RAID 5; they are described below.

As a rule, RAID implementations only detect the total failure of a medium or errors that the medium itself signals (see e.g. SMART). In principle, many implementations can only detect individual bit errors, not correct them. Since bit errors are now rare and detecting individual errors without being able to correct them is of relatively little use, some implementations today dispense with the additional integrity checks when writing (read after write) or reading (read and compare) and thus offer considerably higher performance in some cases. For example, some RAID 5 implementations today forgo checking plausibility against the parity stripes when reading, and many RAID 1 implementations work the same way. In this way, these systems achieve read throughput otherwise only achieved with RAID 0. In such implementations, the cache of a storage medium is not necessarily deactivated either. Nevertheless, some RAID levels (RAID 2 and, depending on the manufacturer, RAID 6) pay special attention to data integrity and error correction (ECC); the disks' cache memories are consequently deactivated and all possible checks (read after write etc.) are carried out at all times, which can result in considerable performance losses.

From the point of view of the user or an application program, a RAID system does not differ from a single storage medium.

Hardware RAID

One speaks of hardware RAID when the interaction of the storage media is organized by a specially developed hardware module, the RAID controller. The hardware RAID controller is typically located physically close to the storage media. It can be housed in the computer case; in the data center environment in particular, it is often located in its own enclosure, a disk array, which also houses the hard drives. Such external systems are often referred to as DAS, SAN or NAS, although not all of these systems implement RAID. Professional hardware RAID implementations have their own embedded CPUs; they use large additional cache memories and thus offer the highest data throughput while relieving the main processor. Well-designed management and solid manufacturer support provide the best possible support for the system administrator, especially in the event of malfunctions. Simple hardware RAID implementations do not offer these advantages to the same extent and therefore compete directly with software RAID systems.

Host RAID

So-called host RAID implementations are offered in the lower price segment (practically exclusively for IDE/ATA or SATA hard drives). Outwardly, these solutions resemble hardware RAID implementations. They are available as low-cost expansion cards and are often integrated directly into the motherboards of home and personal computers. Most of the time, these implementations are limited to RAID 0 and RAID 1. To keep such non-professional implementations as affordable as possible, they largely do without active components and implement the RAID level in software integrated into the hardware drivers; this software uses the main processor for the necessary computing work and places an additional load on the internal bus systems. It is effectively a software RAID implementation tied to special hardware. This binding to the controller is a significant disadvantage: it makes recovery difficult and carries the risk of data loss if the controller malfunctions. In Linux jargon, such controllers are therefore often called fake RAID (cf. the so-called winmodems or softmodems, which likewise put additional load on the main processor and bus systems).

Software RAID

One speaks of software RAID when the interaction of the hard disks is organized entirely in software. The term host-based RAID is also common, because it is not the storage subsystem but the computer itself that manages the RAID. Most modern operating systems, such as FreeBSD, OpenBSD, Apple macOS, HP HP-UX, IBM AIX, Linux, Microsoft Windows (from Windows NT onward) or Sun Solaris, are capable of this. In this case, the individual hard drives are connected to the computer either via simple hard disk controllers or as external storage devices, such as disk arrays from companies like EMC, Promise, AXUS, Proware or Hitachi Data Systems (HDS). The hard disks are first integrated into the system without a RAID controller as so-called JBODs ("just a bunch of disks"); the RAID functionality is then implemented in software (e.g. under Linux with the mdadm program). A special variant of software RAID are file systems with integrated RAID functionality; one example is RAID-Z, developed by Sun Microsystems.

Pro

The advantage of software RAID is that no special RAID controller is required. Control is handled by the RAID software, which is either already part of the operating system or installed later. This advantage is particularly useful in disaster recovery, when the RAID controller is defective and no longer available. Practically all currently available software RAID systems lay out the hard drives in such a way that they can be read without the specific software.

Contra

With software RAID, hard disk access places a greater load on both the computer's main processor and the system buses (such as PCI) than with hardware RAID. With weak CPUs and bus systems, this significantly reduces system performance; on powerful, underutilized systems it is irrelevant. Storage servers are often not fully utilized in practice; on such systems, software RAID implementations may even be faster than hardware RAID.

Another disadvantage is that many software RAID implementations cannot use a cache whose contents survive a power failure, as hardware RAID controllers with a battery backup unit can. This problem can be avoided with an uninterruptible power supply for the entire PC. To minimize the risk of data loss and data integrity errors in the event of a power failure or system crash, the (write) caches of the hard disks should also be deactivated.

Since the disks of a software RAID can in principle also be addressed individually, there is a risk with mirrored hard disks that changes are made to only one disk. This can happen, for example, if after an operating system update the RAID software or the driver for a RAID hard disk controller no longer works, but one of the mirrored hard drives can still be addressed via a generic SATA driver. Corresponding warnings or error messages during booting should therefore not be ignored just because the system works anyway. Exceptions are software RAIDs with data integrity protection, such as ZFS: incomplete writes are rolled back, and incorrect mirror data is detected and replaced with correct mirror data. In this case, an error message will probably be issued on reading, because the faulty or outdated mirror copy does not match the current block.

Software RAID and storage servers

With an approach similar to software RAID, (logical) volumes provided by different storage servers can also be mirrored on the application server side. This can be useful in high-availability scenarios because it makes you independent of the cluster logic of the storage servers, which is often missing, takes different approaches or is manufacturer-specific and therefore cannot be used in mixed environments. However, the host operating system must provide the appropriate features (e.g. through GlusterFS, the Logical Volume Manager or NTFS). Such storage servers are usually redundant in themselves. An overarching cluster mainly protects against the failure of an entire server or computer room (power failure, water damage, fire, etc.). A simple mirror, comparable to RAID 1, is sufficient here; see also the main article Storage Area Network.

Problems

Resizing

There are basically two ways to resize a RAID: either the existing RAID is kept and adapted, or the data is saved elsewhere, the RAID is set up again in the desired size/level and the saved data is restored. The latter, trivial case is not discussed further below; the focus is on changing the size of an existing RAID system.

It is usually not possible to downsize an existing RAID system.

As for enlargement, there is generally no guarantee that an existing RAID system can be expanded by adding more hard drives. This applies to both hardware and software RAIDs. The option is only available if the manufacturer explicitly provides for such an expansion. It is usually quite time-consuming, since changing the number of drives changes the organization of all data and parity information, so the physical layout has to be restructured. Furthermore, the operating system and the file system must be able to incorporate the new disk space.
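To illustrate why adding a drive forces the data to be restructured, the following Python sketch maps chunks to (disk, stripe) positions using plain round-robin striping and counts how many chunks end up at a different position when the drive count changes. It is a simplified model under stated assumptions: no parity, chunks numbered from 0, and a hypothetical `position` helper; real implementations use their own on-disk layouts.

```python
def position(chunk: int, disks: int) -> tuple[int, int]:
    """Return (disk, stripe) for a chunk under simple round-robin striping."""
    return chunk % disks, chunk // disks


def moved_fraction(chunks: int, old_disks: int, new_disks: int) -> float:
    """Fraction of the first `chunks` chunks whose position changes on reshape."""
    moved = sum(
        1
        for c in range(chunks)
        if position(c, old_disks) != position(c, new_disks)
    )
    return moved / chunks


if __name__ == "__main__":
    # Growing from 3 to 4 disks: only the chunks of the first stripe stay put.
    print(moved_fraction(1200, 3, 4))
```

In this model only the three chunks of the very first stripe keep their place; every other chunk has to be read and rewritten, which is why an expansion touches practically the whole array.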

A RAID expansion requires a new disk that is at least the size of the smallest disk already in use.

Replacement

For example, if a RAID array has to be rebuilt after a disk failure, you need a hard disk that is at least as large as the failed one. This can be problematic when using, for example, disks of the maximum available size. If a disk type is only temporarily unavailable and the available alternative disks are just one byte smaller, the RAID array can no longer simply be restored. As a precaution, some manufacturers (e.g. HP or Compaq) therefore use disks with modified firmware that deliberately makes the disk slightly smaller. This ensures that disks from other manufacturers, with adapted firmware, can also be set to the size used by the RAID array. Another approach taken by some RAID controller manufacturers is to trim the disk capacity slightly when setting up the array, so that disks of approximately the same capacity from different series or manufacturers can be used without problems. Whether a controller supports this function should, however, be checked before setting up an array, since subsequent size changes are usually not possible. Some RAID implementations leave it to the user to keep some disk space unused. In that case, and of course also with software RAID, it is advisable to reserve a small amount of disk space from the start, in case of a model change, and not to use it. For this reason, hard disks of maximum size for which there is only one manufacturer should be used with care in redundant RAID systems.
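The capacity-trimming approach can be sketched as rounding each disk's raw capacity down to a fixed boundary before it is used in the array. The Python sketch below assumes a 1 GiB boundary and uses made-up byte counts for two nominal 2 TB disks that differ by a single 512-byte sector; actual controllers choose their own granularity, and the function names are illustrative.

```python
GIB = 1024 ** 3


def trimmed_capacity(raw_bytes: int, granularity: int = GIB) -> int:
    """Round a disk's raw capacity down to a multiple of `granularity`."""
    return (raw_bytes // granularity) * granularity


def fits_as_replacement(array_member_bytes: int, candidate_bytes: int) -> bool:
    """A replacement must be at least as large as the capacity the array uses."""
    return candidate_bytes >= array_member_bytes


original = 2_000_398_934_016      # hypothetical raw size of the failed disk
replacement = original - 512      # hypothetical replacement, one sector smaller

print(fits_as_replacement(original, replacement))  # untrimmed: False
print(fits_as_replacement(trimmed_capacity(original),
                          trimmed_capacity(replacement)))  # trimmed: True
```

Both disks round down to the same 1,863 GiB, so the slightly smaller model can stand in for the original; the price is that the few megabytes above the boundary are never used.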

Defective controller

Hardware such as a RAID controller can also become defective. This can be a problem, especially if no identical replacement controller is available. As a rule, an intact disk set can only be operated on the same controller or controller family on which it was created. It often happens (especially with older systems) that only exactly the same controller (hardware + firmware) can address the disk set without data loss. If in doubt, ask the manufacturer. For the same reason, controllers from unknown manufacturers, as well as onboard RAID systems, should be used with caution. In any case, make sure that an easy-to-configure, suitable replacement will still be available years later.

Under Linux, the dmraid tool can help under certain circumstances: it allows the operating system to read the disk sets of some IDE/SATA RAID controllers (Adaptec HostRAID ASR, Highpoint HPT37X, Highpoint HPT45X, Intel Software RAID, JMicron JMB36x, LSI Logic MegaRAID, Nvidia NForce, Promise FastTrack, Silicon Image Medley, SNIA DDF1, VIA software RAID and compatibles) directly.

Defective production batches

Like other products, hard drives can be produced in defective batches. If such disks reach the end user and end up in a RAID system, these serial defects can manifest at around the same time and lead to multiple failures, i.e. the simultaneous failure of several hard drives. Such multiple failures can then usually only be remedied by restoring data backups. As a precaution, diversity strategies can be used: a RAID array can be built from disks of equivalent performance from several manufacturers. Note, however, that disk sizes can vary slightly and that the maximum array size may be determined by the smallest disk.

Statistical error rate for large hard drives

A hidden problem lies in the interaction between the array size and the statistical error probability of the drives used. Hard drive manufacturers specify a probability of unrecoverable read errors (URE) for their drives. The URE value is an average that is guaranteed within the warranty period; it increases with age and wear. For simple consumer drives (IDE, SATA), manufacturers typically guarantee URE values of at most $10^{-14}$ (at most one faulty bit per $10^{14}$ bits read); for server drives (SCSI, SAS), the value usually lies between $10^{-15}$ and $10^{-16}$. For consumer drives, this means that at most one URE may occur while processing $10^{14}$ bits (around 12 TB). For example, if an array consists of eight 2 TB hard disks, the manufacturer only guarantees that, statistically speaking, the rebuild will complete without a URE in at least one out of three cases, even though all drives function correctly according to the manufacturer's specifications. This is hardly a problem for small RAID systems: if only UREs are considered, the rebuild of a RAID 5 array of three 500 GB consumer drives (1 TB of user data) succeeds on average in 92 out of 100 cases. For this reason, with large arrays, practically all simple redundant RAID methods (RAID 3, 4, 5 etc.) except RAID 1 and RAID 2 are already reaching their limits. The problem can of course be alleviated with higher-quality disks, but also with combined RAID levels such as RAID 10 or RAID 50. In reality, the risk of a URE-based error actually occurring is far lower, because the manufacturer's specifications are only guaranteed maximum values. Nevertheless, the use of high-quality drives with error rates in the server-drive range ($10^{-15}$ or better) is particularly advisable for professional systems.
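The rebuild figures above can be reproduced with a simple model, sketched here in Python: assume every bit read fails independently at the specified maximum URE rate $r$ and that a rebuild must read all surviving disks completely. The probability of finishing without a URE is then $(1-r)^{b}$ for $b$ bits read. The function name and the decimal units (1 TB = $10^{12}$ bytes) are assumptions of this sketch.

```python
import math


def p_rebuild_without_ure(bytes_read: float, ure_rate: float = 1e-14) -> float:
    """Probability that reading `bytes_read` bytes hits no unrecoverable error.

    Assumes independent bit errors at the (worst-case) specified rate.
    """
    bits = bytes_read * 8
    # log1p keeps precision for tiny rates: (1 - r)**bits == exp(bits * log(1 - r))
    return math.exp(bits * math.log1p(-ure_rate))


TB = 10 ** 12
# RAID 5 of three 500 GB disks: the rebuild reads the two survivors (1 TB).
small = p_rebuild_without_ure(2 * 0.5 * TB)
# Eight 2 TB disks: the rebuild reads the seven survivors (14 TB).
large = p_rebuild_without_ure(7 * 2 * TB)
print(f"{small:.2f} {large:.2f}")
```

This prints roughly 0.92 and 0.33, matching the "92 out of 100" and "one in three" figures; since the specified rates are upper bounds, real-world success rates are usually higher.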

Rebuild

The process of rebuilding a RAID array is called a rebuild. It is necessary if one or more hard drives (depending on the RAID level) in the RAID system have failed or been removed and then replaced with new ones. Since the new hard disks do not yet contain any data, the missing data must be written to them using the user data or parity data that are still available. Regardless of the configuration of the RAID system, a rebuild always means a higher load on the hardware components involved.

A rebuild can take more than 24 hours, depending on the RAID level and the number and size of the disks. Because the disks in an array work under the same operating conditions and are of the same age, disks from the same batch also have a similar life and failure expectancy. To avoid further disk failures during a rebuild, hard disks from different manufacturing batches should therefore be used for a RAID.
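A rough lower bound on rebuild time is the capacity of the replacement disk divided by the sustained rebuild rate. The Python sketch below uses assumed example figures (a 10 TB disk, 100 MB/s) and a hypothetical `share_for_rebuild` factor for the bandwidth left over while the array keeps serving requests; it ignores parity computation and controller overhead.

```python
def rebuild_hours(disk_bytes: float, rate_bytes_per_s: float,
                  share_for_rebuild: float = 1.0) -> float:
    """Hours needed to write a full replacement disk at a given rebuild rate."""
    return disk_bytes / (rate_bytes_per_s * share_for_rebuild) / 3600


print(round(rebuild_hours(10e12, 100e6), 1))        # idle array
print(round(rebuild_hours(10e12, 100e6, 0.5), 1))   # only half the bandwidth available
```

Even on an otherwise idle array this comes to about 28 hours, and it roughly doubles if production traffic takes half of the disk bandwidth.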

Depending on the controller used, an automatic rebuild can take place using hot spare disks assigned to the RAID system. However, not every controller or software solution can make use of one or more hot spare disks. Hot spares are dormant during normal operation. As soon as the controller detects a defective hard disk and removes it from the RAID array, one of the hot spare disks is added to the array and the rebuild starts automatically. In general, however, neither controllers nor software solutions regularly check the hot spare disk(s) for availability and read/write functionality.

In light of the problems described above, the rebuild state of a system deserves a fresh look. The original meaning of "RAID" (see the explanation at the beginning of the article) and its priority, "fail-safety through redundancy", is increasingly shifting in practice toward a system whose priority is "maximizing available storage space through an array of cheap hard disks of maximum capacity". The rebuild, formerly a short-lived operating state in which available hot spare disks could restore normal operation very quickly, is turning into an emergency scenario that lasts for days. The relatively long period under maximum load considerably increases the risk of further hardware failures or other malfunctions. A further increase in redundancy, especially for larger disk arrays, e.g. by using RAID 6, ZFS RAID-Z3 or even an additional mirroring of the entire disk array (-> GlusterFS, Ceph etc.), seems advisable in such scenarios.

The common RAID levels in detail

The most common RAID levels are RAID levels 0, 1 and 5.

Given three disks of 1 TB each, each with a failure probability of 1% in a given period, the following applies:

- RAID 0 provides 3 TB. The probability of failure of the RAID is 2.9701% (1 in 34 cases).

- RAID 1 provides 1 TB. The probability of failure of the RAID is 0.0001% (1 in 1,000,000 cases).

- RAID 5 provides 2 TB. The probability of failure of the RAID is 0.0298% (1 in 3,356 cases).
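The three percentages can be checked with the formulas for independent, identically distributed disk failures: RAID 0 fails if any disk fails, RAID 1 only if all disks fail, and RAID 5 if at least two disks fail. A minimal Python sketch, assuming that independence model (the function names are illustrative):

```python
def p_fail_raid0(p: float, disks: int) -> float:
    """Any single failure destroys the stripe set."""
    return 1 - (1 - p) ** disks


def p_fail_raid1(p: float, disks: int) -> float:
    """Data is lost only if every mirror fails."""
    return p ** disks


def p_fail_raid5(p: float, disks: int) -> float:
    """Data is lost if two or more disks fail (zero or one failure is survivable)."""
    none_fail = (1 - p) ** disks
    exactly_one = disks * p * (1 - p) ** (disks - 1)
    return 1 - none_fail - exactly_one


for name, f in [("RAID 0", p_fail_raid0), ("RAID 1", p_fail_raid1), ("RAID 5", p_fail_raid5)]:
    print(f"{name}: {f(0.01, 3):.4%}")
```

With $p = 0.01$ and three disks this reproduces the 2.9701%, 0.0001% and 0.0298% listed above.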

Technically, this behavior is achieved as follows:

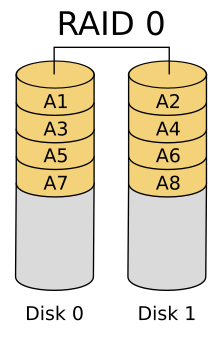

RAID 0: Striping - Acceleration without redundancy

RAID 0 lacks redundancy, so strictly speaking it does not belong to the RAID systems; it is just a fast "array of independent disks".

RAID 0 offers increased transfer rates by dividing the hard disks involved into contiguous blocks of equal size and interleaving these blocks, zipper fashion, into one large logical disk. This means that accesses to all disks can be carried out in parallel (striping, from stripe, i.e. "to divide into strips"). The increase in data throughput (with sequential accesses, but especially with sufficiently high concurrency) is based on the fact that the necessary hard disk accesses can be processed in parallel to a greater extent. The size of the data blocks is known as the striping granularity (also stripe size, chunk size or interlace size). A chunk size of 64 kB is usually chosen for RAID 0.
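The zipper-like arrangement amounts to a simple address calculation. The Python sketch below maps a logical block number to a disk and a block offset on that disk; it assumes 512-byte blocks and the 64 kB chunk size mentioned above (128 blocks per chunk), and the name `raid0_locate` is illustrative.

```python
BLOCK_SIZE = 512                        # assumed bytes per block
CHUNK_BLOCKS = 64 * 1024 // BLOCK_SIZE  # 64 kB chunk = 128 blocks


def raid0_locate(logical_block: int, disks: int,
                 chunk_blocks: int = CHUNK_BLOCKS) -> tuple[int, int]:
    """Return (disk index, block offset on that disk) for a RAID 0 array."""
    chunk, within = divmod(logical_block, chunk_blocks)
    disk = chunk % disks           # chunks are dealt out round-robin
    stripe = chunk // disks        # complete rounds across all disks so far
    return disk, stripe * chunk_blocks + within


# Consecutive chunks land on consecutive disks, so a long sequential read
# keeps all three disks busy at once.
for lb in (0, 128, 256, 384, 130):
    print(lb, raid0_locate(lb, disks=3))
```

Because neighboring chunks live on different disks, a request spanning several chunks can be split into independent per-disk requests that run in parallel.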

However, if one of the hard disks fails completely due to a defect, the RAID controller can no longer fully reconstruct the user data without that disk's share. Partial recovery may be possible, namely for precisely those files that are stored only on the remaining hard drives, which is typically the case only for small files and is more likely with a coarse striping granularity. (By comparison, using a separate file system per hard disk would keep the remaining media and their file systems fully usable if a single storage medium failed, whereas the complete failure of a single, correspondingly larger storage medium would result in the loss of all data.) RAID 0 is therefore only recommended for applications in which failure safety is of little importance. RAID 0 can also be recommended where accesses are predominantly reads (while modifying accesses are also carried out redundantly on another medium by suitable procedures). The unavoidable interruption of operation caused by the failure of any single hard disk in a simple RAID 0 should be taken into account when planning.

Typical uses of this kind of array are therefore applications in which particularly large amounts of data must be read in a short time, such as music or video playback and the occasional recording of such data.

The probability of failure of a RAID 0 of $n$ hard drives in a given period is $1-(1-p)^n$, where $p$ is the failure probability of a single drive. This only holds under the assumption that the failure probability of a hard drive is statistically independent of the other hard drives and identical for all of them.

A special form is a hybrid RAID 0 combination of an SSD and a conventional hard disk (see also Fusion Drive under OS X), with the SSD serving as a large cache for the conventional hard disk. This does not produce a true RAID 0, however, because the data on both drives can still be read separately after the drives have been separated.

RAID 1: Mirroring

RAID 1 is the combination of at least two hard drives. A RAID 1 stores the same data (mirroring) on all hard disks and thus offers full redundancy. The capacity of the array is at most as large as the smallest hard disk involved.

A major advantage of RAID 1 over all other RAID methods is its simplicity. Both disks are written identically and contain all the data of a system, so (given suitable hardware) each disk can normally be operated and used directly in either of two independent computers (internally or in an external enclosure). Complex rebuilds are only necessary if the disks are to be operated redundantly again. In the event of a breakdown, as well as for migrations or upgrades, this is an enormous advantage.

If one of the mirrored disks fails, any other can continue to deliver all data. This is indispensable, especially in safety-critical real-time systems. RAID 1 offers a high level of failure safety, because total data loss only occurs if all disks fail. The probability of failure of a RAID 1 of $n$ hard drives in a given period is $p^n$, where $p$ is the failure probability of a single drive, provided that this probability is statistically independent of the other hard drives and identical for all of them.

For historical reasons, a distinction is made between mirroring (all hard disks on the same controller) and duplexing (a separate controller for each hard disk). Today this only matters when considering the single point of failure: compared to mechanically stressed components (i.e. hard drives), hard disk controllers fail relatively rarely, so the risk of a controller failure is often tolerated because of its low probability.

To increase read performance, a RAID 1 system can access more than one hard disk when reading and read different sectors from different disks simultaneously. In a system with two hard disks, this can double the read performance. The read characteristics then correspond to those of a RAID 0 system. However, not all controllers or software implementations offer this function. It increases the read speed of the system enormously, but at the expense of security: such an implementation protects against a complete data carrier failure, but not against problems with defective sectors, at least if these only appear after the data was written (read after write verify).

To increase security, a RAID 1 system can instead always read from more than one hard drive and compare the returned data streams. If there are any discrepancies, an error message is issued, because the mirror is no longer consistent. Only a few controllers offer this function, and it also slightly reduces the speed of the system.
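The two read strategies described above can be contrasted in a toy model where each "disk" is an in-memory byte buffer: a balanced read alternates between mirrors (faster, but returns whatever that copy holds), while a verified read fetches all copies and compares them. This Python sketch is illustrative only; the class and method names are hypothetical.

```python
class Mirror:
    """Toy RAID 1: every write goes to all copies."""

    def __init__(self, disks: int, size: int) -> None:
        self.copies = [bytearray(size) for _ in range(disks)]
        self._next = 0  # round-robin pointer for balanced reads

    def write(self, offset: int, data: bytes) -> None:
        for copy in self.copies:
            copy[offset:offset + len(data)] = data

    def read_balanced(self, offset: int, length: int) -> bytes:
        """Read from one copy, alternating copies to spread the load."""
        copy = self.copies[self._next]
        self._next = (self._next + 1) % len(self.copies)
        return bytes(copy[offset:offset + length])

    def read_verified(self, offset: int, length: int) -> bytes:
        """Read every copy and raise if they disagree."""
        views = [bytes(c[offset:offset + length]) for c in self.copies]
        if any(v != views[0] for v in views[1:]):
            raise OSError(f"mirror mismatch at offset {offset}")
        return views[0]


m = Mirror(disks=2, size=16)
m.write(0, b"data")
m.copies[1][0] = 0  # simulate a silently corrupted sector on the second disk
print(m.read_balanced(0, 4), m.read_balanced(0, 4))  # second read returns bad data
try:
    m.read_verified(0, 4)
except OSError as err:
    print(err)
```

The balanced reader hands out the corrupted copy without noticing, while the verifying reader detects the mismatch at the cost of reading every disk.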

A mirror disk is not a substitute for data backup, since accidental or incorrect write operations (viruses, power failures, user errors) are immediately transferred to the mirror disk. This applies in particular to write operations of programs that did not complete (e.g. database update transactions without a logging system that were aborted by a power failure); these can result not only in damage to the mirror, but also in an inconsistent data state despite an intact mirror. Data backups and transaction logs provide a remedy here.

A special form is a hybrid RAID 1 combination of SSD and conventional hard disk, which combines the advantages of an SSD (read speed) with redundancy.

In practice, however, the entire array is heavily loaded during the rebuild process, so that further failures are to be expected with a higher probability during this period.

RAID 5: performance + parity, block-level striping with distributed parity information

RAID 5 implements block-level striping with distributed parity information. To calculate the parity, a logical group is formed from the data blocks at the same address on each of the hard disks involved. One block in each group contains the parity data, while the other blocks contain user data. As with RAID 0, the user data of RAID 5 is distributed across all hard drives. However, the parity information is not concentrated on one disk as in RAID 4, but is distributed as well.

RAID 5 offers both increased read throughput and redundancy at relatively low cost, which makes it a very popular RAID variant. In write-intensive environments with small, unrelated changes, RAID 5 is not recommended, since throughput decreases significantly with random writes because of the two-phase write process. In such cases, a RAID 01 configuration would be preferable. RAID 5 is one of the most cost-effective ways of storing data redundantly on multiple hard drives while using the storage space efficiently. With only a few disks, however, this advantage can be eroded by high controller prices, so that in some situations a RAID 10 can be cheaper.

The total usable capacity is calculated as (number of hard drives − 1) × capacity of the smallest hard drive. Example with four 1 TB hard drives: (4 − 1) × 1 TB = 3 TB of user data and 1 TB of parity.
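The capacity formula, together with the corresponding rules for RAID 0 and RAID 1 from the three-disk example earlier, can be written as a small Python function. It is a sketch that assumes every member is limited to the size of the smallest disk; real implementations may handle mixed disk sizes differently, and the function name is illustrative.

```python
def usable_capacity(level: int, sizes: list[float]) -> float:
    """Usable capacity of a RAID 0, 1 or 5 array, limited by the smallest disk."""
    n, smallest = len(sizes), min(sizes)
    if level == 0:
        return n * smallest          # striping, no redundancy
    if level == 1:
        return smallest              # every disk holds a full copy
    if level == 5:
        return (n - 1) * smallest    # one disk's worth of parity
    raise ValueError(f"unsupported RAID level {level}")


print(usable_capacity(5, [1, 1, 1, 1]))   # four 1 TB disks -> 3 TB of user data
```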

Since a RAID 5 only fails if at least two disks fail at the same time, a RAID 5 with $n+1$ hard disks has a theoretical failure probability of $1-(1-p)^{n+1}-(n+1)\,p\,(1-p)^{n}$, where $p$ is the failure probability of a single disk, provided this probability is statistically independent of the other hard disks and identical for all of them. In practice, however, the entire array is heavily loaded during the rebuild process, so that further failures are to be expected with a higher probability during this period.

The parity data of a parity block is calculated by XORing the data of all data blocks in its group, which leads to a slight to considerable reduction in data transfer rate compared to RAID 0. Since the parity information is not required when reading, all disks are available for parallel access. This (theoretical) advantage does not apply to small files without concurrent access; a noticeable acceleration only occurs with larger files or suitable concurrency. With n hard disks, a write access requires either a volume that fills exactly (n − 1) corresponding data blocks or a two-phase process (read the old data; write the new data).
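Parity generation and the reconstruction of a lost block both reduce to byte-wise XOR. A minimal Python sketch with three tiny example data blocks (the block contents and the helper name `xor_blocks` are made up for illustration):

```python
def xor_blocks(blocks: list[bytes]) -> bytes:
    """Byte-wise XOR of equally sized blocks."""
    out = bytearray(len(blocks[0]))
    for block in blocks:
        for i, byte in enumerate(block):
            out[i] ^= byte
    return bytes(out)


data = [b"\x01\x02", b"\x10\x20", b"\xff\x00"]  # one group of data blocks
parity = xor_blocks(data)                       # stored on this group's parity disk

# If the disk holding data[1] fails, XOR of everything that is left restores it.
recovered = xor_blocks([data[0], data[2], parity])
print(parity.hex(), recovered == data[1])
```

This prints `ee22 True`: because $x \oplus x = 0$, XOR-ing the parity with all surviving blocks cancels them out and leaves exactly the missing block.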

More recent RAID implementations calculate the new parity information for a write access not by XORing the data of all corresponding data blocks, but by XORing the old and new data values with the old parity value. In other words: whenever a data bit changes its value, the corresponding parity bit changes its value too. The result is mathematically identical, but only two read accesses are required (to the old data and the old parity) instead of n − 2 read accesses to the other data blocks as before. This allows larger RAID 5 arrays to be built without a drop in performance, for example with n = 8. Combined with write caches, this achieves data throughput similar to RAID 1 or RAID 10 at lower hardware cost. Storage servers are therefore usually divided into RAID 5 arrays, if classic RAID methods are used at all.
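The equivalence of the two update rules is easy to check: XOR-ing the old parity with the old and new data gives the same result as recomputing the parity over the whole group. A Python sketch with arbitrary example blocks (helper names are illustrative):

```python
from functools import reduce


def xor2(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equally sized blocks."""
    return bytes(x ^ y for x, y in zip(a, b))


def full_parity(blocks: list[bytes]) -> bytes:
    """Classic method: XOR of all data blocks in the group."""
    return reduce(xor2, blocks)


def updated_parity(old_parity: bytes, old_data: bytes, new_data: bytes) -> bytes:
    """Read-modify-write: only the old data block and the old parity are read."""
    return xor2(xor2(old_parity, old_data), new_data)


group = [b"\x0f\xf0", b"\x33\x55", b"\xaa\x01"]
parity = full_parity(group)

new_block = b"\x12\x34"                       # overwrite group[1]
fast = updated_parity(parity, group[1], new_block)
group[1] = new_block
print(fast == full_parity(group))
```

This prints `True`. The shortcut works because XOR-ing the old data into the parity removes its contribution, and XOR-ing the new data adds the replacement, so the cost stays at two reads regardless of the group size.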

With RAID 5, the data integrity of the array is guaranteed as long as at most one disk fails. After a hard disk failure, or during the rebuild onto the hot spare disk (or after replacing the defective disk), performance drops significantly: when reading, every (n − 1)th data block must be reconstructed; when writing, every (n − 1)th data block can only be written by reading the corresponding areas of all corresponding data blocks and then writing the parity; on top of this come the accesses of the rebuild itself ((n − 1) × read; 1 × write). In the rebuild procedure, the time needed to calculate the parity is therefore negligible; compared to RAID 1, the process takes only insignificantly longer and, relative to the volume of user data, requires only the (n − 1)th part of the write accesses.

A relatively new method for improving rebuild performance, and thus reliability, is preemptive RAID 5. The disks' internal error-correction statistics are used to predict failures (see SMART). As a precaution, the hot spare disk is synchronized with the entire contents of the most suspect disk in the array so that it can take over immediately at the predicted time of failure. With less overhead, the procedure achieves a level of reliability similar to RAID 6 and other dual-parity implementations. However, because of the high implementation effort, preemptive RAID 5 has so far only been implemented in a few high-end storage systems with server-based controllers. In addition, a study by Google (February 2007) shows that SMART data is of limited use in predicting the failure of an individual hard drive.

Influence of the number of hard drives

Configurations with 3 or 5 hard disks are often found in RAID 5 systems. This is no coincidence, because the number of disks influences the write performance.

Influence on the read performance

Read performance is largely determined by the number of hard disks, but also by cache sizes; more is always better here.

Influence on the write performance

In contrast to read performance, determining the write performance of RAID 5 is considerably more complicated and depends both on the amount of data to be written and on the number of disks. For hard disks smaller than 2 TB, the atomic block size (also called sector size) is often 512 bytes (see hard disk drive). Assuming in addition a RAID 5 array of 5 disks (4/5 data and 1/5 parity), the following scenario results: if an application writes 2,048 bytes, then in this favorable case exactly one 512-byte block is written to each of the 5 disks, one of which contains no user data. Compared to RAID 0 with 5 disks, this yields an efficiency of 80% (with a 3-disk RAID 5 it would be about 67%). If an application writes only a single 512-byte block, the less favorable case applies: the block to be changed and the parity block must first be read, then the new parity block is calculated, and only then can both 512-byte blocks be written. That means 2 read accesses and 2 write accesses to store one block. If one assumes, in simplified form, that reading and writing take the same time, the efficiency in this worst case, the so-called RAID 5 write penalty, is only 25%. In practice, however, this worst case will hardly ever occur with a 5-disk RAID 5, because file systems often use block sizes of 2 kB, 4 kB or more and therefore practically only show the best-case write behavior. The same applies to RAID 5 with 3 disks. A RAID 5 with 4 disks (3/4 data and 1/4 parity), on the other hand, behaves differently: if a block of 2,048 bytes is to be written, two write operations are necessary; 1,536 bytes are written with best-case performance and the remaining 512 bytes with worst-case behavior.
Cache strategies counteract this worst-case behavior; nevertheless, with RAID 5 a ratio of two, four or even eight disks for user data plus one disk for parity data should be maintained. RAID 5 systems with 3, 5 or 9 disks therefore show particularly favorable performance.
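The efficiency figures above can be reproduced with a deliberately simple cost model. It assumes 512-byte sectors, writes that start at a stripe boundary, no cache, equal cost for reads and writes, and read-modify-write (k + 1 reads and k + 1 writes) for a leftover partial stripe of k blocks. Real controllers may choose other strategies, so treat this as an illustration, not a performance predictor.

```python
from fractions import Fraction

SECTOR = 512  # assumed atomic block size in bytes


def raid5_write_cost(disks: int, write_bytes: int) -> tuple[int, int]:
    """Return (data_blocks, disk_ios) for one stripe-aligned write (simplified model)."""
    data_disks = disks - 1
    blocks = -(-write_bytes // SECTOR)            # round up to whole sectors
    full_stripes, rest = divmod(blocks, data_disks)
    ios = full_stripes * disks                    # full stripe: data + parity written directly
    if rest:
        ios += 2 * (rest + 1)                     # read k old blocks + parity, write k + parity
    return blocks, ios


def efficiency(disks: int, write_bytes: int) -> Fraction:
    blocks, ios = raid5_write_cost(disks, write_bytes)
    return Fraction(blocks, ios)


for n in (3, 4, 5):
    print(f"{n} disks, 2048-byte write: {efficiency(n, 2048)}")
print(f"5 disks, 512-byte write: {efficiency(5, 512)}")
```

Under these assumptions the model gives 4/5 for five disks, 2/3 for three disks and 1/4 for a single 512-byte write; a 2,048-byte write on four disks drops to 1/2 because one block falls into a partial stripe.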

RAID levels that are rare or obsolete

RAID 2: Bit-level striping with Hamming code-based error correction

RAID 2 no longer plays a role in practice; it was only used on mainframes. The data is broken down into bit sequences of a fixed size and mapped to larger bit sequences using a Hamming code (for example, 4 data bits and 3 ECC bits in a Hamming(7,4) code). The individual bits of each Hamming code word are then distributed across individual disks, which in principle allows high throughput. A disadvantage, however, is that the number of disks has to be an integral multiple of the Hamming code word length if the error-correcting properties of the Hamming code are to be exploited (this requirement arises when a bit error in the Hamming code is regarded as analogous to a disk failure in RAID 2).
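To illustrate the principle, here is a sketch using the Hamming(7,4) code (an assumption; real RAID 2 systems used other code word lengths): each bit position of a code word is stored on its own disk, and a single faulty disk is corrected from the syndrome. Unlike a real array, which usually knows which disk has failed, this decoder also locates the error itself.

```python
def hamming74_encode(d1: int, d2: int, d3: int, d4: int) -> list[int]:
    """Encode 4 data bits into a 7-bit Hamming code word (positions 1..7)."""
    p1 = d1 ^ d2 ^ d4  # covers positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # covers positions 2, 3, 6, 7
    p4 = d2 ^ d3 ^ d4  # covers positions 4, 5, 6, 7
    return [p1, p2, d1, p4, d2, d3, d4]


def hamming74_decode(word: list[int]) -> tuple[int, int, int, int]:
    """Correct up to one flipped bit and return the 4 data bits."""
    word = list(word)
    syndrome = 0
    for pos, bit in enumerate(word, start=1):
        if bit:
            syndrome ^= pos  # XOR of the positions of all 1 bits
    if syndrome:             # non-zero syndrome = position of the faulty bit
        word[syndrome - 1] ^= 1
    return word[2], word[4], word[5], word[6]


# RAID 2 idea: bit i of every code word is stored on disk i (7 disks here)
disks = [[] for _ in range(7)]
nibbles = [(1, 0, 1, 1), (0, 1, 1, 0)]
for nib in nibbles:
    for i, bit in enumerate(hamming74_encode(*nib)):
        disks[i].append(bit)

# Simulate a faulty disk 5 (index 4) that returns inverted bits
disks[4] = [bit ^ 1 for bit in disks[4]]

recovered = [hamming74_decode([disk[j] for disk in disks]) for j in range(len(nibbles))]
assert recovered == nibbles
```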

The smallest RAID 2 array requires three hard disks and corresponds to a RAID 1 with double mirroring (three copies of the data). In real use, RAID 2 arrays therefore mostly had no fewer than ten hard disks.

RAID 3: Byte-level striping with parity information on a separate hard disk

The main idea behind RAID 3 is to gain as much performance as possible, together with redundancy, relative to the purchase price. In RAID 3, the actual user data is stored on one or more data disks. In addition, sum (parity) information is stored on an additional parity disk. For the parity disk, the bits of the data disks are added up and the result is checked for whether it is even or odd; an even sum is recorded on the parity disk as bit value 0, an odd sum as bit value 1. The data disks therefore contain normal user data, while the parity disk contains only the sum information.

Example with two data disks and one parity disk:

| Bits of the data disks | Sum: even or odd | Sum bit on the parity disk |
|---|---|---|
| 0, 0 | sum (0) is even | 0 |
| 0, 1 | sum (1) is odd | 1 |
| 1, 0 | sum (1) is odd | 1 |
| 1, 1 | sum (2) is even | 0 |

If, for example, the bit of the first data disk were lost, it could be calculated from the bit of the second data disk and the sum bit of the parity disk:

| Bits of the data disks & sum bit of the parity disk | Reconstructed value |
|---|---|
| ?, 0 & 0 (i.e. an even sum) | 0 (because the first bit cannot be 1) |
| ?, 1 & 1 (i.e. an odd sum) | 0 (because the first bit cannot be 1) |
| ?, 0 & 1 (i.e. an odd sum) | 1 (because the first bit cannot be 0) |
| ?, 1 & 0 (i.e. an even sum) | 1 (because the first bit cannot be 0) |

Example with three data disks and one parity disk:

| Bits of the data disks | Sum: even or odd | Sum bit on the parity disk |
|---|---|---|
| 0, 0, 0 | sum (0) is even | 0 |
| 1, 0, 0 | sum (1) is odd | 1 |
| 1, 1, 0 | sum (2) is even | 0 |
| 1, 1, 1 | sum (3) is odd | 1 |
| 0, 1, 0 | sum (1) is odd | 1 |

If, for example, the bit of the first data disk were lost, it could be calculated from the bits of the other data disks and the sum bit of the parity disk:

| Bits of the data disks & sum bit of the parity disk | Reconstructed value |
|---|---|
| ?, 0, 0 & 0 (i.e. an even sum) | 0 (because the first bit cannot be 1) |
| ?, 0, 0 & 1 (i.e. an odd sum) | 1 (because the first bit cannot be 0) |
| ?, 1, 0 & 0 (i.e. an even sum) | 1 (because the first bit cannot be 0) |
| ?, 1, 1 & 1 (i.e. an odd sum) | 1 (because the first bit cannot be 0) |
| ?, 1, 0 & 1 (i.e. an odd sum) | 0 (because the first bit cannot be 1) |

In digital logic, this corresponds exactly to the XOR (exclusive-or) operation.
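A short sketch, assuming three data disks and byte-level striping, that first checks the even/odd rule from the tables against XOR and then rebuilds a lost data disk. The function names and the zero-padding of the last row are illustrative choices, not part of any specific RAID 3 product.

```python
from itertools import product


def parity_bit(bits) -> int:
    """Rule from the tables above: 0 for an even sum, 1 for an odd sum."""
    return sum(bits) % 2


def xor_all(values) -> int:
    result = 0
    for value in values:
        result ^= value
    return result


# The even/odd rule and XOR agree for every combination of three data bits
for bits in product((0, 1), repeat=3):
    assert parity_bit(bits) == xor_all(bits)


def stripe_bytes(data: bytes, data_disks: int):
    """Byte-level striping as in RAID 3: byte i goes to data disk i % data_disks;
    the parity disk stores the XOR of each row."""
    padded = data + bytes(-len(data) % data_disks)  # zero-pad the last row
    disks = [bytearray() for _ in range(data_disks)]
    parity = bytearray()
    for start in range(0, len(padded), data_disks):
        row = padded[start:start + data_disks]
        for i, byte in enumerate(row):
            disks[i].append(byte)
        parity.append(xor_all(row))
    return disks, parity


def rebuild_disk(disks, parity, lost: int) -> bytearray:
    """Recompute a lost data disk from the other data disks and the parity disk."""
    others = [d for i, d in enumerate(disks) if i != lost]
    return bytearray(xor_all(column) for column in zip(parity, *others))


disks, parity = stripe_bytes(b"RAID 3 example", data_disks=3)
assert rebuild_disk(disks, parity, lost=0) == disks[0]
```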

The advantage of RAID 3 is that any number of data disks can be used while still needing only a single disk for the parity information. The calculations just presented work equally well with 4, 5 or more data disks (and only one parity disk). This also leads to its greatest disadvantage: the parity disk is needed for every operation, in particular every write operation, and therefore forms the bottleneck of the system.

RAID 3 has since disappeared from the market and has largely been replaced by RAID 5, in which the parity is distributed evenly across all disks. Before the transition to RAID 5, RAID 3 was also partially superseded by RAID 4, in which input/output operations were standardized on larger block sizes for speed reasons.

It should also be noted here that a RAID 3 array of only two hard disks (one data disk + one parity disk) means that the parity disk contains the same bit values as the data disk, which in effect corresponds to a RAID 1 with two hard disks (a data disk + a copy of the data disk).

RAID 4: Block-level striping with parity information on a separate hard disk

As with RAID 3, parity information is calculated and written to a dedicated hard disk. However, the units written are larger blocks of data (stripes or chunks) rather than individual bytes, which RAID 4 has in common with RAID 5.

A disadvantage of classic RAID 4 is that the parity disk is involved in every write operation. As a result, the maximum possible write transfer rate is limited by the transfer rate of the parity disk. Since each write operation always uses one of the data disks plus the parity disk, the parity disk has to perform far more accesses than the data disks. It therefore wears out faster and fails more often.

Because of the fixed parity disk in RAID 4, RAID 5 is almost always preferred instead.
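To make the parity-disk bottleneck concrete, the following simulation counts block writes per disk for random single-block updates on a hypothetical five-disk array, once with a fixed parity disk (RAID 4) and once with rotating parity (RAID 5). The rotation scheme, stripe count and seed are arbitrary assumptions. Only writes are counted, although each update also involves two reads that are skewed in the same way.

```python
import random
from collections import Counter


def parity_disk(stripe: int, disks: int, rotating: bool) -> int:
    """RAID 4: parity always on the last disk; RAID 5 (rotating): parity moves per stripe."""
    return (disks - 1 - stripe) % disks if rotating else disks - 1


def small_write_counts(disks: int, writes: int, rotating: bool, seed: int = 0) -> list[int]:
    """Count block writes per disk for random single-block updates.

    Each update writes one data block and the parity block of its stripe.
    """
    rng = random.Random(seed)
    counts = Counter()
    for _ in range(writes):
        stripe = rng.randrange(1000)
        p = parity_disk(stripe, disks, rotating)
        data_disk = rng.choice([d for d in range(disks) if d != p])
        counts[data_disk] += 1
        counts[p] += 1
    return [counts[d] for d in range(disks)]


print("RAID 4:", small_write_counts(5, 10_000, rotating=False))
print("RAID 5:", small_write_counts(5, 10_000, rotating=True))
```

With a fixed parity disk, that disk receives one write per update (10,000 here) while each data disk sees about a quarter of that; with rotating parity, the load spreads to roughly 4,000 writes per disk.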

An exception is a system design in which read and write operations go through NVRAM. The NVRAM forms a buffer that briefly increases the transfer rate, collects the read and write operations and writes them to the RAID 4 disk system in sequential sections. This reduces the disadvantages of RAID 4 while retaining its advantages.

NetApp uses RAID 4 in its NAS systems; the WAFL file system used there was specially designed for use with RAID 4. Because RAID 4 only works efficiently with sequential write accesses, WAFL converts random writes in the NVRAM cache into sequential ones and records each individual position for later retrieval. When reading, however, the classic fragmentation problem arises: data that belong together are not necessarily stored in physically consecutive blocks if they were subsequently updated or overwritten. The most widespread acceleration of read accesses, cache prefetching, is therefore ineffective. The advantage in writing thus results in a disadvantage in reading, and the file system must be defragmented regularly.

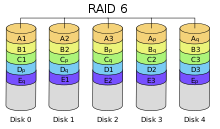

RAID 6: Block-level striping with parity information distributed twice

RAID 6 (offered under various trade names, for example Advanced Data Guarding) works similarly to RAID 5, but can cope with the simultaneous failure of up to two hard disks. Especially when high-capacity SATA/IDE hard disks are used intensively, restoring redundancy after a disk failure can take hours or even days; during this time, RAID 5 offers no protection against a further failure.

RAID 6 implements striping with double parity information distributed at block level. In contrast to RAID 5, there are several possible implementations of RAID 6, which differ in particular in write performance and computing effort. In general, better write performance comes at the price of increased computing effort. In the simplest case, an additional XOR parity is calculated over an orthogonal data line (see graphic); this second parity is also distributed across all disks in rotation. Another RAID 6 implementation calculates over only one data line, but instead of a second parity it produces an additional code that can correct two single-bit errors; this process is computationally more complex. On the subject of multi-bit error correction, see also the Reed-Solomon code.
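The code-based variant can be sketched with the widely described P + Q scheme: P is the plain XOR, Q a weighted sum over the finite field GF(2^8). This sketch assumes the reducing polynomial 0x11d and generator 2 (common choices, but not necessarily those of any particular product) and processes one byte per data disk; a real implementation applies the same arithmetic to every byte of a block.

```python
# Exponent/logarithm tables for GF(2^8), reducing polynomial x^8+x^4+x^3+x^2+1 (0x11d)
EXP = [0] * 510
LOG = [0] * 256
_value = 1
for _power in range(255):
    EXP[_power] = _value
    LOG[_value] = _power
    _value <<= 1
    if _value & 0x100:
        _value ^= 0x11D
for _power in range(255, 510):
    EXP[_power] = EXP[_power - 255]


def gf_mul(a: int, b: int) -> int:
    if a == 0 or b == 0:
        return 0
    return EXP[LOG[a] + LOG[b]]


def gf_div(a: int, b: int) -> int:
    if b == 0:
        raise ZeroDivisionError("division by zero in GF(2^8)")
    if a == 0:
        return 0
    return EXP[(LOG[a] - LOG[b]) % 255]


def compute_pq(data: list[int]) -> tuple[int, int]:
    """P = XOR of all data bytes; Q = XOR of g^i * D_i with generator g = 2."""
    p = q = 0
    for i, byte in enumerate(data):
        p ^= byte
        q ^= gf_mul(EXP[i], byte)
    return p, q


def recover_two(damaged: list, p: int, q: int) -> list[int]:
    """damaged: data bytes with None at exactly two failed positions."""
    x, y = [i for i, byte in enumerate(damaged) if byte is None]
    survivors = [0 if byte is None else byte for byte in damaged]
    p_rest, q_rest = compute_pq(survivors)
    p_xy = p ^ p_rest                      # D_x ^ D_y
    q_xy = q ^ q_rest                      # g^x*D_x ^ g^y*D_y
    d_x = gf_div(q_xy ^ gf_mul(EXP[y], p_xy), EXP[x] ^ EXP[y])
    restored = list(survivors)
    restored[x], restored[y] = d_x, p_xy ^ d_x
    return restored


data = [0x12, 0x34, 0x56, 0x78, 0x9A]     # one byte per data disk
p, q = compute_pq(data)
damaged = [0x12, None, 0x56, None, 0x9A]  # disks 1 and 3 have failed
assert recover_two(damaged, p, q) == data
```

Recovering two disks means solving two equations in two unknowns, which is the "system of equations" that the RAID DP section below contrasts with pure XOR recovery.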

The following applies to all RAID 6 implementations: the performance penalty for write operations (write penalty) is somewhat greater with RAID 6 than with RAID 5, the read performance is lower for the same total number of disks (one user-data disk fewer), and the price per usable gigabyte rises by the cost of one hard disk per RAID array, i.e. on average by around one seventh to one fifth. A RAID 6 array requires at least four hard disks.

RAIDn

RAIDn is a development by Inostor Corp., a subsidiary of Tandberg Data . RAIDn removes the previously rigid definition of the RAID level.

This RAID is defined by the total number of hard disks (n) and the number of hard disks that are allowed to fail without data loss (m) . RAID (n, m) or RAID n + m has become established as the notation.

The characteristics of the RAID can be calculated from these definitions as follows:

- Read speed = n × read speed of a single disk

- Write speed = (n − m) × write speed of a single disk

- Capacity = (n − m) × capacity of a single disk

Some specific definitions have been made as follows:

- RAID (n, 0) corresponds to RAID 0

- RAID (n, n-1) corresponds to RAID 1

- RAID (n, 1) corresponds to RAID 5

- RAID (n, 2) corresponds to RAID 6
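The RAIDn formulas translate directly into code. This is an idealized sketch (the class name `RaidNM` is hypothetical) that, like the formulas above, ignores controller overhead.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RaidNM:
    """RAID (n, m) characteristics per the formulas above (idealized, no overhead)."""
    n: int  # total number of disks
    m: int  # number of disks that may fail without data loss

    def __post_init__(self):
        if not 0 <= self.m < self.n:
            raise ValueError("RAID (n, m) needs 0 <= m < n")

    def read_factor(self) -> int:
        return self.n

    def write_factor(self) -> int:
        return self.n - self.m

    def capacity(self, disk_size: float) -> float:
        return (self.n - self.m) * disk_size


for label, raid in [("RAID 0", RaidNM(4, 0)), ("RAID 1", RaidNM(4, 3)),
                    ("RAID 5", RaidNM(4, 1)), ("RAID 6", RaidNM(4, 2))]:
    print(label, raid, "capacity with 2 TB disks:", raid.capacity(2), "TB")
```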

RAID DP: Block-level striping with double parity information on separate hard drives

RAID DP (double, dual or sometimes diagonal parity) is a version of RAID 4 developed by NetApp. A second parity is calculated using the same formula as the first parity P, but over different data blocks: the first parity is calculated horizontally, the second parity Q diagonally. In addition, the first parity is included in the calculation of the diagonal parity, while each diagonal in turn omits one hard disk. Since any two disk failures can be compensated for in RAID DP, the availability of such a system is higher than with a single-parity solution (e.g. RAID 4 or RAID 5).

RAID-DP sets usually consist of 14 + 2 disks, so the capacity overhead is similarly low as with RAID 4 / RAID 5.

RAID DP simplifies recovery. First, the data of the first failed hard disk is calculated with the diagonal parity and then the content of the second hard disk from the horizontal parity.

In contrast to RAID 6, where a system of equations has to be solved, the arithmetic operations are limited to simple XOR operations. RAID DP can be switched to RAID 4 (and vice versa) at any time by simply switching off (or restoring) the second parity disk. This happens during operation, without copying or restructuring the data already stored.
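The following sketch illustrates row-diagonal parity with pure XOR, assuming the layout as we understand it from the Row-Diagonal Parity paper: a prime P (here 5), P − 1 data disks, one row-parity disk and one diagonal-parity disk, with one of the P diagonals not stored. Recovery repeatedly uses any diagonal or row that has exactly one missing block, which alternates between diagonal and row parity. The names and the choice P = 5 are illustrative.

```python
import random

P = 5            # prime number defining the layout
ROWS = P - 1     # rows per stripe; columns 0..P-2 data, P-1 row parity, P diagonal parity


def build_stripe(data):
    """data: ROWS lists of P-1 data blocks -> full stripe including both parities."""
    grid = [list(row) + [0, 0] for row in data]
    for row in grid:
        for c in range(P - 1):
            row[P - 1] ^= row[c]                 # row (horizontal) parity
    diagonal = [0] * P
    for r in range(ROWS):
        for c in range(P):                       # includes the row-parity column
            diagonal[(r + c) % P] ^= grid[r][c]
    for d in range(P - 1):                       # diagonal P-1 is not stored
        grid[d][P] = diagonal[d]
    return grid


def recover(grid, failed):
    """Rebuild two failed columns among 0..P-1 using XOR only."""
    g = [[None if c in failed else v for c, v in enumerate(row)] for row in grid]
    diagonals = [[(r, (d - r) % P) for r in range(ROWS)] for d in range(P - 1)]
    rows = [[(r, c) for c in range(P)] for r in range(ROWS)]
    missing = sum(v is None for row in g for v in row)
    while missing:
        progress = False
        for index, cells in enumerate(diagonals + rows):
            unknown = [cell for cell in cells if g[cell[0]][cell[1]] is None]
            if len(unknown) != 1:
                continue
            # A stored diagonal XORs to its parity block; a complete row XORs to 0
            value = g[index][P] if index < len(diagonals) else 0
            for r, c in cells:
                if (r, c) != unknown[0]:
                    value ^= g[r][c]
            r0, c0 = unknown[0]
            g[r0][c0] = value
            missing -= 1
            progress = True
        if not progress:
            raise RuntimeError("stripe cannot be recovered")
    return g


rng = random.Random(42)
data = [[rng.randrange(256) for _ in range(P - 1)] for _ in range(ROWS)]
stripe = build_stripe(data)
assert recover(stripe, failed={0, 2}) == stripe
```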

Details on RAID DP can be found in the USENIX publication Row-Diagonal Parity for Double Disk Failure Correction .

RAID DP meets the SNIA RAID 6 definition.

Not actual RAIDs: combining hard disks

NRAID

Strictly speaking, NRAID (Non-RAID: no actual RAID) is not a real RAID, since there is no redundancy. With NRAID (also known as linear mode or concatenation), several hard disks are chained together. The NRAID function offered by some RAID controllers is comparable to the classic approach using a Logical Volume Manager (LVM) rather than to RAID 0, because unlike RAID 0, NRAID does not stripe across multiple disks and therefore provides no gain in data throughput. In return, hard disks of different sizes can be combined without losing capacity (example: a 10 GB and a 30 GB hard disk result in a 40 GB virtual disk in an NRAID, while in a RAID 0 only 20 GB (10 + 10 GB) could be used).

Since linear mode uses no stripes, the first disk in such an array is filled with data first, and the second disk is only used when additional space is required; if that is also insufficient, the next disk (if available) is written to. Consequently, there are two cases when a disk fails: either it does not (yet) contain any data, in which case, depending on how data recovery is implemented, no data may be lost; or the defective disk already contained data, in which case the failure of the single disk also damages the entire array. The lack of striping does make it easier to recover individual unaffected files. In contrast to RAID 0, the failure of a disk therefore does not necessarily lead to a complete loss of data, at least as long as the user data lies entirely on the still functioning disk.
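The difference in address mapping between linear mode and RAID 0 can be shown with a small sketch, assuming the 10 GB and 30 GB example above with one block per GB and a RAID 0 chunk size of one block (both simplifications). The function names are illustrative.

```python
def linear_map(lba: int, disk_sizes: list[int]) -> tuple[int, int]:
    """NRAID / linear mode: fill disk 0 completely, then disk 1, and so on."""
    for disk, size in enumerate(disk_sizes):
        if lba < size:
            return disk, lba
        lba -= size
    raise ValueError("address beyond the end of the concatenated volume")


def raid0_map(lba: int, disk_sizes: list[int]) -> tuple[int, int]:
    """RAID 0 with a chunk size of one block: blocks alternate across the disks,
    so each disk contributes only as much as the smallest disk."""
    n = len(disk_sizes)
    if lba >= n * min(disk_sizes):
        raise ValueError("address beyond the end of the striped volume")
    return lba % n, lba // n


sizes = [10, 30]  # hypothetical disk sizes in GB (one "block" = 1 GB)
print("NRAID capacity:", sum(sizes), "RAID 0 capacity:", len(sizes) * min(sizes))
print([linear_map(b, sizes) for b in (0, 9, 10, 39)])
print([raid0_map(b, sizes) for b in (0, 1, 2, 19)])
```

In linear mode, blocks 0 to 9 live entirely on the first disk, which is why a failure of the second disk leaves them intact; with RAID 0, consecutive blocks alternate between the disks and only 20 blocks are addressable.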

NRAID is not one of the numbered RAID levels, nor does it offer redundancy. But it can be seen as a distant relative of RAID 0: both make a single logical unit out of several hard disks, whose data capacity, with the restrictions mentioned for RAID 0, corresponds to the sum of the capacities of all disks used. Nowadays, controllers sold with the NRAID feature can do this. The disks, which may also differ in size, are simply chained together. In contrast to RAID 0, however, no stripe sets are formed, so there is neither fault tolerance nor a performance gain. The advantage lies essentially in the size of the resulting drive and in a somewhat improved situation during data recovery. One advantage over an LVM solution is that with NRAID it is possible to boot from the array without any problems.

Since most modern operating systems now include a Logical Volume Manager (LVM, sometimes also called a dynamic volume manager), it is often more useful to use it. The LVM integrated into the operating system has practically no measurable performance disadvantages and works independently of special hardware, so it can also combine hard disks of different types (SCSI, SATA, USB, iSCSI, AoE and many more). If a RAID controller fails, there is also no need to look for an identical model; the disks can usually simply be connected to any controller with the same hard disk interface, and the restoration takes place via the respective operating system. However, if the system was booted directly from the combined logical volume (if that is possible at all), recovery can become extremely difficult.

SPAN

Among other things, VIA offers the SPAN option in its RAID configuration. Like NRAID, it expands capacity without increasing performance. While with RAID 0 (striping) the data is distributed over several hard disks at the same time, with SPAN the data is stored contiguously on one disk after another. With RAID 0, disks of the same size should be used whenever possible, since the excess capacity of the larger disk is lost. With SPAN, disks of different sizes can be combined into one large disk without loss of capacity, which corresponds to linear mode or NRAID.

JBOD

JBOD means Just a Bunch of Disks. JBOD lacks redundancy, so it is not a RAID system but just a simple "array of independent disks". In practice, the term is used in three different ways to distinguish it from RAID systems:

- Configuration of a RAID controller with several hard disks that do not form a network. Many hardware RAID controllers are able to make the connected hard disks available to the operating system individually; the RAID functions of the controller are switched off and it works as a simple hard disk controller.

- A JBOD can also denote any number of hard drives connected to the computer in any way, regardless of the controller. With the help of volume management software , such a JBOD can be interconnected to form a common logical volume.

- Configuration of a RAID controller as a series (“concatenation”) of one or more hard disks, which appear as a single drive. However, it is also possible to divide a hard disk into several logical volumes in order to make them appear as several hard disks for the operating system, for example to avoid capacity limits. This configuration is identical to NRAID or SPAN and, strictly speaking, is not a RAID system either .

RAID combinations

Although RAID levels 0, 1 and 5 are by far the most widely used, there are also RAID combinations in addition to levels 0 to 6, in which one RAID is nested inside another. For example, several disks can be combined into a RAID 0, and a RAID 5 array can be formed from several of these RAID 0 arrays; such a combination is referred to as RAID 05 (0 + 5). Conversely, combining several RAID 5 arrays into a RAID 0 array is referred to as RAID 50 (or RAID 5 + 0). RAID 1 and RAID 5 combinations are also possible (RAID 15 and RAID 51). The most popular combinations, however, are RAID 01, in which two disks are striped and mirrored onto two further disks (four disks in total), and RAID 10, in which (at least) two pairs of mirrored disks are combined via RAID 0.

RAIDs are rarely combined with more layers (e.g. RAID 100).

RAID 01

A RAID 01 array is a RAID 1 over several RAID 0 arrays. It combines the properties of both: reliability (lower than with RAID 10) and increased data throughput.

It is often said that a conventional, modern RAID 01 system requires at least four hard disks. That is not entirely correct. At least four (or more generally, an even number ≥ 4) disks are only required for the more popular, classic RAID 10 array. Even with just three disks, many RAID controllers can build a RAID 01 array. The procedure is as follows: first, the disks are divided into consecutively numbered chunks (blocks, numbered from 1), exactly as with RAID 0; then every odd-numbered chunk is mirrored onto its next higher, even-numbered neighbor. 50% of each disk is occupied with user data, while the remaining 50% holds a copy of user data from one of the other disks. Both the user data and the mirrored data are striped. With three disks it looks like this:

- Disk A: 50% user data + 50% mirrored user data of disk C

- Disk B: 50% user data + 50% mirrored user data of disk A

- Disk C: 50% user data + 50% mirrored user data of disk B

Like the mirrored data, the user data is distributed across disks A, B and C in the manner typical of RAID 0 (striped). If one disk fails, all data is still available. In terms of failure probability, there is theoretically no difference from a RAID 5 with three disks: two out of three drives must remain intact for the system to function. Unlike RAID 5, however, RAID 01 provides less storage space with the same number of disks.

RAID 03

RAID 03 is equivalent to RAID 30 .

RAID 05

A RAID 05 array consists of a RAID 5 array built from several striped RAID 0 arrays. It needs at least three RAID 0 arrays, thus at least 6 hard disks. With RAID 05 the probability of failure is almost twice that of a conventional RAID 5 with single disks, since a RAID 0 loses all its data if one drive fails.

RAID 10

A RAID 10 array is a RAID 0 over several RAID 1 arrays. It combines the properties of both: reliability and increased read/write speed.

A RAID 10 array requires at least four hard disks.

While the RAID 1 layer of a RAID 0 + 1 implementation cannot tell which individual disk in a subordinate RAID 0 is damaged, RAID 10 offers better reliability and, after a disk failure, faster reconstruction than RAID 0 + 1, since only part of the data has to be rebuilt. Here too, as with RAID 0 + 1, only half of the total disk capacity is available.
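A brute-force count makes the reliability difference visible for the smallest case, assuming four disks arranged either as two mirrored pairs under RAID 0 (RAID 10) or as two striped pairs under RAID 1 (RAID 01). The sketch simply checks which of the six possible two-disk failures each layout survives.

```python
from itertools import combinations

# Hypothetical 4-disk layouts: disks 0 and 1 form one group, disks 2 and 3 the other
GROUPS = [{0, 1}, {2, 3}]


def raid10_survives(failed: set[int]) -> bool:
    """RAID 0 over mirror pairs: every pair must keep at least one disk."""
    return all(pair - failed for pair in GROUPS)


def raid01_survives(failed: set[int]) -> bool:
    """RAID 1 over stripe sets: at least one stripe set must be completely intact."""
    return any(not (stripe_set & failed) for stripe_set in GROUPS)


double_failures = [set(p) for p in combinations(range(4), 2)]
raid10_ok = sum(raid10_survives(f) for f in double_failures)
raid01_ok = sum(raid01_survives(f) for f in double_failures)
print(f"RAID 10 survives {raid10_ok} of 6, RAID 01 survives {raid01_ok} of 6")
```

Under these assumptions RAID 10 survives 4 of the 6 double failures (only losing both disks of one mirror pair is fatal), while RAID 01 survives only 2, because any failure within a stripe set takes that whole set offline.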

RAID 1.5

RAID 1.5 is not actually a separate RAID level but a term introduced by the company Highpoint for a RAID 1 implementation with increased performance. It brings the typical advantages of RAID 0 to RAID 1. In contrast to RAID 10, the optimizations work with just two hard disks: writes go to both disks at single-disk speed, as usual with RAID 1, while reads use both disks for high data throughput, as with RAID 0. The RAID 1.5 extensions, not to be confused with RAID 15, are not implemented only by Highpoint. Mature RAID 1 implementations, such as those under Linux or Solaris, also read from all disks and do without the term RAID 1.5, which offers no extra advantage.

RAID 15

The RAID-15 array is formed by using at least three RAID-1 arrays as components for a RAID 5; it is similar in concept to RAID 10, except that it is striped with parity.

In a RAID 15 with eight hard disks, up to any three hard disks can fail at the same time (up to five in total, provided that a maximum of one mirror set fails completely).

A RAID 15 array requires at least six hard disks.

The data throughput is good, but not very high. The costs are not directly comparable to those of other RAID systems, but the risk of complete data loss is quite low.

RAID 1E

With RAID 1E, each data block is mirrored on the next hard disk. Neither two neighboring disks nor the first and the last disk may fail at the same time. This RAID 1E variant always requires an odd number of hard disks. The usable capacity is halved.
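A sketch of the rule stated above, assuming the simple RAID 1E variant in which block i is stored on disk i mod n and its copy on the following disk (wrapping from the last disk to the first). This is the same arrangement as the three-disk RAID 01 described earlier. The code enumerates all two-disk failures and reports those for which some block loses both copies.

```python
from itertools import combinations


def raid1e_copies(disks: int, blocks: int) -> list[set[int]]:
    """Hypothetical RAID 1E layout: block i on disk i % disks, its copy on the next disk."""
    return [{i % disks, (i + 1) % disks} for i in range(blocks)]


def fatal_pairs(disks: int) -> list[tuple[int, int]]:
    """All two-disk failures after which at least one block has no surviving copy."""
    copies = raid1e_copies(disks, blocks=disks)  # one row of blocks covers every disk
    return [pair for pair in combinations(range(disks), 2)
            if any(holders <= set(pair) for holders in copies)]


print(fatal_pairs(5))
```

For five disks this yields exactly the neighboring pairs plus the pair formed by the first and the last disk.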

However, there are other versions of RAID 1E that are more flexible than the variant shown here.

RAID 1E0

In a RAID 1E0, several RAID 1E arrays are combined via a RAID 0. The maximum number of redundant disks and the net capacity correspond to those of the underlying RAID 1E arrays.

RAID 30 array

RAID 30 was originally developed by American Megatrends . It represents a striped variant of RAID 3 (that is, a RAID 0 that combines several RAID 3s).

A RAID 30 array requires at least six hard disks (two legs with three hard disks each). One hard disk can fail in each leg.

RAID 45 array

A RAID 45 array, similar to RAID 55, combines several RAID 4 arrays with one RAID 5. It needs at least three RAID 4 legs with three hard disks each, i.e. nine hard disks. With nine disks, the capacity of only four disks can be used, but the ratio improves as more disks are used, so RAID 45 is only used in large disk arrays. Fault tolerance is very high: any three disks may fail, and in the best case even a complete leg plus one disk in each of the other legs.

RAID 50 array

A RAID 50 array consists of a RAID 0 array built from several striped RAID 5 arrays.

A RAID 50 array requires at least six hard disks, for example two RAID 5 controllers with three disks each, combined by a software RAID 0 stripe. This ensures very high data throughput for writing and reading, since the parity computation is distributed across two XOR units.

In a RAID 50 system with six hard disks, only one disk may fail at the same time (a total of up to two, provided the two disks do not belong to the same RAID 5 system).

A RAID-50 array is used for databases where write throughput and redundancy are paramount.

RAID 51

The RAID-51 array is formed, similar to RAID 15 , by mirroring the entire array of a RAID 5, and is similar to RAID 01 except for parity protection.

With a six-disk RAID 51, any three disks may fail at the same time. Up to four disks may fail as long as no more than one of them belongs to the remaining mirrored RAID 5 array.

A RAID 51 array requires at least six hard disks.

Data transfer performance is good, but not very high. The costs are not directly comparable with those of other RAID systems.

RAID 53

In practice, RAID 53 is a common name for RAID 30.

RAID 55

The RAID 55 array is formed in a similar way to RAID 51, in that several RAID 5 systems are combined via another RAID 5. In contrast to RAID 51, the overhead is lower and data can be read more quickly.

With a nine-disk RAID 55 system, any three hard disks can fail at the same time, and at most five in total (3 + 1 + 1). A RAID 55 array requires at least nine hard disks (three legs of three disks each). The data transfer speed is good, but not very high. The costs are not directly comparable with those of other RAID systems.

RAID 5E

RAID 5E is the abbreviation for RAID 5 Enhanced . It combines a RAID 5 with a hot spare . However, the hot spare is not implemented as a separate drive, but rather distributed over the individual disks. In other words, storage space is reserved on each disk in the event of a failure. If a hard disk fails, the content of this disk is restored in the free space with the help of parity and the array can continue to be operated as RAID 5.

The advantage lies not in increased security compared to RAID 5 but in higher speed, since all available disk spindles are constantly in use, including the hot spare, which would otherwise usually sit idle.

The technology has long been used by IBM for RAID controllers, but is being increasingly replaced by RAID 5EE .

RAID 5EE

RAID 5EE works similarly to RAID 5E. However, the free storage space is not reserved at the end of the disks but distributed diagonally across them, similar to RAID 5 parity. As a result, a higher transfer rate is retained while the data is being restored after a failure.

RAID 5DP and RAID ADG

RAID 5DP is the name Hewlett-Packard uses for its RAID 6 implementation in the storage systems of the VA series. With the acquisition of Compaq by Hewlett-Packard, the RAID ADG variant developed by Compaq for the Compaq Smart Arrays also became Hewlett-Packard's intellectual property. The acronym ADG stands for Advanced Data Guarding.

RAID 60 array

A RAID 60 network consists of a RAID 0 array that combines several RAID 6s. At least two controllers with four hard disks each, i.e. a total of eight hard disks, are required for this. In principle, the differences between RAID 5 and RAID 6 scale up to the differences between RAID 50 and RAID 60: The throughput is lower, while the reliability is higher. The simultaneous failure of any two drives is possible at any time; further failures are only uncritical if a maximum of two disks per striped RAID 6 are affected.
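The failure-tolerance figures quoted for the nested levels RAID 15, 45, 50, 51, 55 and 60 can be checked by brute force. This sketch models each array as a tree of stripe, mirror and parity groups (an idealization: a parity group with m parity disks survives up to m failed members, and RAID 4 legs are treated like RAID 5 legs) and tries every set of failed disks.

```python
from itertools import combinations


def disk(i):
    return ("disk", i)


def stripe(*children):        # RAID 0: every member must survive
    return ("stripe", children)


def mirror(*children):        # RAID 1: one surviving member is enough
    return ("mirror", children)


def parity(m, *children):     # RAID 4/5 (m=1) or RAID 6 (m=2): up to m members may fail
    return ("parity", children, m)


def survives(node, failed) -> bool:
    kind = node[0]
    if kind == "disk":
        return node[1] not in failed
    results = [survives(child, failed) for child in node[1]]
    if kind == "stripe":
        return all(results)
    if kind == "mirror":
        return any(results)
    return results.count(False) <= node[2]


def tolerance(node, n_disks: int) -> tuple[int, int]:
    """Return (k_any, k_best): every k_any-disk failure is survived,
    and at least one k_best-disk failure is survived."""
    k_any = k_best = 0
    for k in range(1, n_disks + 1):
        outcomes = [survives(node, set(f)) for f in combinations(range(n_disks), k)]
        if all(outcomes):
            k_any = k
        if any(outcomes):
            k_best = k
    return k_any, k_best


d = [disk(i) for i in range(9)]
LAYOUTS = {
    "RAID 15 (8 disks)": (parity(1, mirror(d[0], d[1]), mirror(d[2], d[3]),
                                 mirror(d[4], d[5]), mirror(d[6], d[7])), 8),
    "RAID 45/55 (9 disks)": (parity(1, parity(1, *d[0:3]), parity(1, *d[3:6]),
                                    parity(1, *d[6:9])), 9),
    "RAID 50 (6 disks)": (stripe(parity(1, *d[0:3]), parity(1, *d[3:6])), 6),
    "RAID 51 (6 disks)": (mirror(parity(1, *d[0:3]), parity(1, *d[3:6])), 6),
    "RAID 60 (8 disks)": (stripe(parity(2, *d[0:4]), parity(2, *d[4:8])), 8),
}

for name, (layout, n) in LAYOUTS.items():
    print(name, tolerance(layout, n))
```

Each printed pair reads (any k, at best k): for example (3, 5) for nine-disk RAID 55 and eight-disk RAID 15, (3, 4) for six-disk RAID 51, (1, 2) for six-disk RAID 50 and (2, 4) for eight-disk RAID 60, which matches the statements in the text.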

Matrix RAID

Since around mid-2004, starting with the Intel ICH6R southbridge, a technology marketed as "Matrix RAID" has been integrated that takes up the idea of RAID 1.5. It is intended to combine the advantages of RAID 0 and RAID 1 on just two hard disks. For this purpose, the controller divides each of the two disks into two areas. One area is mirrored to the other disk, while the remaining area is striped across both disks. You can then, for example, install the "unimportant" operating system and programs in the striped area to benefit from RAID 0, while storing important data in the mirrored area and relying on the redundancy of RAID 1. In the event of a disk crash, you would only have to reinstall the operating system and programs, while the important data is preserved in the other disk area.

With several hard disks, you can also use other RAID types in a matrix RAID and, for example, operate a partition as RAID 5 from three hard disks.

RAID S or parity RAID

RAID S or parity RAID, sometimes called RAID 3 + 1, RAID 7 + 1, RAID 6 + 2 or RAID 14 + 2, denotes a proprietary striped-parity RAID from the manufacturer EMC. EMC originally called this form RAID S for its Symmetrix systems. Since the market launch of the newer DMX models, this RAID variant has been called parity RAID. EMC meanwhile also offers standard RAID 5. According to EMC, up to two hard disks may fail with the "+ 2" types of parity RAID.

RAID S works as follows: each volume resides on one physical drive, and several volumes (usually three or seven) are combined arbitrarily for parity purposes. This is not exactly identical to RAID 3, 4 or 5, because RAID S always involves numerous (possibly 100 or 1,000) drives that do not all form a single array. Rather, a few disks (typically 4 to 16) form a RAID S group, and one or more of these groups form logical units; there is an unmistakable similarity to RAID levels 50 or 60 and to RAID-Z. By contrast, in RAID 5 the corresponding units on the physical drives are called chunks: several data chunks are combined with a parity chunk to form a stripe, and any number of stripes then forms the basis of a partition or logical volume.

A parity RAID 3 + 1 contains three data volumes and one parity volume, so 75% of the capacity can be used. A parity RAID 7 + 1, by contrast, has seven data volumes and one parity volume; 87.5% of the capacity can be used, but with lower reliability. In a normal RAID 5 with four disks, a stripe contains three data chunks and one parity chunk; in a normal RAID 5 with eight disks, a stripe contains seven data chunks and, again, one parity chunk.

EMC also offers the Hypervolume Extension (HVE) as an option for these RAID variants. HVE allows multiple volumes on the same physical drive.

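EMC parity RAID:

| Disk1 | Disk2 | Disk3 | Disk4 |
|---|---|---|---|
| A1 | B1 | C1 | pABC |
| A2 | B2 | C2 | pABC |
| A3 | B3 | C3 | pABC |
| A4 | B4 | C4 | pABC |

EMC parity RAID with HVE:

| Disk1 | Disk2 | Disk3 | Disk4 |
|---|---|---|---|
| A | B | C | pABC |
| D | E | pDEF | F |
| G | pGHI | H | I |
| pJKL | J | K | L |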

Note: A1, B1 etc. represent a data block; each column represents a hard disk. A, B, etc. are entire volumes.

RAID TP or RAID Triple Parity

RAID TP (RAID Triple Parity) is a proprietary RAID with triple parity from the manufacturer easyRAID. According to the manufacturer, up to three hard drives can fail with RAID TP without data loss. Another triple-parity implementation comes from Sun Microsystems and is marketed as Triple-Parity RAID-Z or RAID-Z3. This version, integrated into ZFS, uses a Reed-Solomon code to protect the data. Here, too, up to three hard drives in a RAID system can fail without data loss.

With easyRAID's RAID TP, the data blocks and the parities are written to the individual physical hard disks simultaneously. The three parities of each stripe are stored on different disks, and their position changes from stripe to stripe. The RAID triple parity algorithm uses a special code with a Hamming distance of at least 4.

| Disk1 | Disk2 | Disk3 | Disk4 | Disk5 |
|---|---|---|---|---|
| A1 | B1 | pP (A1, B1) | pQ (A1, B1) | pR (A1, B1) |
| C1 | pP (C1, D1) | pQ (C1, D1) | pR (C1, D1) | D1 |
| pP (E1, F1) | pQ (E1, F1) | pR (E1, F1) | E1 | F1 |
| A2 | B2 | pP (A2, B2) | pQ (A2, B2) | pR (A2, B2) |
| C2 | pP (C2, D2) | pQ (C2, D2) | pR (C2, D2) | D2 |
| pP (E2, F2) | pQ (E2, F2) | pR (E2, F2) | E2 | F2 |

Note: A1, B1 etc. represent data blocks; pP, pQ and pR denote the three parities computed over the data blocks named in parentheses; each column represents a hard disk.

This requires at least four hard drives. The net capacity equals the number of hard drives minus three.
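The claim that a code with a Hamming distance of at least 4 tolerates three failed disks can be checked on a toy example shaped like the table above, where each stripe holds two data blocks and three parity blocks. easyRAID's actual code is proprietary and not described here; the sketch instead uses a simple maximum-distance-separable code over the integers modulo the prime 257, with the hypothetical parities pP = A + B, pQ = A + 2B and pR = A + 3B. Production implementations such as RAID-Z3 compute Reed-Solomon parities in the finite field GF(2^8) instead. The modular inverse via `pow(det, -1, P)` needs Python 3.8 or later.

```python
from itertools import combinations

P = 257  # prime modulus; every byte value 0..255 is a valid symbol

# Coefficients (for A, for B) of the five blocks of one stripe:
# data A, data B, then the hypothetical parities pP = A+B, pQ = A+2B, pR = A+3B.
COLUMNS = [(1, 0), (0, 1), (1, 1), (1, 2), (1, 3)]

def encode(a: int, b: int) -> list:
    """Return the five blocks (A, B, pP, pQ, pR) of one stripe."""
    return [(ca * a + cb * b) % P for ca, cb in COLUMNS]

def recover(survivors: list) -> tuple:
    """Solve for (A, B) from any two surviving (position, value) pairs (Cramer's rule mod P)."""
    (i, x), (j, y) = survivors
    (a1, b1), (a2, b2) = COLUMNS[i], COLUMNS[j]
    det = (a1 * b2 - a2 * b1) % P
    inv = pow(det, -1, P)  # exists because any two columns are independent mod P
    return (x * b2 - y * b1) * inv % P, (a1 * y - a2 * x) * inv % P

def min_distance() -> int:
    """For a linear code, minimum Hamming distance = minimum weight of a nonzero codeword."""
    return min(sum(1 for v in encode(a, b) if v)
               for a in range(P) for b in range(P) if (a, b) != (0, 0))

stripe = encode(200, 17)
for lost in combinations(range(5), 3):  # every choice of three failed disks
    alive = [(k, stripe[k]) for k in range(5) if k not in lost]
    assert recover(alive) == (200, 17)
print(min_distance())  # 4
```

Because any two of the five blocks determine A and B, any three blocks of a stripe may be lost; for a code with five blocks and two data blocks, this is exactly what a minimum distance of 4 (= 5 − 2 + 1) means.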

RAID-Z in the ZFS file system

RAID-Z is a RAID system that Sun Microsystems integrated into the ZFS file system. ZFS is an advanced file system with numerous extensions for server and data-center use. These include an enormous maximum file-system size, simple management of even complex configurations, integrated RAID functions, volume management, and checksum-based protection against disk and data-transfer errors. With redundant storage, errors can be corrected automatically. Integrating the RAID functionality into the file system allows the block sizes of the file system and of the RAID volumes to be matched to one another, which opens up additional optimization options. Compared with classic hardware or software RAID implementations, a RAID subsystem integrated into the file system can also distinguish between used and free data blocks. When a RAID volume is reconstructed, only the occupied disk space therefore has to be rebuilt, which saves an enormous amount of time after a failure, especially when the file systems are not very full.

ZFS calls these elementary redundant units redundancy groups. They are implemented as RAID 1, RAID-Z1 (roughly RAID 5) or RAID-Z2 (roughly RAID 6). One or more redundancy groups together (similar to a combined RAID 0) form a ZFS volume (or ZFS pool), from which "partitions" can be requested dynamically. RAID-Z1 works like RAID 5, but unlike a traditional RAID-5 array it is protected against synchronization problems (the "write hole") and therefore offers performance advantages; the same applies to RAID-Z2 compared with RAID 6. Since July 2009, RAID-Z3, a RAID-Z implementation with triple parity, has also been available.

The term write hole describes a situation during write accesses in which the data has already been written to the hard disk but the associated parity information has not yet been written. If a problem occurs while the parity information is being calculated or written in this state, the parity no longer matches the stored data blocks.
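The effect of the write hole on a classic RAID 5 stripe can be reproduced with XOR parity. The sketch assumes one stripe with two data blocks and one parity block and simulates a crash between the data write and the parity write; the block contents are arbitrary. RAID-Z avoids this situation because it writes data and parity together as a new, full stripe (copy-on-write) instead of updating an existing stripe in place.

```python
from functools import reduce

def xor_blocks(*blocks: bytes) -> bytes:
    """Bytewise XOR, i.e. RAID 5 parity."""
    return bytes(reduce(lambda x, y: x ^ y, column) for column in zip(*blocks))

d0, d1 = b"AAAA", b"BBBB"
stripe = {"d0": d0, "d1": d1, "p": xor_blocks(d0, d1)}

# In-place update of d0: the new data reaches the disk ...
stripe["d0"] = b"CCCC"
# ... but the system crashes before the matching parity is written.

# The stripe is now inconsistent; a plain RAID 5 has no record of this.
consistent = xor_blocks(stripe["d0"], stripe["d1"]) == stripe["p"]

# Later the disk holding d1 fails, and the rebuild uses the stale parity.
rebuilt_d1 = xor_blocks(stripe["d0"], stripe["p"])

print(consistent, rebuilt_d1 == d1)  # False False
```

The rebuilt block is silently wrong, which is why the mismatch is dangerous even though no error is reported at the time of the crash.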

Summary

- Number of hard drives: indicates how many hard disks are required to set up the respective RAID.

- Net capacity: indicates the usable capacity as a function of the number of hard drives used, expressed as the number of disks without RAID that would provide the same storage capacity.

- Resilience: indicates how many hard drives can fail without data loss. Note that, especially with combination RAIDs, the number of hard disks that can fail in any case may differ from the number that can fail in the best case. The best-case number is always at least as large as the guaranteed number, and for standard RAIDs the two values are identical.

- Leg: a leg or lower-level RAID is a RAID array that is combined with other legs of the same type via a higher-level RAID array (upper-level RAID). The table below shows the number of hard drives in a leg and the number of legs in the higher-level array (if the RAID is actually combined).

Note: In principle, RAID 3 and RAID 4 can also be used with two hard disks, but they then offer exactly the same reliability as RAID 1 with the same number of disks. Since RAID 1 is technically simpler, it would always be preferred in this case. The same applies to higher-level arrays and legs in combination RAIDs.

Note: The failure cases shown (i.e. which devices fail) are for illustration only. The values only state that this number of devices can fail in any case without data loss; they do not claim that, in a particular case, further hard drives could not also fail without data loss.
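The difference between the number of disks that can fail in any case and the number that can fail in the best case can be made concrete for RAID 10, whose legs are RAID 1 mirrors combined by RAID 0. The brute-force sketch below assumes that the data survives as long as every leg still has at least one working disk; `survives` and `tolerance` are hypothetical helper names.

```python
from itertools import combinations

def survives(failed: set, legs: int, disks_per_leg: int) -> bool:
    # Each leg is a RAID 1 mirror; the RAID 0 across the legs needs every leg.
    return all(
        any((leg, d) not in failed for d in range(disks_per_leg))
        for leg in range(legs)
    )

def tolerance(legs: int, disks_per_leg: int) -> tuple:
    """Return (failures survived in every case, failures survived in the best case)."""
    disks = [(leg, d) for leg in range(legs) for d in range(disks_per_leg)]
    guaranteed = best = 0
    for k in range(1, len(disks) + 1):
        outcomes = [survives(set(c), legs, disks_per_leg) for c in combinations(disks, k)]
        if all(outcomes):
            guaranteed = k
        if any(outcomes):
            best = k
    return guaranteed, best

print(tolerance(2, 2))  # (1, 2): four disks, net capacity of two
print(tolerance(3, 2))  # (1, 3): six disks, net capacity of three
```

In both cases only one failure is guaranteed to be harmless, because two failures in the same leg destroy the array, while in the best case one disk per leg may fail.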

Other terms

Cache

Cache memory plays a major role in RAID. Basically, the following caches are to be distinguished:

- Operating system

- RAID controller

- Enterprise disk array

Today, a write request is usually acknowledged as soon as the data has arrived in the cache, i.e. before it has actually been stored permanently; moreover, the cache is not necessarily emptied in the order in which it was filled. This creates a window during which data that is already assumed to be saved can be lost through a power or hardware failure, which can lead to incorrect file contents (e.g. if the file system has already recorded that a file was extended although the corresponding data has not yet been written). In enterprise storage systems, the cache therefore survives resets. The write cache yields a speed gain as long as the cache (RAM) is not full, and especially when write requests arrive in a suboptimal order or overlap, since the cache can reorder and merge them and writing to the cache is faster than writing to disk.
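The write-back behavior described above (early acknowledgement, reordering, merging of overlapping writes, and loss of unflushed data on power failure) can be modelled in a few lines. This is a deliberately simplified sketch: the class name `WriteBackCache` is hypothetical, a dictionary stands in for the disks, and real controllers flush continuously rather than on an explicit call.

```python
class WriteBackCache:
    """Toy write-back cache; a dict stands in for the disks (hypothetical model)."""

    def __init__(self, disk: dict):
        self.disk = disk    # block number -> data actually persisted
        self.dirty = {}     # acknowledged writes that are still only in RAM

    def write(self, block: int, data: str) -> str:
        # Acknowledged as soon as the data is in the cache; a newer write to
        # the same block replaces the pending one (overlapping writes merge).
        self.dirty[block] = data
        return "ack"

    def flush(self) -> list:
        # Written in ascending block order, not in arrival order.
        order = sorted(self.dirty)
        for block in order:
            self.disk[block] = self.dirty[block]
        self.dirty.clear()
        return order

    def power_failure(self) -> list:
        # Without battery or flash protection, unflushed data is simply gone.
        lost = sorted(self.dirty)
        self.dirty.clear()
        return lost


disk = {}
cache = WriteBackCache(disk)
for block, data in [(7, "a"), (2, "b"), (7, "c")]:
    cache.write(block, data)
print(cache.flush(), disk)  # [2, 7] {2: 'b', 7: 'c'}

cache.write(5, "d")                       # acknowledged ...
print(cache.power_failure(), 5 in disk)   # [5] False  ... but never stored
```

Three acknowledged writes turn into two disk writes in sorted order, while the last acknowledged write is lost; protecting the cache contents is what closes this gap.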

In database applications, the read cache is often of great importance today, because with it the slow storage medium has to be accessed almost exclusively for writes.

Cache controller with backup battery

Functionally, the backup battery must not be confused with an uninterruptible power supply (UPS). Although a UPS also protects against power failures, it cannot prevent system crashes or system freezes. Higher-quality RAID controllers therefore offer the option of protecting their own cache memory against failure. Traditionally this is done with a backup battery for the controller or, in newer systems, with non-volatile NAND flash memory and high-capacity capacitors. Protecting the cache memory is intended to ensure that data held in the cache during a power or system failure, and not yet written to the disks, is not lost, so that the RAID remains consistent. The backup battery is often referred to as a BBU (Battery Backup Unit) or BBWC (Battery Backed Write Cache). Protecting the cache is especially important for performance-optimized database systems, because only then can the write cache be enabled safely. In these systems, lost cache contents can damage the database without this being noticed immediately. Since the database keeps running, a loss discovered later cannot simply be repaired by restoring the (hopefully existing) backup, because all subsequent changes would then be lost. For this protection to work properly, the caches built into the hard disks themselves, which are not battery-backed, must be disabled. If a disk cache also buffered data before writing, in addition to the controller cache, that data would be lost in a system failure.

Logical Volume Manager

The functions of a Logical Volume Manager (LVM) are often confused with those of a software RAID system, mainly because both subsystems are usually managed via a common graphical user interface. The two can, however, be clearly distinguished. Real RAID systems always offer redundancy (except RAID 0) and consequently always have a RAID engine that generates the additional data required for redundancy. The most common engine variants are data duplication in RAID 1 and XOR computation in RAID 5 and most other methods. RAID therefore always generates substantial additional data streams, which makes the throughput of the RAID engine an important performance factor. The task of an LVM, by contrast, is to map physical volumes (or partitions) onto logical volumes. One of its most common uses is the subsequent enlargement of partitions and file systems managed by the LVM. An LVM, however, generates no additional data streams, has no engine and therefore offers no redundancy; it requires only minimal computing effort and thus has practically no effect on performance (although some LVM implementations also include RAID 0 extensions). The main task of the LVM is to distribute the data streams from the file systems to the respective volume areas; in this respect it works much like a memory management unit (MMU). In some systems (e.g. HP-UX or Linux), software RAID and LVM are optional extensions that can be installed and used completely independently of each other. Some manufacturers therefore license volume management and RAID (mirroring and/or RAID 5) separately.
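The MMU-like mapping can be illustrated with a lookup table of extents. The sketch assumes a hypothetical logical volume built from two segments on two physical volumes and a 4 MiB extent size; the names `EXTENT`, `SEGMENTS` and `translate` are illustrative and do not correspond to any particular LVM's on-disk format or API.

```python
EXTENT = 4 * 1024 * 1024  # assumed extent size: 4 MiB

# Hypothetical logical volume made of two segments:
# (first logical extent, extent count, physical volume, first physical extent)
SEGMENTS = [
    (0, 100, "pv0", 0),     # logical extents 0-99    -> pv0 extents 0-99
    (100, 50, "pv1", 20),   # logical extents 100-149 -> pv1 extents 20-69
]

def translate(logical_offset: int) -> tuple:
    """Map a byte offset in the logical volume to (physical volume, byte offset)."""
    extent, within = divmod(logical_offset, EXTENT)
    for first, count, pv, first_pe in SEGMENTS:
        if first <= extent < first + count:
            # Pure address arithmetic: no data is copied or generated.
            return pv, (first_pe + extent - first) * EXTENT + within
    raise ValueError("offset lies beyond the end of the logical volume")

print(translate(0))                 # ('pv0', 0)
print(translate(100 * EXTENT + 5))  # ('pv1', 83886085)
```

Enlarging the volume amounts to appending another segment to the table; unlike a RAID engine, nothing in this mapping produces redundant data.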

Performance

There are two essential parameters for the performance of a hard disk subsystem: the number of I/O operations per unit of time possible with random access, i.e. IOPS (I/O operations per second), and the data throughput for sequential access, measured in MB/s. The performance of a RAID system results from the combined performance of the hard drives used.

The number of IOPS follows directly from the average access time and the rotational speed of a hard disk. SATA disks have an access time of 8 to 10 ms, and half a revolution takes about 2 to 4 ms depending on the rotational speed. Simplified, this corresponds to a duration of around 10 ms per non-sequential access, so at most 100 I/Os per second are possible, i.e. a value of 100 IOPS. More powerful SCSI or SAS disks have access times of less than 5 ms and spin faster, so they reach around 200 IOPS. In systems that have to serve many users (or tasks) at the same time, the IOPS value is a particularly important figure.
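The estimate can be written as a one-line formula: one access time plus half a revolution per random I/O. The sketch below assumes exactly this simplified model; the function name `iops` and the example access times (8 ms at 7,200 rpm, 3 ms at 15,000 rpm) are illustrative assumptions, not data-sheet values.

```python
def iops(access_ms: float, rpm: int) -> float:
    """Approximate random IOPS of one disk: one access time plus half a revolution per I/O."""
    half_revolution_ms = 60_000 / rpm / 2   # milliseconds for half a revolution
    return 1000 / (access_ms + half_revolution_ms)

print(round(iops(8, 7200)))    # SATA-class disk: 82
print(round(iops(3, 15000)))   # fast SAS-class disk: 200
```

The SATA result is of the same order as the rounded 100 IOPS mentioned in the text; the exact figure depends on the access time assumed.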

Sequential data throughput depends essentially on the rotational speed, the recording density and the number of recording surfaces of a hard disk. The disk cache improves performance only for repeated accesses. With the same configuration (recording density, surfaces), a current disk spinning at 10,000 revolutions per minute delivers data about twice as fast as a simple IDE disk running at 5,400 revolutions per minute. For example, the Samsung SpinPoint VL40 at 5,400 rpm delivers an average data rate of about 33 MB/s, whereas the WD Raptor WD740GD at 10,000 rpm delivers an average of 62 MB/s.
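With recording density and number of surfaces held constant, the amount of data passing under the heads per second grows linearly with the rotational speed. The sketch applies this assumption to the Samsung figure; the function name `scaled_throughput` is hypothetical. The two drives differ in other respects as well, so the close agreement with the Raptor's 62 MB/s is only a rough plausibility check.

```python
def scaled_throughput(mb_per_s: float, rpm_from: int, rpm_to: int) -> float:
    """Sequential throughput scaled by rotational speed (same density and surfaces assumed)."""
    return mb_per_s * rpm_to / rpm_from

estimate = scaled_throughput(33, 5400, 10_000)
print(f"{estimate:.1f} MB/s")  # 61.1 MB/s
```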

IOPS and MB/s with RAID 0

With small chunk sizes (2 kB), a RAID 0 array of two disks uses both disks for practically every access, so the data throughput per I/O operation doubles, but the IOPS remain unchanged. With large chunks (32 kB), however, a single access usually involves only one disk, so another I/O can take place on the second disk at the same time. This doubles the IOPS, while the throughput per I/O operation stays the same and only adds up in systems with many simultaneous tasks. Servers in particular benefit from larger chunks. Systems with two disks (typically 100 IOPS each) in RAID 0 can theoretically achieve up to 200 IOPS at around 60 MB/s.
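Which disks a request touches follows from the chunk size alone. The sketch assumes that chunks are assigned to the member disks round-robin and uses a hypothetical 4 KiB request; the function name `disks_touched` is illustrative.

```python
def disks_touched(offset: int, length: int, chunk: int, disks: int = 2) -> set:
    """Member disks of a RAID 0 array hit by one request (chunks assigned round-robin)."""
    first_chunk = offset // chunk
    last_chunk = (offset + length - 1) // chunk
    return {c % disks for c in range(first_chunk, last_chunk + 1)}

KiB = 1024
print(disks_touched(0, 4 * KiB, chunk=2 * KiB))          # {0, 1}: both disks busy
print(disks_touched(0, 4 * KiB, chunk=32 * KiB))         # {0}: disk 1 stays free
print(disks_touched(32 * KiB, 4 * KiB, chunk=32 * KiB))  # {1}: can run in parallel
```

With 2 KiB chunks every such request occupies both disks, which raises throughput per request; with 32 KiB chunks two independent requests can be served at once, which raises IOPS.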

IOPS and MB/s with RAID 1

When writing, modern RAID 1 implementations behave like a single member disk; the chunk size plays no role for write accesses. When reading, however, two disks work at the same time, as in RAID 0. With RAID 1, the chunk size therefore has the same influence on read performance as with RAID 0, and here too servers in particular benefit from larger chunks. Systems with two disks (typically 100 IOPS each) in RAID 1 can theoretically achieve up to 200 IOPS at around 60 MB/s when reading and up to 100 IOPS at around 30 MB/s when writing.
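The asymmetry between reads and writes becomes visible when per-disk operations are counted. The sketch assumes that reads are distributed round-robin across the mirrors; real implementations may instead choose a mirror by queue depth or head position. The function name `raid1_ops` is hypothetical.

```python
def raid1_ops(requests: list, mirrors: int = 2) -> list:
    """Count per-disk operations for 'r'/'w' requests on a RAID 1 (toy model)."""
    per_disk = [0] * mirrors
    next_reader = 0
    for kind in requests:
        if kind == "w":
            # Every write must reach every mirror, so writes do not scale.
            for d in range(mirrors):
                per_disk[d] += 1
        else:
            # Any mirror can serve a read; round-robin spreads the load.
            per_disk[next_reader] += 1
            next_reader = (next_reader + 1) % mirrors
    return per_disk

print(raid1_ops(["r"] * 200))  # [100, 100]: 200 reads fit into 100 IOPS per disk
print(raid1_ops(["w"] * 100))  # [100, 100]: 100 writes already use 100 IOPS per disk
```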

IOPS and MB/s with RAID 10

Combining RAID 0 and RAID 1 doubles the performance compared with pure RAID 1. Current systems with eight disks (typically 100 IOPS each) in RAID 10 can theoretically achieve up to 800 IOPS at around 240 MB/s when reading and up to 400 IOPS at around 120 MB/s when writing.
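The theoretical figures follow from a simple model: reads can use every disk, while each write occupies both disks of a mirror pair. The sketch assumes 100 IOPS and 30 MB/s per disk, the per-disk values implied by the two-disk examples above; the function name `raid10_theoretical` is hypothetical.

```python
def raid10_theoretical(disks: int, disk_iops: int = 100, disk_mbps: int = 30) -> dict:
    """Upper bounds for RAID 10 under the simple model described above."""
    if disks < 4 or disks % 2:
        raise ValueError("RAID 10 needs an even number of at least four disks")
    pairs = disks // 2  # each mirror pair absorbs one copy of every write
    return {
        "read_iops": disks * disk_iops, "read_mbps": disks * disk_mbps,
        "write_iops": pairs * disk_iops, "write_mbps": pairs * disk_mbps,
    }

print(raid10_theoretical(8))
# {'read_iops': 800, 'read_mbps': 240, 'write_iops': 400, 'write_mbps': 120}
```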

IOPS and MB/s with RAID 5