Dynamic Random Access Memory

Dynamic Random Access Memory (DRAM), or dynamic RAM, is a technology for an electronic memory component with random access (Random-Access Memory, RAM). It is mainly used in computers, but also in other electronic devices such as printers. The storage element is a capacitor that is either charged or discharged. It is accessed via a switching transistor and is either read out or written with new content.

The memory content is volatile, i.e. the stored information is lost if there is no operating voltage or if it is refreshed too late.

Introduction

A characteristic of DRAM is the combination of very high data density with very low manufacturing costs. It is therefore mainly used where large amounts of memory have to be made available with medium access times (compared to static RAM, SRAM).

In contrast to SRAMs, the memory content of DRAMs must be refreshed cyclically. This is usually required every few tens of milliseconds. The memory is refreshed row by row: a memory row is transferred in one step to a row buffer located on the chip and from there is written back to the memory row in amplified form. This is where the term "dynamic" comes from. In contrast, with static memories such as SRAM, all signals can be stopped without loss of data. Refreshing the DRAM also consumes a certain amount of energy in the idle state. SRAM is therefore preferred in applications where a low quiescent current is important.

Charge in the storage cell capacitors nominally leaks away within milliseconds, but owing to manufacturing tolerances it can persist in the storage cells for seconds to minutes. Researchers at Princeton University managed to read data forensically immediately after a cold start. To be on the safe side, the components are always specified with the guaranteed worst-case value, i.e. the shortest possible retention time.

Memory manufacturers are continuously trying to reduce energy consumption by minimizing losses due to recharging and leakage currents. Both depend on the supply voltage, which is 2.5 volts for DDR-SDRAM and 1.8 volts for DDR2-SDRAM. In DDR3 SDRAM, introduced in 2007, the voltage was lowered to 1.5 volts.

A DRAM is designed either as an independent integrated circuit or as a memory block within a larger chip.

The "random" in random access memory stands for random access to the memory content or the individual memory cells, as opposed to sequential access as with, for example, FIFO or LIFO memories (organized on the hardware side).

Construction

A DRAM does not consist of a single two-dimensional matrix, as shown in simplified form in the article on semiconductor memory. Instead, the memory cells, which are arranged and wired on the surface of the die, are divided into a sophisticated hierarchical structure. While the internal structure is manufacturer-specific, the logical structure visible from the outside is standardized by the JEDEC industry committee. This ensures that chips from different manufacturers and of different sizes can always be addressed according to the same scheme.

Structure of a memory cell

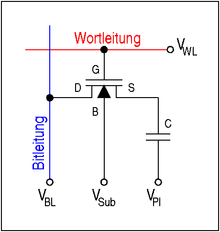

The structure of a single DRAM memory cell is very simple: it consists only of a capacitor and a transistor. Today a MOS field-effect transistor is used. The information is stored as an electrical charge in the capacitor. Each memory cell stores one bit. While planar capacitors were mostly used in the past, two other technologies are currently used:

- In stack technology, the capacitor is built up above the transistor.

- In trench technology, the capacitor is created by etching a 5-10 micron deep hole (or trench) into the substrate.

Schematic structure of the basic technologies for DRAM cells (cross-sections)

The upper connection shown in the adjacent figure is either charged to the bit line voltage V_BL or discharged (0 V). The lower connection of all capacitors is connected together to a voltage source, which ideally has a voltage of V_Pl = ½ · V_BL. This allows the maximum field strength in the dielectric of the capacitor to be halved.

The transistor (also called the selection transistor) serves as a switch for reading and writing the information in the cell. For this purpose, a positive voltage V_WL is applied via the word line to the gate terminal "G" of the n-MOS transistor. This creates a conductive connection between the source ("S") and drain ("D") regions, which connects the cell capacitor to the bit line. The substrate connection "B" (bulk) of the transistor is connected either to ground potential or to a slightly negative voltage V_Sub to suppress leakage currents.

Due to their very simple structure, the memory cells require very little chip area. The design-related size of a memory cell is often given as a multiple of the square area F² of the smallest feasible structure length ("minimum feature size", or F for short): a DRAM cell today requires 6 or 8 F², while an SRAM cell requires more than 100 F². A DRAM can therefore store a much larger number of bits for a given chip size, which results in much lower manufacturing costs per bit than with SRAM. Among the types of electronic memory commonly used today, only NAND flash has a smaller memory cell, at around 4.5 F² (or 2.2 F² per bit for multilevel cells).

Structure of a memory line ("Page")

Connecting further memory cells to a word line yields a memory row, commonly referred to as a page. The characteristic property of a row is that when its word line (shown in red) is activated, all the associated cells simultaneously output their stored content to the bit line assigned to them (shown in blue). Common page sizes are 1 Ki, 2 Ki, 4 Ki (...) cells.

Structure of a cell field

The memory cells are interconnected in a matrix arrangement: 'word lines' connect all control electrodes of the selection transistors in a row, bit lines connect all drain regions of the selection transistors in a column.

At the lower edge of the matrix, the bit lines are connected to the (primary) read/write amplifiers (sense amplifiers). Because they have to fit into the tight grid of the cell array, in the simplest form they consist of two cross-coupled CMOS inverters made up of only four transistors. Their supply voltage is exactly equal to the bit line voltage V_BL. In addition to their function as an amplifier of the cell signal that is read out, their structure also corresponds to that of a simple static memory (latch). The primary sense amplifiers thus simultaneously serve as a memory for a complete memory row.

The switches shown above the sense amplifiers are used in the inactive state to precharge the bit lines to a level of ½ · V_BL, which is exactly the mean value of the voltages of a charged and a discharged cell.

A large number of these memory matrices are interconnected on a memory chip to form a coherent memory area, so the chip is internally divided into submatrices (transparent to the outside). Depending on the design, all data lines are routed to a single data pin or distributed over 4, 8, 16 or 32 data pins. This is then the data width k of the individual DRAM chip; several chips must be combined for wider bus widths.

Address decoding

The adjacent diagram shows the basic structure of the address decoding for a single cell field. The row address is fed to the row decoder via n address lines. The decoder selects exactly one of the 2^n word lines connected to it and activates it by raising its potential to the word line voltage V_WL. The memory row activated as a result in the cell array now outputs its data content to the bit lines. The resulting signal is amplified, stored and at the same time written back to the cell by the (primary) sense amplifiers.

The decoding of the column address and the selection of the data to be read is a two-step process. In a first step, the m address lines of the column address are fed to the column decoder. This selects one of the (usually) 2^m column selection lines connected to it and activates it. In this way, depending on the width of the memory, k bit lines are selected simultaneously. In a second step, in the column selection block, this subset of k bit lines out of the total of k · 2^m bit lines is connected to the k data lines leading towards the outside world. These signals are then amplified by a further read/write amplifier (not shown).

In order to limit crosstalk between neighboring memory cells and their leads, the addresses are usually scrambled during decoding according to a standardized rule, so that they do not appear in the order of their binary significance in the physical arrangement.
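The two-step selection can be illustrated with a small sketch. It is a simplified model, not a description of any real chip: it assumes the row address occupies the upper n bits and the column address the lower m bits of a flat address, that the k bit lines of one column group are numbered contiguously, and it ignores address scrambling. The function names `split_address` and `selected_bit_lines` are hypothetical.

```python
def split_address(addr: int, n: int, m: int) -> tuple[int, int]:
    """Split a flat address into (row, column) for 2**n word lines
    and 2**m column selection lines (assumed layout: row bits on top)."""
    if not 0 <= addr < (1 << (n + m)):
        raise ValueError("address out of range")
    row = addr >> m                   # upper n bits: one of 2**n word lines
    column = addr & ((1 << m) - 1)    # lower m bits: one of 2**m column selection lines
    return row, column


def selected_bit_lines(column: int, k: int) -> range:
    """Indices of the k bit lines (out of k * 2**m) that the column
    selection block connects to the k data lines (assumed contiguous)."""
    return range(column * k, (column + 1) * k)


row, col = split_address(0b1011_0110, n=4, m=4)
print(row, col, list(selected_bit_lines(col, k=4)))
```

With n = m = 4 and k = 4, the model has 16 word lines and 64 bit lines, of which four are routed to the data lines per access.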

Internal processes

Initial state

- In the idle state of a DRAM, the word line is at low potential (U_WL = 0 V). As a result, the cell transistors are non-conductive and the charge stored in the capacitors is retained, apart from undesired leakage currents.

- Both switches sketched in the diagram of the cell field above the sense amplifiers are closed. They keep the two bit lines, which are jointly connected to a sense amplifier, at the same potential (½ · U_BL).

- The voltage supply to the sense amplifier (U_BL) is switched off.

Activation of a memory line

- From the bank and row address transferred with an Activate command (see diagrams for "Burst Read" access), it is first determined in which bank and, if applicable, in which memory block the specified row is located.

- The switches for the 'bit line precharge' are opened. The bit lines that have been charged up to half the bit line voltage are thus decoupled from each voltage source.

- A positive voltage is applied to the word line. The transistors of the cell array thus become conductive. Due to the long word lines, this process can take several nanoseconds and is therefore one of the reasons for the "slowness" of a DRAM.

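- A charge exchange takes place between the cell capacitor and one of the two bit lines connected to a sense amplifier. At the end of the charge exchange, the cell and bit line are charged to a voltage of

  $$U = \frac{U_{BL}}{2} \cdot \left(1 \pm \frac{C}{C + C_{BL}}\right) = \frac{U_{BL}}{2} \pm \frac{U_{BL}}{2} \cdot \frac{C}{C + C_{BL}}$$

  where the first term is the original bit line voltage and the second term is the voltage change. The sign of the voltage change (±) depends on whether a '1' or a '0' was previously stored in the cell. Because the bit line capacitance is large compared with the cell capacitance (C_BL / C = 5…10, owing to the line length), the voltage change is only about 100 mV. This charge exchange also takes a few nanoseconds due to the high bit line capacitance.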

- Towards the end of this charge exchange, the supply voltage (U_BL) of the primary sense amplifiers is switched on. They begin to amplify the small voltage difference between the two bit lines, charging one of them to U_BL and discharging the other to 0 V.
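The size of the read signal follows from charge conservation between the cell capacitor C and the bit line capacitance C_BL, which is precharged to ½ · U_BL. The following sketch evaluates this for the capacitance ratios mentioned above; the bit line voltage of 1.8 V is an assumed example value, and the function name `bit_line_voltage` is hypothetical.

```python
def bit_line_voltage(u_bl: float, c_ratio: float, stored_one: bool) -> float:
    """Voltage on cell and bit line after charge sharing.

    c_ratio is C_BL / C. The bit line starts at u_bl / 2 and the cell
    at u_bl (stored '1') or 0 V (stored '0')."""
    share = 1.0 / (1.0 + c_ratio)          # equals C / (C + C_BL)
    sign = 1.0 if stored_one else -1.0
    return u_bl / 2.0 * (1.0 + sign * share)


U_BL = 1.8  # volts; assumed example value
for ratio in (5, 8, 10):
    delta = bit_line_voltage(U_BL, ratio, stored_one=True) - U_BL / 2.0
    print(f"C_BL/C = {ratio:2d}: voltage change = {delta * 1000:.0f} mV")
```

For a ratio of 8 the change is 100 mV, which is why the sense amplifiers have to work with such a small differential signal.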

Reading data

- To read data, the column address must now be decoded by the column decoder.

- The column select line (CSL) corresponding to the column address is activated and connects one or more bit lines at the output of the primary sense amplifier to data lines which lead out of the cell array. Due to the length of these data lines, the data at the edge of the cell field must be amplified again with a (secondary) sense amplifier.

- The data read out are loaded in parallel into a shift register, where they are synchronized with the external clock and output in amplified form.

Writing of data

- The data to be written into the DRAM are read in almost simultaneously with the column address.

- The column address is decoded by the column decoder and the corresponding column selection line is activated. This re-establishes the connection between a data line and a bit line.

- In parallel with the decoding of the column address, the write data arrive at the column selection block and are passed on to the bit lines. The (weak) primary sense amplifiers are overwritten and now assume a state that corresponds to the write data.

- The sense amplifiers now support the reloading of the bit lines and the storage capacitors in the cell array.

Deactivation of a memory line

- The word line voltage is reduced to 0 V or a slightly negative value. This makes the cell transistors non-conductive and decouples the cell capacitors from the bit lines.

- The power supply to the sense amplifiers can now be switched off.

- The bit line precharge switches connecting the two bit lines are closed. The initial state (U = ½ · U_BL) is thus restored on the bit lines.

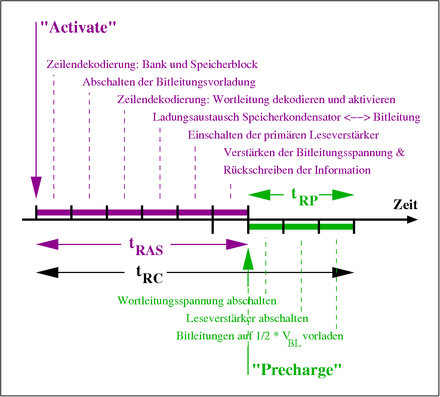

Timing parameters of the internal processes

Note: memory modules are often labelled with a set of numbers in the form CL12-34-56, where the first number stands for CL, the second for t_RCD and the third for t_RP; an occasionally appended fourth number denotes t_RAS. This set of numbers is also written as (CL) (tRCD) (tRP) (tRAS).

t_RCD

The parameter t_RCD (RAS-to-CAS delay, row-to-column delay) describes the time that must have elapsed after the activation of a word line (activate) before a read command (read) may be sent. The parameter results from the fact that the amplification of the bit line voltage and the writing back of the cell content must be completed before the bit lines can be connected onward to the data lines.

CL

The parameter CL (CAS latency, also t_CL) describes the time that passes between the sending of a read command and the receipt of the data.

See also: Column Address Strobe Latency

t_RAS

The parameter t_RAS (RAS pulse width, Active Command Period, Bank Active Time) describes the time that must have elapsed after activating a row (or a row in a bank) before a command to deactivate the row (precharge, closing the bank) may be sent. The parameter results from the fact that the amplification of the bit line voltage and the writing back of the information into the cell must be fully completed before the word line can be deactivated. The smaller this value, the better.

t_RP

The parameter t_RP (Row Precharge Time) describes the minimum time that must have elapsed after a precharge command before a new command to activate a row in the same bank can be sent. This time is defined by the condition that all voltages in the cell field (word line voltage, supply voltage of the sense amplifiers) are switched off and the voltages of all lines (especially those of the bit lines) have returned to their starting level.

t_RC

The parameter t_RC (Row Cycle Time) describes the length of time that must have elapsed between two successive activations of any two rows in the same bank. The value largely corresponds to the sum of the parameters t_RAS and t_RP and thus describes the minimum time required to refresh a memory row.
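How these parameters combine can be shown with a short calculation. The sketch below parses a label of the form described above and converts it to nanoseconds. It assumes that the numbers on the label are counts of memory clock cycles; the label `CL11-11-11-28` and the 800 MHz clock are made-up example values, and the helper names are hypothetical.

```python
import re


def parse_timings(label: str) -> dict[str, int]:
    """Parse a label such as 'CL12-34-56' or 'CL11-11-11-28' into cycle counts."""
    match = re.fullmatch(r"CL(\d+)-(\d+)-(\d+)(?:-(\d+))?", label)
    if match is None:
        raise ValueError(f"unrecognized timing label: {label!r}")
    names = ("CL", "tRCD", "tRP", "tRAS")
    # The fourth number (tRAS) is optional and simply omitted if absent.
    return {n: int(v) for n, v in zip(names, match.groups()) if v is not None}


def cycles_to_ns(cycles: int, clock_mhz: float) -> float:
    """Convert a number of clock cycles to nanoseconds."""
    return cycles * 1000.0 / clock_mhz


t = parse_timings("CL11-11-11-28")   # example label, not from a real module
clock_mhz = 800.0                    # assumed memory clock

# Reading from a row that is not yet open: activate, wait tRCD, read, wait CL.
closed_row_read = cycles_to_ns(t["tRCD"] + t["CL"], clock_mhz)
# Row cycle time, approximated as tRAS + tRP as described above.
row_cycle = cycles_to_ns(t["tRAS"] + t["tRP"], clock_mhz)
print(f"first data after {closed_row_read} ns, t_RC about {row_cycle} ns")
```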

DRAM-specific properties

Address multiplex

Addressing

The address lines of a DRAM are usually multiplexed, whereas with SRAMs the complete address bus is usually routed to pins for the sake of higher speed, so that access can take place in a single operation.

Asynchronous DRAMs (EDO, FPM) have two input pins, RAS (Row Address Select / Strobe) and CAS (Column Address Select / Strobe), which define the use of the address lines: on a falling edge of RAS, the address present on the address lines is interpreted as a row address; on a falling edge of CAS, it is interpreted as a column address.

RAS

Row Address Select or Row Address Strobe: this control signal is present during a valid row address. The memory chip stores this address in a buffer.

CAS

Column Address Select or Column Address Strobe: this control signal is present during a valid column address. The memory chip stores this address in a buffer.

Synchronous DRAMs (SDRAM, DDR-SDRAM) also have the control inputs RAS and CAS, but here they have lost their immediate function. Instead, with synchronous DRAMs, the combination of all control signals (CKE, RAS, CAS, WE, CS) is evaluated on a rising clock edge in order to decide whether and in what way the signals on the address lines are to be interpreted.

The advantage of saving external address lines is offset by an apparent disadvantage in the form of delayed availability of the column address. However, the column address is only required after the row address has been decoded, a word line has been activated and the bit line signal has been evaluated. Since this internal process takes approx. 15 ns, the delayed arrival of the column address has no negative effect.

Burst

The images on the right show read access in so-called burst mode for an asynchronous and a synchronous DRAM, as used with BEDO DRAM. The characteristic element of a burst access (when reading or writing) is the immediate succession of the data (Data1, ..., Data4). The data belong to the same row of the cell field and therefore have the same row address but different column addresses (Col1, ..., Col4). The time required to provide the next data bit within the burst is very short compared to the time required to provide the first data bit, measured from the activation of the row.

While all column addresses within the burst had to be specified for asynchronous DRAMs (Col1, ..., Col4), only the start address is specified for synchronous DRAMs (SDR, DDR). The column addresses required for the rest of the burst are then generated by an internal counter.

The high data rate within a burst is explained by the fact that only the sense amplifiers need to be read (or written) within a burst. The sense amplifiers, made up of 2 CMOS inverters (4 transistors), correspond to the basic structure of the cell of a static RAM (see adjacent diagrams). To provide the next burst data bit, only the column address needs to be decoded and the corresponding column selection line activated (this corresponds to the connection lines to the gate connection of the transistors M5 and M6 of an SRAM cell).
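The internal counter can be modelled in a few lines. This sketch assumes a sequential burst that wraps around inside a block aligned to the burst length, which is one common ordering in synchronous DRAMs; other orderings exist, and the function name `burst_columns` is hypothetical.

```python
def burst_columns(start: int, length: int) -> list[int]:
    """Column addresses produced by an internal burst counter.

    Assumes a sequential burst that wraps within a block aligned to
    `length`, which must be a power of two."""
    if length <= 0 or length & (length - 1):
        raise ValueError("burst length must be a power of two")
    base = start & ~(length - 1)   # start of the aligned block
    return [base | ((start + i) & (length - 1)) for i in range(length)]


print(burst_columns(5, 4))   # only the start address 5 is supplied externally
```

The controller supplies only the start column; the remaining addresses of the burst come from the counter inside the chip.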

Refresh

The refreshing of the memory content, which is necessary at short time intervals, is generally referred to as refresh. The necessity arises from undesired leakage currents, which change the amount of charge stored in the capacitors. The leakage currents have an exponential temperature dependency: the time after which the content of a memory cell can no longer be correctly evaluated (retention time) is halved with a temperature increase of 15 to 20 K. Commercially available DRAMs usually have a prescribed refresh period of 32 ms or 64 ms.

Technically, the primary sense amplifiers in the memory chip (see figure above) function as latch registers. They are designed as SRAM cells, i.e. as flip-flops. When a certain row (page) is selected, the entire row is copied into the latches of the sense amplifiers. Since the outputs of the amplifiers are also connected to their inputs, the amplified signals are written back directly into the dynamic memory cells of the selected row, which are thus refreshed.
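The temperature dependency can be made concrete with a small calculation. The sketch assumes a purely exponential model in which the retention time halves for every fixed temperature step; the reference retention time of 64 ms and the function name `retention_time_ms` are illustrative assumptions, not datasheet values.

```python
def retention_time_ms(reference_ms: float, delta_t_kelvin: float,
                      halving_step_kelvin: float = 15.0) -> float:
    """Retention time after a temperature rise of delta_t_kelvin,
    assuming it halves every halving_step_kelvin (15 to 20 K per the text)."""
    return reference_ms * 0.5 ** (delta_t_kelvin / halving_step_kelvin)


# Example: a cell that just holds its content for 64 ms at the reference temperature.
for rise in (0, 15, 30, 45):
    print(f"+{rise:2d} K: {retention_time_ms(64.0, rise):.1f} ms")
```

Under these assumptions a rise of 30 K cuts the retention time to a quarter, which illustrates why the refresh period has to be specified for the worst-case temperature.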

There are different methods of this refresh control:

- RAS-only refresh

  - This method is based on the fact that activating a row is automatically linked to an evaluation and writing back of the cell content. For this purpose, the memory controller must externally apply the row address of the row to be refreshed and activate the row via the control signals (see diagram for RAS-only refresh with EDO-DRAM).

- CAS-before-RAS refresh

  - This refresh method got its name from the control of asynchronous DRAMs, but has been retained in synchronous DRAMs under the name auto-refresh. The name refers to the otherwise impermissible signal sequence in which a falling CAS edge is generated before a falling RAS edge (see diagram for CBR refresh with EDO-DRAM); such signal sequences are normally avoided in digital technology because they are relatively error-prone (e.g. during synchronization). In response to this signal sequence, the DRAM performs a refresh cycle without relying on an external address. Instead, the address of the row to be refreshed is held in an internal counter and automatically incremented after execution.

- Self-refresh

  - This method was introduced for special designs of asynchronous DRAMs and only became mandatory with synchronous DRAMs. With this method, external control or address signals (for the refresh) are largely dispensed with (see diagram for self-refresh with EDO-DRAM). The DRAM is in a power-down mode in which it does not react to external signals (except, of course, the signals that indicate that it is to remain in the power-saving mode). To retain the stored information, a DRAM-internal counter is used, which initiates an auto-refresh (CAS-before-RAS refresh) at predetermined time intervals. In more recent DRAMs (DDR2, DDR3), the refresh period is usually controlled as a function of temperature (Temperature Controlled Self Refresh, TCSR) in order to reduce the operating current in self-refresh at low temperatures.

Depending on the circuit environment, normal operation must be interrupted for the refresh; for example, the refresh can be triggered in a regularly called interrupt routine, which simply reads any memory cell in the respective row using its own counting variable and thus refreshes that row. On the other hand, there are also situations (especially in video memories) in which the entire memory area is addressed at short intervals anyway, so that no separate refresh operation is required. Some microprocessors, such as the Z80, and the latest processor chipsets perform the refresh fully automatically.
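A row counter of the kind used for CAS-before-RAS refresh, or kept as a counting variable in an interrupt routine, can be sketched as follows. The class and function names are hypothetical, and the figure of 8192 rows is an assumed example; the 64 ms refresh period is taken from the text above.

```python
class RefreshRowCounter:
    """Toy model of a refresh row counter: each refresh request
    refreshes the next row and wraps around after the last one."""

    def __init__(self, rows: int) -> None:
        self.rows = rows
        self.next_row = 0

    def refresh(self) -> int:
        """Return the row refreshed by this request and advance the counter."""
        row = self.next_row
        self.next_row = (self.next_row + 1) % self.rows
        return row


def refresh_interval_us(refresh_period_ms: float, rows: int) -> float:
    """Average spacing of refresh requests if they are spread evenly
    so that every row is refreshed once per refresh period."""
    return refresh_period_ms * 1000.0 / rows


counter = RefreshRowCounter(rows=4)
print([counter.refresh() for _ in range(6)])        # wraps after the last row
print(refresh_interval_us(64.0, 8192), "microseconds between requests")
```

Spreading the requests evenly keeps each interruption of normal operation short, at the cost of issuing a refresh request every few microseconds.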

Bank

Before the introduction of synchronous DRAMs, a memory controller had to wait until the information of an activated row was written back and the associated word line was deactivated. Only one row could be active in the DRAM at a time. Since a complete write or read cycle (row cycle time, t_RC) took around 80 ns, access to data from different rows was quite time-consuming.

With the introduction of synchronous DRAMs, 2 (16 MiB SDRAM), then 4 (64 MiB SDRAM, DDR-SDRAM), 8 (DDR3-SDRAM) or even 16 and 32 (RDRAM) memory banks were introduced. Memory banks each have their own address registers and sense amplifiers, so that one row can be active per bank. Operating several banks at the same time avoids high latencies, because while one bank is delivering data, the memory controller can already send addresses for another bank.
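The benefit of several banks can be estimated with a deliberately simple timing model. It assumes that each access to a new row occupies its bank for one row cycle time, that consecutive accesses rotate through the banks, and that accesses must be spaced by at least one burst duration on the shared bus. The 80 ns row cycle time comes from the text; the 10 ns burst duration and the function name `total_time_ns` are assumptions, and real devices impose further constraints that are ignored here.

```python
def total_time_ns(accesses: int, banks: int,
                  t_rc: float = 80.0, t_burst: float = 10.0) -> float:
    """Time until the last of `accesses` row accesses has completed."""
    bank_ready = [0.0] * banks   # earliest time each bank can start a new row cycle
    earliest_next = 0.0          # earliest start allowed by the shared bus
    finish = 0.0
    for i in range(accesses):
        bank = i % banks         # rotate through the banks
        start = max(bank_ready[bank], earliest_next)
        bank_ready[bank] = start + t_rc
        earliest_next = start + t_burst
        finish = start + t_rc
    return finish


print(total_time_ns(8, banks=1))   # every access waits for the previous row cycle
print(total_time_ns(8, banks=4))   # row cycles in different banks overlap
```

In this model eight accesses take 640 ns with a single bank but only 190 ns with four banks, because the row cycles of different banks overlap.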

Prefetch

The significantly lower speed of a DRAM compared to an SRAM is due to the structure and functionality of the DRAM. (Long word lines must be charged, a cell being read can only output its charge slowly to the bit line, and the content read must be evaluated and written back.) Although these times can generally be shortened by modifying the internal structure, the storage density would decrease, so that the space requirement and thus the production price would increase.

Instead, a trick is used to increase the external data transfer rate without having to increase the internal speed. In so-called prefetching , the data are read from several column addresses for each addressing and written to a parallel-serial converter ( shift register ). The data is output from this buffer with the higher (external) clock rate. This also explains the data bursts introduced with synchronous DRAMs and in particular their respective minimum burst length (it corresponds precisely to the length of the shift register used as a parallel-to-serial converter and thus the prefetch factor):

- SDR-SDRAM

  - Prefetch = 1: 1 data bit per data pin is read out per read request.

- DDR SDRAM

  - Prefetch = 2: 2 data bits per data pin are read out per read request and output in a data burst of length 2.

- DDR2 SDRAM

  - Prefetch = 4: 4 data bits per data pin are read out per read request and output in a data burst of length 4.

- DDR3 and DDR4 SDRAM

  - Prefetch = 8: 8 data bits per data pin are read out per read request and output in a data burst of length 8.
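The effect of the prefetch factor on the external data rate can be sketched with a short calculation. It assumes that the internal cell array delivers one prefetch group per internal clock cycle; the 200 MHz internal clock is an illustrative value, and the names `PREFETCH` and `external_rate_mtps` are hypothetical.

```python
# Prefetch factors as listed above (bits per data pin per read request).
PREFETCH = {"SDR": 1, "DDR": 2, "DDR2": 4, "DDR3": 8, "DDR4": 8}


def external_rate_mtps(generation: str, internal_clock_mhz: float) -> float:
    """External transfers per second and data pin (in millions), assuming
    one prefetch group is fetched per internal clock cycle."""
    return PREFETCH[generation] * internal_clock_mhz


for generation in ("SDR", "DDR", "DDR2", "DDR3"):
    rate = external_rate_mtps(generation, 200.0)
    print(f"{generation:4s}: {rate:.0f} MT/s per pin at a 200 MHz internal clock")
```

The internal array speed stays the same in every row of the output; only the width of the parallel fetch and the speed of the external interface change.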

Redundancy

As the storage density increases, the probability of defective memory cells increases. To increase the yield of functional DRAMs, so-called redundant elements are provided in the chip design. These are additional row and column lines with corresponding memory cells. If defective memory cells are found during the test of the chips, the affected row (word line) or column line is deactivated and replaced by one (or more) lines from the set of otherwise unused redundant elements (remapping).

The following procedures are used to permanently save this configuration change in the DRAM:

- With the help of a focused laser pulse, appropriately prepared contacts in the decoding circuits of the row or column address are vaporized ( laser fuse ).

- With the help of an electrical overvoltage pulse, electrical contacts are either opened ( e-fuse ) or closed (e.g. by destroying a thin insulating layer) ( anti e-fuse ).

In both cases, these permanent changes are used to program the address of the line to be replaced and the address of the redundant line to be used for it.
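The remapping itself amounts to a small lookup table that is programmed once during testing. The following sketch models it for rows only; the class name `RowRepairMap`, its methods and the row numbers are hypothetical, and in a real chip the table is realized by fuses in the address decoder rather than in software.

```python
class RowRepairMap:
    """Toy model of row redundancy: defective rows are permanently
    redirected to spare rows; all other rows are used unchanged."""

    def __init__(self, spare_rows: list[int]) -> None:
        self._spares = list(spare_rows)   # unused redundant rows
        self._map: dict[int, int] = {}    # defective row -> spare row

    def repair(self, defective_row: int) -> int:
        """Assign a spare row to a defective row (done once, at test time)."""
        if defective_row in self._map:
            return self._map[defective_row]
        if not self._spares:
            raise RuntimeError("no redundant rows left; chip cannot be repaired")
        spare = self._spares.pop(0)
        self._map[defective_row] = spare
        return spare

    def resolve(self, row: int) -> int:
        """Physical row actually used for a given row address."""
        return self._map.get(row, row)


repair_map = RowRepairMap(spare_rows=[1024, 1025])
repair_map.repair(17)                                   # row 17 failed the test
print(repair_map.resolve(17), repair_map.resolve(18))   # 17 is redirected, 18 is not
```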

Redundant elements account for approximately 1 percent of the elements built into a DRAM design.

The use of redundant elements to correct faulty memory cells must not be confused with active error correction based on parity bits or error-correcting codes (FEC). The error correction described here using redundant elements takes place once before the memory component is delivered to the customer. Subsequent errors (degradation of the component or transmission errors in the system) cannot be eliminated in this way.

See also: Memory module: ECC

Modules

Entire memory modules are often confused with the actual memory chips. The difference is reflected in the size designation: DIMMs are measured in mebibytes or gibibytes (MiB or GiB), whereas an individual memory chip on the DIMM is measured in mebibits or gibibits. Advances in manufacturing technology mean that manufacturers can accommodate more and more memory cells on the individual chips, so that 512 MiBit components are readily available. A memory module that complies with the standard is only created by interconnecting individual SDRAM chips.

History

| Year of introduction | Typical burst cycle time (ns) | DRAM type |
|---|---|---|
| 1970 | 60 ... 300 | classic DRAM |
| 1987 | 40 ... 50 | FPM-DRAM (Fast Page Mode DRAM) |
| 1995 | 20 ... 30 | EDO-RAM (Extended Data Output RAM) |
| 1997 | 6 ... 15 | SDRAM (Synchronous Dynamic RAM) |
| 1999 | 0.83 ... 1.88 | RDRAM (Rambus Dynamic RAM) |
| 2000 | 2.50 ... 5.00 | DDR-SDRAM (Double Data Rate SDRAM) |
| 2003 | 1.00 ... 1.25 | GDDR2-SDRAM |
| 2004 | 0.94 ... 2.50 | DDR2 SDRAM |
| 2004 | 0.38 ... 0.71 | GDDR3-SDRAM |
| 2006 | 0.44 ... 0.50 | GDDR4-SDRAM |
| 2007 | 0.47 ... 1.25 | DDR3 SDRAM |
| 2008 | 0.12 ... 0.27 | GDDR5-SDRAM |
| 2012 | 0.25 ... 0.62 | DDR4 SDRAM |
| 2016 | 0.07 ... 0.10 | GDDR5X-SDRAM |
| 2018 | 0.07 | GDDR6-SDRAM |

The first commercially available DRAM chip was the Type 1103, introduced by Intel in 1970. It contained 1024 memory cells (1 KiBit). The principle of the DRAM memory cell was developed in 1966 by Robert H. Dennard at IBM's Thomas J. Watson Research Center.

Since then, the capacity of a DRAM chip has increased by a factor of 8 million and the access time has been reduced to a hundredth. Today (2014), DRAM ICs have capacities of up to 8 GiBit (single die) or 16 GiBit (twin die) and access times of 6 ns. The production of DRAM memory chips is one of the top-selling segments in the semiconductor industry. The products are traded speculatively; there is a spot market.

In the beginning, DRAM memories were constructed from individual memory chips in DIL packages. For 16 KiB of working memory (for example in the Atari 600XL or CBM 8032), 8 chips of type 4116 (16384 cells of 1 bit) or two chips of type 4416 (16384 cells of 4 bit) were required. For 64 KiB, 8 chips of type 4164 (C64-I) or 2 chips of type 41464 (C64-II) were needed. IBM PCs were initially sold with 64 KiB as the minimum memory configuration. Nine chips of type 4164 were used here; the ninth chip stores the parity bits.

Before SIMMs came onto the market, there were, for example, motherboards for computers with Intel 80386 processors that could be equipped with 8 MiB of working memory made up of individual chips. For this, 72 individual chips of type 411000 (1 MiBit) had to be pressed into the sockets. This was a lengthy and error-prone procedure. If the same board was to be equipped with only 4 MiB of RAM, using the considerably cheaper 41256 (256 KiBit) chips instead of the 411000 type, 144 individual chips had to be inserted: 9 chips make 256 KiB, and 16 such groups of 9 chips each resulted in 4 MiB. Larger chips were therefore soldered to form modules that required considerably less space.
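The chip counts quoted above follow from simple arithmetic, sketched below. The calculation assumes 1-bit-wide chips, eight of which in parallel form one byte-wide group, plus an optional ninth chip per group for parity; the function name `chips_needed` is hypothetical.

```python
KIBIT = 1024            # bits
MIBIT = 1024 * KIBIT    # bits
KIB = 1024              # bytes
MIB = 1024 * KIB        # bytes


def chips_needed(capacity_bytes: int, chip_bits: int, parity: bool = True) -> int:
    """Number of 1-bit-wide chips for a given capacity.

    Eight chips in parallel store chip_bits bytes; a ninth chip per
    group holds the parity bits if parity is True."""
    groups, remainder = divmod(capacity_bytes, chip_bits)
    if remainder:
        raise ValueError("capacity is not a whole number of chip groups")
    return groups * (9 if parity else 8)


print(chips_needed(8 * MIB, 1 * MIBIT))                   # 411000 chips, with parity
print(chips_needed(4 * MIB, 256 * KIBIT))                 # 41256 chips, with parity
print(chips_needed(64 * KIB, 64 * KIBIT))                 # 4164 chips, IBM PC with parity
print(chips_needed(64 * KIB, 64 * KIBIT, parity=False))   # 4164 chips without parity
```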

Application

Main memory

DRAM is normally used in the form of memory modules as the main memory of the processor. DRAMs are often classified according to the type of device interface. In the main applications, the interface types Fast Page Mode DRAM (FPM), Extended Data Output RAM (EDO), Synchronous DRAM (SDR), and Double Data Rate Synchronous DRAM (DDR) were developed in chronological order. The properties of these DRAM types are standardized by the JEDEC consortium. In addition, the Rambus DRAM interface exists in parallel to SDR/DDR and is mainly used for memory in servers.

Special applications

Special RAM is used as image and texture memory for graphics cards, for example GDDR3 (Graphics Double Data Rate SDRAM).

Restricting the memory to a special application area allows the refresh of the memory cells to be optimized; in an image memory, for example, it can be scheduled during the line retrace. It may also be tolerable if a single pixel temporarily shows the wrong color, so the worst memory cell on the chip does not have to be taken into account. Therefore, despite the same production technologies, significantly faster DRAMs can be produced.

Further types have been developed for special applications: Graphics DRAM (also Synchronous Graphics RAM, SGRAM) is optimized for use on graphics cards, for example through wider data paths, but uses the basic functionality of, for instance, a DDR DRAM. The forerunners of graphics RAM were Video RAM (VRAM), a Fast Page Mode RAM optimized for graphics applications with two ports instead of one, and then Window RAM (WRAM), which had EDO features and a dedicated display port.

DRAM types optimized for use in network components have been given the names Network-RAM, Fast-Cycle-RAM and Reduced Latency RAM by various manufacturers. In mobile applications, such as cell phones or PDAs, low energy consumption is important; for this purpose, mobile DRAMs are developed in which the power consumption is reduced through special circuit and manufacturing technology. The pseudo-SRAM (also called cellular RAM or, by other manufacturers, 1T-SRAM = 1-transistor SRAM) takes on a hybrid role: the memory itself is a DRAM that behaves like an SRAM to the outside world. This is achieved by a logic circuit that converts the SRAM-typical access mechanism to the DRAM control, while the regular refreshing of the memory contents, which is fundamentally necessary for dynamic memories, is carried out by circuits contained in the chip.

In the early days of DRAMs, when they were often still built in a ceramic DIL housing, hobbyists used them as image sensors for DIY cameras. To do this, the metal cover on the ceramic housing was carefully removed; the die was then directly underneath, without any potting compound. A lens was placed in front of it, which projected the image precisely onto the die surface. If the chip was completely filled with 1s at the beginning of the exposure, i.e. all storage capacitors were charged, the charges were discharged at different speeds by the incident light, depending on its intensity. After a certain (exposure) time, the cells were read out and the image was then interpreted in 1-bit resolution. For grayscale, the same image had to be taken several times with different exposure times. An additional complication resulted from the fact that, in order to avoid crosstalk, the memory cells are not simply arranged according to their binary addresses; instead, the address bits are deliberately "scrambled". Therefore, after reading out, the image data first had to be brought into the correct arrangement with the inverse pattern. This is hardly possible with today's chips, as they are usually embedded in plastic potting compound; in addition, digital cameras are now generally accessible and affordable.

Types

A variety of DRAM types have evolved over time.

A number of non-volatile RAM (NVRAM) technologies are currently under development.

The storage capacity is specified in bits and bytes.

RAM used as main memory is usually installed in the form of memory modules.

The net total size of RAM modules used as main memory is practically always a power of 2.


References

- J. Alex Halderman, Seth D. Schoen, Nadia Heninger, William Clarkson, William Paul, Joseph A. Calandrino, Ariel J. Feldman, Jacob Appelbaum, Edward W. Felten: Lest We Remember: Cold Boot Attacks on Encryption Keys. In: Proc. 2008 USENIX Security Symposium. February 21, 2008, pp. 45-60.

![U = \frac{U_{BL}}{2} \cdot \left(1 \pm \frac{C}{C + C_{BL}}\right) = \frac{U_{BL}}{2} \pm \frac{U_{BL}}{2} \cdot \frac{C}{C + C_{BL}}, i.e. original bit line voltage plus or minus the voltage change](https://wikimedia.org/api/rest_v1/media/math/render/svg/aa8aef3d4434f1d95ec6bd76b457122bd11f2147)