Processor

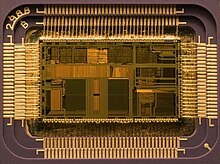

A processor is a (usually very much reduced and usually freely) programmable arithmetic logic unit, i.e. a machine or an electronic circuit that controls other machines or electrical circuits according to given commands and thereby drives an algorithm (process), which usually includes data processing. This article describes only this meaning, using the processor of a computer as an example. The best-known processors are the main processors, also called central processing units (CPU for short; German abbreviation ZVE), of computers or computer-like devices, in which they execute commands. Processors are most widespread as microcontrollers in embedded systems (e.g. in washing machines, ticket machines, DVD players, smartphones, etc.).

Understanding of terms

In earlier usage, the term “processor” referred both to the component (a semiconductor chip in a plastic housing that is plugged into a socket with its pins) and to a data-processing logic unit. Today, however, many processor chips contain several so-called processor cores, each of which is a (largely) independent logic unit. The term processor is therefore now generally understood to mean the component; when the data-processing logic unit is meant, it is usually called the processor core.

Figurative meanings of the term CPU

The term CPU is also used colloquially in other contexts for a central unit (German abbreviation ZE); in this case it can stand for a central main computer (a complete computer) to which individual terminal workstations are connected. Sometimes the term is also used as a metaphor, for example in computer games as "a game against the CPU".

General information

The main components of a processor (core) are the arithmetic unit (in particular the arithmetic logic unit, ALU) and the control unit (including the address unit). In addition, it usually contains several registers and a memory management unit (MMU) that manages the main memory. The central task of the processor is to execute the machine program: arithmetic and logical operations that process data from internal or external sources, for example the main memory.

In addition to these main components, which provide the basic functions, there can be other processing units that provide specialized functions and are intended to relieve the actual processor; these units are usually referred to as coprocessors. Examples are the mathematical coprocessor for floating point operations (the floating point unit), which was a separate component until the 1990s, as well as graphics and sound processors. In this context, the central processor with the basic functions described in the previous paragraph is also referred to as the main processor (or CPU for short). Further synonyms are central processing unit (German abbreviation ZVE) and central unit. The modern form of the processor is the microprocessor, which combines all components of the processor in one integrated circuit (microchip). Modern processors for desktop computers and notebooks, but also for smartphones and tablet computers, are often multi-core processors with two, four or more processor cores. The processor cores are often independent "processors", each with its own control unit and arithmetic unit, on one chip. Examples are the Intel Core 2, the AMD Athlon X2 and the Nvidia Tegra 3. The literature does not clearly delimit the terms processor, main processor, CPU and processor core; see the section Main processor, CPU and processor core.

Processors are often used in embedded systems to control household appliances, industrial equipment, consumer electronics, etc. In mainframes, proprietary processor architectures were usually used in the past, for example by IBM (PowerPC, Cell processor) or Sun (SPARC processor); today, adapted versions of the popular PC processor models are predominantly used.

Processors for embedded systems make up around 95 percent of the processor market, 90 percent of which are so-called microcontrollers , which in addition to the actual processor contain other functions (e.g. special hardware interfaces or directly integrated sensors ). Only about 5 percent are used in PCs , workstations or servers .

Historical development

In the 1930s, the arithmetic unit of a computer initially consisted of relays and mechanical components, for example in the Zuse Z3. These first computers were therefore electromechanical machines that were slow and extremely prone to failure. In the 1940s, computers began to be built with electron tubes, such as the ENIAC. This made the computers faster and less prone to failure. Initially these computers were expensive one-off projects, but the technology matured steadily over the course of the 1950s. Tube computers gradually became series-produced items that were quite affordable for universities, research institutes and companies. To achieve this, the number of tubes required had to be reduced to a minimum, so tubes were used only where they were essential. Designers therefore began to place the main memory and the CPU registers on a magnetic drum, to perform arithmetic operations serially, and to implement the sequence control with a diode matrix. A typical representative of this generation of computers was the LGP-30.

The term CPU was first mentioned in the early 1950s. An IBM brochure from 1955 (705 EDPM) first spells out the term “Central Processing Unit”, later adds the abbreviation CPU in brackets, and from then on uses only the short form. Older IBM brochures do not use the term; for example, the 1954 brochure "Magnetic Cores for Memory in Microseconds in a Great New IBM Electronic Data Processing Machine for Business", which also presents the IBM 705, speaks only of "data processing" in the relevant places.

In the 1950s, the unreliable electron tubes were replaced by transistors, which also reduced the power consumption of computers. Initially, processors were built from individual transistors. Over the years, however, more and more transistor functions were accommodated on integrated circuits (ICs). At first these were just individual gates, but increasingly entire registers and functional units such as adders and counters were integrated, and eventually even register banks and arithmetic units on one chip. The main processor could be accommodated in a single control cabinet, which led to the term mainframe, i.e. "main frame" or "main cabinet". This was the era of the minicomputer, which no longer filled an entire hall but only one room. The increasing integration of more and more transistor and gate functions on one chip and the steady shrinking of transistor dimensions then led almost inevitably, in the early 1970s, to the integration of all functions of a processor on one chip: the microprocessor. Initially ridiculed for their comparatively low performance (legend has it that an IBM engineer said of the first microprocessor: “Nice, but what's that good for?”), microprocessors have now replaced all previous technologies for building a main processor.

This trend continued in the decades that followed. The math coprocessor was integrated into the (main) processor at the end of the 1980s and the graphics processor at the end of the 2000s, cf. APU .

Structure / functional units

A processor (core) consists at least of registers (memory), an arithmetic unit (the arithmetic logic unit, ALU for short), a control unit and the data lines (buses) that enable communication with other components (see figure below). These components can generally be subdivided further: the control unit, for example, contains a command pipeline for more efficient processing of commands, usually with several stages including the command decoder, as well as an address unit; the ALU contains, for example, hardware multipliers. In addition, especially in modern microprocessors, there are sometimes much more finely subdivided units that can be used or allocated flexibly, as well as replicated units that allow several commands to be processed simultaneously (see for example simultaneous multithreading, hyper-threading, out-of-order execution).

The memory management unit and a (possibly multi-level) cache are often integrated in today's processors (level 1 cache "L1" to level 4 cache "L4"). Sometimes an I/O unit is also integrated, often at least an interrupt controller.

In addition, there are often specialized computing units, such as a floating point unit or a unit for vector functions or for signal processing. From this point of view, the transitions to microcontrollers or to a system-on-a-chip, which combine further components of a computer system in one integrated circuit, are sometimes fluid.

Main processor, CPU and processor core

A processor consists primarily of the control unit and the arithmetic unit (ALU). However, there are other processing units that do not contain a control unit but are often also referred to as processors. These units, generally known as coprocessors, usually provide specialized functions. Examples are the floating point unit as well as graphics and sound processors. The term CPU (central processing unit [ˈsɛntɹəl ˈpɹəʊsɛsɪŋ ˈjuːnɪt]) or main processor is used to distinguish a “real” processor with a control and arithmetic unit from these coprocessors.

Modern microprocessors are often designed as so-called multi-core processors. Together with appropriate software, they allow a further increase in overall computing power without a noticeable increase in clock frequency (the technique used until the 2000s to increase the computing power of a microprocessor). Multi-core processors consist of several independent units, each with an arithmetic unit and a control unit, around which further components such as cache and memory management unit (MMU) are arranged. Each of these units is called a processor core. The terms single-core, dual-core, quad-core and hexa-core processor (six-core processor) are common; triple-core, octa-core (eight-core) and deca-core (ten-core) processor are used only rarely. Since the cores are independent processors, the individual cores are often referred to as CPUs. This use of “CPU” as a synonym for “core” serves, for example in multi-core processors or a system-on-a-chip (SoC) with further integrated units such as a graphics processor (GPU), to distinguish the cores with control and arithmetic unit from the other units; see, among others, Accelerated Processing Unit (APU).

The classic convention of calling one control unit plus one ALU a CPU, core or processor is becoming increasingly blurred. Today's processors (including single-core processors) often have control units that each manage several hardware threads (multi-threading or hyper-threading); the operating system “sees” more processor cores than there actually are (fully-fledged) control units. In addition, a control unit often drives several ALUs and further assemblies such as a floating point arithmetic unit, a vector unit (see also AltiVec, SSE) or a cryptography unit. Conversely, several control units sometimes have to share these special processing units, which prevents a clear assignment.

Control unit

The control unit controls the execution of the instructions. It ensures that the machine command in the command register is decoded by the command decoder and executed by the arithmetic unit and the other components of the computer system. For this purpose, the command decoder uses the instruction table to translate binary machine commands into corresponding instructions (microcode) that activate the circuits required to execute the command. Three essential registers, i.e. very quickly accessible processor-internal memories, are required:

- The instruction register : It contains the machine instruction currently to be executed.

- The command counter (English program counter ): This register points to the next command when the command is executed. (A jump instruction loads the address of its jump destination here.)

- The status register : It uses so-called flags to indicate the status that other parts of the computer system, among others the arithmetic unit and the control unit, generate when certain commands are executed, so that subsequent commands can evaluate it. Examples: the result of an arithmetic or comparison operation is zero, negative or the like; a carry has to be taken into account in an arithmetic operation.
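The way a status register is filled can be sketched in a few lines. The following Python snippet is only an illustration under assumptions: the 16-bit word width and the flag names `zero`, `sign` and `carry` are chosen for the example and do not describe a particular processor.

```python
def add16(a, b):
    """Add two 16-bit values and derive typical status flags (illustrative)."""
    raw = (a & 0xFFFF) + (b & 0xFFFF)    # unbounded sum of both operands
    result = raw & 0xFFFF                # what actually fits into the register
    flags = {
        "zero": result == 0,             # result is zero
        "sign": bool(result & 0x8000),   # highest bit set: negative in two's complement
        "carry": raw > 0xFFFF,           # the sum did not fit into 16 bits
    }
    return result, flags


result, flags = add16(0xFFFF, 0x0001)
print(hex(result), flags)
```

A subsequent conditional jump would then only need to inspect `flags` instead of repeating the comparison.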

In RISC processors, no command decoder is sometimes necessary - in some RISC processors the command bits interconnect the corresponding ALU and register units directly. There is no microcode there either. Most modern processor architectures are RISC-like or have a RISC core for the frequent, simple instructions as well as a translating emulation layer in front of them, which translates complex instructions into several RISC instructions.

An explicit command register can also be replaced by a pipeline . Sometimes several commands are being processed at the same time, then the order of their processing can also be rearranged ( out-of-order execution ).

Arithmetic unit and register

The arithmetic unit carries out the elementary operations of a processor. It consists on the one hand of the arithmetic-logical unit (ALU) and on the other hand of the working registers. It can perform both arithmetic (e.g. adding two numbers) and logical (e.g. AND or OR) operations. Due to the complexity of modern processors, which usually have several arithmetic units with specialized functions, one generally speaks of the operational unit .

The working registers can hold data (as data registers) and, depending on the processor type, also addresses (as address registers). Usually, operations can be carried out directly only on the values in the registers. They therefore represent the first level of the memory hierarchy. The properties of the registers, in particular their size and number (which depend on the processor type), determine, among other things, the performance of the respective processor.

A special address register is the stack pointer, which determines where the return address is stored when a subroutine is called. Copies of registers are then often saved on the stack as well, and new local variables are created there.

Data lines

The processor is connected to other components via various buses (signal lines).

- Data is exchanged with the main memory via the data bus , such as the information for the working register and the command register. Depending on the processor architecture used, a main processor (a CPU) has a single bus for data from the main memory ( Von Neumann architecture ) or several (usually two) separate data lines for the program code and normal data ( Harvard architecture ).

- The address bus is used to transfer memory addresses. It addresses (selects) one memory cell of the RAM, into which, depending on the signal on the control bus, the data currently on the data bus is written, or from which the data is read, i.e. placed on the data bus.

- Via the control bus, the processor controls, among other things, whether data is currently being written or read, whether it hands the bus over to another bus master as part of direct memory access (DMA), or whether the address on the address bus refers to a peripheral port instead of the RAM (for isolated I/O). Input lines, for example, trigger a reset or an interrupt, supply the processor with a clock signal, or receive a "bus request" from a DMA device.

As part of the control unit, the so-called bus interface is connected between the data lines and the registers; it controls the accesses and prioritizes them when several subunits issue requests at the same time.

Caches and MMU

Modern processors that are used in PCs or other devices that require fast data processing are equipped with so-called caches. Caches are buffers that temporarily hold the most recently processed data and commands and thus allow them to be reused quickly. They represent the second level of the memory hierarchy. A processor today normally has up to four cache levels:

- Level 1 cache (L1 cache): This cache runs with the processor clock. It is very small (around 4 to 256 kilobytes ) but can be accessed very quickly due to its position in the processor core.

- Level 2 cache (L2 cache): The L2 cache is usually located in the processor, but not in the core itself. It is between 64 kilobytes and 12 megabytes .

- Level 3 cache (L3 cache): With multi-core processors , the individual cores share the L3 cache. It is the second slowest of the four caches, but mostly already very large (up to 256 megabytes ).

- Level 4 cache (L4 cache): If available, then mostly outside the CPU on an interposer or the mainboard. It is the slowest of the four caches (rarely over 128 megabytes ).
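The basic idea behind every cache level, keeping recently used values close at hand, can be sketched as follows. This is a deliberately simplified model, not how hardware caches are organized: the class name `TinyCache`, the capacity of four entries and the least-recently-used replacement policy are assumptions made for the illustration.

```python
from collections import OrderedDict


class TinyCache:
    """Small cache in front of a slower memory (illustrative, LRU replacement)."""

    def __init__(self, memory, capacity=4):
        self.memory = memory          # the slow backing store (here: a dict)
        self.capacity = capacity      # maximum number of cached entries
        self.lines = OrderedDict()    # address -> value, oldest entry first
        self.hits = 0
        self.misses = 0

    def read(self, address):
        if address in self.lines:             # hit: value is already cached
            self.hits += 1
            self.lines.move_to_end(address)   # mark as most recently used
            return self.lines[address]
        self.misses += 1                      # miss: fetch from slow memory
        value = self.memory[address]
        self.lines[address] = value
        if len(self.lines) > self.capacity:
            self.lines.popitem(last=False)    # evict the least recently used entry
        return value


memory = {addr: addr * 2 for addr in range(16)}
cache = TinyCache(memory, capacity=4)
for addr in [0, 1, 0, 1, 2, 0]:
    cache.read(addr)
print(cache.hits, cache.misses)
```

In this access pattern, the repeated reads of addresses 0 and 1 are served from the cache, and only the first access to each address reaches the slow memory.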

The memory management unit translates the virtual addresses of the processes being executed into real addresses for all processor cores simultaneously and ensures cache coherence : If a core changes memory content, it must be ensured that the other caches do not contain any outdated values. Depending on their exact location, the cache levels contain data either relating to virtual or real addresses.
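The address translation performed by a memory management unit can be sketched as a table lookup. The snippet below is a minimal sketch under assumptions: a single-level page table, a page size of 4096 bytes and the table contents are invented for the example; real MMUs use multi-level tables and dedicated lookup hardware.

```python
PAGE_SIZE = 4096  # assumed page size in bytes


def translate(virtual_address, page_table):
    """Translate a virtual address into a physical one (single-level table)."""
    page_number, offset = divmod(virtual_address, PAGE_SIZE)
    if page_number not in page_table:
        # A real system would raise a page fault for the operating system here.
        raise LookupError(f"page fault at virtual page {page_number}")
    frame_number = page_table[page_number]
    return frame_number * PAGE_SIZE + offset


# Hypothetical mapping: virtual page -> physical frame
page_table = {0: 5, 1: 2}
print(hex(translate(0x1234, page_table)))
```

The offset within the page is kept unchanged; only the page number is replaced by the frame number.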

Processing of a single command

To illustrate the roles of the sub-units more concretely, here is the sequence of processing a single machine command. The individual steps listed can sometimes run simultaneously or overlap; the numbering has nothing to do with the number of clock cycles that the command requires. Additional details such as processor pipelines or branch prediction lead to further timing refinements, which are omitted here for simplicity. For the same reason, complex calculations that determine a final memory address depending on the selected addressing type are not mentioned.

1. Loading the next command: The content of the command counter, i.e. the address of the next command, is placed on the address bus by the control unit via the bus interface; a read pulse is then signaled to the memory management. The command counter is incremented to the next address in parallel.

2. The memory management puts the data value from this (virtual) RAM address on the data lines; it has found the value in the cache or in the RAM. After the delay caused by the finite access time of the RAM, the content of this memory cell is present on the data lines.

3. The control unit copies this data into the command register via the bus interface.

4. The command is pre-decoded to determine whether it is completely loaded.

5. If the command consists of several bytes, the remaining bytes are fetched from memory (if this has not already happened because of a larger bus width) by repeating steps 1 to 4 and copied into the relevant processor registers.

6. If the command also includes reading a memory cell in the RAM, the control unit places the address for this data on the address lines and a read pulse is signaled. The processor must then wait long enough for the RAM to provide this information reliably. The data bus is then read and its content copied into the responsible processor register.

7. The command is fully decoded, the sub-units required for its processing are activated, and the internal data paths are switched accordingly.

8. The arithmetic unit takes care of the actual processing within the processor, for example the addition of two register contents. The result ends up in one of the processor registers.

9. If the command is a jump or branch command, the result is not stored in a data register but in the command counter.

10. Depending on the result value, the control unit may update the status register with its status flags.

11. If the command also includes writing a result or register content back to the RAM, the control unit places the address for this data on the address lines and the data content on the data lines, and a write pulse is signaled. The processor must then wait long enough for the RAM to accept this information reliably.

12. The command has now been processed, and processing can continue with the next command at step 1 above.

Different architectures

The two essential basic architectures for CPUs are the Von Neumann and Harvard architecture .

In the Von Neumann architecture , named after the mathematician John von Neumann , there is no separation between the memory for data and program code. In contrast, with the Harvard architecture, data and program (s) are stored in strictly separate memory and address spaces, which are typically accessed in parallel by two separate bus systems .

Both architectures have specific advantages and disadvantages. In the Von Neumann architecture, program code and data can in principle be treated identically, so uniform operating system routines can be used for loading and saving. Unlike in the Harvard architecture, the program code can also modify itself or be treated as “data”; machine code can, for example, easily be edited and modified with a debugger. The disadvantages and risks lie in software ergonomics and stability: runtime errors such as a buffer overflow, for example, can modify the program code.

Due to the separation into two physical memories and buses, the Harvard architecture has a potentially higher performance, since data and program accesses can take place in parallel. With a Harvard architecture, the physical separation of data and program makes it easy to separate access rights and to implement memory protection. To prevent program code from being overwritten in the event of software errors, for example, a memory that could only be read during operation (e.g. ROM, punch cards) was used for program code (especially historically), whereas writable and readable memory (e.g. RAM, magnetic-core memory) was used for data.

From a program point of view, practically all modern CPUs present themselves as Von Neumann architecture, but their internal structure corresponds in many aspects to a parallel Harvard architecture for performance reasons. It is not uncommon for a CPU to have several independent data paths internally (especially with the L1 cache) and cache hierarchy levels in order to achieve high performance with as many parallel data paths as possible. The potentially possible data incoherence and access race conditions are prevented internally by complex data protocols and management.

Nowadays, memory areas that contain only data are marked as non-executable, so that exploits that store executable code in data areas cannot execute it. Conversely, writing to areas that contain program code can be refused, which counters buffer overflow exploits.

Instruction set

The instruction set describes the totality of the machine instructions of a processor. The scope of the instruction set varies considerably depending on the processor type. A large instruction set is typical of processors with CISC architecture (Complex Instruction Set Computing); a small instruction set is typical of processors with RISC architecture (Reduced Instruction Set Computing).

The traditional CISC architecture tries to express more and more complex functions directly through machine commands. It is characterized in particular by the large number of available machine commands, which usually (far) exceeds 100. These commands can also carry out complex operations directly (such as floating point operations), so that complex processes can be implemented with just a few "powerful" commands. The coexistence of complex (lengthy) and simple (quickly executable) commands makes efficient processor design difficult, especially pipeline design.

In the 1980s, the RISC concept emerged as a reaction to this, with the conscious decision not to provide complex functionality in the form of commands. Only simple commands of similar complexity are provided: few of them (fewer than 100), but ones that can be processed very quickly. This significantly simplified the processor design and enabled optimizations that usually allowed a higher clock rate and, in turn, a faster execution speed. Among other reasons, this is because fewer clock cycles are required per command and decoding is faster thanks to the lower complexity. A simpler processor design, however, shifts development effort towards software as the provider of more complex functionality. The compiler now has the task of implementing programs efficiently and correctly with the simplified instruction set.

Today, the widespread x86 architecture - as a (earlier) typical representative of the CISC class - is internally similar to a RISC architecture: Simple commands are mostly direct µ-operations ; complex commands are broken down into µ-ops. These µ-Ops are similar to the simple machine commands of RISC systems, as is the internal processor structure (e.g. there is no longer any microcode for the µ-operations, they are "used directly").

Another type of processor design is VLIW (very long instruction word), in which several instructions are combined into one word. This defines from the outset which instruction runs on which unit. Out-of-order execution, as found in modern processors, does not exist for this type of instruction.

A distinction is also made between the number of addresses in the machine command :

- Zero address commands ( stack computer )

- One-address commands ( accumulator machine )

- Two-, three- and four-address commands (AltiVec, for example, had four-operand commands)
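The difference between zero-address and one-address commands can be made concrete by evaluating the same expression, (2 + 3) * 4, on two toy machines. The sketch below rests on assumptions: the mnemonics `PUSH`, `LOAD`, `ADD` and `MUL` are invented, and operands are given as immediate values for brevity, whereas real machines would typically name memory addresses. Note that only the arithmetic commands of the stack machine are truly address-free; `PUSH` still carries an operand.

```python
def run_stack_machine(program):
    """Zero-address style: arithmetic commands take their operands from a stack."""
    stack = []
    for op, *args in program:
        if op == "PUSH":
            stack.append(args[0])
        elif op == "ADD":
            b, a = stack.pop(), stack.pop()
            stack.append(a + b)
        elif op == "MUL":
            b, a = stack.pop(), stack.pop()
            stack.append(a * b)
        else:
            raise ValueError(f"unknown command {op}")
    return stack.pop()


def run_accumulator_machine(program):
    """One-address style: one operand is named, the other is the accumulator."""
    acc = 0
    for op, operand in program:
        if op == "LOAD":
            acc = operand
        elif op == "ADD":
            acc += operand
        elif op == "MUL":
            acc *= operand
        else:
            raise ValueError(f"unknown command {op}")
    return acc


stack_program = [("PUSH", 2), ("PUSH", 3), ("ADD",), ("PUSH", 4), ("MUL",)]
accumulator_program = [("LOAD", 2), ("ADD", 3), ("MUL", 4)]
print(run_stack_machine(stack_program), run_accumulator_machine(accumulator_program))
```

Both programs compute the same value; the stack version needs more but shorter commands, while the accumulator version encodes one operand in every command.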

Functionality

The processing of commands in a processor core basically follows the Von Neumann cycle .

- "FETCH": The address of the next machine command is read from the command address register . This is then loaded from the main memory (more precisely: from the L1 cache ) into the command register.

- "DECODE": The command decoder decodes the command and activates the appropriate circuits that are necessary for the execution of the command.

- "FETCH OPERANDS": If further data has to be loaded for execution (required parameters), these are loaded from the L1 cache memory into the working registers.

- "EXECUTE": The command is executed. This can be, for example, operations in the arithmetic logic unit, a jump in the program (a change in the command address register), the writing of results back to the main memory or the activation of peripheral devices . The status register , which can be evaluated by subsequent commands, is set depending on the result of some commands .

- "UPDATE INSTRUCTION POINTER": If there was no jump command in the EXECUTE phase, the command address register is now increased by the length of the command so that it points to the next machine command.

Occasionally, a differentiation is also made between a write-back phase , in which any calculation results that may arise are written to certain registers (see Out-of-order execution , step 6). So-called hardware interrupts should also be mentioned . The hardware of a computer can make requests to the processor. Since these requests occur asynchronously, the processor is forced to check regularly whether there are any and to process them before continuing the actual program.
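The phases of the Von Neumann cycle described above can be sketched as a small interpreter loop. This is a toy model under assumptions: the command names `LOAD`, `ADD`, `JNZ` and `HALT`, the single accumulator and the single zero flag are invented for the illustration; operand fetching is trivial because operands are immediate values, and interrupts are not modeled.

```python
def run(program, max_steps=1000):
    """Execute a toy program; returns (accumulator, number of executed commands)."""
    acc, pc, zero = 0, 0, False
    for step in range(1, max_steps + 1):
        instruction = program[pc]        # FETCH: read the command at the command address
        opcode, operand = instruction    # DECODE: split into operation and operand
        jumped = False
        if opcode == "LOAD":             # EXECUTE
            acc = operand
            zero = acc == 0              # status flag for subsequent commands
        elif opcode == "ADD":
            acc += operand
            zero = acc == 0
        elif opcode == "JNZ":            # jump if the last result was not zero
            if not zero:
                pc = operand
                jumped = True
        elif opcode == "HALT":
            return acc, step
        else:
            raise ValueError(f"unknown command {opcode}")
        if not jumped:                   # UPDATE INSTRUCTION POINTER
            pc += 1
    raise RuntimeError("step limit reached")


# Count down from 3 to 0: the loop body is the ADD at address 1.
countdown = [("LOAD", 3), ("ADD", -1), ("JNZ", 1), ("HALT", None)]
print(run(countdown))
```

The command address is only incremented when no jump took place, which mirrors the last phase of the cycle.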

Control

All programs are stored in memory as a sequence of binary machine instructions. Only these commands can be processed by the processor. However, this code is almost impossible for a human to read. For this reason, programs are first written in assembly language or a high-level language (e.g. BASIC, C, C++, Java) and then either translated by a compiler into an executable file, i.e. into machine language, or executed by an interpreter at runtime.

Symbolic machine commands

To make it possible to write programs in an acceptable time and in an understandable way, a symbolic notation for machine commands, so-called mnemonics, was introduced. Machine commands are represented by keywords for operations (e.g. MOV for move, i.e. "move" or "shift") and by optional arguments (such as BX and 85F3h). Since different processor types have different machine commands, they also have different mnemonics. Assembly languages are based on these processor-type-dependent mnemonics and additionally include memory reservation, administration of addresses, macros, function headers, definition of structures, etc.

Processor-independent programming is only possible through the use of abstract languages. These can be high-level languages, but also languages such as FORTH or even RTL . It is not the complexity of the language that is important, but its hardware abstraction.

- MOV BX, 85F3h

(movw $0x85F3, %bx)

The value 85F3h (decimal: 34291) is loaded into register BX.

- MOV BX, word ptr [85F2h]

(movw 0x85F2, %bx)

The contents of the memory cells with the addresses 85F2h and 85F3h (decimal: 34290 and 34291) relative to the start of the data segment (determined by DS) are loaded into register BX. The content of 85F2h is written to the lower-order part BL and the content of 85F3h to the higher-order part BH.

- ADD BX, 15

(addw $15, %bx)

The value 15 is added to the content of the working register BX. The flag register is set according to the result.
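The effect of the three example commands above can be retraced with a short simulation. The snippet is a sketch under assumptions: the function names are invented, the memory contents at 85F2h and 85F3h are made-up values, segment handling via DS is left out, and the flag register is not modeled.

```python
# Hypothetical contents of two memory cells in the data segment
memory = {0x85F2: 0x34, 0x85F3: 0x12}


def mov_immediate(value):
    """MOV BX, value: load a constant into the 16-bit register."""
    return value & 0xFFFF


def mov_from_memory(memory, address):
    """MOV BX, word ptr [address]: load two consecutive bytes."""
    low = memory[address]          # lower address goes to BL
    high = memory[address + 1]     # higher address goes to BH
    return (high << 8) | low


def add(bx, value):
    """ADD BX, value: 16-bit addition (flags omitted in this sketch)."""
    return (bx + value) & 0xFFFF


bx = mov_immediate(0x85F3)
print(bx)                          # decimal value of 85F3h
bx = mov_from_memory(memory, 0x85F2)
print(hex(bx))
bx = add(bx, 15)
print(hex(bx))
```

With the assumed memory contents, the second command yields 1234h in BX, because the byte at the lower address ends up in the lower-order half of the register.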

Binary machine commands

Machine commands are very processor-specific and consist of several parts. These include at least the actual command, the operation code (OP-CODE), the type of addressing , and the operand value or an address. They can be roughly divided into the following categories:

- Arithmetic commands

- Logical commands

- Jump commands

- Transfer commands

- Processor control commands

Command processing

All processors with higher processing power are now modified Harvard architectures, since the pure Harvard architecture causes some problems through its strict separation of commands and data. For example, self-modifying code requires that data can also be executed as commands. Debuggers must also be able to set a breakpoint in the program sequence, which likewise requires a transfer between the command and data address spaces. The Von Neumann architecture can still be found in small microcontrollers. The common address space of commands and data of one or more cores should not mislead here: the command and data address spaces are combined at the level of the cache controllers and their coherence protocols. This applies not only to these two address spaces (where it usually happens at L2 level), but also to those of different cores in multiprocessor systems (where it mostly happens at L3 level) or, in multi-socket systems, when their main memories are combined.

With a few exceptions (e.g. the Sharp SC61860), microprocessors are interruptible: program sequences can be interrupted or aborted by external signals without this having to be provided for in the program sequence. An interrupt system requires, on the one hand, interrupt logic (i.e. commands that can be inserted on demand) and, on the other hand, the ability to save and restore the internal state of the processor so that the original program is not affected. If a microprocessor has no interrupt system, the software must poll the hardware itself.

In addition to the in-order execution of commands, modern high-performance processors in particular use further techniques to accelerate program processing. They employ parallel techniques such as pipelining and superscalarity to process several commands in parallel where possible, with the individual sub-steps of command execution slightly offset from one another. Another way of accelerating program execution is out-of-order execution, in which the commands are not executed strictly in the order specified by the program; instead, the processor itself tries to optimize the order of the commands. The motivation for deviating from the specified command sequence is that, because of branch commands, the program flow cannot always be reliably foreseen. If commands are to be executed in parallel to a certain extent, it is necessary in these cases to decide on one branch and to execute the corresponding command sequence speculatively. The further program flow may then require a different command sequence, so that the speculatively executed commands have to be reversed. In this sense, one speaks of out-of-order command execution.

Addressing types

Machine commands refer to specified source or target objects that they use and/or act on. These objects are specified in coded form as part of the machine command, which is why their effective (logical *) memory address must be determined during or before the actual execution of the command. The result of the calculation is held in special addressing facilities of the hardware (registers) and used when the command is executed. Different addressing types (variants) are used for the calculation, depending on the structure of the command, which is uniformly defined for each command code.

(*) The calculation of the physical addresses based on the logical addresses is independent of this and is usually carried out by a memory management unit .

The following overview lists the most important types of addressing; for more information on addressing, see Addressing (computer architecture).

| Category | Addressing type |
| --- | --- |
| Register addressing | implicit |
| | explicit |
| Single-stage memory addressing | immediate |
| | direct |
| | register-indirect |
| | indexed |
| | program counter-relative |
| Two-stage memory addressing | indirect-absolute |
| | other … |

Register addressing

With register addressing, the operand is already available in a processor register and does not have to be loaded from memory first.

- With implicit register addressing, the register that is implicitly defined for the opcode is addressed (example: the opcode implicitly refers to the accumulator).

- With explicit register addressing, the number of the register is entered in a register field of the machine command.

- Example: | C | R1 | R2 | Add content of R1 to the content of R2; C = command code, Rn = register (n)
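Explicit register addressing can be sketched as follows. The instruction layout (a 16-bit word with an 8-bit command code and two 4-bit register fields), the opcode value `ADD = 0x01` and the choice to store the sum in R2 are assumptions made for this illustration; the text does not specify them.

```python
ADD = 0x01  # hypothetical command code for the register-register add


def encode(opcode: int, r1: int, r2: int) -> int:
    """Pack | C | R1 | R2 | into a 16-bit word: 8-bit opcode, two 4-bit register numbers."""
    return (opcode << 8) | (r1 << 4) | r2


def decode(word: int):
    """Split a 16-bit instruction word back into (opcode, r1, r2)."""
    return (word >> 8) & 0xFF, (word >> 4) & 0xF, word & 0xF


def execute(word: int, regs: list) -> None:
    opcode, r1, r2 = decode(word)
    if opcode == ADD:
        # Both operands are already in registers; no memory access is needed.
        regs[r2] = regs[r2] + regs[r1]
    else:
        raise ValueError(f"unknown opcode {opcode:#x}")


regs = [0] * 16
regs[1], regs[2] = 5, 7
execute(encode(ADD, 1, 2), regs)
print(regs[2])  # 12
```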

Single-stage addressing

With single-stage addressing types, the effective address can be determined by a single address calculation. The memory does not have to be accessed again during the address calculation.

- With immediate addressing, the command contains not an address but the operand itself; this is mostly only practical for short operands such as '0', '1', 'AB', etc.

- With direct addressing, the command contains the logical address itself, so there is no need to calculate the address.

- With register indirect addressing, the logical address is already contained in an address register of the processor. The number of this address register is transferred in the machine command.

- With indexed addressing, the address is calculated by addition: the content of a register is added to an additional address value specified in the command. One of the two values usually contains a base address, while the other contains an offset to this address. See also register types.

- Example: | C | R1 | R2 | O | Load the content at address (content of R2 + offset O) into R1; O = offset

- With program counter-relative addressing, the new address is determined from the current value of the program counter and an offset.
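The single-stage addressing types listed above can be compared in a small sketch. The memory contents, the register name `R2`, the program counter value and the mode names are hypothetical and serve only to show that each mode needs one address calculation and no additional memory access.

```python
def effective_address(mode, field, regs, pc, reg="R2"):
    """Compute the effective address with a single calculation and no memory access."""
    if mode == "direct":
        return field                 # the command holds the logical address itself
    if mode == "register_indirect":
        return regs[reg]             # the address is already in an address register
    if mode == "indexed":
        return regs[reg] + field     # register content + offset from the command
    if mode == "pc_relative":
        return pc + field            # program counter + offset
    raise ValueError(f"unknown addressing mode: {mode}")


def fetch_operand(mode, field, memory, regs, pc, reg="R2"):
    """Return the operand; immediate addressing needs no address at all."""
    if mode == "immediate":
        return field                 # the operand is part of the command
    return memory[effective_address(mode, field, regs, pc, reg)]


memory = [0] * 32
memory[10], memory[14] = 111, 222
regs = {"R2": 10}
pc = 8

print(fetch_operand("immediate", 42, memory, regs, pc))         # 42
print(fetch_operand("direct", 14, memory, regs, pc))            # 222
print(fetch_operand("register_indirect", 0, memory, regs, pc))  # 111
print(fetch_operand("indexed", 4, memory, regs, pc))            # 222
print(fetch_operand("pc_relative", 2, memory, regs, pc))        # 111
```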

Two-stage addressing

With two-stage addressing types, several computing steps are necessary to obtain the effective address. In particular, an additional memory access is usually necessary in the course of the calculation. Indirect absolute addressing is one example: the command contains an absolute memory address, and the memory word found at this address contains the effective address sought. The given address must therefore first be read from memory in order to determine the effective address for executing the command. This characterizes all two-stage procedures.

Example: | C | R1 | R2 | AA | Load into R1 the content of R2 plus the content found at addr(AA); AA = absolute address
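The two memory accesses of indirect absolute addressing can be made explicit in a short sketch. It models only the mechanism described in the paragraph above (the word at the absolute address AA holds the effective address), not the full example command with R1 and R2; the memory contents and the function name are hypothetical.

```python
def load_indirect_absolute(memory: list, aa: int) -> int:
    """Fetch an operand via indirect absolute addressing."""
    effective_address = memory[aa]      # first access: read the effective address
    return memory[effective_address]    # second access: read the operand itself


memory = [0] * 16
memory[3] = 9     # the word at absolute address 3 holds the effective address 9
memory[9] = 77    # the operand is stored at address 9

print(load_indirect_absolute(memory, 3))  # 77
```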

Performance characteristics

The performance of a processor is largely determined by the number of transistors, the word length and the clock rate.

Word length

The word length determines how long a machine word of the processor can be, i.e. the maximum number of bits it can consist of. The following values are decisive:

- Working or data register: The word length determines the maximum size of the processable integers and floating point numbers.

- Data bus : The word length defines how many bits can be read from the main memory at the same time.

- Address bus : The word length defines the maximum size of a memory address, i.e. the maximum size of the main memory.

- Control bus : The word length defines the type of peripheral connections.

The word width of these units is usually the same; in current PCs it is 32 or 64 bits.
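The limits that follow from a given word length can be computed directly. The sketch below assumes two's complement representation for signed integers and byte-wise addressing of the main memory; both are common but are not stated in the text, and the function names are illustrative.

```python
def max_unsigned(bits: int) -> int:
    """Largest unsigned integer that fits into a register of the given width."""
    return 2**bits - 1


def signed_range(bits: int):
    """Smallest and largest signed integer, assuming two's complement."""
    return -(2**(bits - 1)), 2**(bits - 1) - 1


def addressable_bytes(address_bits: int) -> int:
    """Size of the address space, assuming one address per byte."""
    return 2**address_bits


print(max_unsigned(32))                  # 4294967295
print(signed_range(8))                   # (-128, 127)
print(addressable_bytes(32) // 2**30)    # 4 (GiB)
```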

Processor clock

The clock rate is often presented as an assessment criterion for a processor, especially in advertising. However, it is not determined by the processor itself but is a multiple of the mainboard clock. On some mainboards, this multiplier and the base clock can be set manually or in the BIOS, which is referred to as overclocking or underclocking. With many processors, however, the multiplier is locked so that it either cannot be changed at all or only certain values are permitted (often the standard value is also the maximum value, so that only underclocking is possible via the multiplier). Overclocking can cause irreparable damage to the hardware.

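CPU execution time = CPU clock cycles × clock cycle time

The following also applies:

Clock cycle time = 1 / clock rate

CPU execution time = program instructions × CPI × clock cycle time

Here, CPI denotes the average number of clock cycles per instruction.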

However, the speed of the entire system also depends on the size of the caches, the RAM and other factors.
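As a worked example, the relation CPU execution time = program instructions × CPI × clock cycle time, with clock cycle time = 1 / clock rate, can be evaluated for assumed values. The numbers used here (2 billion instructions, a CPI of 1.5, a 3 GHz clock) are invented for illustration only.

```python
def clock_cycle_time(clock_rate_hz: float) -> float:
    """Clock cycle time in seconds: the reciprocal of the clock rate."""
    return 1.0 / clock_rate_hz


def cpu_execution_time(instructions: int, cpi: float, clock_rate_hz: float) -> float:
    """CPU execution time in seconds = instructions x CPI x clock cycle time."""
    return instructions * cpi * clock_cycle_time(clock_rate_hz)


t = cpu_execution_time(2_000_000_000, 1.5, 3e9)
print(f"{t:.3f} s")  # 1.000 s
```

Doubling the clock rate halves this time only if the CPI stays the same, which memory and cache behavior may prevent.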

Some processors can raise or lower the clock rate as needed: it is raised, for example, when watching high-resolution videos or playing games that place high demands on the system, and lowered when the processor is not under much load.

Scope of application

In the field of personal computers, the historically grown x86 architecture is widespread; the corresponding article is recommended for a more detailed discussion of this topic.

Less well known, but more interesting, is the use of embedded processors and microcontrollers in, for example, engine control units, clocks, printers and a large number of other electronically controlled devices.

See also

- Digital signal processor (DSP)

- Microprogram controller

- Ring (CPU)

- Physics Accelerator (PPU)

- Neuromorphic Processor (NPU)

- List of microprocessors

Literature

- Helmut Herold, Bruno Lurz, Jürgen Wohlrab: Grundlagen der Informatik. Pearson Studium, Munich 2007, ISBN 978-3-8273-7305-2.

