Computer science

Computer science is the "science of the systematic representation, storage, processing and transmission of information, especially automatic processing with digital computers". Historically, computer science developed on the one hand from mathematics as a structural science, and on the other hand as an engineering discipline arising from the practical need for fast and, in particular, automatic execution of calculations.

Development of computer science

Origin

Leibniz had already worked with binary number representations. Together with Boolean algebra, first worked out by George Boole in 1847, they form the most important mathematical foundations of later computing systems. In 1937 Alan Turing published his work On Computable Numbers, with an Application to the Entscheidungsproblem, in which he introduced the Turing machine named after him, a mathematical machine model that is still of great importance for theoretical computer science today. To this day, the concept of computability rests on universal models such as the Turing machine and on variants of these models; complexity theory, which builds on them, began to develop in the 1960s.

Etymology

The German word Informatik was formed by adding the suffix -ik to the root of Information. The term Informatik was coined by Karl Steinbuch as an artificial word from Information and Technik (technology) at Standard Elektrik AG (SEG), whose computer science department he headed. He defined the term in 1957 in the SEG news, in his publication "Informatik: Automatische Informationsverarbeitung" ("Informatics: Automatic Information Processing"). To emphasize the importance of automation or mathematics for computer science, Informatik is sometimes explained as a portmanteau of Information and Automatik (automation) or of Information and Mathematik (mathematics).

After an international colloquium in Dresden on February 26, 1968, Informatik established itself in the German-speaking area as the name of the discipline, following the French (informatique) and Russian (Информатика) models. In July of the same year, the term Informatik was used for the first time as the German name for a new subject in a Berlin speech by Minister Gerhard Stoltenberg. While the term computer science is common in the English-speaking world, a literal German equivalent has not caught on. However, the term informatics is used in English for certain parts of applied informatics, for example in bioinformatics or geoinformatics. When translating into English, the term Informatics is sometimes preferred to Computer Science in German-speaking countries.

Development of computer science into an academic discipline

In Germany, the beginnings of computer science go back to the Institute for Practical Mathematics (IPM) of the Technical University of Darmstadt (TH Darmstadt), which the mathematician Alwin Walther had built up from 1928 onward. In 1956, the first students were able to work on computing problems using the Darmstadt electronic calculator. At the same time, the first programming lectures and internships were offered at the TH Darmstadt. Owing to the reputation the TH Darmstadt then enjoyed in computer research, the first congress on the subject of computing (electronic calculating machines and information processing) with international participation took place there in October 1955. In the 1960s, Germany lacked competitiveness in the field of data processing (DV). To counteract this, on April 26, 1967, the Federal Committee for Scientific Research adopted the program for the promotion of research and development in the field of data processing for public tasks. The so-called "Advisory Board for Data Processing", which consisted mainly of representatives of universities and non-university research institutions, was responsible for its implementation. At the seventh meeting of the advisory board on November 15, 1967, Karl Ganzhorn, then responsible for research and development at IBM Germany, pointed out the difficulties industry had in finding qualified personnel. Robert Piloty, director of the Institute for Message Processing at the TH Darmstadt, pointed out that the German universities were responsible for training qualified staff. As a result, the committee "IT chairs and training" was formed, chaired by Piloty. The committee formulated recommendations for the training of computer scientists, which provided for the establishment of computer science degree programs at several universities and technical colleges.
In 1967, on the initiative of Friedrich Ludwig Bauer, the Technical University of Munich offered a course of study in information processing within its mathematics program. In 1968 the TH Darmstadt introduced the first computer science program. In 1969 the program "data technology (technical informatics)" followed, and in 1970 a mathematics program concluding with the degree "Diplom-Ingenieur in mathematics with a focus on computer science". On September 1, 1969, the Technical University of Dresden became the first university in the GDR to begin training Diplom-Ingenieure in information processing. Also in 1969, the Furtwangen engineering school (later Furtwangen University of Applied Sciences) began training in this field, here still called information technology. In the winter semester of 1969/70, the University of Karlsruhe (today the Karlsruhe Institute of Technology) became the first German university to offer a degree program in computer science leading to a Diplom degree in computer science. In the winter semester of 1969/70, the Johannes Kepler University (JKU) Linz became the first Austrian university to offer a specialization in computer science leading to the Diplom-Ingenieur degree. The Technical University of Vienna followed in the 1970/71 winter semester. A few years later, the first computer science faculties were founded, whereas a Department of Computer Science had already existed at Purdue University since 1962. Today, computer science is classified neither purely as an engineering discipline nor purely as a mathematical-scientific one, but as a discipline that combines both.

Organizations

The Gesellschaft für Informatik (GI) was founded in 1969 and is the largest professional society in the German-speaking countries. Internationally, the two large American associations are particularly important: the Association for Computing Machinery (ACM), founded in 1947, and the Institute of Electrical and Electronics Engineers (IEEE), founded in 1963. The most important German-speaking organization dealing with the ethical and social effects of computer science is the Forum of Computer Scientists for Peace and Social Responsibility (FIfF).

Calculating machines - forerunners of the computer

The efforts to develop two types of machines can be seen as the first forerunners of computer science beyond mathematics: machines that carry out or simplify mathematical calculations ("calculating machines") and machines with which logical conclusions can be drawn and arguments checked ("logical machines"). As simple computing devices, the abacus and later the slide rule rendered invaluable services. In 1641 Blaise Pascal constructed a mechanical calculating machine that could carry out additions and subtractions, including carries. Only a little later, Gottfried Wilhelm Leibniz presented a calculating machine that could perform all four basic arithmetic operations. These machines were based on intermeshing gears. A step towards greater flexibility was taken from 1838 onwards by Charles Babbage, who aimed to control arithmetic operations using punched cards. It was only Herman Hollerith who, thanks to technical progress, was able to implement this idea profitably from 1886 onwards. His counting machines based on punched cards were used, among other things, to evaluate a census in the USA.
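How carries work on digit wheels can be illustrated with a small C model. This is a minimal sketch under assumed conventions, not a reconstruction of Pascal's or Leibniz's mechanisms: each wheel is an array cell holding one decimal digit, `wheels[0]` is the units wheel, and the function names `add_digit` and `add_number` are hypothetical. A wheel that passes from 9 back to 0 advances its left neighbour by one step.

```c
#include <stddef.h>

/* Adds `amount` (a single digit 0..9) to wheel `pos` and propagates carries
   to the higher wheels, the way a full turn of one wheel advances the next.
   Returns 1 if a carry falls off the highest wheel (overflow), else 0. */
int add_digit(int wheels[], size_t n_wheels, size_t pos, int amount) {
    int carry = amount;
    for (size_t i = pos; i < n_wheels && carry != 0; ++i) {
        int sum = wheels[i] + carry;
        wheels[i] = sum % 10;   /* digit that stays on this wheel */
        carry = sum / 10;       /* 0 or 1: passed on to the next wheel */
    }
    return carry != 0;
}

/* Adds a non-negative number digit by digit, starting with the units.
   Returns 1 on overflow (more digits or carries than wheels), else 0. */
int add_number(int wheels[], size_t n_wheels, unsigned long value) {
    int overflow = 0;
    for (size_t pos = 0; value != 0; ++pos, value /= 10) {
        if (pos >= n_wheels) return 1;
        overflow |= add_digit(wheels, n_wheels, pos, (int)(value % 10));
    }
    return overflow;
}
```

For example, with four wheels showing 0999 (stored as `{9, 9, 9, 0}`), adding 1 makes the carry ripple through three wheels and yields 1000.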

The history of logical machines is often traced back to Ramon Llull in the 13th century. Although his constructions resembling calculating disks, in which several mutually rotating disks could represent different combinations of terms, were mechanically not very complex, he was probably the one who made the idea of a logical machine known. Apart from this very early forerunner, logical machines appeared even later than calculating machines: a slide-rule-like device by the third Earl Stanhope, credited with checking the validity of syllogisms (in the Aristotelian sense), dates from 1777. The first real "machine" that has come down to us is Jevons's "Logical Piano" from the late 19th century. Only a little later, mechanics were replaced by electromechanical and electrical circuits. Logical machines reached their peak in the 1940s and 1950s, for example with the machines of the English manufacturer Ferranti. With the development of universal digital computers, as opposed to calculating machines, the history of independent logical machines came to an abrupt end, because the tasks they performed were increasingly implemented in software on precisely those computers whose hardware predecessors they had been.
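The kind of check such logical machines mechanized can be sketched in C. This is an illustration under stated assumptions, not a model of Stanhope's or Jevons's actual devices: only universal premises of the form "All X are Y" over three terms A, B, C are handled, existential import is ignored (modern reading), and the type `AllStatement` and the function names are hypothetical. The idea is to list all eight combinations of the terms, discard those the premises rule out, and test whether every surviving combination satisfies the conclusion.

```c
#include <stdbool.h>

/* "All <subject> are <predicate>", with terms given as indices 0..2 (A, B, C). */
typedef struct { int subject; int predicate; } AllStatement;

/* True if the combination `combo` (bit i set = term i applies) is compatible
   with the statement, i.e. it is not a "subject but not predicate" case. */
static bool allows(AllStatement s, unsigned combo) {
    bool has_subject   = (combo >> s.subject) & 1u;
    bool has_predicate = (combo >> s.predicate) & 1u;
    return !has_subject || has_predicate;
}

/* The syllogism is valid iff no combination that survives both premises
   contradicts the conclusion. */
bool syllogism_valid(AllStatement p1, AllStatement p2, AllStatement conclusion) {
    for (unsigned combo = 0; combo < 8; ++combo) {
        if (allows(p1, combo) && allows(p2, combo) && !allows(conclusion, combo))
            return false;   /* a surviving combination refutes the conclusion */
    }
    return true;
}
```

With A=0, B=1, C=2, the classic form "All A are B; all B are C; therefore all A are C" is reported valid, while "All A are B; all C are B; therefore all A are C" is rejected, because the combination "A and B but not C" survives both premises.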

Development of modern calculating machines

One of the first large computing machines was the still purely mechanical Z1 of 1937, built by Konrad Zuse. Four years later, Zuse realized his idea using electric relays: the Z3 of 1941, the world's first functional programmable digital computer, already separated instruction and data storage from the input/output console. A little later, efforts to build calculating machines for breaking German encrypted messages were pushed ahead with great success under the direction of Alan Turing (Turing Bombe) and Thomas Flowers (Colossus). In parallel, Howard Aiken developed the Mark I (1944), the first program-controlled relay computer in the USA, where development was significantly advanced. Further relay computers were built at Bell Laboratories (George Stibitz). The Atanasoff-Berry Computer is considered the first vacuum-tube computer. A key figure in these developments was John von Neumann, after whom the von Neumann architecture, still important today, is named. In 1946 the vacuum-tube computer ENIAC was presented, and in 1949 the EDSAC was built. In 1948, IBM began developing computers and became the market leader within ten years. With the development of transistor and microprocessor technology, computers became ever more powerful and inexpensive from this time on. In 1982, Commodore finally opened up the mass market with the C64, primarily for home users but also well beyond.

Programming languages

The invention of "automatic programming" by Heinz Rutishauser (1951) was significant for the development of programming languages. In 1956, Noam Chomsky described a hierarchy of formal grammars to which formal languages and specific machine models correspond. These formalizations became very important for the development of programming languages. Important milestones were the development of Fortran (from English "FORmula TRANslation"; the first high-level programming language, 1957), ALGOL (from English "ALGOrithmic Language"; structured/imperative; 1958/1960/1968), Lisp (from English "LISt Processing"; functional, 1959), COBOL (from English "COmmon Business-Oriented Language", a programming language for commercial applications, 1959), Smalltalk (object-oriented, 1971), Prolog (logic programming, 1972) and SQL (relational databases, 1976). Some of these languages represent typical programming paradigms of their respective time. Other programming languages that have long been in use are BASIC (since 1964), C (since 1972), Pascal (since 1971), Objective-C (object-oriented, 1984), C++ (object-oriented, generic, multi-paradigm, since 1985), Java (object-oriented, since 1995) and C# (object-oriented, around 2000). Changes in languages and paradigms were intensively accompanied or promoted by computer science research.

As with other sciences, there is also an increasing trend towards specialization.

Computer science disciplines

Computer science is divided into the sub-areas of theoretical computer science, practical computer science and technical computer science.

The applications of computer science in the various areas of daily life and in other specialist fields, such as business informatics, geoinformatics and medical informatics, are referred to as applied informatics. The effects on society are also examined in an interdisciplinary manner.

Theoretical computer science forms the theoretical basis for the other sub-areas. It provides fundamental knowledge about the decidability of problems, the classification of their complexity and the modeling of automata and formal languages.

The disciplines of practical and technical computer science build on these findings. They deal with central problems of information processing and look for applicable solutions.

The results are ultimately used in applied computer science. Hardware and software implementations, and with them a large part of the commercial IT market, belong to this area. In addition, the interdisciplinary subjects investigate how information technology can solve problems in other areas of science, such as the development of spatial databases for geography, but also in business informatics or bioinformatics.

Theoretical computer science

As the backbone of computer science, theoretical computer science deals with the abstract, mathematics-oriented aspects of the field. The area is broad and covers topics from theoretical linguistics (theory of formal languages or automata theory), computability theory and complexity theory. The aim of these sub-areas is to provide comprehensive answers to fundamental questions such as "What can be computed?" and "How effectively or efficiently can something be computed?".

Automata Theory and Formal Languages

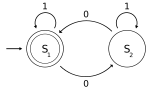

In computer science, automata are "imaginary machines" that behave according to certain rules. A finite automaton has a finite set of internal states. It reads an "input word" character by character and performs a state transition for each character. In addition, it can output an "output symbol" with every state transition. When the input is complete, the automaton either accepts or rejects the input word.
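A finite automaton of this kind can be written as a transition table plus a loop over the input. The following is a minimal sketch of an acceptor (it emits no output symbols); the example language, binary numbers divisible by 3, and the names `delta` and `divisible_by_3` are chosen for illustration only. State r stands for "the number read so far leaves remainder r modulo 3", so reading bit b moves from r to (2r + b) mod 3.

```c
#include <stdbool.h>

/* Transition table of a DFA with states 0, 1, 2 over the alphabet {'0','1'}. */
static const int delta[3][2] = {
    /* on '0'  on '1' */
    {   0,      1 },   /* state 0 */
    {   2,      0 },   /* state 1 */
    {   1,      2 },   /* state 2 */
};

/* Reads the input word character by character, performs one state transition
   per character, and accepts iff the final state is the accepting state 0.
   Characters outside the alphabet reject the word; the empty word (value 0)
   is accepted. */
bool divisible_by_3(const char *word) {
    int state = 0;                         /* start state */
    for (const char *p = word; *p != '\0'; ++p) {
        if (*p != '0' && *p != '1') return false;
        state = delta[state][*p - '0'];
    }
    return state == 0;
}
```

For example, "110" (6) and "1001" (9) are accepted, while "10" (2) and "111" (7) are rejected. The automaton needs only three states no matter how long the input is.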

The formal language approach has its origins in linguistics and is therefore well suited to describing programming languages. However, formal languages can also be described by machine models, since the set of all words accepted by a machine can be viewed as a formal language.

More complex models have a memory, for example pushdown automata or the Turing machine, which according to the Church-Turing thesis can compute every function that can be computed by humans.
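The role of a pushdown automaton's memory can be sketched with an explicit stack. The example language, well-nested words over the brackets ( ) [ ], is context-free but cannot be recognized by any finite automaton. This is a simplified sketch: a real pushdown automaton has an unbounded stack, whereas the hypothetical limit `MAX_DEPTH` caps it here, and the function name `well_nested` is illustrative.

```c
#include <stdbool.h>
#include <stddef.h>

#define MAX_DEPTH 256   /* assumption of this sketch: bounded stack */

/* Opening brackets are pushed; each closing bracket must match the symbol on
   top of the stack, which is then popped. The word is accepted only if the
   stack is empty at the end. */
bool well_nested(const char *word) {
    char stack[MAX_DEPTH];
    size_t top = 0;                              /* number of stacked symbols */
    for (const char *p = word; *p != '\0'; ++p) {
        char c = *p;
        if (c == '(' || c == '[') {
            if (top == MAX_DEPTH) return false;  /* sketch limit exceeded */
            stack[top++] = c;                    /* push */
        } else if (c == ')' || c == ']') {
            char expected = (c == ')') ? '(' : '[';
            if (top == 0 || stack[top - 1] != expected) return false;
            --top;                               /* pop */
        } else {
            return false;                        /* not in the alphabet */
        }
    }
    return top == 0;
}
```

For instance, "([])[]" is accepted, while "([)]" and "((" are rejected.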

Computability theory

In computability theory, theoretical computer science examines which problems can be solved with which machines. A computer model or a programming language is called Turing-complete if it can be used to simulate a universal Turing machine. All computers and most programming languages used today are Turing-complete (assuming unlimited memory), which means that they can all solve the same tasks. Alternative models of computation such as the lambda calculus, WHILE programs, μ-recursive functions and register machines also turned out to be Turing-complete. These findings gave rise to the Church-Turing thesis, which cannot be formally proven but is generally accepted.
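A Turing machine is fully described by a transition function that maps (state, symbol) to (symbol to write, head movement, next state). The following table-driven simulator is a minimal sketch: the example machine, which increments a binary number, the bounded tape length `TAPE_LEN`, and all names are assumptions for illustration (a true Turing machine has an unbounded tape).

```c
#include <stdbool.h>
#include <string.h>

#define TAPE_LEN 64     /* assumption: bounded tape for the sketch */
#define BLANK '_'

enum { Q_RIGHT, Q_CARRY, Q_HALT };   /* states of the example machine */

typedef struct {
    char write;   /* symbol written to the current cell */
    int  move;    /* -1 = left, +1 = right, 0 = stay */
    int  next;    /* next state */
} Action;

/* Transition function: Q_RIGHT walks to the right end of the number;
   Q_CARRY moves left turning 1s into 0s until it can write a 1. */
static Action delta(int state, char symbol) {
    switch (state) {
    case Q_RIGHT:
        if (symbol == BLANK) return (Action){BLANK, -1, Q_CARRY};
        return (Action){symbol, +1, Q_RIGHT};
    case Q_CARRY:
        if (symbol == '1') return (Action){'0', -1, Q_CARRY};
        return (Action){'1', 0, Q_HALT};   /* '0' or blank becomes '1' */
    default:
        return (Action){symbol, 0, Q_HALT};
    }
}

/* Runs the machine on a binary `input` and copies the final non-blank tape
   contents to `out` (at least TAPE_LEN bytes). Returns false if the input
   does not fit on the bounded tape. */
bool tm_increment(const char *input, char *out) {
    char tape[TAPE_LEN];
    size_t len = strlen(input);
    if (len + 2 >= TAPE_LEN) return false;
    memset(tape, BLANK, sizeof tape);
    memcpy(tape + 1, input, len);          /* one blank cell left of the input */
    int head = 1, state = Q_RIGHT;
    while (state != Q_HALT) {
        Action a = delta(state, tape[head]);
        tape[head] = a.write;
        head += a.move;
        state = a.next;
    }
    size_t start = 0, end = TAPE_LEN;      /* trim surrounding blanks */
    while (start < TAPE_LEN && tape[start] == BLANK) ++start;
    while (end > start && tape[end - 1] == BLANK) --end;
    memcpy(out, tape + start, end - start);
    out[end - start] = '\0';
    return true;
}
```

Running it on "1011" leaves "1100" on the tape, and "111" becomes "1000", where the carry runs into the blank cell to the left of the input.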

The concept of decidability can be illustrated by asking whether a certain problem can be solved algorithmically. For example, it is decidable whether a text is a syntactically correct program. An undecidable problem, on the other hand, is the question of whether a given program with given input ever terminates; this is known as the halting problem.

Complexity theory

Complexity theory deals with the resource requirements of algorithmically solvable problems on various mathematically defined formal computer models, as well as with the quality of the algorithms that solve them. In particular, the resources "runtime" and "storage space" are examined, and their requirements are usually expressed in Landau notation. First and foremost, runtime and storage requirements are stated as functions of the length of the input. Landau notation assigns algorithms whose running time or storage requirements differ by at most a constant factor to the same class, i.e., a set of problems whose solution requires an equivalent running time.

An algorithm whose running time is independent of the input length works "in constant time"; one writes $O(1)$. For example, the program "return the first item in a list" works in constant time. The program "check whether a certain element is contained in an unsorted list of length $n$" needs "linear time", that is, $O(n)$, because in the worst case the entire input list has to be read exactly once.
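The two example programs from the paragraph above can be written in C as follows. This is a minimal sketch; the function names `first_item` and `contains` are illustrative, and the list is assumed to be a plain array with its length passed separately.

```c
#include <stdbool.h>
#include <stddef.h>

/* O(1): a single array access, independent of the length n.
   Precondition: n >= 1. */
int first_item(const int *list, size_t n) {
    (void)n;               /* the length does not influence the work done */
    return list[0];
}

/* O(n): in the worst case (element absent or in the last position) each of
   the n entries of the unsorted list is read exactly once. */
bool contains(const int *list, size_t n, int x) {
    for (size_t i = 0; i < n; ++i)
        if (list[i] == x) return true;
    return false;
}
```

Doubling the length of the list leaves the cost of `first_item` unchanged but doubles the worst-case number of comparisons in `contains`.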

For most problems, complexity theory has so far provided only upper bounds on resource requirements, because methods for proving exact lower bounds are hardly developed and such bounds are known for only a few problems (for example, sorting a list of values by comparisons under a given order relation has the lower bound $\Omega(n \log n)$). Nevertheless, there are methods for classifying particularly difficult problems as such, and the theory of NP-completeness plays a central role here. According to this theory, a problem is particularly difficult if solving it would automatically also solve all other problems in NP without using significantly more resources, that is, with at most polynomial overhead.
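The lower bound for sorting by comparisons referred to above follows from a short counting argument, given here in the standard decision-tree formulation. Any comparison-based sort of $n$ distinct values must distinguish all $n!$ possible orderings, so its binary decision tree, whose height $h$ is the worst-case number of comparisons, has at least $n!$ leaves:

$$
2^{h} \ge n! \;\Longrightarrow\; h \ge \log_2 n! \ge \log_2 \left(\frac{n}{2}\right)^{n/2} = \frac{n}{2}\,\log_2 \frac{n}{2} \in \Omega(n \log n)
$$

The middle inequality uses $n! \ge \prod_{k=\lceil n/2 \rceil}^{n} k \ge (n/2)^{n/2}$: that product has at least $n/2$ factors, each of which is at least $n/2$.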

The biggest open question in complexity theory is "P = NP?". It is one of the Millennium Prize Problems, for each of which the Clay Mathematics Institute offers a prize of US$1 million. If P is not equal to NP, NP-complete problems cannot be solved efficiently, i.e., in polynomial time.
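The asymmetry behind "P = NP?" can be made concrete with the subset-sum problem, which is NP-complete. The problem itself is not mentioned in the text and serves only as an illustration: checking a proposed solution (a certificate) takes linear time, while the naive search below tries all $2^n$ candidates. No polynomial-time algorithm for NP-complete problems is known. The function names are hypothetical, and the search assumes `n` is smaller than the number of bits in `unsigned long`.

```c
#include <stdbool.h>
#include <stddef.h>

/* Verifier: checks a certificate (bit i of `mask` set = item i chosen) in
   O(n) time. Efficient checkability is what places the problem in NP. */
bool verify_subset(const int *items, size_t n, long target, unsigned long mask) {
    long sum = 0;
    for (size_t i = 0; i < n; ++i)
        if ((mask >> i) & 1ul) sum += items[i];
    return sum == target;
}

/* Brute-force search over all 2^n subsets, i.e. exponential time.
   On success, stores a witness in *found_mask. */
bool subset_sum(const int *items, size_t n, long target, unsigned long *found_mask) {
    for (unsigned long mask = 0; mask < (1ul << n); ++mask) {
        if (verify_subset(items, n, target, mask)) {
            *found_mask = mask;
            return true;
        }
    }
    return false;
}
```

For the items {3, 34, 4, 12, 5, 2}, a subset summing to 9 exists (for example 4 + 5), whereas none sums to 30. Each additional item doubles the search space but adds only one step to verification.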

Theory of programming languages

This area deals with the theory, analysis, characterization and implementation of programming languages and is actively researched in both practical and theoretical computer science. The sub-area strongly influences related subjects such as parts of mathematics and linguistics.

Formal Methods Theory

The theory of formal methods deals with a variety of techniques for the formal specification and verification of software and hardware systems. The motivation for this area comes from engineering thinking: rigorous mathematical analysis helps improve the reliability and robustness of a system. These properties are particularly important in systems operating in safety-critical areas. Researching such methods requires, among other things, knowledge of mathematical logic and formal semantics.
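A formal specification typically consists of a precondition, a postcondition and, for loops, an invariant. The following C sketch only illustrates these notions: the contract is checked at run time with `assert`, which tests individual executions, whereas a formal verification tool would try to prove it for all admissible inputs. The example function `isqrt` (integer square root) and its input range are assumptions chosen for illustration.

```c
#include <assert.h>

/* Specification (Hoare-style):
     precondition:   0 <= n <= 1000000000
     postcondition:  r*r <= n < (r+1)*(r+1)
     loop invariant: r*r <= n
   The asserts check the contract only for the executions that actually run
   and are removed entirely when compiled with NDEBUG. */
long isqrt(long n) {
    assert(0 <= n && n <= 1000000000L);           /* precondition */
    long r = 0;
    while ((r + 1) * (r + 1) <= n) {
        assert(r * r <= n);                        /* loop invariant */
        ++r;
    }
    assert(r * r <= n && n < (r + 1) * (r + 1));   /* postcondition */
    return r;
}
```

The postcondition follows from the invariant together with the negated loop condition, which is exactly the reasoning step a proof in Hoare logic would make explicit.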

Practical computer science

Practical computer science develops basic concepts and methods for solving specific real-world problems, such as managing data in data structures or developing software. The development of algorithms plays an important role here. Examples are sorting and search algorithms.
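Binary search is a classic example of a search algorithm: on a sorted array it halves the remaining search range with each comparison, so it needs only about $\log_2 n$ steps instead of the $n$ steps of a linear scan. This is a minimal sketch; the function name and the convention of returning -1 for "not found" are illustrative choices.

```c
#include <stddef.h>

/* Searches x in an array sorted in ascending order.
   Returns the index of x, or -1 if x is not present. */
long binary_search(const int *sorted, size_t n, int x) {
    size_t lo = 0, hi = n;                 /* current search range [lo, hi) */
    while (lo < hi) {
        size_t mid = lo + (hi - lo) / 2;   /* avoids overflow of lo + hi */
        if (sorted[mid] < x)
            lo = mid + 1;                  /* x can only be in the right half */
        else if (sorted[mid] > x)
            hi = mid;                      /* x can only be in the left half */
        else
            return (long)mid;
    }
    return -1;
}
```

The precondition that the array is sorted matters: on unsorted data the result is meaningless, which is why searching and sorting are usually studied together.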

One of the central topics of practical computer science is software technology (also called software engineering). It deals with the systematic creation of software. It also develops concepts and solutions for large software projects that are intended to allow a repeatable process from the idea to the finished software.

C source code:

```c
/**
 * Computes the greatest common divisor (German: ggT) of two numbers
 * using the Euclidean algorithm; intended for non-negative arguments.
 */
int ggt(int zahl1, int zahl2) {
    int temp;
    /* Replace (zahl1, zahl2) by (zahl2, zahl1 mod zahl2) until the
       remainder is 0; zahl1 then holds the gcd. */
    while (zahl2 != 0) {
        temp = zahl1 % zahl2;
        zahl1 = zahl2;
        zahl2 = temp;
    }
    return zahl1;
}
```

→ Compiler → machine code (schematic):

… 0010 0100 1011 0111 1000 1110 1100 1011 0101 1001 0010 0001 0111 0010 0011 1101 0001 0000 1001 0100 1000 1001 1011 1110 0001 0011 0101 1001 0111 0010 0011 1101 0001 0011 1001 1100 …

An important topic in practical computer science is compiler construction, which is also studied in theoretical computer science. A compiler is a program that translates other programs from a source language (for example Java or C++) into a target language. A compiler enables people to develop software in a more abstract language than the machine language used by the CPU.
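The principle of a compiler can be sketched at a very small scale. The following C code translates arithmetic expressions made of single digits, `+`, `*` and parentheses into instructions for a stack machine, and then executes them. Everything here is hypothetical and for illustration: the three-instruction target language (`PUSH`, `ADD`, `MUL`) is not the machine code of any real CPU, and real compilers add many further phases such as lexical analysis, type checking and optimization. The parser is a recursive-descent parser for the grammar given in the comments; `*` binds more tightly than `+` because `term` sits below `expr` in the grammar.

```c
#include <stdbool.h>
#include <stddef.h>

/* Target language: a hypothetical stack machine. */
typedef enum { PUSH, ADD, MUL } Op;
typedef struct { Op op; int value; } Instr;

typedef struct {
    const char *src;     /* remaining source text */
    Instr *code;         /* output buffer */
    size_t len, cap;
    bool ok;
} Compiler;

static void emit(Compiler *c, Op op, int value) {
    if (c->len == c->cap) { c->ok = false; return; }
    c->code[c->len++] = (Instr){op, value};
}

static void expr(Compiler *c);

/* factor := digit | '(' expr ')' */
static void factor(Compiler *c) {
    char ch = *c->src;
    if (ch >= '0' && ch <= '9') {
        emit(c, PUSH, ch - '0');
        c->src++;
    } else if (ch == '(') {
        c->src++;
        expr(c);
        if (*c->src == ')') c->src++; else c->ok = false;
    } else {
        c->ok = false;
    }
}

/* term := factor { '*' factor } */
static void term(Compiler *c) {
    factor(c);
    while (c->ok && *c->src == '*') { c->src++; factor(c); emit(c, MUL, 0); }
}

/* expr := term { '+' term } */
static void expr(Compiler *c) {
    term(c);
    while (c->ok && *c->src == '+') { c->src++; term(c); emit(c, ADD, 0); }
}

/* Translates `src` into stack-machine code. Returns the number of generated
   instructions, or 0 on a syntax error or if `cap` is too small. */
size_t compile(const char *src, Instr *code, size_t cap) {
    Compiler c = { src, code, 0, cap, true };
    expr(&c);
    if (!c.ok || *c.src != '\0') return 0;
    return c.len;
}

/* Executes code produced by compile(); assumes len <= 64, so the operand
   stack cannot overflow (its depth never exceeds the number of PUSHes). */
int run(const Instr *code, size_t len) {
    int stack[64];
    size_t sp = 0;
    for (size_t i = 0; i < len; ++i) {
        switch (code[i].op) {
        case PUSH: stack[sp++] = code[i].value; break;
        case ADD:  sp--; stack[sp - 1] += stack[sp]; break;
        case MUL:  sp--; stack[sp - 1] *= stack[sp]; break;
        }
    }
    return stack[0];
}
```

For example, `compile("2*(3+4)", ...)` produces the five instructions PUSH 2, PUSH 3, PUSH 4, ADD, MUL, which evaluate to 14.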

An example of the use of data structures is the B-tree, which allows fast searches in large data sets in databases and file systems.
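The core of a B-tree is that every node holds several sorted keys and the tree grows only at the root, so all leaves stay at the same depth. The following compact in-memory sketch follows the classic textbook formulation with minimum degree t (here `T = 2`, so nodes hold 1 to 3 keys). Real database and file-system B-trees store nodes in disk pages and use much larger degrees, and deletion is omitted here. All names are illustrative.

```c
#include <stdbool.h>
#include <stdlib.h>

#define T 2   /* minimum degree: each node holds T-1 .. 2T-1 keys */

typedef struct Node {
    int n;                       /* number of keys currently stored */
    int keys[2 * T - 1];         /* keys in ascending order */
    struct Node *child[2 * T];   /* child i holds keys between keys[i-1] and keys[i] */
    bool leaf;
} Node;

static Node *new_node(bool leaf) {
    Node *x = calloc(1, sizeof *x);
    if (!x) abort();             /* sketch: no recovery from out-of-memory */
    x->leaf = leaf;
    return x;
}

/* Search: in each node find the first key >= k, then descend if needed. */
bool btree_search(const Node *x, int k) {
    while (x) {
        int i = 0;
        while (i < x->n && k > x->keys[i]) i++;
        if (i < x->n && k == x->keys[i]) return true;
        if (x->leaf) return false;
        x = x->child[i];
    }
    return false;
}

/* Splits the full child y = x->child[i]; its median key moves up into x. */
static void split_child(Node *x, int i) {
    Node *y = x->child[i];
    Node *z = new_node(y->leaf);
    z->n = T - 1;
    for (int j = 0; j < T - 1; j++) z->keys[j] = y->keys[j + T];
    if (!y->leaf)
        for (int j = 0; j < T; j++) z->child[j] = y->child[j + T];
    y->n = T - 1;
    for (int j = x->n; j >= i + 1; j--) x->child[j + 1] = x->child[j];
    x->child[i + 1] = z;
    for (int j = x->n - 1; j >= i; j--) x->keys[j + 1] = x->keys[j];
    x->keys[i] = y->keys[T - 1];
    x->n++;
}

/* Inserts k into the subtree rooted at the non-full node x. */
static void insert_nonfull(Node *x, int k) {
    int i = x->n - 1;
    if (x->leaf) {
        while (i >= 0 && k < x->keys[i]) { x->keys[i + 1] = x->keys[i]; i--; }
        x->keys[i + 1] = k;
        x->n++;
    } else {
        while (i >= 0 && k < x->keys[i]) i--;
        i++;
        if (x->child[i]->n == 2 * T - 1) {   /* split full child before descending */
            split_child(x, i);
            if (k > x->keys[i]) i++;
        }
        insert_nonfull(x->child[i], k);
    }
}

/* Inserts k and returns the (possibly new) root. */
Node *btree_insert(Node *root, int k) {
    if (!root) root = new_node(true);
    if (root->n == 2 * T - 1) {              /* full root: tree grows by one level */
        Node *s = new_node(false);
        s->child[0] = root;
        split_child(s, 0);
        insert_nonfull(s, k);
        return s;
    }
    insert_nonfull(root, k);
    return root;
}

void btree_free(Node *x) {
    if (!x) return;
    if (!x->leaf)
        for (int i = 0; i <= x->n; i++) btree_free(x->child[i]);
    free(x);
}
```

Because each node holds many keys in a real B-tree, the height, and with it the number of disk pages read per search, stays very small even for large data sets.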

Technical computer science

Technical computer science deals with the hardware-based foundations of computer science, such as microprocessor technology, computer architecture, embedded and real-time systems and computer networks, together with the associated low-level software and specially developed modeling and evaluation methods.

Microprocessor technology, computer design process

Microprocessor technology is dominated by the rapid development of semiconductor technology. Feature sizes in the nanometer range make it possible to miniaturize highly complex circuits with several billion individual components. This complexity can only be mastered with sophisticated design tools and powerful hardware description languages. The path from the idea to the finished product leads through many stages, which are largely computer-aided and ensure a high degree of accuracy and freedom from errors. If hardware and software are designed together because of high performance requirements, this is known as hardware-software codesign.

Architectures

Computer architecture, or system architecture, is the field that researches concepts for the construction of computers and systems. Computer architecture defines and improves, for example, the interaction of processors, memory, control units (controllers) and peripherals. The research area is guided both by the requirements of software and by the possibilities arising from the further development of integrated circuits. One approach is reconfigurable hardware such as FPGAs (Field Programmable Gate Arrays), whose circuit structure can be adapted to the respective requirements.

Building on the architecture of the sequentially operating von Neumann machine, present-day computers usually consist of a processor, which may itself contain several processor cores, memory controllers and a whole hierarchy of cache memories; a main memory (primary memory) designed as random-access memory (RAM); and input/output interfaces to secondary storage (e.g., hard disks or SSDs). Because of the many areas of application, a wide range of processors is in use today, from simple microcontrollers, e.g., in household appliances, through particularly energy-efficient processors in mobile devices such as smartphones or tablet computers, to internally parallel high-performance processors in personal computers and servers. Parallel computers, in which arithmetic operations can be carried out on several processors at the same time, are gaining in importance. Progress in chip technology already makes it possible to implement a large number of processor cores (currently 100 to 1000) on a single chip (multi-core processors, multi/many-core systems, "system-on-a-chip" (SoC)).
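The essence of the von Neumann machine, with instructions and data in one shared memory and a processor that sequentially fetches, decodes and executes instructions, can be sketched as a tiny simulator. The accumulator instruction set (`HALT`, `LOAD`, `ADD`, `SUB`, `STORE`, `JNZ`), the two-cell instruction format and the memory size are hypothetical and do not describe any real processor; caches, pipelines and multiple cores are deliberately left out.

```c
#include <stddef.h>

/* Hypothetical instruction set: each instruction occupies two memory cells,
   opcode and operand address. Program and data share the same memory. */
enum { HALT = 0, LOAD = 1, ADD = 2, STORE = 3, JNZ = 4, SUB = 5 };

#define MEM_SIZE 32

/* Runs the stored program until HALT, an invalid address, or `max_steps`
   executed instructions; returns the number of executed instructions. */
size_t run_machine(int mem[MEM_SIZE], size_t max_steps) {
    int acc = 0;          /* accumulator register */
    int pc = 0;           /* program counter */
    size_t steps = 0;
    while (steps < max_steps && pc >= 0 && pc + 1 < MEM_SIZE) {
        int opcode = mem[pc];        /* fetch */
        int addr = mem[pc + 1];
        pc += 2;                     /* sequential execution by default */
        steps++;
        if (addr < 0 || addr >= MEM_SIZE) break;
        switch (opcode) {            /* decode and execute */
        case LOAD:  acc = mem[addr]; break;
        case ADD:   acc += mem[addr]; break;
        case SUB:   acc -= mem[addr]; break;
        case STORE: mem[addr] = acc; break;
        case JNZ:   if (acc != 0) pc = addr; break;   /* conditional jump */
        default:    return steps;                     /* HALT or unknown opcode */
        }
    }
    return steps;
}
```

As a usage example, a program in cells 0 to 15 that repeatedly adds a counter (cell 21, initially 5) to a sum (cell 20) and decrements the counter by the constant 1 in cell 22 leaves 15 = 5 + 4 + 3 + 2 + 1 in cell 20 after 36 executed instructions. Since the program itself lives in `mem`, it could in principle also overwrite its own instructions, a direct consequence of the stored-program principle.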

If a computer is integrated into a technical system and performs tasks such as control, regulation or monitoring, largely invisible to the user, it is referred to as an embedded system. Embedded systems are found in a variety of everyday devices such as household appliances, vehicles, consumer electronics and mobile phones, but also in industrial systems, e.g., in process automation or medical technology. Because embedded computers are available always and everywhere, one speaks of pervasive or ubiquitous computing. These systems are increasingly networked, e.g., via the Internet ("Internet of Things"). Networks of interacting elements with physical input from and output to their environment are also referred to as cyber-physical systems. One example is wireless sensor networks for environmental monitoring.

Real-time systems are designed to respond to time-critical processes in the outside world within the required time. This assumes that the execution time of the response processes is guaranteed not to exceed the corresponding predefined time limits. Many embedded systems are also real-time systems.
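One classic way to obtain such a timing guarantee for periodic tasks on a single processor is the Liu-Layland utilization test for rate-monotonic scheduling. This technique is not named in the text and is chosen here only as an illustration. Assumptions: independent periodic tasks, deadlines equal to periods, and known worst-case execution times. If the total utilization $U = \sum_i C_i/T_i$ does not exceed $n(2^{1/n} - 1)$, every deadline is met. The test is sufficient but not necessary. The type and function names are hypothetical, and $2^{1/n}$ is computed by bisection to avoid a dependency on the math library.

```c
#include <stdbool.h>
#include <stddef.h>

typedef struct {
    double wcet;    /* worst-case execution time C_i */
    double period;  /* period T_i, also the relative deadline */
} Task;

/* Computes 2^(1/n) by bisection on x^n = 2 over [1, 2]. */
static double nth_root_of_2(size_t n) {
    double lo = 1.0, hi = 2.0;
    for (int iter = 0; iter < 60; ++iter) {
        double mid = (lo + hi) / 2.0, p = 1.0;
        for (size_t k = 0; k < n; ++k) p *= mid;
        if (p < 2.0) lo = mid; else hi = mid;
    }
    return lo;
}

/* Returns true if the Liu-Layland bound guarantees that rate-monotonic
   scheduling meets all deadlines. false means "not proven by this test",
   not necessarily "unschedulable". */
bool rm_schedulable(const Task *tasks, size_t n) {
    if (n == 0) return true;
    double u = 0.0;
    for (size_t i = 0; i < n; ++i) u += tasks[i].wcet / tasks[i].period;
    double bound = (double)n * (nth_root_of_2(n) - 1.0);
    return u <= bound;
}
```

For two tasks the bound is about 0.83: tasks with (C, T) = (1, 4) and (2, 6) have utilization of about 0.58 and pass, whereas (2, 4) and (2, 5) reach 0.9 and would need a more precise analysis.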

Computer communication plays a central role in all multi-computer systems. It enables electronic data exchange between computers and thus forms the technical basis of the Internet. In addition to the development of routers, switches and firewalls, this also includes the development of the software components needed to operate these devices. In particular, it includes the definition and standardization of network protocols such as TCP, HTTP or SOAP. Protocols are the languages in which computers exchange information with one another.
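As a small concrete piece of a network protocol, the headers of TCP (as well as IPv4 and UDP) carry a 16-bit one's-complement checksum, specified in RFC 1071, that lets the receiver detect corrupted data. The sketch below computes it over a byte buffer; the function name is illustrative, and building the full TCP pseudo-header is not shown.

```c
#include <stddef.h>
#include <stdint.h>

/* Internet checksum: sum the data as big-endian 16-bit words, fold carries
   back into the low 16 bits (end-around carry), then invert the result.
   An odd trailing byte is padded with a zero byte. */
uint16_t internet_checksum(const uint8_t *data, size_t len) {
    uint64_t sum = 0;
    for (size_t i = 0; i + 1 < len; i += 2)
        sum += (uint64_t)((data[i] << 8) | data[i + 1]);
    if (len % 2 != 0)
        sum += (uint64_t)data[len - 1] << 8;
    while (sum >> 16)                        /* fold carries */
        sum = (sum & 0xFFFFu) + (sum >> 16);
    return (uint16_t)~sum;
}
```

For the bytes `00 01 f2 03 f4 f5 f6 f7` the checksum is `0x220d`. A receiver that computes the checksum over the data together with the transmitted checksum obtains 0 if no error was detected.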

In distributed systems, a large number of processors work together without shared memory. The cooperation of individual, largely independent computers in a network (e.g., a cluster) is usually coordinated by processes that communicate with each other via messages. Keywords in this context are, for example, middleware, grid computing and cloud computing.

Modeling and evaluation

Because of the general complexity of such system solutions, special modeling methods have been developed as a basis for evaluating the architectural approaches mentioned, so that evaluations can be carried out before the actual system is implemented. Of primary interest is the modeling and evaluation of the resulting system performance, e.g., using benchmark programs. Methods developed for performance modeling include queueing models, Petri nets and special traffic-theoretical models. Computer simulation is often used, particularly in processor development.
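As an example of an analytical queueing model, the M/M/1 queue (one server, Poisson arrivals with rate λ, exponentially distributed service times with rate μ) has closed-form steady-state results: utilization ρ = λ/μ, mean number of jobs in the system L = ρ/(1 − ρ), and mean response time W = 1/(μ − λ). The model is a standard textbook choice picked here for illustration; real systems often need more detailed models or simulation. The struct and function names are hypothetical.

```c
#include <stdbool.h>

typedef struct {
    double utilization;     /* rho = lambda / mu */
    double mean_in_system;  /* L = rho / (1 - rho) */
    double mean_response;   /* W = 1 / (mu - lambda) */
} MM1;

/* Fills *out with the steady-state metrics of an M/M/1 queue.
   Returns false if the rates are invalid or the queue is unstable
   (lambda >= mu, i.e. the queue grows without bound). */
bool mm1_metrics(double lambda, double mu, MM1 *out) {
    if (lambda < 0.0 || mu <= 0.0 || lambda >= mu) return false;
    double rho = lambda / mu;
    out->utilization = rho;
    out->mean_in_system = rho / (1.0 - rho);
    out->mean_response = 1.0 / (mu - lambda);
    return true;   /* the results satisfy Little's law: L == lambda * W */
}
```

For a server that receives 2 requests per second and can handle 4 per second, the model predicts a utilization of 0.5, on average one request in the system, and a mean response time of 0.5 seconds. Raising the arrival rate towards 4 makes both values grow without bound.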

In addition to performance, other system properties can also be studied on the basis of such models; for example, the energy consumption of computer components is becoming increasingly important. In view of the growth in hardware and software complexity, problems of reliability, fault diagnosis and fault tolerance are also of great concern, particularly in safety-critical applications. Corresponding solution methods exist, mostly based on the use of redundant hardware or software elements.
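A widely used form of hardware redundancy is triple modular redundancy (TMR): three identical units compute the same result, and a voter passes on the majority value, which masks a fault in any single unit. The bitwise majority voter below is a minimal sketch of this voting step only; the function name is illustrative.

```c
#include <stdint.h>

/* Each output bit takes the value on which at least two of the three
   redundant inputs agree. */
uint32_t tmr_vote(uint32_t a, uint32_t b, uint32_t c) {
    return (a & b) | (a & c) | (b & c);
}
```

If one unit delivers a corrupted word, for example `tmr_vote(42, 42, 7)`, the voter still outputs 42. A fault in the voter itself, or simultaneous faults in two units, is not masked.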

Relationships with other areas of computer science and other disciplines

Technical computer science has close ties to other areas of computer science and to the engineering sciences. It is based on electronics and circuit technology, with digital circuits in the foreground (digital technology). It provides the interfaces on which the higher software layers are built. In particular, through embedded systems and real-time systems, there are close relationships with related areas of electrical and mechanical engineering such as open-loop and closed-loop control, automation technology and robotics.

Computer science in interdisciplinary sciences

The collective term applied computer science "summarizes the application of methods of core computer science in other sciences ...". Around computer science, several interdisciplinary sub-areas and research approaches have developed, some of them into separate sciences. Examples:

Computational sciences

This interdisciplinary field deals with the computer-aided analysis, modeling and simulation of scientific problems and processes. Depending on the natural science concerned, the following areas are distinguished:

- Bioinformatics (also computational biology) deals with the computational principles and applications of storing, organizing and analyzing biological data. The first pure bioinformatics applications were developed for DNA sequence analysis. The primary aim is to quickly find patterns in long DNA sequences and to solve the problem of how to superimpose two or more similar sequences and align them with one another so that the best possible match is achieved (sequence alignment). With the elucidation and extensive functional analysis of various complete genomes (e.g., of the nematode Caenorhabditis elegans), the focus of bioinformatics work is shifting to questions of proteomics, such as protein folding and structure prediction, i.e., the question of the secondary or tertiary structure for a given amino acid sequence.

- Biodiversity informatics involves storing and processing information on biodiversity. While bioinformatics deals with nucleic acids and proteins, the objects of biodiversity informatics are taxa, biological collection records and observation data.

- Artificial life was established as an interdisciplinary research discipline in 1986. Its main goal is the simulation of natural life forms with software (soft artificial life) and hardware methods (hard artificial life). Today there are applications for artificial life in synthetic biology, in the health sector and medicine, in ecology, in autonomous robots, in the transport and traffic sector, in computer graphics, for virtual societies and in computer games.

- Chemoinformatics (also cheminformatics or chemiinformatics) is a branch of science that combines chemistry with the methods of computer science and vice versa. It deals with searching the chemical space, which consists of virtual (in silico) or real molecules. The size of the chemical space is estimated at about $10^{62}$ molecules, far larger than the number of molecules actually synthesized. Thus, under certain circumstances, millions of molecules can be tested in silico with the help of such computer methods without having to generate them explicitly in the laboratory using methods of combinatorial chemistry or synthesis.
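The sequence alignment problem mentioned in the bioinformatics entry can be solved for two sequences by dynamic programming. The following sketch computes the optimal global alignment score with the Needleman-Wunsch algorithm. The scoring scheme (match +1, mismatch −1, gap −1) is an arbitrary assumption for illustration; practical tools use substitution matrices and affine gap costs, and also reconstruct the alignment itself rather than only its score.

```c
#include <stdlib.h>
#include <string.h>

/* Hypothetical scoring scheme for this sketch. */
#define MATCH     1
#define MISMATCH  (-1)
#define GAP       (-1)

static int max3(int a, int b, int c) {
    int m = a > b ? a : b;
    return m > c ? m : c;
}

/* score[i][j] = best score for aligning the first i characters of s with
   the first j characters of t; the table is stored row by row. */
int nw_score(const char *s, const char *t) {
    size_t n = strlen(s), m = strlen(t), w = m + 1;
    int *score = malloc((n + 1) * w * sizeof *score);
    if (!score) abort();                       /* sketch: no recovery */
    for (size_t i = 0; i <= n; ++i) score[i * w] = (int)i * GAP;
    for (size_t j = 0; j <= m; ++j) score[j] = (int)j * GAP;
    for (size_t i = 1; i <= n; ++i) {
        for (size_t j = 1; j <= m; ++j) {
            int diag = score[(i - 1) * w + (j - 1)]
                     + (s[i - 1] == t[j - 1] ? MATCH : MISMATCH);
            int up   = score[(i - 1) * w + j] + GAP;   /* gap in t */
            int left = score[i * w + (j - 1)] + GAP;   /* gap in s */
            score[i * w + j] = max3(diag, up, left);
        }
    }
    int result = score[n * w + m];
    free(score);
    return result;
}
```

For example, "ACGT" and "AGT" reach a score of 2: three matching positions and one gap. The table has (n+1)(m+1) entries, so time and memory grow with the product of the sequence lengths, which is one reason why heuristic methods are used for searches in long genomes.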

Engineering informatics, mechanical engineering informatics

Engineering informatics, known in English as Computational Engineering Science, is an interdisciplinary field of study at the interface between the engineering sciences, mathematics and computer science, covering the fields of electrical engineering, mechanical engineering, process engineering and systems engineering.

Mechanical engineering informatics essentially covers virtual product development (production informatics) by means of computer visualistics, as well as automation technology.

Business informatics, information management

Business informatics (English: (business) information systems, also management information systems) is an "interface discipline" between computer science and economics, especially business administration. Through these interfaces it has developed into an independent science. One focus of business informatics is the mapping of business processes and accounting in relational database systems and enterprise resource planning systems. Information engineering of information systems and information management play an important role in business informatics. A business informatics course was developed at Furtwangen University of Applied Sciences as early as 1971. From 1974, the then TH Darmstadt, the Johannes Kepler University Linz and the TU Vienna set up courses in business informatics.

Socioinformatics

Socioinformatics is concerned with the impact of IT systems on society, for example how they support organizations and society in organizing themselves, but also with how society influences the development of socially embedded IT systems, whether as prosumers on collaborative platforms such as Wikipedia or through legal restrictions, for example to guarantee data security.

Social informatics

Social informatics is concerned on the one hand with IT operations in social organizations and on the other hand with technology and computer science as tools of social work, such as Ambient Assisted Living.

Media informatics

Media informatics focuses on the interface between human and machine and deals with the connection between computer science, psychology, ergonomics, media technology, media design and teaching.

Computational linguistics

Computational linguistics investigates how natural language can be processed by computer. It is a subfield of artificial intelligence, but also an interface between applied linguistics and applied computer science. Related to it is cognitive science, a separate interdisciplinary branch of science that combines linguistics, computer science, philosophy, psychology and neurology, among others. Computational linguistics is used in speech recognition and synthesis, machine translation into other languages and information extraction from texts.

Environmental informatics, geoinformatics

Environmental informatics deals with the interdisciplinary analysis and assessment of environmental issues with the aid of computer science. The focus is on the use of simulation programs, geographic information systems (GIS) and database systems.

Geoinformatics is the study of the nature and function of geographic information, of its provision in the form of spatial data, and of the applications based on it. It forms the scientific basis for geographic information systems (GIS). All geospatial applications share a spatial reference and, in some cases, its mapping into Cartesian spatial or planar representations in a reference system.
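The mapping of geographic coordinates into Cartesian spatial or planar representations can be sketched in Python under a deliberately simplified assumption: a spherical Earth with a mean radius of 6,371 km. Production GIS software instead works with ellipsoidal reference systems (such as WGS 84) and standardized map projections; the function names here are hypothetical.

```python
import math

# Mean Earth radius in metres; this sketch assumes a perfectly spherical Earth.
EARTH_RADIUS_M = 6_371_000.0


def to_cartesian(lat_deg, lon_deg, radius=EARTH_RADIUS_M):
    """Map latitude/longitude on the sphere to 3-D Cartesian (x, y, z) in metres.

    The origin is the Earth's centre, the x-axis points to (0 N, 0 E)
    and the z-axis to the North Pole.
    """
    lat, lon = math.radians(lat_deg), math.radians(lon_deg)
    return (radius * math.cos(lat) * math.cos(lon),
            radius * math.cos(lat) * math.sin(lon),
            radius * math.sin(lat))


def to_local_plane(lat_deg, lon_deg, origin_lat_deg, origin_lon_deg, radius=EARTH_RADIUS_M):
    """Equirectangular projection to planar (east, north) metres around an origin.

    Only a good approximation for small areas close to the origin.
    """
    east = radius * math.radians(lon_deg - origin_lon_deg) * math.cos(math.radians(origin_lat_deg))
    north = radius * math.radians(lat_deg - origin_lat_deg)
    return east, north
```

For example, `to_local_plane(48.0, 11.0, 47.0, 11.0)` places a point one degree of latitude north of the origin at roughly 111.2 km on the planar north axis. The equirectangular formula is chosen only because it is short; it distorts distances increasingly with distance from the origin.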

Other computer science disciplines

Computer science has further interfaces with other disciplines in information economics, medical informatics, logistics informatics, maintenance informatics and legal informatics, information management (administrative informatics, business operations informatics), construction informatics (Bauinformatik) and agricultural informatics, computational archaeology, sports informatics, as well as new interdisciplinary directions such as neuromorphic engineering. Because of their close relationship with computer science, collaboration with mathematics or electrical engineering is not described as interdisciplinary. The didactics of computer science deals with computer science teaching, especially in schools. Elementary informatics is concerned with teaching basic computer science concepts in preschool and primary education.

Artificial intelligence

Artificial intelligence (AI) is a large branch of computer science with strong influences from logic, linguistics, neurophysiology and cognitive psychology. The methodology of AI differs considerably from that of classical computer science. Instead of providing a complete description of the solution, artificial intelligence leaves finding the solution to the computer itself. Its methods are used in expert systems, sensor technology and robotics.

The understanding of the term “artificial intelligence” often reflects the Enlightenment notion of the human being as a machine, which so-called “strong AI” aims to imitate: creating an intelligence that can think and solve problems like humans and that is characterized by a form of consciousness or self-awareness as well as emotions.

This approach was implemented using expert systems, which essentially record, manage and apply a large number of rules for a specific subject area (hence “experts”).
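The rule-based principle of expert systems can be illustrated with a minimal forward-chaining inference loop in Python. The rules and fact names below form a hypothetical toy knowledge base for car diagnosis; real expert systems typically add conflict resolution, explanation components and handling of uncertain knowledge.

```python
# Hypothetical toy knowledge base: each rule maps a set of premises to one conclusion.
RULES = [
    ({"lights_dim", "engine_does_not_crank"}, "battery_weak"),
    ({"battery_weak"}, "recommend_charging_battery"),
    ({"engine_cranks", "smells_no_fuel"}, "fuel_supply_problem"),
    ({"fuel_supply_problem"}, "recommend_checking_fuel_pump"),
]


def forward_chain(facts, rules=RULES):
    """Derive all conclusions reachable from the given facts (forward chaining).

    Rules are applied repeatedly until a full pass adds no new fact.
    """
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            # A rule fires when all of its premises are already known facts.
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


def diagnose(facts, rules=RULES):
    """Return only the derived recommendations (a hypothetical naming convention)."""
    return sorted(f for f in forward_chain(facts, rules) if f.startswith("recommend_"))
```

Here `diagnose({"lights_dim", "engine_does_not_crank"})` returns `['recommend_charging_battery']`: the second rule only fires after the first has added `battery_weak`, which shows how chained rules let the system reach conclusions that no single rule states directly.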

In contrast to strong AI, “weak AI” is about mastering specific application problems. Of particular interest are applications whose solution, according to general understanding, seems to require a form of “intelligence”. Ultimately, weak AI is concerned with simulating intelligent behavior using mathematics and computer science; it is not about creating consciousness or a deeper understanding of intelligence. One example from weak AI is fuzzy logic.
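Fuzzy logic replaces the strict true/false values of classical logic with degrees of membership between 0 and 1. A minimal Python sketch, assuming triangular membership functions and the minimum, maximum and complement operators commonly used for fuzzy AND, OR and NOT; the linguistic terms “warm” and “hot” and their breakpoints are hypothetical.

```python
def triangular(x, a, b, c):
    """Triangular membership function: 0 outside (a, c), rising linearly to 1 at b."""
    if x <= a or x >= c:
        return 0.0
    if x <= b:
        return (x - a) / (b - a)
    return (c - x) / (c - b)


def fuzzy_and(mu1, mu2):
    """Fuzzy AND as the minimum of the membership degrees."""
    return min(mu1, mu2)


def fuzzy_or(mu1, mu2):
    """Fuzzy OR as the maximum of the membership degrees."""
    return max(mu1, mu2)


def fuzzy_not(mu):
    """Fuzzy NOT as the complement of the membership degree."""
    return 1.0 - mu


# Hypothetical linguistic terms for room temperature in degrees Celsius.
def warm(t):
    return triangular(t, 15.0, 22.0, 29.0)


def hot(t):
    return triangular(t, 25.0, 35.0, 45.0)
```

At 27 °C this gives `warm(27) ≈ 0.29` and `hot(27) = 0.2`, so the statement “warm AND hot” holds to degree 0.2 rather than being simply true or false. Such graded truth values are what fuzzy controllers combine in their rules.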

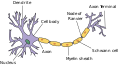

Neural networks also belong in this category. Since the early 1980s, the information architecture of the (human or animal) brain has been analyzed under this term. Modeling in the form of artificial neural networks illustrates how complex pattern recognition can be achieved from a very simple basic structure. At the same time, it becomes clear that this type of learning is not based on deriving rules that can be formulated logically or linguistically, and that the special abilities of the human brain within the animal kingdom therefore cannot be reduced to a rule-based or language-based notion of “intelligence”. The effects of these insights on AI research, but also on learning theory, didactics and other areas, are still being discussed.
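The idea that pattern recognition can emerge from a very simple basic structure, without explicitly formulated rules, can be sketched with a single artificial neuron trained by the classic perceptron learning rule. This is a minimal illustrative example in plain Python, not a model of the brain; the function and variable names are hypothetical.

```python
def predict(weights, bias, inputs):
    """Step activation: the neuron fires (1) if the weighted input sum exceeds 0."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0


def train_perceptron(samples, epochs=20, lr=1.0):
    """Train one neuron with the perceptron learning rule.

    samples: list of (inputs, target) pairs with target in {0, 1}.
    Returns the learned (weights, bias).
    """
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)
            # Shift each weight in the direction that reduces the error.
            weights = [w + lr * error * x for w, x in zip(weights, inputs)]
            bias += lr * error
    return weights, bias


AND_SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
OR_SAMPLES = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
```

After `weights, bias = train_perceptron(AND_SAMPLES)`, the neuron classifies all four AND patterns correctly, although no rule for AND was ever written down; the behavior lives entirely in the adjusted weights. A single neuron can only separate linearly separable patterns (it cannot learn XOR), which is why practical networks combine many such units in layers.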

While strong AI has so far failed because of its philosophical questions, progress has been made in weak AI.

Computer science and society

“Informatics and Society” (IuG) is a branch of computer science that studies the role of computer science on the way to the information society. The interactions of computer science examined in this context cover a wide variety of aspects. Starting from historical, social and cultural questions, they concern economic, political, ecological, ethical, didactic and, of course, technical aspects. The emerging globally networked information society is seen as a central challenge for computer science, in which it plays a defining role as a basic technical science and on which it has to reflect. IuG is characterized by requiring an interdisciplinary approach, especially with the humanities, but also, for example, with law.

See also

- List of important people in IT

- Society for Computer Science, Austrian Computer Society, Swiss Informatics Society

- Computer science studies

Literature

- Herbert Bruderer: Milestones in computer technology. Volume 1: Mechanical calculating machines, slide rules, historical automatons and scientific instruments. 2nd, greatly expanded edition. Walter de Gruyter, Berlin/Boston 2018, ISBN 978-3-11-051827-6.

- Heinz-Peter Gumm, Manfred Sommer: Introduction to Computer Science. 10th edition. Oldenbourg, Munich 2012, ISBN 978-3-486-70641-3.

- A. K. Dewdney: The Turing Omnibus: A journey through computer science in 66 stations. Translated by P. Dobrowolski. Springer, Berlin 1995, ISBN 3-540-57780-7.

- Hans Dieter Hellige (ed.): Histories of Computer Science. Visions, paradigms, leitmotifs. Springer, Berlin 2004, ISBN 3-540-00217-0.

- Jan Leeuwen: Theoretical Computer Science. Springer, Berlin 2000, ISBN 3-540-67823-9.

- Peter Rechenberg, Gustav Pomberger (eds.): Computer Science Handbook. 3rd edition. Hanser 2002, ISBN 3-446-21842-4.

- Vladimiro Sassone: Foundations of Software Science and Computation Structures. Springer, Berlin 2005, ISBN 3-540-25388-2.

- Uwe Schneider, Dieter Werner (eds.): Pocket Book of Computer Science. 6th edition. Fachbuchverlag, Leipzig 2007, ISBN 978-3-446-40754-1.

- Society for Computer Science: What is computer science? Position paper of the Gesellschaft für Informatik (PDF, approx. 600 kB). Bonn 2005; or: What is computer science? Short version (PDF, approx. 85 kB).

- Les Goldschlager, Andrew Lister: Computer Science: A Modern Introduction. Carl Hanser, Vienna 1986, ISBN 3-446-14549-4.

- Arno Schulz: IT for users. de Gruyter Verlag, Berlin/New York 1973, ISBN 3-11-002051-3.

- Horst Völz: That is information. Shaker Verlag, Aachen 2017, ISBN 978-3-8440-5587-0.

- Horst Völz: How we got to know. Not everything is information. Shaker Verlag, Aachen 2018, ISBN 978-3-8440-5865-9.

Web links

- Catalog of links on computer science departments at universities at curlie.org (formerly DMOZ)

- Society for Computer Science (GI)

- Swiss Informatics Society (SI)

- Computer science for teachers in the ZUM wiki

- einstieg-informatik.de

References

- Duden Informatik A–Z: Specialized dictionary for studies, training and work. 4th edition, Mannheim 2006, ISBN 978-3-411-05234-9.

- Rolf Zellmer: The emergence of the German computer industry. From the pioneering achievements of Konrad Zuse and Gerhard Dirks to the first series products of the 1950s and 1960s. 1990 (403 pages, dissertation, University of Cologne).

- Arno Pasternak: Technical and educational fundamentals for computer science lessons in lower secondary level (dissertation). (PDF; 14.0 MB) May 17, 2013, p. 47, accessed on July 31, 2020 (with a facsimile of the introductory section from SEG-Nachrichten 4/1957).

- Tobias Häberlein: A practical introduction to computer science with Bash and Python. Oldenbourg Verlag, 2011, ISBN 978-3-486-71445-6, p. 5 (limited preview in Google Book Search, accessed February 25, 2017).

- Presentation on the 40-year history of GI and computer science (Memento from December 23, 2012 in the Internet Archive) (PDF; 3.1 MB).

- Friedrich L. Bauer: Historical Notes on Computer Science. Springer Science & Business Media, 2009, ISBN 978-3-540-85789-1, p. 36 (limited preview in Google Book Search, accessed February 25, 2017).

- Wolfgang Coy: Histories of Computer Science. Visions, paradigms, leitmotifs. Ed.: Hans Dieter Hellige. Springer, 2004, ISBN 3-540-00217-0, p. 475.

- Start of studies from winter semester 2012/2013, Faculty of Computer Science at the Technical University of Munich. In: www.in.tum.de. Retrieved September 7, 2015.

- Christine Pieper: University computer science in the Federal Republic and the GDR until 1989/1990. In: Science, Politics and Society. 1st edition. Franz Steiner Verlag, Stuttgart 2009, ISBN 978-3-515-09363-7.

- Faculty of Computer Science: History. Retrieved September 13, 2019.

- 40 Years of Computer Science in Munich: 1967–2007 / Festschrift (Memento of May 17, 2011 in the Internet Archive) (PDF), p. 26, on in.tum.de, accessed on January 5, 2014.

- History of HS Furtwangen

- History of the university area at KIT

- https://www.jku.at/die-jku/ueber-uns/geschichte/

- Brief history of the Vienna University of Technology (Memento from June 5, 2012 in the Internet Archive)

- Konrad Zuse – the namesake of the ZIB (accessed on July 27, 2012)

- Christopher Langton: Studying Artificial Life with Cellular Automata. In: Physica D 22: 120–149.

- Christopher Langton: What is Artificial Life? (1987) (PDF; Memento of March 11, 2007 in the Internet Archive)

- Marc A. Bedau: Artificial life: organization, adaptation and complexity from the bottom up.

- Wolfgang Banzhaf, Barry McMullin: Artificial Life. In: Grzegorz Rozenberg, Thomas Bäck, Joost N. Kok (eds.): Handbook of Natural Computing. Springer 2012, ISBN 978-3-540-92909-3 (print), 978-3-540-92910-9 (online).