Supercomputers

Supercomputers (also called high-performance computers) are computers that are especially fast for their time. It does not matter which architecture the computer is based on, as long as it is a general-purpose computer. A typical feature of a modern supercomputer is its particularly large number of processors, which can access shared peripheral devices and a partially shared main memory. Supercomputers are often used for computer simulations in the field of high-performance computing.

Supercomputers play an essential role in scientific computing and are used in many disciplines, for example for simulations in quantum mechanics, weather forecasting, climatology, the discovery of oil and gas deposits, molecular dynamics, biological macromolecules, cosmology, astrophysics, fusion research, research into nuclear weapons tests, and cryptanalysis.

In Germany, supercomputers are mainly found at universities and research institutions such as the Max Planck Institutes. Because of their possible uses, they are subject to German arms export control laws.

History and structure

In the history of computer development, supercomputers split off from scientific computers and mainframes in the 1960s. While mainframes were optimized for high reliability, supercomputers were optimized for high computing power. The first officially installed supercomputer, the Cray-1, achieved 130 MegaFLOPS in 1976.

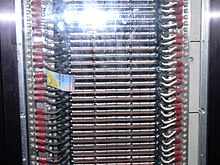

Originally, the outstanding computing power was achieved by making maximum use of the available technology and choosing designs that were too expensive for large-scale series production (e.g. liquid cooling, exotic components and materials, a compact design for short signal paths); the number of processors was comparatively low. For some time now, so-called clusters have become increasingly established, in which a large number of (mostly inexpensive) individual computers are networked to form one large computer. Unlike the processors of a vector computer, each node in a cluster has its own peripherals and only its own local main memory. Because clusters use standard components, they initially offer cost advantages over vector computers. However, they require much more programming effort, and it is important to consider whether the programs used are suitable for being distributed over many processors.

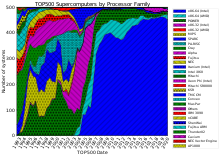

Modern high-performance computers are primarily parallel computers. They consist of a large number of computers networked with one another, and each of these computers usually has several main processors (CPUs). Ordinary programs cannot run unmodified on a supercomputer; instead, specially adapted programs are needed that keep the individual processors working in parallel. Like practically every commercially available computer today, even in the lower price segment, supercomputers are vector computers in the sense that their processors contain vector (SIMD) units. Standard architectures from the field of personal computers and servers, such as x86-64 from Intel (Xeon) and AMD (EPYC), are now dominant; they differ only slightly from ordinary personal computer hardware. Special hardware such as IBM BlueGene/Q and SPARC64 still exists, however.

In supercomputers, the individual computers are connected by special high-performance networks; InfiniBand, among others, is common. The computers are often equipped with accelerator cards, such as graphics cards or the Intel Xeon Phi. Graphics cards are suitable for high-performance computing because they are excellent vector arithmetic units and solve linear algebra problems efficiently. The associated technology is called General-Purpose Computation on Graphics Processing Units (GPGPU).

In clusters, the individual computers are often called nodes; they are configured and monitored centrally by cluster management tools.

Operating system and programming

While various Unix variants were still widespread on supercomputers in the 1990s, the free software Linux established itself as the operating system in the 2000s. The TOP500 list of the fastest computer systems (as of June 2012) contains 462 systems running exclusively on Linux and 11 systems running partly on Linux (CNK/SLES 9). This means that 92.4% of the systems run entirely on Linux. Almost all other systems run Unix or Unix-like operating systems. Windows, the biggest competitor in the desktop market, plays hardly any role in high-performance computing (0.4%).

Supercomputer programs are mainly written in Fortran, C, or C++. To generate the fastest possible code, compilers from supercomputer manufacturers (such as Cray or Intel) are usually used. High-performance computing (HPC) programs are typically divided into two categories:

- Shared-memory parallelization, usually local to a single node. Interfaces such as OpenMP or TBB (Threading Building Blocks) are common for this purpose. A single operating system process generally uses all available CPU cores or CPUs.

- Distributed-memory parallelization: each operating system process runs on one core and exchanges messages with other processes to solve the problem jointly (message passing). This works both within a node and across node boundaries. The Message Passing Interface (MPI) is the de facto standard for programming this type of application.
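The two categories can be contrasted in a small sketch. It is written in Python purely for illustration: threads of one process stand in for OpenMP threads that share memory, and `queue.Queue` mailboxes stand in for MPI messages between processes with private memory. Neither OpenMP nor MPI is used, the function names are hypothetical, and because of Python's global interpreter lock the sketch shows the data-flow pattern, not a real speedup.

```python
import queue
import threading
from concurrent.futures import ThreadPoolExecutor


def shared_memory_sum(data, n_workers=4):
    """Shared-memory pattern: all workers see the same `data` and write their
    partial result into one shared list (one slot per worker, so no locking)."""
    partial = [0] * n_workers  # shared by all threads of this process
    chunk = (len(data) + n_workers - 1) // n_workers

    def work(rank):
        lo, hi = rank * chunk, min((rank + 1) * chunk, len(data))
        partial[rank] = sum(data[lo:hi])

    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        list(pool.map(work, range(n_workers)))  # list() re-raises worker errors
    return sum(partial)


def message_passing_sum(data, n_ranks=4):
    """Simulated message passing: each 'rank' works only on its private slice
    and sends its partial sum as a message to rank 0, which combines them."""
    inboxes = [queue.Queue() for _ in range(n_ranks)]  # one mailbox per rank
    chunk = (len(data) + n_ranks - 1) // n_ranks
    result = {}

    def rank_main(rank):
        local = data[rank * chunk:(rank + 1) * chunk]  # slicing makes a private copy
        local_sum = sum(local)
        if rank == 0:
            total = local_sum
            for _ in range(n_ranks - 1):  # receive one message from every other rank
                total += inboxes[0].get()
            result["total"] = total
        else:
            inboxes[0].put(local_sum)  # "send" the partial sum to rank 0

    threads = [threading.Thread(target=rank_main, args=(r,)) for r in range(n_ranks)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return result["total"]


numbers = list(range(1, 1001))
print(shared_memory_sum(numbers))    # 500500
print(message_passing_sum(numbers))  # 500500
```

In a real MPI program, the collection step at rank 0 would typically be a single reduction call such as `MPI_Reduce`; the explicit receive loop here only makes the individual messages visible.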

In practice, a combination of both techniques is common; it is called hybrid parallelization. It is popular because many programs do not scale well enough to use all cores of a supercomputer with pure message passing.
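Why programs stop scaling can be illustrated with Amdahl's law, a standard model that the text itself does not name: if a fraction f of a program's run time can be parallelized over p processors, the ideal speedup is 1 / ((1 - f) + f / p). The following is a minimal Python sketch assuming a fixed problem size and no communication costs; the function name is hypothetical.

```python
def amdahl_speedup(parallel_fraction: float, processors: int) -> float:
    """Ideal speedup for a fixed problem size (Amdahl's law):
    S(p) = 1 / ((1 - f) + f / p), with f the parallelizable fraction."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if processors < 1:
        raise ValueError("processors must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / processors)


# Even if 99.9 % of the run time parallelizes perfectly, the remaining 0.1 %
# keeps the speedup below 1 / 0.001 = 1000, however many cores are added.
for p in (16, 1_024, 100_000):
    print(p, round(amdahl_speedup(0.999, p), 1))
```

Communication overhead, which grows with the number of message-passing processes, lowers the achievable speedup further; that overhead is not part of this simple model.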

If a supercomputer is equipped with accelerator cards (graphics or compute cards), programming is split further into code for the host computer and code for the accelerator card. OpenCL and CUDA are two interfaces for programming such components.

As a rule, a high-performance computer is not used by a single user or program. Instead, job schedulers such as the Simple Linux Utility for Resource Management (SLURM) or IBM's LoadLeveler allow a large number of users to use parts of the supercomputer for limited periods. Resources are allocated exclusively, at the level of whole nodes or individual processors. The processor time used is measured in units such as CPU hours or node hours and billed where applicable.
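Accounting in node hours or core (CPU) hours is simple arithmetic. The following is a minimal sketch assuming exclusive allocation of whole nodes; the `Job` record, its field names and the example machine with 128 cores per node are hypothetical and do not correspond to the accounting output of SLURM or LoadLeveler.

```python
from dataclasses import dataclass


@dataclass
class Job:
    """Hypothetical record of a finished batch job (field names are illustrative)."""
    nodes: int            # nodes allocated exclusively to the job
    cores_per_node: int   # cores per node on the (assumed) machine
    wall_seconds: float   # elapsed wall-clock time of the job


def node_hours(job: Job) -> float:
    # Exclusive allocation: every allocated node is charged for the full wall time,
    # regardless of how many of its cores the program actually kept busy.
    return job.nodes * job.wall_seconds / 3600.0


def core_hours(job: Job) -> float:
    # Core hours follow from node hours because whole nodes are allocated.
    return node_hours(job) * job.cores_per_node


job = Job(nodes=64, cores_per_node=128, wall_seconds=2.5 * 3600)
print(node_hours(job))  # 160.0
print(core_hours(job))  # 20480.0
```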

Intended use

The production cost of a supercomputer in the TOP10 is currently in the high tens of millions of euros, and often already in the hundreds of millions.

Today's supercomputers are mainly used for simulations. The more realistically a simulation models complex relationships, the more computing power it generally requires. One advantage of supercomputers is that their enormous computing power lets them take more and more interdependencies into account. This allows more far-reaching, often subtle secondary or boundary conditions to be included in the actual simulation, making the overall result increasingly meaningful.

The main current areas of application of supercomputers include biology, chemistry, geology, aviation and aerospace, medicine, weather forecasting, climate research, the military, and physics.

In the military field, for example, supercomputers have made it possible to develop new atomic bombs through simulation, without the supporting data of further underground nuclear tests. The application areas are characterized by very complex systems or subsystems that are closely linked to one another. Changes in one subsystem usually have more or less strong effects on neighboring or connected systems. Supercomputers make it ever easier to consider or even predict many such consequences, so that countermeasures can be taken well in advance. This applies, for example, to simulations of climate change, predictions of earthquakes or volcanic eruptions, and, in medicine, simulations of the effects of new active substances on the organism. Regardless of the computing power, such simulations are of course only as accurate as the programmed parameters and models allow. The enormous sums invested in steadily increasing FLOPS, and thus in ever faster supercomputers, are justified primarily by the benefits and the possible “knowledge advantage” for mankind, and less by general technical progress.

Situation in Germany

Scientific high-performance computing in Germany is organized by the Gauss Centre for Supercomputing (GCS), which is a member of the Partnership for Advanced Computing in Europe (PRACE). Most of the 16 German federal states maintain state high-performance computing associations to organize the use of their high-performance computers. In science, computing time is usually advertised as quotas of CPU hours and distributed among applicants.

Selected supercomputers

Current supercomputers

The fastest supercomputers by performance are listed every six months in the TOP500 list, which uses the LINPACK benchmark as the basis for its ranking. Since November 2007, the fastest supercomputers by energy efficiency (MFLOPS/W) have been listed in the Green500. In 2018, Lenovo installed the largest share (117) of the 500 most powerful computers worldwide.

The Green500 list of November 2014 shows efficiencies, averaged by country, ranging from 1,895 MFLOPS/W (Italy) down to 168 MFLOPS/W (Malaysia).
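The efficiency measure MFLOPS/W is the sustained LINPACK performance divided by the electrical power drawn. Below is a minimal sketch that takes the TFLOPS and kW figures for Summit from the worldwide table below at face value; the Green500's actual measurement rules (which parts of the system are metered, and for how long) are not modeled, and the function name is hypothetical.

```python
def mflops_per_watt(rmax_tflops: float, power_kw: float) -> float:
    """Energy efficiency: sustained performance divided by power.
    1 TFLOPS = 1e6 MFLOPS and 1 kW = 1e3 W, hence the two scale factors."""
    if power_kw <= 0:
        raise ValueError("power must be positive")
    return rmax_tflops * 1e6 / (power_kw * 1e3)


# Summit after its upgrade: 148,600 TFLOPS at 10,096 kW (figures from the table)
print(round(mflops_per_watt(148_600.0, 10_096.0)))  # 14719
```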

Selected current supercomputers (worldwide)

As of June 2017 (possibly 2016), with Piz Daint (Switzerland) added.

| Name | Location | TFLOPS | Configuration | Power consumption | Purpose |
|---|---|---|---|---|---|
| Fugaku | RIKEN Center for Computational Science, Kobe (Japan) | 415,530.00 | 152,064 A64FX (48 cores, 2.2 GHz), 4.64 PB RAM | 15,000 kW | Scientific applications |
| Summit | Oak Ridge National Laboratory (Tennessee, USA) | 122,300.00, upgraded to 148,600.00 | 9,216 POWER9 CPUs (22 cores, 3.1 GHz), 27,648 Nvidia Tesla V100 GPUs | 10,096 kW | Physical calculations |
| Sunway TaihuLight | National Supercomputing Center, Wuxi, Jiangsu (China) | 93,014.60 | 40,960 Sunway SW26010 (260 cores, 1.45 GHz), 1.31 PB RAM, 40 server racks with 4 × 256 nodes each, a total of 10,649,600 cores | 15,370 kW | Scientific and commercial applications |
| Sierra | Lawrence Livermore National Laboratory (California, USA) | 71,600.00 | IBM Power9 (22 cores, 3.1 GHz), 1.5 PB RAM | 7,438 kW | Physical calculations (e.g. simulation of nuclear weapons tests) |
| Tianhe-2 | National University of Defense Technology, Changsha (China); final location: National Supercomputer Center, Guangzhou (China) | 33,862.70, upgraded to 61,400.00 | 32,000 Intel Xeon E5-2692 CPUs (Ivy Bridge, 12 cores, 2.2 GHz) + 48,000 Intel Xeon Phi 31S1P coprocessors (57 cores, 1.1 GHz), 1.4 PB RAM | 17,808 kW | Chemical and physical calculations (e.g. petroleum studies and aircraft development) |
| Hawk | High Performance Computing Center Stuttgart (Germany) | 26,000.00 | 11,264 AMD EPYC 7742 (64 cores, 2.25 GHz), 1.44 PB RAM | 3,500 kW | Scientific and commercial applications |
| Piz Daint | Swiss National Supercomputing Centre (CSCS) (Switzerland) | 21,230.00 | Cray XC50, Xeon E5-2690v3 12C 2.6 GHz, Aries interconnect, NVIDIA Tesla P100, Cray Inc. (361,760 cores) | 2,384 kW | Scientific and commercial applications |
| Titan | Oak Ridge National Laboratory (Tennessee, USA) | 17,590.00 | Cray XK7, 18,688 AMD Opteron 6274 CPUs (16 cores, 2.20 GHz) + 18,688 Nvidia Tesla K20 GPGPUs, 693.5 TB RAM | 8,209 kW | Physical calculations |
| Sequoia | Lawrence Livermore National Laboratory (California, USA) | 17,173.20 | IBM BlueGene/Q, 98,304 Power BQC processors (16 cores, 1.60 GHz), 1.6 PB RAM | 7,890 kW | Simulation of nuclear weapons tests |
| K computer | Advanced Institute for Computational Science (Japan) | 10,510.00 | 88,128 SPARC64 VIIIfx 8-core processors (2.00 GHz), 1,377 TB RAM | 12,660 kW | Chemical and physical calculations |
| Mira | Argonne National Laboratory (Illinois, USA) | 8,586.60 | IBM BlueGene/Q, 49,152 Power BQC processors (16 cores, 1.60 GHz) | 3,945 kW | Development of new energy sources, technologies and materials; bioinformatics |
| JUQUEEN | Research Center Jülich (Germany) | 5,008.90 | IBM BlueGene/Q, 28,672 Power BQC processors (16 cores, 1.60 GHz), 448 TB RAM | 2,301 kW | Materials science, theoretical chemistry, elementary particle physics, environment, astrophysics |
| Phase 1 - Cray XC30 | European Centre for Medium-Range Weather Forecasts (Reading, England) | 3,593.00 | 7,010 Intel E5-2697v2 "Ivy Bridge" (12 cores, 2.7 GHz) | | |
| SuperMUC IBM | Leibniz Supercomputing Centre (LRZ) (Garching near Munich, Germany) | 2,897.00 | 18,432 Xeon E5-2680 CPUs (8 cores, 2.7 GHz) + 820 Xeon E7-4870 CPUs (10 cores, 2.4 GHz), 340 TB RAM | 3,423 kW | Cosmology (formation of the universe), seismology and earthquake prediction, and much more |
| Stampede | Texas Advanced Computing Center (Texas, USA) | 5,168.10 | Xeon E5-2680 CPUs (8 cores, 2.7 GHz) + Xeon E7-4870 CPUs, 185 TB RAM | 4,510 kW | Chemical, physical, biological (e.g. protein structure analysis), geological (e.g. earthquake prediction) and medical calculations (e.g. cancer growth) |
| Tianhe-1A | National Supercomputer Center (Tianjin, China) | 2,266.00 | 14,336 Intel 6-core Xeon X5670 CPUs (2.93 GHz) + 7,168 Nvidia Tesla M2050 GPGPUs, 224 TB RAM | 4,040 kW | Chemical and physical calculations (e.g. petroleum studies and aircraft development) |
| Dawning Nebulae | National Supercomputing Center (Shenzhen, China) | 1,271.00 | Hybrid system of 55,680 Intel Xeon processors (2.66 GHz) + 64,960 Nvidia Tesla GPGPUs (1.15 GHz), 224 TB RAM | 2,580 kW | Meteorology, finance, and others |
| IBM Roadrunner | Los Alamos National Laboratory (New Mexico, USA) | 1,105.00 | 6,000 AMD dual-core processors (3.2 GHz), 13,000 IBM Cell processors (1.8 GHz), 103 TB RAM | 4,040 kW | Physical simulations (e.g. nuclear weapon simulations) |
| (unnamed) | Bielefeld University (Germany) | 529.70 | 208 Nvidia Tesla M2075 GPGPUs + 192 Nvidia GTX 580 GPUs + 152 dual quad-core Intel Xeon 5600 CPUs, 9.1 TB RAM | | Faculty of Physics: numerical simulations, physical calculations |
| SGI Altix | NASA (USA) | 487.00 | 51,200 4-core Xeon, 3 GHz, 274.5 TB RAM | 3,897 kW | Space exploration |
| BlueGene/L | Lawrence Livermore National Laboratory, Livermore (USA) | 478.20 | 212,992 PowerPC 440 processors, 700 MHz, 73,728 GB RAM | 924 kW | Physical simulations |
| Blue Gene Watson | IBM Thomas J. Watson Research Center (USA) | 91.29 | 40,960 PowerPC 440 processors, 10,240 GB RAM | 448 kW | IBM's research division, but also applications from science and business |
| ASC Purple | Lawrence Livermore National Laboratory, Livermore (USA) | 75.76 | 12,208 Power5 CPUs, 48,832 GB RAM | 7,500 kW | Physical simulations (e.g. nuclear weapon simulations) |
| MareNostrum | Universitat Politècnica de Catalunya (Spain) | 63.80 | 10,240 PowerPC 970MP 2.3 GHz, 20.4 TB RAM | 1,015 kW | Climate and genetic research, pharmacy |
| Columbia | NASA Ames Research Center (Silicon Valley, California, USA) | 51.87 | 10,160 Intel Itanium 2 processors (Madison core), 9 TB RAM | | Climate modeling, astrophysical simulations |

Selected current supercomputers (Germany)

| Name | Location | TFLOPS (peak) | Configuration | RAM (TB) | Power consumption | Purpose |
|---|---|---|---|---|---|---|
| Hawk | High Performance Computing Center Stuttgart (Germany) | 26,000.00 | 11,264 AMD EPYC 7742 (64 cores, 2.25 GHz), 1.44 PB RAM | 1,440 | 3,500 kW | Scientific and commercial applications |
| JUWELS | Research Center Jülich | 9,891.07 | 2,511 nodes with 4 dual Intel Xeon Platinum 8168 each (24 cores each, 2.70 GHz), 64 nodes with 6 dual Intel Xeon Gold 6148 each (20 cores each, 2.40 GHz) | 258 | 1,361 kW | |
| JUQUEEN | Research Center Jülich (Germany) | 5,900.00 | IBM BlueGene/Q, 28,672 Power BQC processors (16 cores, 1.60 GHz) | 448 | 2,301 kW | Materials science, theoretical chemistry, elementary particle physics, environment, astrophysics |
| SuperMUC IBM | Leibniz Supercomputing Centre (LRZ) (Garching near Munich, Germany) | 2,897.00 | 18,432 Xeon E5-2680 CPUs (8 cores, 2.7 GHz), 820 Xeon E7-4870 CPUs (10 cores, 2.4 GHz) | 340 | 3,423 kW | Cosmology (formation of the universe), seismology and earthquake prediction |
| HLRN-III (Cray XC40) | Zuse Institute Berlin; Regional Computing Center for Lower Saxony | 2,685.60 | 42,624 cores, Intel Xeon Haswell @ 2.5 GHz and Ivy Bridge @ 2.4 GHz | 222 | 500 to 1,000 kW | Physics, chemistry, environmental and marine research, engineering |
| HRSK-II | Center for Information Services and High Performance Computing, TU Dresden | 1,600.00 | 43,866 CPU cores, Intel Haswell EP CPUs (Xeon E5-2680v3), 216 Nvidia Tesla GPUs | 130 | | Scientific applications |
| HLRE-3 "Mistral" | German Climate Computing Center, Hamburg | 1,400.00 | 1,550 nodes with 2 Intel Haswell EP CPUs (Xeon E5-2680v3, 12 cores, 2.5 GHz), 1,750 nodes with 2 Intel Broadwell EP CPUs (Xeon E5-2695v4, 18 cores, 2.1 GHz), 100,000 cores, 54 PB Lustre hard disk system, 21 visualization nodes (each with 2 Nvidia Tesla K80 GPUs or 2 Nvidia GeForce GTX 9xx) | 120 | | Climate modeling |
| Cray XC40 | German Weather Service (Offenbach) | 1,100.00 | Cray Aries network; 1,952 Intel Xeon E5-2680v3 / E5-2695v4 CPUs | 122 | 407 kW | Numerical weather forecasting and climate simulations |
| Lichtenberg high-performance computer | Technische Universität Darmstadt | 951.34 | Phase 1: 704 nodes with 2 Intel Xeon (8 cores), 4 nodes with 8 Intel Xeon (8 cores), 70 nodes with 2 Intel Xeon; Phase 2: 596 nodes with 2 Intel Xeon (12 cores), 4 nodes with 4 Intel Xeon (15 cores), 32 nodes with 2 Intel Xeon | 76 | | Scientific applications |
| CARL and EDDY | Carl von Ossietzky University of Oldenburg | 457.20 | Lenovo NeXtScale nx360M5, 12,960 cores (Intel Xeon E5-2650v4 12C 2.2 GHz), InfiniBand FDR | 80 | 180 kW | Theoretical chemistry, wind energy research, theoretical physics, neuroscience and hearing research, marine research, biodiversity and computer science |
| Mogon | Johannes Gutenberg University Mainz | 283.90 | 33,792 Opteron 6272 | 84 | 467 kW | Natural sciences, physics, mathematics, biology, medicine |
| OCuLUS | Paderborn Center for Parallel Computing, Paderborn University | 200.00 | 614 nodes, dual Intel E5-2670 (9,856 cores), 64 GB RAM | 45 | | Engineering, natural sciences |
| HLRE 2 | German Climate Computing Center, Hamburg | 144.00 | 8,064 IBM Power6 dual-core CPUs, 6 PB disk | 20 | | Climate modeling |
| Complex MPI 2 | RWTH Aachen | 103.60 | 176 nodes with a total of 1,408 Intel Xeon 2.3 GHz 8-core processors | 22 | | Scientific applications |
| HPC-FF | Research Center Jülich | 101.00 | 2,160 Intel Core i7 (Nehalem-EP) 4-core, 2.93 GHz processors | 24 | | European fusion research |
| HLRB II | LRZ Garching | 56.52 | 9,728 CPUs 1.6 GHz Intel Itanium 2 (Montecito dual core) | 39 | | Natural sciences, astrophysics and materials research |
| ClusterVision HPC | TU Bergakademie Freiberg | 22.61 | 1,728 cores Intel Xeon X5670 (2.93 GHz) + 280 cores AMD Opteron 6276 (2.3 GHz) | 0.5 | | Engineering, quantum chemistry, fluid mechanics, geophysics |
| CHiC Cluster (IBM x3455) | Chemnitz University of Technology | 8.21 | 2,152 cores from 1,076 dual-core 64-bit AMD Opteron 2218 (2.6 GHz) | | | Modeling and numerical simulations |

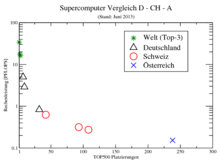

Selected current supercomputers (DACH excluding Germany)

The three fastest computers each from Switzerland and Austria, based on the TOP500 list of June 2017 (positions 3, 82, 265, 330, 346 and 385). The TOP500 list contains no system from Liechtenstein (as of June 2017).

| Name | Location | TFLOPS (peak) | Configuration | RAM (TB) | Power consumption | Purpose |
|---|---|---|---|---|---|---|
| Piz Daint (upgrade 2016/2017, as of June 2017) | Swiss National Supercomputing Centre (CSCS) (Switzerland) | 19,590.00 | Cray XC50, Xeon E5-2690v3 12C 2.6 GHz, Aries interconnect, NVIDIA Tesla P100, Cray Inc. (361,760 cores) | | 2,272 kW | |
| Piz Daint Multicore (as of June 2017) | Swiss National Supercomputing Centre (CSCS) (Switzerland) | 1,410.70 | Cray XC40, Xeon E5-2695v4 18C 2.1 GHz, Aries interconnect, Cray Inc. (44,928 cores) | | 519 kW | |
| EPFL Blue Brain IV (as of June 2017) | Swiss National Supercomputing Centre (CSCS) (Switzerland) | 715.60 | BlueGene/Q, Power BQC 16C 1.600 GHz, custom interconnect; IBM (65,536 cores) | | 329 kW | |
| VSC-3 (as of June 2017) | Vienna Scientific Cluster (Vienna, Austria) | 596.00 | Oil blade server, Intel Xeon E5-2650v2 8C 2.6 GHz, Intel TrueScale InfiniBand; ClusterVision (32,768 cores) | | 450 kW | |
| Cluster Platform DL360 (as of June 2017) | Hosting company (Austria) | 572.60 | Cluster Platform DL360, Xeon E5-2673v4 20C 2.3 GHz, 10G Ethernet; HPE (26,880 cores) | | 529 kW | |
| Cluster Platform DL360 (as of June 2017) | Hosting company (Austria) | 527.20 | Cluster Platform DL360, Xeon E5-2673v3 12C 2.4 GHz, 10G Ethernet; HPE (20,352 cores) | | 678 kW | |

The fastest computers of their time

The following table (as of June 2017) lists some of the fastest supercomputers of their time:

| Year | Supercomputer | Peak speed (until 1959 in operations per second, OPS; from 1960 in FLOPS) | Location |
|---|---|---|---|
| 1906 | Babbage Analytical Engine, Mill | 0.3 | R. W. Munro, Woodford Green, Essex, England |
| 1928 | IBM 301 | 1.7 | Various locations worldwide |
| 1931 | IBM Columbia Difference Tabulator | 2.5 | Columbia University |
| 1940 | Zuse Z2 | 3.0 | Berlin, Germany |
| 1941 | Zuse Z3 | 5.3 | Berlin, Germany |
| 1942 | Atanasoff-Berry Computer (ABC) | 30.0 | Iowa State University, Ames (Iowa), USA |
| | TRE Heath Robinson | 200.0 | Bletchley Park, Milton Keynes, England |
| | | 1,000.0 (corresponds to 1 kilo-OPS) | |
| 1943 | Flowers Colossus | 5,000.0 | Bletchley Park, Milton Keynes, England |
| 1946 | UPenn ENIAC (before the 1948+ modifications) | 50,000.0 | Aberdeen Proving Ground, Maryland, USA |
| 1954 | IBM NORC | 67,000.0 | U.S. Naval Proving Ground, Dahlgren, Virginia, USA |
| 1956 | MIT TX-0 | 83,000.0 | Massachusetts Institute of Technology, Lexington, Massachusetts, USA |
| 1958 | IBM SAGE | 400,000.0 | 25 US Air Force bases in the USA and one location in Canada (52 computers) |
| 1960 | UNIVAC LARC | 500,000.0 | Lawrence Livermore National Laboratory, California, USA |
| | | 1,000,000.0 (corresponds to 1 MFLOPS, 1 Mega-FLOPS) | |
| 1961 | IBM 7030 "Stretch" | 1,200,000.0 | Los Alamos National Laboratory, New Mexico, USA |
| 1964 | CDC 6600 | 3,000,000.0 | Lawrence Livermore National Laboratory, California, USA |
| 1969 | CDC 7600 | 36,000,000.0 | |
| 1974 | CDC STAR-100 | 100,000,000.0 | |
| 1975 | Burroughs ILLIAC IV | 150,000,000.0 | NASA Ames Research Center, California, USA |
| 1976 | Cray-1 | 250,000,000.0 | Los Alamos National Laboratory, New Mexico, USA (over 80 sold worldwide) |
| 1981 | CDC Cyber 205 | 400,000,000.0 | Various locations worldwide |
| 1983 | Cray X-MP/4 | 941,000,000.0 | Los Alamos National Laboratory; Lawrence Livermore National Laboratory; Battelle; Boeing |
| | | 1,000,000,000.0 (corresponds to 1 GFLOPS, 1 Giga-FLOPS) | |
| 1984 | M-13 | 2,400,000,000.0 | Scientific Research Institute of Computer Complexes, Moscow, USSR |
| 1985 | Cray-2/8 | 3,900,000,000.0 | Lawrence Livermore National Laboratory, California, USA |
| 1989 | ETA10-G/8 | 10,300,000,000.0 | Florida State University, Florida, USA |
| 1990 | NEC SX-3/44R | 23,200,000,000.0 | NEC Fuchu Plant, Fuchū, Japan |
| 1993 | Thinking Machines CM-5/1024 | 65,500,000,000.0 | Los Alamos National Laboratory; National Security Agency |
| | Fujitsu Numerical Wind Tunnel | 124,500,000,000.0 | National Aerospace Laboratory, Tokyo, Japan |
| | Intel Paragon XP/S 140 | 143,400,000,000.0 | Sandia National Laboratories, New Mexico, USA |
| 1994 | Fujitsu Numerical Wind Tunnel | 170,400,000,000.0 | National Aerospace Laboratory, Tokyo, Japan |
| 1996 | Hitachi SR2201/1024 | 220,400,000,000.0 | University of Tokyo, Japan |
| 1996 | Hitachi/Tsukuba CP-PACS/2048 | 368,200,000,000.0 | Center for Computational Physics, University of Tsukuba, Tsukuba, Japan |
| | | 1,000,000,000,000.0 (corresponds to 1 TFLOPS, 1 Tera-FLOPS) | |
| 1997 | Intel ASCI Red/9152 | 1,338,000,000,000.0 | Sandia National Laboratories, New Mexico, USA |
| 1999 | Intel ASCI Red/9632 | 2,379,600,000,000.0 | |
| 2000 | IBM ASCI White | 7,226,000,000,000.0 | Lawrence Livermore National Laboratory, California, USA |
| 2002 | NEC Earth Simulator | 35,860,000,000,000.0 | Earth Simulator Center, Yokohama, Japan |
| 2004 | SGI Project Columbia | 42,700,000,000,000.0 | Project Columbia, NASA Advanced Supercomputing Facility, USA |
| | IBM BlueGene/L | 70,720,000,000,000.0 | US Department of Energy / IBM, USA |
| 2005 | IBM BlueGene/L | 136,800,000,000,000.0 | US Department of Energy / US National Nuclear Security Administration, Lawrence Livermore National Laboratory, California, USA |
| | | 1,000,000,000,000,000.0 (corresponds to 1 PFLOPS, 1 Peta-FLOPS) | |
| 2008 | IBM Roadrunner | 1,105,000,000,000,000.0 | US Department of Energy / US National Nuclear Security Administration, Los Alamos National Laboratory |
| 2010 | Tianhe-1A | 2,507,000,000,000,000.0 | National Supercomputer Center in Tianjin, China |
| 2011 | K computer | 10,510,000,000,000,000.0 | Advanced Institute for Computational Science, Japan |
| 2012 | Sequoia | 16,324,750,000,000,000.0 | Lawrence Livermore National Laboratory, California, USA |
| 2012 | Titan | 17,590,000,000,000,000.0 | Oak Ridge National Laboratory, Tennessee, USA |
| 2013 | Tianhe-2 | 33,863,000,000,000,000.0 | National Supercomputer Center in Guangzhou, China |
| 2016 | Sunway TaihuLight | 93,000,000,000,000,000.0 | National Supercomputing Center, Wuxi, China |
| 2018 | Summit | 200,000,000,000,000,000.0 | Oak Ridge National Laboratory, Tennessee, USA |
| | | 1,000,000,000,000,000,000.0 (corresponds to 1 EFLOPS, 1 Exa-FLOPS) | |
| Future | Tianhe-3 | 1,000,000,000,000,000,000.0 | China, National Supercomputing Center; construction started in February 2017, prototype completion announced for early 2018 |
| | Frontier | 1,500,000,000,000,000,000.0 | USA, Oak Ridge National Laboratory (ORNL); completion announced for 2021 |
| | El Capitan | 2,000,000,000,000,000,000.0 | USA, DOE's Lawrence Livermore National Laboratory (LLNL); completion announced for 2023 |

Plotting the FLOPS of the fastest computer of each era against time yields an exponential curve, which appears roughly as a straight line on a logarithmic scale, as shown in the following graph.
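The straight line on a logarithmic scale can be quantified with a least-squares fit of log10(FLOPS) against the year. The sketch below uses a hand-picked subset of the entries in the table above (an assumption; other selections give somewhat different rates) and derives the implied growth factor per year and the doubling time. The names `RECORDS` and `fit_log_linear` are hypothetical.

```python
import math

# (year, peak FLOPS) pairs taken from the table above; the selection is arbitrary
RECORDS = [
    (1964, 3.0e6),     # CDC 6600
    (1976, 2.5e8),     # Cray-1
    (1985, 3.9e9),     # Cray-2/8
    (1997, 1.338e12),  # Intel ASCI Red/9152
    (2008, 1.105e15),  # IBM Roadrunner
    (2018, 2.0e17),    # Summit
]


def fit_log_linear(points):
    """Ordinary least-squares fit of log10(FLOPS) = a + b * year; returns (a, b)."""
    xs = [year for year, _ in points]
    ys = [math.log10(flops) for _, flops in points]
    n = len(points)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    b = sxy / sxx
    return mean_y - b * mean_x, b


a, b = fit_log_linear(RECORDS)
doubling_years = math.log10(2) / b  # years for performance to grow by a factor of 2
print(f"growth: x{10 ** b:.2f} per year, doubling every {doubling_years:.2f} years")
```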

Future development of supercomputers

United States

With an executive order, US President Barack Obama instructed the US federal authorities to advance the development of an exaFLOPS supercomputer. Intel's Aurora supercomputer was expected to deliver 180 PetaFLOPS in 2018. The DOE planned to install the first exascale supercomputer in 2021 and to put it into operation nine months later.

China

China planned to develop a supercomputer in the exaFLOPS range by 2020. According to a "China Daily" report of February 20, 2017, the prototype of Tianhe-3 was to be ready by early 2018; it was presented in May 2018.

Europe

In 2011, several EU projects started with the aim of developing software for exascale supercomputers, among them CRESTA (Collaborative Research into Exascale Systemware, Tools and Applications), DEEP (Dynamical ExaScale Entry Platform) and Mont-Blanc. MaX (Materials at the Exascale) is another important project. The SERT project started in March 2015 with the participation of the University of Manchester and the STFC in Cheshire.

See also: European high-performance computing.

Japan

In Japan, RIKEN began planning an exascale system for 2020 in 2013, with a power consumption of less than 30 MW. In 2014, Fujitsu was commissioned to develop the successor to the K computer. In 2015, Fujitsu announced at the International Supercomputing Conference that this supercomputer would use processors of the ARMv8 architecture.

Other achievements

Milestones

- 1997: Deep Blue 2 (a high-performance computer from IBM) becomes the first computer to beat a reigning world chess champion in an official match.

- 2002: Yasumasa Kanada computes pi to 1.24 trillion digits using a Hitachi SR8000 at the University of Tokyo.

- 2007: Intel's Core 2 Quad Q6600 desktop processor reaches approx. 38.40 GFLOPS, roughly the supercomputer level of the early 1990s.

- 2014: NVIDIA's Tesla K80 GPU accelerator reaches around 8.7 TeraFLOPS (single-precision peak), the supercomputer level of the early 2000s. It thus exceeds the fastest supercomputer of 2000, the IBM ASCI White, which offered 7.226 TeraFLOPS at the time.
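Figures like these are theoretical peak values, computed as cores × clock rate × floating-point operations per cycle, not LINPACK results. The sketch below reproduces both milestone figures under hardware assumptions that are not stated in the text: 4 double-precision operations per cycle and core for the Q6600, and, for the K80, 4,992 CUDA cores at an 875 MHz boost clock with one fused multiply-add (2 single-precision operations) per cycle. The function name is hypothetical.

```python
def peak_gflops(cores: int, clock_ghz: float, flops_per_cycle: int) -> float:
    """Theoretical peak in GFLOPS = cores x clock rate (GHz) x FLOPs per cycle per core."""
    return cores * clock_ghz * flops_per_cycle


# Assumption: Core 2 Quad Q6600, 4 cores at 2.4 GHz, 4 double-precision FLOPs
# per cycle (one 2-wide SSE add plus one 2-wide SSE multiply).
q6600 = peak_gflops(cores=4, clock_ghz=2.4, flops_per_cycle=4)

# Assumption: Tesla K80, 4,992 CUDA cores at 0.875 GHz boost, 2 single-precision
# FLOPs per cycle (one fused multiply-add).
k80 = peak_gflops(cores=4992, clock_ghz=0.875, flops_per_cycle=2)

print(f"Q6600: {q6600:.1f} GFLOPS")        # Q6600: 38.4 GFLOPS
print(f"K80:   {k80 / 1000:.2f} TFLOPS")   # K80:   8.74 TFLOPS
```

Sustained LINPACK results, such as the ASCI White figure, are lower than such peaks, and single-precision and double-precision rates are not directly comparable, so the comparison in the milestone is only approximate.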

Comparisons

- The more than 500,000 active computers of the Berkeley Open Infrastructure for Network Computing (BOINC) provided a peak computing power of approx. 26 PetaFLOPS as of January 2020; the figure fluctuates from day to day.

- The more than 380,000 active computers of the Folding@home project provided a computing power of over 1 ExaFLOPS as of March 2020.

- The Earth Simulator could do all the calculations of all computers worldwide from 1960 to 1970 in about 35 minutes.

- If each of the approximately 7 billion people in the world completed one calculation per second on a calculator without interruption, humanity would have to work for 538 years to do what Tianhe-2 can do in one hour.

- With its performance, the K computer could "count" the meters of a light year in about a second.

- Hans Moravec estimated the computing power of the human brain at 100 teraflops, Raymond Kurzweil at 10,000 teraflops. Supercomputers have already clearly exceeded these figures. For comparison, a graphics card costing 800 euros (May 2016) offers about 10 teraflops (see technological singularity).
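Two of these comparisons can be checked with back-of-the-envelope arithmetic. The sketch assumes one operation per person per second, a 365.25-day year, 1 light year ≈ 9.4607 × 10^15 m, one floating-point operation per counting step, and the LINPACK figures of Tianhe-2 (33.86 PFLOPS) and the K computer (10.51 PFLOPS) from the tables above. With exactly 7 billion people the result is about 552 years; the stated 538 years corresponds to a slightly larger population, so both figures are approximations. The function and constant names are hypothetical.

```python
LIGHT_YEAR_M = 9.4607e15                # metres in one light year (rounded)
SECONDS_PER_YEAR = 365.25 * 24 * 3600   # assumed year length

tianhe2_flops = 33.863e15     # Tianhe-2 LINPACK figure from the tables above
k_computer_flops = 10.51e15   # K computer LINPACK figure from the tables above


def years_for_humanity(machine_flops, seconds=3600.0, people=7e9, ops_per_person=1.0):
    """Years all people need, each doing `ops_per_person` operations per second,
    to match what the machine computes in `seconds` (default: one hour)."""
    work = machine_flops * seconds
    return work / (people * ops_per_person * SECONDS_PER_YEAR)


def seconds_to_count(n, flops):
    """Seconds needed to count to n if each counting step is one operation."""
    return n / flops


print(round(years_for_humanity(tianhe2_flops)))                   # 552
print(round(seconds_to_count(LIGHT_YEAR_M, k_computer_flops), 2))  # 0.9
```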

Correlators in comparison

Correlators are special devices in radio interferometry whose performance can also be measured in FLOPS. They do not count as supercomputers because they are special-purpose computers that cannot solve every type of problem.

- The correlator of the Atacama Large Millimeter/submillimeter Array (ALMA) performs 17 PetaFLOPS (as of December 2012).

- The computing power of the WIDAR correlator of the Expanded Very Large Array (EVLA) is given as 40 PetaFLOPS (as of June 2010).

- The planned correlator of the Square Kilometre Array (SKA) (construction period 2016 to 2023) is expected to perform 4 ExaFLOPS (4,000 PetaFLOPS) (as of June 2010).

Literature

- Werner Gans: Supercomputing: Records; Innovation; Perspective. Ed.: Christoph Pöppe (= Spektrum der Wissenschaft / Dossier. No. 2). Spektrum-der-Wissenschaft-Verl.-Ges., Heidelberg 2007, ISBN 978-3-938639-52-8.

- Shlomi Dolev: Optical supercomputing . Springer, Berlin 2008, ISBN 3-540-85672-2 .

- William J. Kaufmann et al.: Supercomputing and the transformation of science. Scientific American Library, New York 1993, ISBN 0-7167-5038-4.

- Paul B. Schneck: Supercomputer architecture . Kluwer, Boston 1987, ISBN 0-89838-238-6 .

- Aad J. van der Steen: Evaluating supercomputers - strategies for exploiting, evaluating and benchmarking computers with advanced architectures . Chapman and Hall, London 1990, ISBN 0-412-37860-4 .

Web links

- TOP500 list of most powerful supercomputers (English)

- TOP500 list of the most energy-efficient supercomputers (English)

- The International Conference for High Performance Computing and Communications (English)

- The International Supercomputing Conference (English)

- Newsletter on Supercomputing and big data (English and German)

References

- Mario Golling, Michael Kretzschmar: Development of an architecture for accounting in dynamic virtual organizations. ISBN 978-3-7357-8767-5.

- Martin Kleppmann: Designing data-intensive applications: Concepts for reliable, scalable and maintainable systems. O'Reilly, ISBN 978-3-96010-183-3.

- Using the example of the SuperMUC: supercomputers and export controls. Information on international scientific collaborations. (PDF; 293 kB) BMBF, accessed June 14, 2018.

- List Statistics

- China defends top position. orf.at, June 19, 2017, accessed June 19, 2017.

- The Green 500 List (Memento of the original from August 26, 2016 in the Internet Archive)

- Lenovo is the largest supplier of TOP500 computers. Business Wire, June 26, 2018.

- USA again have the most powerful supercomputer. orf.at, June 24, 2018, accessed June 24, 2018.

- Jack Dongarra: Trip Report to China and Tianhe-2 Supercomputer, June 3, 2013 (PDF; 8.2 MB)

- http://www.hlrs.de/systems/hpe-apollo-9000-hawk/

- https://www.uni-stuttgart.de/en/university/news/press-release/Hawk-Supercomputer-Inaugurated/

- ASC Sequoia. asc.llnl.gov

- JUQUEEN. Forschungszentrum Jülich

- Supercomputers. ECMWF, 2013, accessed June 14, 2018.

- Supercomputers: USA regain top position. Heise online, June 18, 2012.

- SuperMUC Petascale System. lrz.de

- Technical data

- sysGen project reference (PDF; 291 kB). Bielefeld University, Faculty of Physics.

- JUWELS - Configuration. Forschungszentrum Jülich, accessed June 28, 2018 (English).

- LRZ: SuperMUC No. 4 on the TOP500 list

- HLRN

- Andreas Stiller: Supercomputer at the TU Dresden officially goes into operation. In: Heise online. March 13, 2015, accessed June 14, 2018.

- Andreas Stiller: New petaflops computer at the TU Dresden. In: c't. May 29, 2015, accessed June 14, 2018.

- HLRE-3 "Mistral". DKRZ, accessed June 14, 2018.

- Computer history at the DKRZ. DKRZ, accessed June 14, 2018.

- Lichtenberg high-performance computer. HHLR, accessed August 4, 2016.

- HPC systems at the University of Oldenburg

- Oldenburg university computers are among the fastest in the world

- OCuLUS

- ClusterVision HPC (Memento from February 23, 2015 in the Internet Archive)

- CHiC (Memento of the original from February 9, 2007 in the Internet Archive)

- IBM 301 Accounting Machine

- The Columbia Difference tabulator - 1931

- Andreas Stiller: Supercomputer: China overtakes the USA. In: Heise online. June 20, 2016, accessed June 14, 2018.

- ORNL Launches Summit supercomputer. Oak Ridge National Laboratory, June 8, 2018, accessed June 14, 2018.

- China has started building a new supercomputer. orf.at, February 20, 2017, accessed February 20, 2017.

- Marc Sauter: Frontier with 1.5 exaflops: AMD builds the world's fastest supercomputers. In: golem.de. May 7, 2019, accessed July 16, 2019.

- HPE and AMD power complex scientific discovery in world's fastest supercomputer for US Department of Energy's (DOE) National Nuclear Security Administration (NNSA). March 4, 2020, accessed March 6, 2020.

- The White House: CREATING A NATIONAL STRATEGIC COMPUTING. Accessed January 2017.

- golem.de: How the Exaflop mark should be cracked. Accessed January 2017.

- Aurora supercomputer. top500.org, 2016, accessed January 13, 2017.

- First US Exascale Supercomputer Now On Track for 2021. top500.org, December 10, 2016, accessed January 13, 2017.

- China Research: Exascale Supercomputer. Accessed January 2017.

- http://german.xinhuanet.com/2018-05/18/c_137187901.htm

- Europe Gears Up for the Exascale Software Challenge with the 8.3M Euro CRESTA project. Project consortium, November 14, 2011, accessed December 10, 2011.

- Booster for next-generation supercomputers: Kick-off for the European exascale project DEEP. FZ Jülich, November 15, 2011, accessed January 13, 2017.

- Supercomputer with turbocharger. FZ Jülich, November 5, 2016, accessed January 13, 2017.

- Mont-Blanc project sets Exascale aims. Project consortium, October 31, 2011, accessed December 10, 2011.

- MaX website. Project consortium, November 25, 2016, accessed November 25, 2016.

- Developing Simulation Software to Combat Humanity's Biggest Issues. Scientific Computing, February 25, 2015, accessed April 8, 2015.

- Patrick Thibodeau: Why the US may lose the race to exascale. In: Computerworld. November 22, 2013.

- RIKEN selects contractor for basic design of post-K supercomputer. In: www.aics.riken.jp. October 1, 2014.

- Fujitsu picks 64-bit ARM for Japan's monster 1,000-PFLOPS super. In: www.theregister.co.uk. June 20, 2016.

- intel.com

- Michael Günsch: Tesla K80: Dual Kepler with up to 8.7 TFLOPS for supercomputers. In: ComputerBase. November 17, 2014, accessed June 14, 2018.

- Host overview on boincstats.com

- Overview of BOINC services on boincstats.com

- Folding@home stats report. Accessed March 26, 2020 (English).

- Folding@home: Thanks to our AMAZING community, we've crossed the exaFLOP barrier! That's over a 1,000,000,000,000,000,000 operations per second, making us ~10x faster than the IBM Summit! pic.twitter.com/mPMnb4xdH3. In: @foldingathome. March 25, 2020, accessed March 26, 2020.

- heise.de: Nvidia GeForce GTX 1080 graphics card: Monster performance for almost 800 euros: 8.87 TFlops.

- Powerful Supercomputer Makes ALMA a Telescope

- The world's highest-altitude supercomputer compares astronomy data. Heise online.

- Cross-Correlators & New Correlators - Implementation & choice of architecture. (PDF; 9.4 MB) National Radio Astronomy Observatory, p. 27

- The Expanded Very Large Array Project - The 'WIDAR' Correlator. (PDF; 13.2 MB) National Radio Astronomy Observatory, p. 10