Unicode

Unicode (pronunciation: American English [ˈjuːnikoʊd], British English [ˈjuːnikəʊd]; German [ˈjuːnikoːt]) is an international standard that aims to define, in the long term, a digital code for every meaningful character or text element of all known writing cultures and character systems. The aim is to eliminate the use of different and incompatible encodings in different countries or cultures. Unicode is continually being extended with characters from further writing systems.

ISO 10646 is the designation of the Unicode character set used by ISO and has practically the same meaning; it is referred to there as the Universal Coded Character Set (UCS).

History

Conventional computer character sets contain only a limited repertoire of characters. In Western character encodings this limit is usually 128 (7-bit) code positions, as in the well-known ASCII standard, or 256 (8-bit) positions, as in ISO 8859-1 (also known as Latin-1) or EBCDIC. After subtracting the control characters, 95 elements for ASCII and 191 elements for the 8-bit ISO character sets can be represented as letters and special characters. These character encodings allow only a few languages to be represented in the same text at the same time, unless one resorts to using different fonts with different character sets within one text. This considerably hindered international data exchange in the 1980s and 1990s.

ISO 2022 was a first attempt to represent several languages with a single character encoding. The encoding uses escape sequences to switch between different character sets (e.g. between Latin-1 and Latin-2). However, the system only caught on in East Asia.

Joseph D. Becker of Xerox wrote the first draft for a universal character set in 1988. According to the original plans, this 16-bit character set was to encode only the characters of modern languages:

“Unicode gives higher priority to ensuring utility for the future than to preserving past antiquities. Unicode aims in the first instance at the characters published in modern text (e.g. in the union of all newspapers and magazines printed in the world in 1988), whose number is undoubtedly far below 2^14 = 16,384. Beyond those modern-use characters, all others may be defined to be obsolete or rare; these are better candidates for private-use registration than for congesting the public list of generally-useful Unicodes.”

In October 1991, after several years of development, version 1.0.0 of the Unicode standard was published, which at that time encoded only the European, Middle Eastern and Indic scripts. Only eight months later, after Han unification had been completed, did version 1.0.1 appear, which encoded East Asian characters for the first time. With the publication of Unicode 2.0 in July 1996, the standard was expanded from the original 65,536 to the current 1,114,112 code points, from U+0000 to U+10FFFF.

Versions

Publishing a new version sometimes takes an extended period. At the time of release, often only the character tables and individual parts of the specification are ready, while the final main specification is published some time later.

Content of the standard

The Unicode Consortium provides several documents in support of Unicode. In addition to the actual character set, these include further documents that are not strictly necessary but are helpful for interpreting the Unicode standard.

Structure

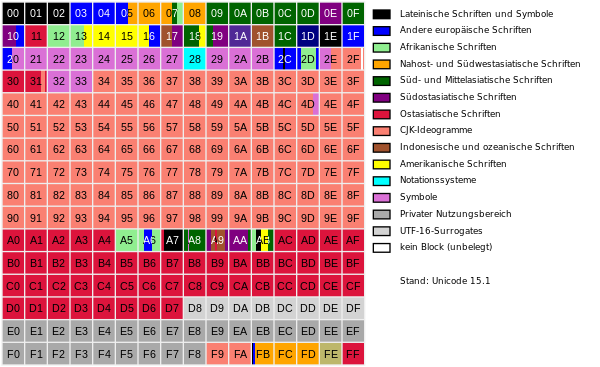

In contrast to earlier character encodings, which mostly encoded only one particular writing system, the aim of Unicode is to encode all writing systems and characters in use. The code space is divided into 17 planes, each comprising 2^16 = 65,536 code points. Six of these planes are already in use; the rest are reserved for later use:

- The Basic Multilingual Plane (BMP, also referred to as Plane 0) mainly contains writing systems that are currently in use, punctuation marks and symbols, control characters and surrogates, as well as a Private Use Area (PUA). The plane is highly fragmented and largely occupied, so that there is no room left for new writing systems to be encoded. Some programs still cannot access planes other than the BMP, or can do so only to a limited extent.

- The Supplementary Multilingual Plane (SMP, also referred to as Plane 1) was introduced with Unicode 3.1. It mainly contains historical writing systems, but also larger collections of rarely used characters, such as domino and mahjong tiles, and emoji. Writing systems that are still in use but no longer fit into the BMP are now also encoded in the SMP.

- The Supplementary Ideographic Plane (SIP, also referred to as Plane 2), likewise introduced with Unicode 3.1, contains only rarely used CJK characters, including the chữ nôm formerly used in Vietnam. Should this plane not suffice, Plane 3 is reserved for further CJK characters.

- The Supplementary Special-purpose Plane (SSP, also referred to as Plane 14) contains a few control characters for language tagging.

- The last two planes, Supplementary Private Use Area-A and -B (also Plane 15 and Plane 16), are available as private use areas (PUA). They are sometimes also referred to as Private Use Planes (PUP).

Within these planes, characters that belong together are grouped into blocks. A Unicode block usually covers one writing system, but for historical reasons a certain degree of fragmentation has set in: characters were often added later and placed in other blocks as supplements.

Code points and characters

Each elementary character encoded in the Unicode standard is assigned a code point. Code points are usually written in hexadecimal (with at least four digits, i.e. with leading zeros if necessary) and preceded by the prefix U+, e.g. U+00DF for ß.
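The notation and the division into planes can be illustrated with a short Python sketch. The helper names `u_plus` and `plane` are chosen here for illustration only and are not part of any standard API.

```python
def u_plus(ch: str) -> str:
    """Format one character in U+XXXX notation (at least four hex digits)."""
    return f"U+{ord(ch):04X}"


def plane(ch: str) -> int:
    """Each plane holds 2**16 code points, so the plane index is the
    code point shifted right by 16 bits."""
    return ord(ch) >> 16


for ch in ["ß", "\U0001D11E"]:   # sharp s and the musical G clef
    print(u_plus(ch), "plane", plane(ch))
```

A BMP character yields plane 0, while a character such as U+1D11E falls into plane 1 and needs five hexadecimal digits.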

The entire range described by the Unicode standard comprises 1,114,112 code points (U+0000…U+10FFFF; 17 planes of 2^16 = 65,536 code points each). However, the standard excludes some ranges from use for character encoding:

- 2,048 code points in the range U+D800…U+DFFF are used as parts of surrogate pairs in the UTF-16 encoding scheme to represent code points above the BMP (i.e. in the range U+10000…U+10FFFF) and are therefore not available as code points for individual characters.

- 66 code points, 32 in the range U+FDD0…U+FDEF and 2 at the end of each of the 17 planes (i.e. U+FFFE, U+FFFF, U+1FFFE, U+1FFFF, …, U+10FFFE, U+10FFFF), are reserved for process-internal use and are not intended for use as individual characters.

This means that a total of 1,111,998 code points are available for character encoding. However, the number of code points actually assigned is significantly lower; Tables D-2 and D-3 in Appendix D of the Unicode Standard provide an overview of how many code points are assigned in the various versions and what they are used for.
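The arithmetic behind this figure can be checked with a few lines of Python. This is only a sketch; the predicate names `is_surrogate` and `is_noncharacter` are illustrative and simply restate the ranges given above.

```python
def is_surrogate(cp: int) -> bool:
    # U+D800..U+DFFF are reserved for UTF-16 surrogate pairs
    return 0xD800 <= cp <= 0xDFFF


def is_noncharacter(cp: int) -> bool:
    # 32 code points in U+FDD0..U+FDEF, plus the last two code points
    # (xxFFFE and xxFFFF) of each of the 17 planes
    return 0xFDD0 <= cp <= 0xFDEF or (cp & 0xFFFE) == 0xFFFE


total = 17 * 2**16                      # 17 planes of 65,536 code points
surrogates = sum(is_surrogate(cp) for cp in range(total))
noncharacters = sum(is_noncharacter(cp) for cp in range(total))
available = total - surrogates - noncharacters

print(total, surrogates, noncharacters, available)
# 1114112 2048 66 1111998
```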

PUA (Private Use Area)

Special ranges are reserved for private use, i.e. code points in these ranges are never assigned to characters standardized in Unicode. They can be used for privately defined characters, which must be agreed individually between the producers and users of the texts that contain them. These ranges are:

- in the BMP: U+E000…U+F8FF

- in other planes: U+F0000…U+FFFFD and U+100000…U+10FFFD
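Whether a character falls into one of these ranges can be tested directly. The following Python sketch hard-codes the three ranges listed above (the names `PRIVATE_USE_RANGES` and `is_private_use` are illustrative) and compares the result with the general category `Co` ("other, private use") reported by the standard library's `unicodedata` module.

```python
import unicodedata

# The three private use ranges listed above
PRIVATE_USE_RANGES = [
    (0xE000, 0xF8FF),       # BMP
    (0xF0000, 0xFFFFD),     # plane 15
    (0x100000, 0x10FFFD),   # plane 16
]


def is_private_use(ch: str) -> bool:
    cp = ord(ch)
    return any(lo <= cp <= hi for lo, hi in PRIVATE_USE_RANGES)


pua_size = sum(hi - lo + 1 for lo, hi in PRIVATE_USE_RANGES)

print(is_private_use("\uE000"), unicodedata.category("\uE000"))
print(is_private_use("A"), unicodedata.category("A"))
print(pua_size)
```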

For various applications, special conventions have been developed that specify character assignments for the PUA range of the BMP. On the one hand, precomposed characters consisting of a base character and diacritical marks are often found here, since many (especially older) software applications cannot be relied on to display such characters correctly according to the Unicode rules when they are entered as a sequence of base character and diacritical marks. On the other hand, there are characters that do not meet the criteria for inclusion in Unicode, or whose proposal for inclusion was unsuccessful or was not pursued for other reasons. In many fonts there is a manufacturer logo at position U+F000 (logos are generally not encoded in Unicode).

Sources of PUA character assignments include:

- MUFI (Medieval Unicode Font Initiative)

- SIL PUA for special letters in various minority languages worldwide

- Languagegeek for indigenous languages of North America

- ConScript for invented writing systems like Klingon

Coding

In addition to the actual character set, a number of character encodings have been defined that implement the Unicode character set and give full access to all Unicode characters. They are called Unicode Transformation Formats (UTF for short). The most widespread are UTF-16, which has established itself as the internal character representation of some operating systems (Windows, macOS) and software development frameworks (Java, .NET), and UTF-8, which plays a major role in operating systems (GNU/Linux, Unix) as well as in various Internet services (e-mail, WWW). UTF-EBCDIC is an encoding based on the proprietary EBCDIC format of IBM mainframe computers. Punycode is used to encode domain names containing non-ASCII characters. The Standard Compression Scheme for Unicode is an encoding format that compresses the text at the same time. Other formats for encoding Unicode characters include CESU-8 and GB 18030.
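How the same code points turn into different byte sequences can be seen with Python's built-in codecs. This is a small sketch; the sample strings are arbitrary choices, and the big-endian codec variants are used so that no byte order mark is emitted.

```python
text = "\u00DF\U0001D11E"             # ß followed by the musical G clef

utf8 = text.encode("utf-8")            # 2 bytes for ß, 4 bytes for the clef
utf16 = text.encode("utf-16-be")       # 1 code unit for ß, a surrogate pair for the clef
utf32 = text.encode("utf-32-be")       # always 4 bytes per code point

print(utf8.hex())    # c39ff09d849e
print(utf16.hex())   # 00dfd834dd1e
print(utf32.hex())   # 000000df0001d11e

# Punycode maps a non-ASCII label to plain ASCII
print("bücher".encode("punycode"))     # b'bcher-kva'
```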

Normalization

Many characters contained in the Unicode standard are so-called compatibility characters, which from a Unicode point of view can already be represented with other characters or character sequences encoded in Unicode, e.g. the German umlauts, which in theory can be represented as a sequence of the base letter and a combining diaeresis (two horizontal dots). With Unicode normalization, such characters are automatically replaced by the sequences provided for in Unicode. This makes processing Unicode text considerably easier, since only one representation stands for a given character rather than several different ones.
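A brief Python sketch using the standard library's `unicodedata.normalize` shows the effect. The normalization forms NFD, NFC and NFKC are those defined by the Unicode standard; the sample characters are arbitrary.

```python
import unicodedata

precomposed = "\u00E4"      # ä as a single code point
decomposed = "a\u0308"      # a followed by COMBINING DIAERESIS

# The two strings look the same but compare unequal code point by code point
print(precomposed == decomposed)                                  # False

# NFD decomposes, NFC composes; after normalizing, both spellings agree
print(unicodedata.normalize("NFD", precomposed) == decomposed)    # True
print(unicodedata.normalize("NFC", decomposed) == precomposed)    # True

# The compatibility forms also replace compatibility characters,
# e.g. the ligature U+FB01 becomes the two letters "fi"
print(unicodedata.normalize("NFKC", "\uFB01"))                    # fi
```

Normalizing both sides to the same form before comparing or indexing strings avoids treating equivalent spellings as different.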

Sorting

For many writing systems, the characters in Unicode are not encoded in an order that corresponds to the sort order familiar to users of that writing system. Therefore, when sorting, e.g. in a database application, the order of the code points usually cannot be used. In addition, sorting in many writing systems is governed by complex, context-dependent rules. The Unicode Collation Algorithm defines how character strings can be sorted within a given writing system or across writing systems.

In many cases, however, the order actually to be used depends on other factors, such as the language: in German, "ä" is sorted like "ae" or like "a" depending on the application, whereas in Swedish it is sorted after "z" and "å". The Unicode Collation Algorithm should therefore be used when the sort order is not determined by more specific requirements.
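The difference between these orderings can be sketched in Python. Note that this is not an implementation of the Unicode Collation Algorithm: the three key functions below are hand-written toy rules covering only the letters mentioned above, and all names are illustrative.

```python
words = ["zebra", "äpfel", "åker", "apfel", "azur"]

# 1. Plain code point order: ä (U+00E4) and å (U+00E5) sort after z
by_code_point = sorted(words)

# 2. German dictionary style: treat ä like a (original string breaks ties)
def german_dictionary_key(word: str):
    return (word.replace("ä", "a"), word)

# 3. German phone book style: treat ä like ae
def german_phonebook_key(word: str):
    return (word.replace("ä", "ae"), word)

# 4. Swedish style: å, ä, ö are separate letters after z, in that order
SWEDISH_ALPHABET = "abcdefghijklmnopqrstuvwxyzåäö"

def swedish_key(word: str):
    return [SWEDISH_ALPHABET.index(ch) for ch in word]

print(by_code_point)                               # ['apfel', 'azur', 'zebra', 'äpfel', 'åker']
print(sorted(words, key=german_dictionary_key))    # ['apfel', 'äpfel', 'azur', 'zebra', 'åker']
print(sorted(words, key=german_phonebook_key))     # ['äpfel', 'apfel', 'azur', 'zebra', 'åker']
print(sorted(words, key=swedish_key))              # ['apfel', 'azur', 'zebra', 'åker', 'äpfel']
```

Real applications would normally rely on a collation library rather than such hand-made keys.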

Standardization institutions

The non-profit Unicode Consortium was founded in 1991 and is responsible for the Unicode industry standard. The international standard ISO 10646 is published by ISO (International Organization for Standardization) in cooperation with IEC. The two institutions work closely together. Since 1993, Unicode and ISO 10646 have been practically identical in terms of character encoding. While ISO 10646 defines only the actual character encoding, Unicode includes a comprehensive set of rules that, among other things, unambiguously specifies further properties important for practical use, such as sorting order, reading direction and rules for combining characters.

For some time now, the code space of ISO 10646 has been exactly the same as that of Unicode, since the code range there was also limited to 17 planes, which can be represented with 21 bits.

Coding criteria

Compared to other standards, Unicode has the special feature that characters, once encoded, are never removed again, in order to ensure the longevity of digital data. If the standardization of a character later turns out to be a mistake, its use is at most discouraged. The inclusion of a character in the standard therefore requires extremely careful examination, which can take years.

Unicode encodes only "abstract characters", not the graphical representations (glyphs) of these characters, which can vary enormously from font to font; for the Latin alphabet, for example, in the form of Antiqua, Fraktur, Gaelic type or the various handwriting styles. For glyph variants whose standardization is shown to be useful and necessary, 256 "Variation Selectors" are reserved as a precaution, which can be placed after the actual code if necessary. In many writing systems, characters can also take different forms or form ligatures depending on their position. Apart from a few exceptions (e.g. Arabic), such variants are likewise not included in the Unicode standard; instead, a so-called smart font technology such as OpenType is required, which can substitute the forms appropriately.

Conversely, identical glyphs are encoded multiple times if they have different meanings, for example the glyphs А, В, Е, K, М, Н, О, Р, Т and Х, which occur, sometimes with different meanings, in the Latin as well as the Greek and Cyrillic alphabets.
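That such look-alikes really are distinct characters can be seen by querying their names, here sketched with Python's `unicodedata` module for the capital letter that looks like "A" in all three scripts.

```python
import unicodedata

lookalikes = ["\u0041", "\u0391", "\u0410"]   # Latin, Greek and Cyrillic

for ch in lookalikes:
    print(f"U+{ord(ch):04X}", unicodedata.name(ch))
# U+0041 LATIN CAPITAL LETTER A
# U+0391 GREEK CAPITAL LETTER ALPHA
# U+0410 CYRILLIC CAPITAL LETTER A

# Although the glyphs are usually indistinguishable, the strings differ
print(len(set(lookalikes)))   # 3
```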

In borderline cases there is fierce debate over whether one is dealing with glyph variants or with genuinely different characters (graphemes) that merit their own encoding. For example, quite a few experts hold that the Phoenician alphabet can be regarded as glyph variants of the Hebrew alphabet, since the entire range of Phoenician characters has unambiguous equivalents there and the two languages are also very closely related. Ultimately, however, the view prevailed that these are separate character systems, called "scripts" in Unicode terminology.

The situation is different with CJK (Chinese, Japanese and Korean): here the forms of many characters with the same meaning have diverged over the past centuries. Nevertheless, the language-specific glyphs share the same codes in Unicode (with the exception of a few characters kept for compatibility reasons). In practice, language-specific fonts are predominantly used here, which means that the fonts together take up a lot of space. The unified encoding of the CJK characters (Han unification) was one of the most important and extensive preparatory tasks in the development of Unicode. It is particularly controversial in Japan.

When the foundations of Unicode were laid, it had to be taken into account that a large number of different encodings were already in use. Unicode-based systems were to be able to handle conventionally encoded data with little effort. For this reason, the widespread ISO 8859-1 encoding (Latin-1) was retained for the lower 256 characters, as were the layouts of various national standards, e.g. TIS-620 for Thai (almost identical to ISO 8859-11) or ISCII for Indic scripts, which were merely shifted to higher ranges in their original order.

Every character of the relevant legacy encodings was adopted into the standard, even if it does not meet the criteria normally applied. A large proportion of these are characters composed of two or more characters, such as letters with diacritical marks. Incidentally, a large part of the software still cannot properly compose characters with diacritics. The exact definition of equivalent encodings is part of the extensive set of rules belonging to Unicode.

In addition, there are many Unicode characters that have no glyph assigned and are nevertheless treated as "characters". Besides control characters such as the tab character (U+0009) and the line feed (U+000A), 19 different characters are explicitly defined as spaces, including some without width, which are used among other things as word separators for languages such as Thai that are written without spaces between words. For bidirectional text, e.g. Arabic mixed with Latin, seven formatting characters are encoded. There are also other invisible characters that are to be evaluated only under certain circumstances, such as the Combining Grapheme Joiner.
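The general category that Unicode assigns to such invisible characters can be inspected with Python's `unicodedata` module. The selection of sample characters below is an arbitrary illustration, not a complete list.

```python
import unicodedata

samples = ["\u0009", "\u000A", "\u0020", "\u200B", "\u034F"]

for ch in samples:
    # Control characters have no formal name, so a placeholder is supplied
    name = unicodedata.name(ch, "<control>")
    print(f"U+{ord(ch):04X}", unicodedata.category(ch), name)
# U+0009 Cc <control>
# U+000A Cc <control>
# U+0020 Zs SPACE
# U+200B Cf ZERO WIDTH SPACE
# U+034F Mn COMBINING GRAPHEME JOINER
```

Here `Cc` denotes control characters, `Zs` space separators, `Cf` format characters and `Mn` non-spacing marks.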

Use on computer systems

Code point input methods

Direct entry at operating system level

Microsoft Windows

Under Windows (from Windows 2000), in some programs (more precisely, in RichEdit fields) the code can be entered in decimal as Alt+<decimal Unicode> on the numeric keypad (with Num Lock activated). Note, however, that character numbers smaller than 1000 must be padded with a leading zero (e.g. Alt+0234 for decimal code point 234, ê). This is necessary because the input method Alt+<one- to three-digit decimal character number without leading zero>, which is still available in Windows, was already used in MS-DOS times to enter the characters of code page 850 (in earlier MS-DOS versions, also code page 437).

Another input method requires that, in the registry under the key HKEY_CURRENT_USER\Control Panel\Input Method, an entry (value) of type REG_SZ ("string") named EnableHexNumpad exists and that the value data 1 is assigned to it. After editing the registry, users of Windows 8.1, Windows 8, Windows 7 and Vista must log out of their Windows user account and log back in; with earlier Windows versions, the computer must be restarted for the registry changes to take effect. Unicode characters can then be entered as follows: first press and hold the (left) Alt key, then press and release the plus key on the numeric keypad, and then enter the hexadecimal code of the character, using the numeric keypad for the digits. Finally, release the Alt key.

Although this input method works in principle in every input field of every Windows program, shortcut keys for menu functions can prevent the entry of hexadecimal code points: for example, when trying to enter the letter Ø (U+00D8), the combination Alt+D in many programs opens the File menu ("Datei" in German-language programs) instead.

A further disadvantage is that Windows here expects the UTF-16 encoding (used internally in Windows) rather than the Unicode code point itself, and therefore only allows four-digit code values to be entered. For characters that lie above the BMP and have code points with five or six hexadecimal digits, so-called surrogate pairs must be used instead, in which a five- or six-digit code point is mapped to two four-digit substitute code points. For example, the treble clef 𝄞 (U+1D11E) must be entered as the hexadecimal UTF-16 value pair D834 and DD1E; direct entry of five- or six-digit code points is therefore not possible here.
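The mapping from a code point above the BMP to its surrogate pair follows the UTF-16 rules and can be sketched in Python as follows. The function name `to_surrogate_pair` is illustrative only.

```python
def to_surrogate_pair(cp: int) -> tuple[int, int]:
    """Split a code point above the BMP into a UTF-16 high/low surrogate pair."""
    if not 0x10000 <= cp <= 0x10FFFF:
        raise ValueError("only code points U+10000..U+10FFFF need surrogates")
    offset = cp - 0x10000              # 20-bit value
    high = 0xD800 + (offset >> 10)     # upper 10 bits
    low = 0xDC00 + (offset & 0x3FF)    # lower 10 bits
    return high, low


high, low = to_surrogate_pair(0x1D11E)
print(f"{high:04X} {low:04X}")         # D834 DD1E

# Cross-check against Python's own UTF-16 encoder
print("\U0001D11E".encode("utf-16-be").hex().upper())   # D834DD1E
```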

Apple macOS

In Apple macOS, the entry of Unicode characters must first be activated as a special case in the "Keyboard" system settings. To do this, add "Unicode Hex Input" in the "Input Sources" tab using the plus symbol; it is located under the heading "Other". A Unicode character can then be entered by holding down the ⌥Option key and typing its four-digit hex code; if the hex code has fewer than four digits, leading zeros must be entered. If the hex code has five digits, it cannot be entered directly via the keyboard and the character must be selected in the "Character Viewer" dialog instead. While Unicode Hex Input is active, no German keyboard layout (e.g. for umlauts) is available, so the user has to switch between the two keyboard modes. The current keyboard layout can be displayed in the menu bar via an additional option.

Direct input in special software

Microsoft Office

Under Microsoft Office (from Office XP), Unicode characters can also be entered in hexadecimal by typing <Unicode> or U+<Unicode> in the document and then pressing the key combination Alt+C or, in dialog boxes, Alt+X. This key combination can also be used to display the code of the character before the cursor. An alternative, which also works in older versions, is to open a table of Unicode characters via "Insert" → "Special Characters", select the desired character with the cursor and insert it into the text. The program also makes it possible to define macros for frequently needed characters, which can then be invoked with a key combination.

Qt and GTK+

GTK+, Qt and all programs and environments based on them (such as the GNOME desktop environment) support input using the combination Ctrl+Shift or, in newer versions, Ctrl+U or Ctrl+Shift+u. After the keys are pressed, an underlined small u appears. The code point can then be entered in hexadecimal form; it is also underlined so that it is clear what belongs to it. After pressing the space or Enter key, the corresponding character appears. This functionality is not supported in the KDE desktop environment.

Vim

In the text editor Vim, Unicode characters can be entered with Ctrl+v, followed by the u key and the code point in hexadecimal form.

Selection via character tables

The program charmap.exe, called Character Map, has been included in Windows since Windows NT 4.0. It allows Unicode characters to be inserted via a graphical user interface and also offers an input field for the hexadecimal code.

In macOS , a system-wide character palette is also available under Insert → Special Characters.

The free programs gucharmap (for Windows and Linux / Unix ) and kcharselect (for Linux / UNIX) display the Unicode character set on the screen and provide additional information on the individual characters.

Code point information in documents

HTML and XML support Unicode through numeric character references, which represent the Unicode character regardless of the document's declared character set. The notation is &#0000; for decimal or &#x0000; for hexadecimal notation, where 0000 stands for the Unicode number of the character. For certain characters, named character references (named entities) are also defined, e.g. &auml; represents ä, but this applies only to HTML; XML and XHTML, which is derived from it, define named notations only for those characters that would otherwise be interpreted as parts of the markup language, i.e. < as &lt;, > as &gt;, & as &amp; and " as &quot;.
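Both notations can be produced and decoded with Python's standard `html` module, as the following sketch shows. The helper `numeric_reference` is an illustrative name, not a library function.

```python
import html


def numeric_reference(ch: str, hexadecimal: bool = False) -> str:
    """Build a decimal or hexadecimal numeric character reference."""
    cp = ord(ch)
    return f"&#x{cp:X};" if hexadecimal else f"&#{cp};"


print(numeric_reference("ä"))                     # &#228;
print(numeric_reference("ä", hexadecimal=True))   # &#xE4;

# All three spellings decode to the same character
for ref in ["&#228;", "&#xE4;", "&auml;"]:
    print(html.unescape(ref))                     # ä

# Markup-significant characters are replaced by named references
print(html.escape('<a href="x">&</a>'))
# &lt;a href=&quot;x&quot;&gt;&amp;&lt;/a&gt;
```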

Criticism

Unicode is criticized mainly by scholars and in East Asian countries. One point of criticism is Han unification; from an East Asian point of view, this procedure merges characters from various unrelated languages. Among other things, critics object that ancient texts cannot be reproduced faithfully in Unicode because of this unification of similar CJK characters. For this reason, numerous alternatives to Unicode have been developed in Japan, such as the Mojikyō standard.

The encoding of the Thai script has been criticized in part because, unlike all other writing systems in Unicode, it is based not on logical but on visual order, which among other things makes sorting Thai words considerably more difficult. The Unicode encoding is based on the Thai standard TIS-620, which also uses visual order. Conversely, the encoding of the other Indic scripts is sometimes described as "too complicated", especially by representatives of the Tamil script. The model of separate consonant and vowel characters, which Unicode adopted from the Indian standard ISCII, is rejected by those who prefer separate code points for all possible consonant-vowel combinations. The government of the People's Republic of China made a similar proposal to encode the Tibetan script as sequences of syllables rather than as individual consonants and vowels.

There have also been attempts by companies to place symbols in Unicode to represent their products.

Fonts

Whether the corresponding Unicode character actually appears on the screen depends on whether the font used contains a glyph for the desired character (i.e. a graphic for the desired character number). Often, e.g. under Windows, if the font used does not contain a character, a character from another font is substituted where possible.

The Unicode/ISO code space has by now reached a size (more than 100,000 characters) that can no longer be fully accommodated in a single font file. Today's most popular font file formats, TrueType and OpenType, can contain a maximum of 65,536 glyphs. Unicode/ISO conformity of a font therefore does not mean that the entire character set is included, but only that the characters it contains are encoded in accordance with the standard. The publication "decodeunicode", which presents all characters, names a total of 66 fonts from which its character tables are composed.

Choice of Unicode fonts

- Arial Unicode MS (shipped since Microsoft Office XP; supports only up to Unicode 2.0; contains 50,377 glyphs (38,917 characters) in version 1.01)

- Bitstream Cyberbit (free for non-commercial use. 29,934 characters in version 2.0 beta.)

- Bitstream Vera (free, sans serif version of Cyberbit)

- Cardo (free for non-commercial use, 2,882 characters in version 0.098, 2004)

- ClearlyU (free, the pixel font family includes a set of 12pt to 100dpi proportional BDF fonts with many required characters from Unicode. 9,538 characters in version 1.9.)

- Code2000, Code2001 and Code2002: three free fonts that provide characters for planes 0, 1 and 2 respectively. These fonts have not been developed further since 2008 and are therefore largely out of date. An exception is Code2000 for the Saurashtra, Kayah Li, Rejang and Cham blocks. There are also numerous alternatives to Code2000 and Code2001; for Code2002, e.g. "HanaMinA" with "HanaMinB", "MingLiU-ExtB", "SimSun-ExtB" and "Sun-ExtB".

- DejaVu (free; "DejaVu Sans" contains 3,471 characters and 2,558 kerning pairs in version 2.6)

- Doulos SIL (free; contains the IPA; 3,083 characters in version 4.014)

- Everson Mono (shareware; contains most of the non-CJK letters; 9,632 characters in Macromedia Fontographer v7.0.0, December 12, 2014)

- Free UCS outline fonts (free; "FreeSerif" comprises 3,914 characters in version 1.52; MES-1 compliant)

- Gentium Plus (further development of Gentium; version 1.510 from August 2012 contains 5,586 glyphs for 2,520 characters; download page at SIL International)

- HanaMinA and HanaMinB together cover plane 2 (U+2XXXX): HanaMinA covers the block CJK Compatibility Ideographs Supplement, HanaMinB the blocks CJK Unified Ideographs Extension B, CJK Unified Ideographs Extension C and CJK Unified Ideographs Extension D.

- Helvetica World (licensable from Linotype )

- Junicode (free; includes many ancient characters designed for historians. 1,435 characters in version 0.6.3.)

- Linux Libertine (free; covers Western character sets (Latin, Cyrillic, Greek, Hebrew), including archaic special characters, ligatures, and medieval, proportional and Roman numerals; contains more than 2,000 characters in version 2.6.0, 2007)

- Lucida Grande (Unicode font included in macOS ; contains 1,266 characters)

- Lucida Sans Unicode (included in more recent Microsoft Windows versions; only supports ISO-8859-x letters. 1,776 characters in version 2.00.)

- New Gulim (shipped with Microsoft Office 2000. Most of CJK letters. 49,284 characters in version 3.10.)

- Noto is a font family developed by Google and Adobe and offered under the free Apache license. Although it is an ongoing project, most of the modern and historical scripts encoded in Unicode are already covered. (Download page at google.com)

- Sun-ExtA covers large parts of plane 0, including 20,924 of the 20,941 characters in the CJK Unified Ideographs block and all 6,582 characters in the CJK Unified Ideographs Extension A block.

- Sun-ExtB largely covers plane 2 (U+2XXXX): the blocks CJK Compatibility Ideographs Supplement, CJK Unified Ideographs Extension B and CJK Unified Ideographs Extension C completely, and 59 of the 222 characters of the block CJK Unified Ideographs Extension D. It also covers the Tai Xuan Jing Symbols block.

- TITUS Cyberbit Basic (free; updated version of Cyberbit. 9,779 characters in version 3.0, 2000.)

- Y.OzFontN (free. Contains many Japanese CJK letters, contains few SMP characters. 59,678 characters in version 9.13.)

Substitute fonts

A substitute font is used for characters for which no font with a correct glyph is available.

Examples include the following fonts:

- Unicode BMP Fallback SIL, a substitute font created by SIL International, which represents every character of plane 0 (Basic Multilingual Plane) defined in version 6.1 as a square with its hex code inscribed. It is available at sil.org.

- LastResort, designed by Michael Everson, is a substitute font included in Mac OS 8.5 and higher that uses the first glyph of a block for all characters in that block. It can be downloaded free of charge from unicode.org.

Literature

- Johannes Bergerhausen, Siri Poarangan: decodeunicode: The characters of the world . Hermann Schmidt, Mainz 2011, ISBN 978-3-87439-813-8 (All 109,242 Unicode characters in one book.).

- Julie D. Allen: The Unicode Standard, version 6.0 . The Unicode Consortium. The Unicode Consortium, Mountain View 2011, ISBN 978-1-936213-01-6 ( online version ).

- Richard Gillam: Unicode Demystified: a practical programmer's guide to the encoding standard . Addison-Wesley, Boston 2003, ISBN 0-201-70052-2 .

Web links

- Official website of the Unicode Consortium (English)

- The universal code: Unicode . SELFHTML

- Imperia Unicode and Multi-Language Howto. ( Memento of October 28, 2014 in the Internet Archive ) - Generally understandable, German-language introduction to Unicode

- UTF-8 and Unicode FAQ for Unix / Linux by Markus Kuhn (English)

- UniSearcher - Searching for Unicodes

- Shapecatcher graphic Unicode character search (English)

- Determine the character name and the code position by entering the character

- Unicode - The Movie All 109,242 Unicode characters in a movie

- Unicode Font Viewer (Freeware)

- All Unicode characters, emojis, and fonts in Windows 10

- Detailed listing of Unicode input methods for Windows (English)

- detailed blog article on the minimal understanding of Unicode (English)
